Intellics.ai

@IntellicsAI

Information security, applied machine learning


Cutlass 4.0 Python DSL @__tensorcore__ Fully python! Performance parity! Join these two sessions at @NVIDIAGTC

Introducing The AI CUDA Engineer: An agentic AI system that automates the production of highly optimized CUDA kernels. sakana.ai/ai-cuda-engine… The AI CUDA Engineer can produce highly optimized CUDA kernels, reaching 10-100x speedup over common machine learning operations in…

1/ Google Research unveils new paper: "Titans: Learning to Memorize at Test Time" It introduces human-like memory structures to overcome the limits of Transformers, with one "SURPRISING" feature. Here's why this is huge for AI. 🧵👇

The @NVIDIA Project DIGITS personal AI supercomputer will enable developers to prototype, fine-tune and inference some of our most advanced Llama models locally.

"By making @AIatMeta Llama models open source, we're committed to democratizing access to cutting-edge AI technology. With Project DIGITS, developers can harness the power of Llama locally, unlocking new possibilities for innovation and collaboration." -- @Ahmad_Al_Dahle, VP,…

A few technical insights on the new Llama vision models we’re releasing today 🦙🧵

Well, this at least made my day. I think it’s a good demonstration of generative #LLM limitations 🥲 reddit.com/r/ChatGPT/comm…

After a few more evals and writing, the proper announcement: I'm releasing JaColBERT, a ColBERT-based model for 🇯🇵 Document Retrieval/RAG, trained exclusively on 🇯🇵 Data, and reaching very strong performance! (Report: ben.clavie.eu/JColBERT_v1.pdf, arXiv soon!) huggingface.co/bclavie/JaColB…

In AI, the ratio of attention on hypothetical, future, forms of harm to actual, current, realized forms of harm seems out of whack. Many of the hypothetical forms of harm, like AI "taking over", are based on highly questionable hypotheses about what technology that does not…

New blog post: Multimodality and Large Multimodal Models (LMMs) Being able to work with data of different modalities -- e.g. text, images, videos, audio, etc. -- is essential for AI to operate in the real world. This post covers multimodal systems in general, including Large…

We believe that AI models benefit from an open approach, both in terms of innovation and safety. Releasing models like Code Llama means the entire community can evaluate their capabilities, identify issues & fix vulnerabilities. github.com/facebookresear…

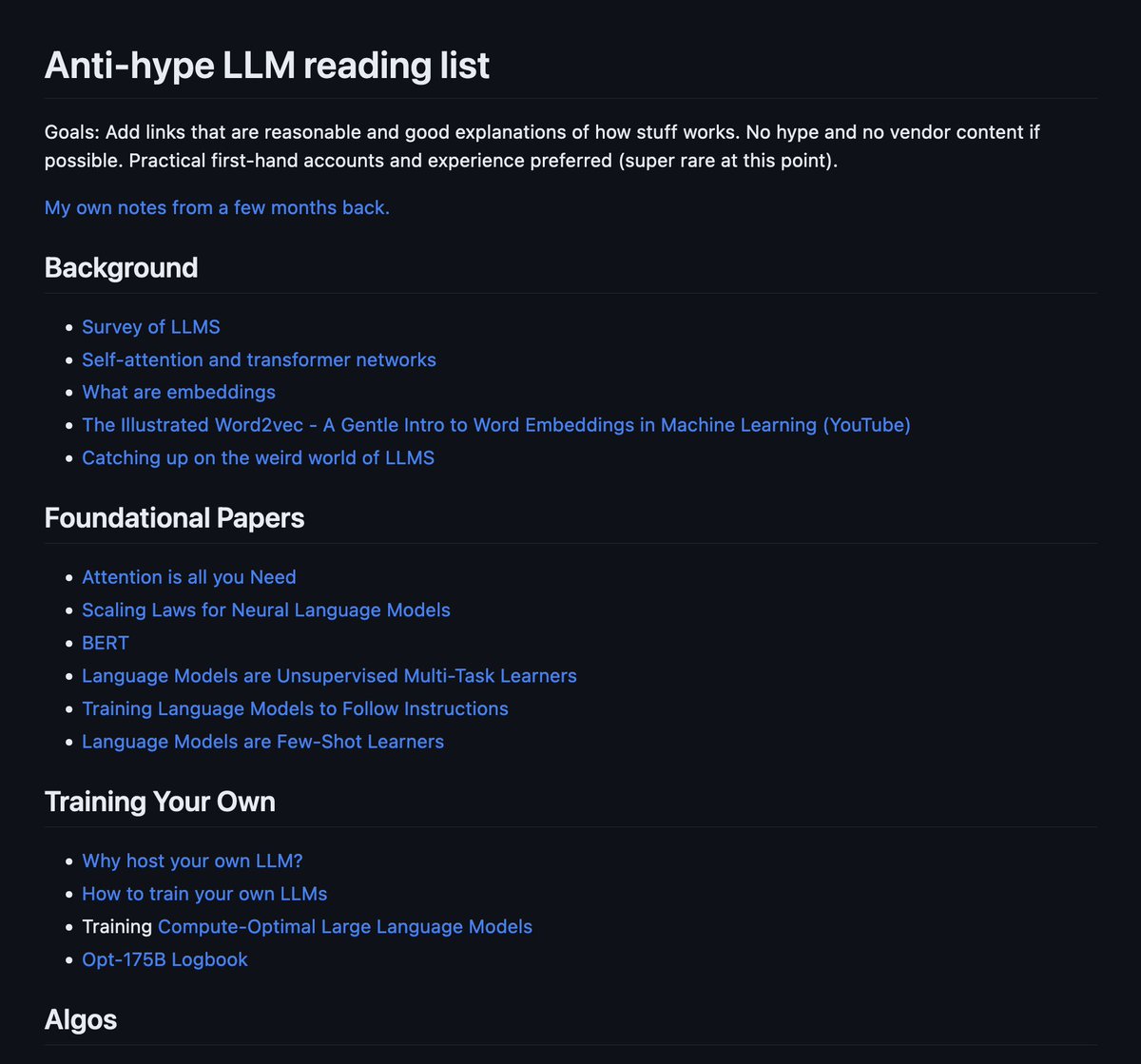

Anti-hype LLM reading list. Pretty good list. gist.github.com/veekaybee/be37…

In the interest of open science and sharing our research, we've published a paper outlining the work on our recently released SeamlessM4T all-in-one multilingual & multimodal translation model. Read the full paper ➡️ bit.ly/44uzanK

You can try Code Llama now in the Code Llama playground @huggingface space — it's also available in the Hugging Face ecosystem, starting with transformers version 4.33.

Code Llama with @huggingface🤗 Yesterday, @MetaAI released Code Llama, a family of open-access code LLMs! Today, we release the integration in the Hugging Face ecosystem🔥 Models: 👉 huggingface.co/codellama blog post: 👉 hf.co/blog/codellama Blog post covers how to use it!

We evaluated Code Llama against existing solutions on both HumanEval & MBPP. - It performed better than open-source, code-specific LLMs & Llama 2. - Code Llama 34B scored the highest vs other SOTA open solutions on MBPP — on par w/ ChatGPT. More info ➡️ bit.ly/45JiPwJ

What makes CLIP work? The contrast with negatives via softmax? The more negatives, the better -> large batch-size? We'll answer "no" to both in our ICCV oral🤓 By introducing SigLIP, a simpler CLIP that also works better and is more scalable, we can study the extremes. Hop in🧶
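To make the contrast in the post above concrete, here is a minimal pure-Python sketch of the two objectives: a CLIP-style softmax loss that normalizes each pair against the rest of the batch, and a SigLIP-style sigmoid loss that scores every image-text pair independently. This follows my reading of the published pseudocode and is not the authors' code; the function names, the temperature `t`, and the bias `b` values are illustrative assumptions.

```python
import math

def log_sigmoid(x):
    # Numerically stable log(sigmoid(x)).
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def logits_matrix(img, txt, t, b=0.0):
    # Scaled (and optionally shifted) similarity for every image-text pair.
    return [[t * dot(x, y) + b for y in txt] for x in img]

def softmax_contrastive_loss(img, txt, t):
    # CLIP-style: cross-entropy over rows (image->text) and columns
    # (text->image), averaged. Each term depends on the whole batch.
    z = logits_matrix(img, txt, t)
    n = len(z)

    def mean_cross_entropy(rows):
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)
            log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
            total += log_sum_exp - row[i]
        return total / n

    columns = [list(c) for c in zip(*z)]
    return 0.5 * (mean_cross_entropy(z) + mean_cross_entropy(columns))

def sigmoid_pairwise_loss(img, txt, t, b):
    # SigLIP-style: every pair is an independent binary decision
    # (label +1 on the diagonal, -1 elsewhere); no batch-wide normalization.
    z = logits_matrix(img, txt, t, b)
    n = len(z)
    total = 0.0
    for i in range(n):
        for j in range(n):
            label = 1.0 if i == j else -1.0
            total -= log_sigmoid(label * z[i][j])
    return total / n

# Toy batch of two perfectly aligned, unit-norm embeddings (made-up data).
images = [[1.0, 0.0], [0.0, 1.0]]
texts = [[1.0, 0.0], [0.0, 1.0]]
aligned = sigmoid_pairwise_loss(images, texts, t=10.0, b=-5.0)
swapped = sigmoid_pairwise_loss(images, texts[::-1], t=10.0, b=-5.0)
print(aligned < swapped)
```

Because the sigmoid loss is a plain sum over pairs, it needs no normalization across the batch, which is the property the post credits for the simpler and more scalable setup.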

[New release] Stability AI Japan has released the highest-performing Japanese language models, "Japanese StableLM Base Alpha 7B" and "Japanese StableLM Instruct Alpha 7B"! For technical and other details, please see this blog post. 👉ja.stability.ai/blog/japanese-……

No More GIL! The Python team has officially accepted the proposal. Congrats @colesbury on his multi-year brilliant effort to remove the GIL, and a heartfelt thanks to the Python Steering Council and Core team for a thoughtful plan to make this a reality. discuss.python.org/t/a-steering-c…

This is huge: Llama-v2 is open source, with a license that authorizes commercial use! This is going to change the landscape of the LLM market. Llama-v2 is available on Microsoft Azure and will be available on AWS, Hugging Face and other providers. Pretrained and fine-tuned…

We believe an open approach is the right one for the development of today's AI models. Today, we’re releasing Llama 2, the next generation of Meta’s open source Large Language Model, available for free for research & commercial use. Details ➡️ bit.ly/3Dh9hNp

Very cool experiment by @chillgates_ Distributed MPI inference using llama.cpp with 6 Raspberry Pis - each one with 8GB RAM "sees" 1/6 of the entire 65B model. Inference starts around ~1:10 Follow the progress here: github.com/ggerganov/llam…

Yeah. I have ChatGPT at home. Not a silly 7b model. A full-on 65B model that runs on my pi cluster, watch how the model gets loaded across the cluster with mmap and does round-robin inferencing 🫡 (10 seconds/token) (sped up 16x)
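A rough back-of-the-envelope sketch of why a 65B model can fit across six 8GB boards, under stated assumptions. The posts above only say that each Pi "sees" 1/6 of the model; the 80-layer count, the 4-bit weight format, and the contiguous layer split below are my assumptions for illustration, and the actual llama.cpp MPI partitioning may differ.

```python
def partition_layers(n_layers, n_workers):
    """Split layer indices 0..n_layers-1 into contiguous, near-equal slices."""
    base, extra = divmod(n_layers, n_workers)
    slices, start = [], 0
    for rank in range(n_workers):
        # The first `extra` workers take one additional layer.
        size = base + (1 if rank < extra else 0)
        slices.append(range(start, start + size))
        start += size
    return slices

def per_worker_gib(n_params, bits_per_weight, n_workers):
    """Approximate weight memory per worker in GiB, ignoring activations and KV cache."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / n_workers / 2**30

# Assumed values: 80 transformer layers, 4-bit weights, 6 workers.
layer_slices = partition_layers(n_layers=80, n_workers=6)
for rank, layers in enumerate(layer_slices):
    print(f"worker {rank}: layers {layers.start}..{layers.stop - 1}")

print(f"~{per_worker_gib(65e9, 4, 6):.1f} GiB of weights per worker")
```

With these assumptions each board holds roughly 5 GiB of weights, which leaves headroom within 8GB. In a layer-wise split like this one, a token's activations have to visit every worker in sequence, which is consistent with the slow per-token latency reported above.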
