Andrew Saxe

@SaxeLab

Prof at @GatsbyUCL and @SWC_Neuro, trying to figure out how we learn. Bluesky: @SaxeLab Mastodon: @[email protected]

📢 Job alert We are looking for a Postdoctoral Fellow to work with @ArthurGretton on creating statistically efficient causal and interaction models with the aim of elucidating cellular interactions. ⏰Deadline 27-Aug-2025 ℹ️ ucl.ac.uk/work-at-ucl/se…

🎓Thrilled to share I’ve officially defended my PhD!🥳 At @GatsbyUCL, my research explored how prior knowledge shapes neural representations. I’m deeply grateful to my mentors, @SaxeLab and Caswell Barry, my incredible collaborators, and everyone who supported me! Stay tuned!

If you’re working on symmetry and geometry in neural representations, submit your work to NeurReps and join the community in San Diego! 🤩 Deadline August 22nd.

Are you studying how structure shapes computation in the brain and in AI systems? 🧠 Come share your work in San Diego at NeurReps 2025! There is one month left until the submission deadline on August 22: neurreps.org/call-for-papers

If you can see it, you can feel it! Thrilled to share our new @NatureComms paper on how mice generalize spatial rules between vision & touch, led by brilliant co-first authors @giulio_matt & @GtnMaelle. More details in this thread 🧵 (1/7) doi.org/10.1038/s41467…

🥳 Congratulations to Rodrigo Carrasco-Davison on passing his PhD viva with minor corrections! 🎉 📜 Principles of Optimal Learning Control in Biological and Artificial Agents.

Come chat about this at the poster @icmlconf, 11:00-13:30 on Wednesday in the West Exhibition Hall #W-902!

How does in-context learning emerge in attention models during gradient descent training? Sharing our new Spotlight paper @icmlconf: Training Dynamics of In-Context Learning in Linear Attention arxiv.org/abs/2501.16265 Led by Yedi Zhang with @Aaditya6284 and Peter Latham

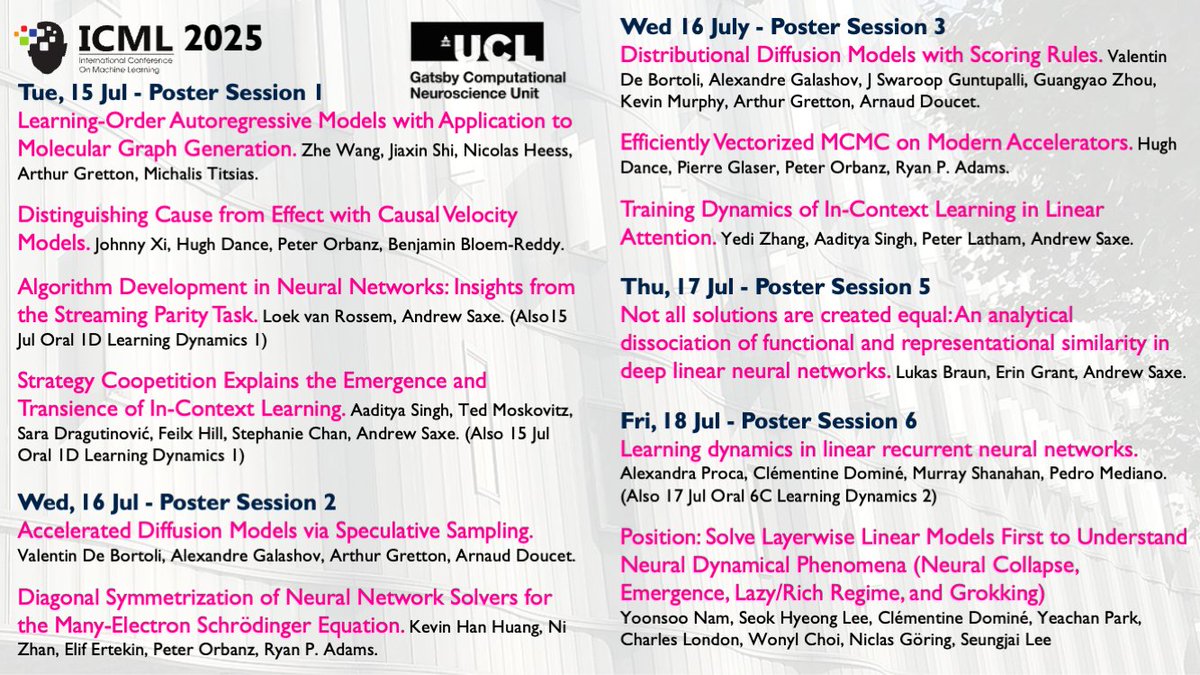

👋 Attending #ICML2025 next week? Don't forget to check out work involving our researchers!

Excited to present this work in Vancouver at #ICML2025 today 😀 Come by to hear about why in-context learning emerges and disappears! Talk: 10:30-10:45am, West Ballroom C. Poster: 11am-1:30pm, East Exhibition Hall A-B #E-3409.

Transformers employ different strategies through training to minimize loss, but how do these tradeoff and why? Excited to share our newest work, where we show remarkably rich competitive and cooperative interactions (termed "coopetition") as a transformer learns. Read on 🔎⏬

How do task dynamics impact learning in networks with internal dynamics? Excited to share our ICML Oral paper on learning dynamics in linear RNNs! with @ClementineDomi6 @mpshanahan @PedroMediano openreview.net/forum?id=KGOcr…
