Upesh

@Upesh_AI

Data Scientist @FICO_corp AI Enthusiast B. Tech in Mathematics & Computing @IITGuwahati Dakshanite Navodayan #Letsconnect

You might like

Optimizers in Deep Learning, a thread🧵 First of all let's talk about why we need optimizer if we have SGD(stochastic gradient descent), GD(gradient descent), mini-batch SGD

A simple trick cuts your LLM costs by 50%! Just stop using JSON and use this instead: TOON (Token-Oriented Object Notation) slashes your LLM token usage in half while keeping data perfectly readable. Here's why it works: TOON's sweet spot: uniform arrays with consistent…

Introducing the File Search Tool in the Gemini API, our hosted RAG solution with free storage and free query time embeddings 💾 We are super excited about this new approach and think it will dramatically simplify the path to context aware AI systems, more details in 🧵

You are in an AI engineer interview at Google. The interviewer asks: "Our data is spread across several sources (Gmail, Drive, etc.) How would you build a unified query engine over it?" You: "I'll embed everything in a vector DB and do RAG." Interview over! Here's what you…

Quick Question to folks who do model finetuning One of the trickiest parts of fine-tuning LLMs, in my experience, is retaining the original capabilities of the post-trained model. Let’s take an example say you want to fine-tune a VLM for OCR, layout detection, or any other…

Do this: 1. Open AWS and create an account. 2. Go to EC2, spin up an instance, generate a key pair, and SSH into it from your local system. Just play around install Nginx, deploy a Node app, break things, fix them. 3. Decide to launch something? Go to Security Groups open…

Don’t overthink it. • Learn EC2 → compute • Learn S3 → storage • Learn RDS → database • Learn IAM → security • Learn VPC → networking • Learn Lambda → automation • Learn CloudWatch → monitoring • Learn Route 53 → DNS & domains You don’t need every AWS service.…

This November, history changes. An NVIDIA H100 GPU—100 times more powerful than any GPU ever flown in space—launches to orbit. It will run Google's Gemma—the open-source version of Gemini. In space. For the first time. First AI training in orbit. First model fine-tuning in…

Today, we’re announcing a major breakthrough that marks a significant step forward in the world of quantum computing. For the first time in history, our teams at @GoogleQuantumAI demonstrated that a quantum computer can successfully run a verifiable algorithm, 13,000x faster than…

The security vulnerability we found in Perplexity’s Comet browser this summer is not an isolated issue. Indirect prompt injections are a systemic problem facing Comet and other AI-powered browsers. Today we’re publishing details on more security vulnerabilities we uncovered.

I still can’t believe this thing is taking our jobs.

The term “AGI” is currently a vague, moving goalpost. To ground the discussion, we propose a comprehensive, testable definition of AGI. Using it, we can quantify progress: GPT-4 (2023) was 27% of the way to AGI. GPT-5 (2025) is 58%. Here’s how we define and measure it: 🧵

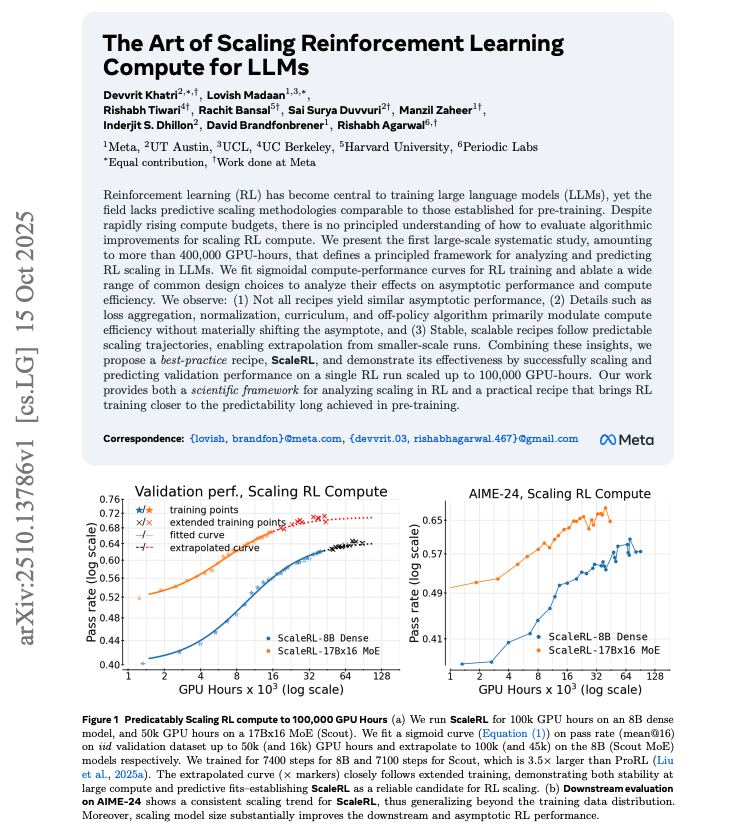

Banger paper from Meta and collaborators. This paper is one of the best deep dives yet on how reinforcement learning (RL) actually scales for LLMs. The team ran over 400,000 GPU hours of experiments to find a predictable scaling pattern and a stable recipe (ScaleRL) that…

Google officially starts selling TPUs to external customers and competes directly with Nvidia now

Broadcom's 5th customer ($10B) isn't Apple or XAI It's Anthropic. They won't design a new chip. They will be buying TPUs from Broadcom. Expect Anthropic to announce a funding round from Google soon.

nanochat d32, i.e. the depth 32 version that I specced for $1000, up from $100 has finished training after ~33 hours, and looks good. All the metrics go up quite a bit across pretraining, SFT and RL. CORE score of 0.31 is now well above GPT-2 at ~0.26. GSM8K went ~8% -> ~20%,…

How does a vector database work? It's a question I get asked constantly. So, let’s refresh. At its core, a vector database retrieves data objects using vector search. But let's break down what's actually happening under the hood. 𝗧𝗵𝗲 𝗙𝗼𝘂𝗻𝗱𝗮𝘁𝗶𝗼𝗻: 𝗩𝗲𝗰𝘁𝗼𝗿…

Research Scientist interview at Google. Interviewer: "You need to quantize a model from FP16 to INT8. Walk me through how you'd do it without destroying quality." Your answer: "I'll just convert all weights to INT8 format" ❌ Rejected. Here's the critical mistake: Don't say:…

An exciting milestone for AI in science: Our C2S-Scale 27B foundation model, built with @Yale and based on Gemma, generated a novel hypothesis about cancer cellular behavior, which scientists experimentally validated in living cells. With more preclinical and clinical tests,…

Google shocked the world. They solved the code security nightmare that's been killing developers for decades. DeepMind's new AI agent "Codemender" just auto-finds and fixes vulnerabilities in your code. Already shipped 72 solid fixes to major open source projects. This is…

My brain broke when I read this paper. A tiny 7 Million parameter model just beat DeepSeek-R1, Gemini 2.5 pro, and o3-mini at reasoning on both ARG-AGI 1 and ARC-AGI 2. It's called Tiny Recursive Model (TRM) from Samsung. How can a model 10,000x smaller be smarter? Here's how…

You're in a ML Engineer interview at Google, and the interviewer asks: "GPUs vs TPUs which one to choose?" Here's how you answer:

United States Trends

- 1. Veterans Day 419K posts

- 2. Tangle and Whisper 4,400 posts

- 3. Woody 16.9K posts

- 4. Jeezy 1,583 posts

- 5. State of Play 30.3K posts

- 6. Toy Story 5 23.4K posts

- 7. AiAi 12.2K posts

- 8. Luka 87.5K posts

- 9. #ShootingStar N/A

- 10. Gambit 48.6K posts

- 11. Nico 150K posts

- 12. Errtime N/A

- 13. Wanda 32.6K posts

- 14. #SonicRacingCrossWorlds 3,091 posts

- 15. NiGHTS 59.4K posts

- 16. Tish 6,794 posts

- 17. Nightreign DLC 14.4K posts

- 18. Antifa 206K posts

- 19. SBMM 1,869 posts

- 20. Wike 138K posts

Something went wrong.

Something went wrong.