Hussein Hazimeh

@hazimeh_h

Research scientist @OpenAI. @MIT phd

Today OpenAI announced o3, its next-gen reasoning model. We've worked with OpenAI to test it on ARC-AGI, and we believe it represents a significant breakthrough in getting AI to adapt to novel tasks. It scores 75.7% on the semi-private eval in low-compute mode (for $20 per task…

o3 and o3-mini are my favorite models ever. o3 essentially solves AIME (>90%), GPQA (~90%), ARC-AGI (~90%), and it gets 1/4th of FrontierMath. To understand how insane 25% on FrontierMath is, see this quote by Tim Gowers. The sparks are intensifying ...

Scaling Laws for Downstream Task Performance of Large Language Models. Studies how the choice of the pretraining data and its size affect downstream cross-entropy and BLEU score. arxiv.org/abs/2402.04177

Very excited to share the paper from my last @GoogleAI internship: Scaling Laws for Downstream Task Performance of LLMs. arxiv.org/pdf/2402.04177… w/ Natalia Ponomareva, @hazimeh_h, Dimitris Paparas, Sergei Vassilvitskii, and @sanmikoyejo 1/6

'LibMTL: A Python Library for Deep Multi-Task Learning', by Baijiong Lin, Yu Zhang. jmlr.org/papers/v24/22-… #mtl #python #libmtl

'L0Learn: A Scalable Package for Sparse Learning using L0 Regularization', by Hussein Hazimeh, Rahul Mazumder, Tim Nonet. jmlr.org/papers/v24/22-… #sparse #regularization #l0learn

A new preprint on ALL-SUM: an ensembled L0Learn method for faster, more accurate polygenic risk scores (PRSs) using GWAS summary stats. It ensembles L0+L2 regularized PRSs across tuning grids. Applied to many UKB phenotypes. Congrats to @Tony_Chen6, @AndrewHaoyu, Rahul Mazumder

Very excited to share our preprint on a new method for constructing polygenic risk scores from GWAS summary statistics. Big thanks to my amazing advisors @AndrewHaoyu, Rahul Mazumder (MIT), and @XihongLin for their support on this project. biorxiv.org/content/10.110…

Check out our work on Flexible Modeling and Multitask Learning using Differentiable Tree Ensembles in KDD'22. Paper: dl.acm.org/doi/10.1145/35… Our paper won the Best Student Paper Award at KDD'22 (Research Track).

Great article covering six papers on Mixture of Experts, of which one is ours 🙂 (DSelect-K with @hazimeh_h, @achowdhery, and others): arxiv.org/abs/2106.03760

my article about MoE routing layers is out! I took it down to 6 routing papers:

I've got a big ol' post coming out in artificial fintelligence where I read 7 routing papers for MoE models and summarize them. hopefully coming out later this week (maybe weekend).

Really excited to share the beta release of #SynthID, a watermarking tool created by @GoogleDeepMind and made available via @GoogleCloud to help tag and identify AI-generated images

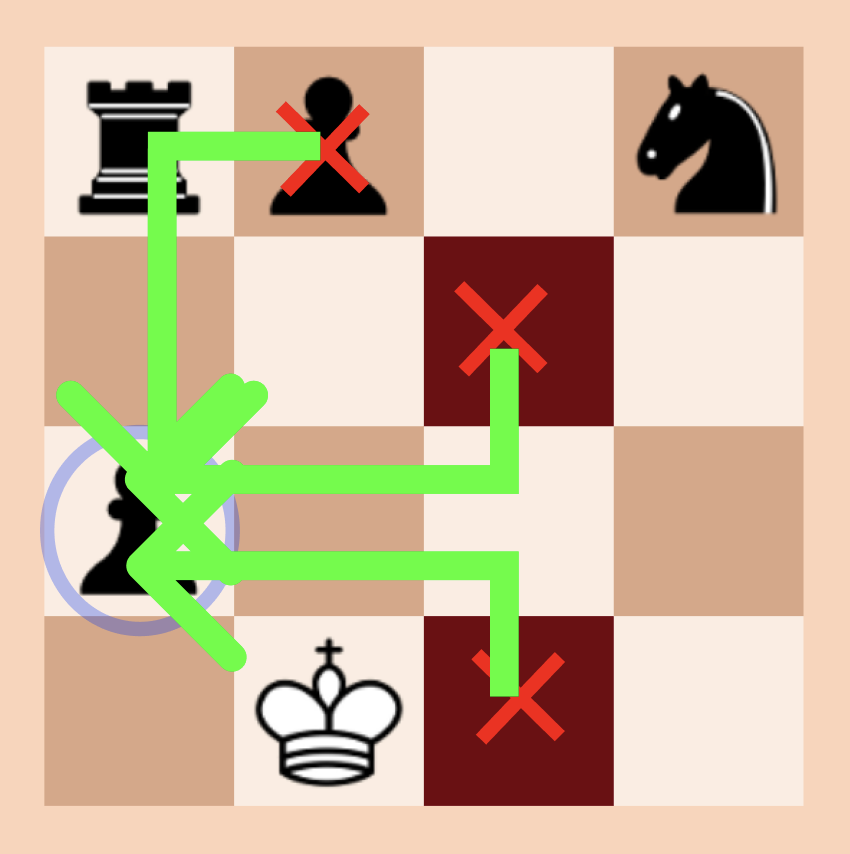

(1/2) I used ML to make Chess more fun in Single-Player. If you: - are an ML practitioner / work in AI, - like chess, math or puzzle games, - enjoy stories abt code, hacks, and setbacks, then this tech story is for you. A deep dive into the ML side of puzzle design.

Check out our work on pruning neural nets! The method leverages several ideas from combinatorial optimization and high-dimensional statistics to enable faster and more accurate pruning. Blog: goo.gle/3E12kAc ICML paper: proceedings.mlr.press/v202/benbaki23…

Introducing CHITA, an optimization-based approach for pruning pre-trained neural networks at scale. Learn how it leverages advances from several fields and outperforms state-of-the-art pruning methods in terms of scalability and performance tradeoffs → goo.gle/3E12kAc

New Article: "How to DP-fy ML: A Practical Guide to Machine Learning with Differential Privacy" by Ponomareva, Hazimeh, Kurakin, Xu, Denison, McMahan, Vassilvitskii, Chien, and Thakurta jair.org/index.php/jair…

Today, we discuss the current state of differentially private ML (DP-ML) research with an overview of common techniques for obtaining DP-ML models, engineering challenges, mitigation techniques and current open questions. Learn more ↓ goo.gle/3Iril5k

Excited to announce that our image immunization paper is accepted as an *Oral* at #ICML2023! 🔥 Come chat with us about it in Hawaii!

Last week on @TheDailyShow, @Trevornoah asked @OpenAI @miramurati a (v. important) Q: how can we safeguard against AI-powered photo editing for misinformation? youtu.be/Ba_C-C6UwlI?t=… My @MIT students hacked a way to "immunize" photos against edits: gradientscience.org/photoguard/ (1/8)

How easy is it for adversaries to hide image content from classifiers through obfuscations? Our new benchmark allows you to evaluate this! Dataset and evaluation code: github.com/deepmind/image… Paper: arxiv.org/abs/2301.12993. Joint work between @DeepMind, @Google & @GoogleAI 🧵

Training ML models with differential privacy can be challenging. To aid practitioners, we wrote a detailed survey of known best practices for DP training of ML models: arxiv.org/abs/2303.00654

Mark your calendars! The 2022 MIP Workshop feat. DANniversary, will be held May 23-26, 2022, at DIMACS, Rutgers University. This year MIP will also host a computational competition, to be launched on November 16th. More info at mixedinteger.org/2022/
