Minseong Bae

@kylebae1017

M.S. student at KAIST @MLVLab. Interested in #AI4Science.

Diffusion language models are making a splash (again)! To learn more about this fascinating topic, check out:
⏩ my video tutorial (and references within): youtu.be/8BTOoc0yDVA
⏩ discrete diffusion reading group: @diffusion_llms

YouTube: Diffusion Language Models: The Next Big Shift in GenAI

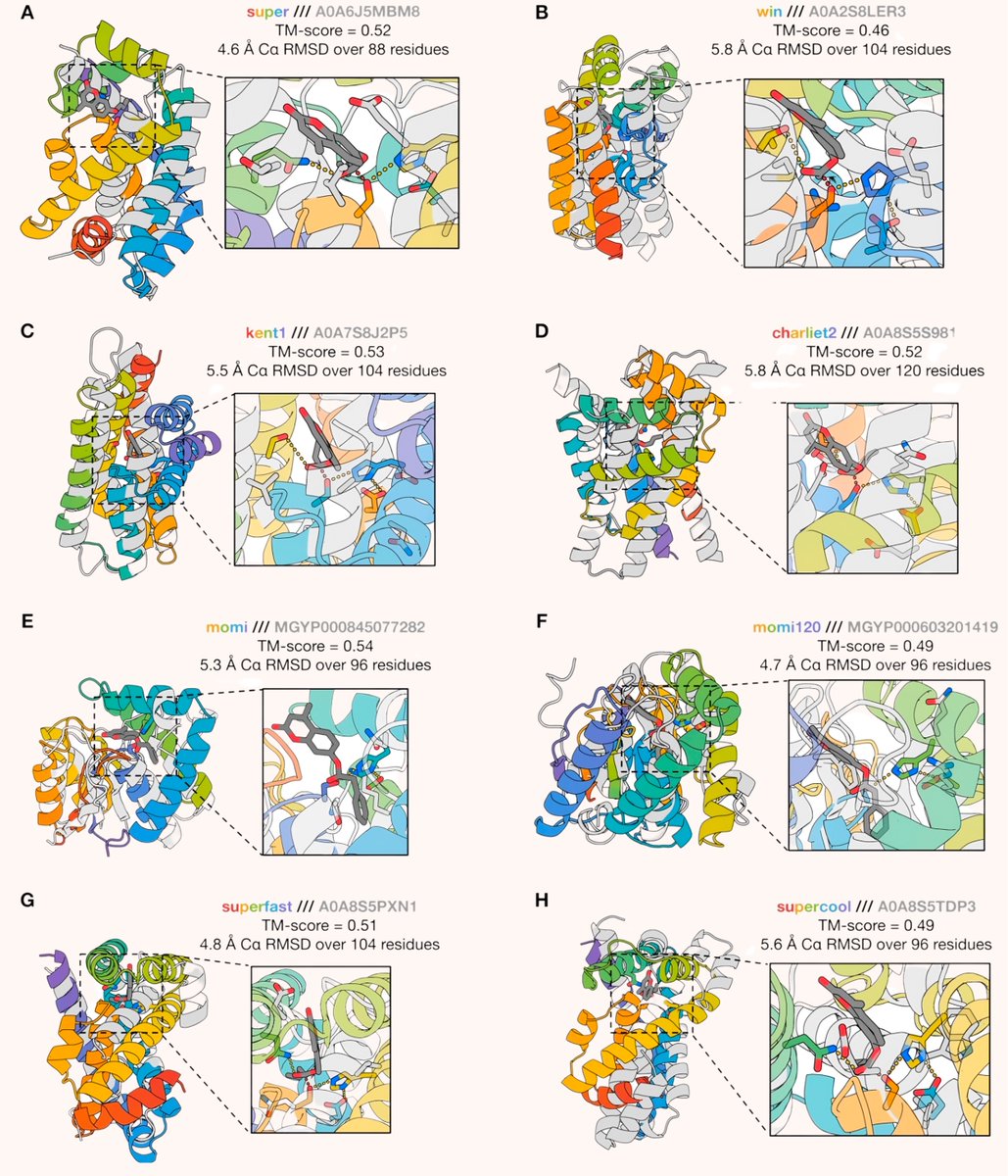

Atomically accurate de novo antibody design with diffusion models

Antibodies recognize specific molecular “patches” on proteins—epitopes. Modern therapeutics depend heavily on finding antibodies that bind exactly the right patch. But today, we still obtain them mostly through…

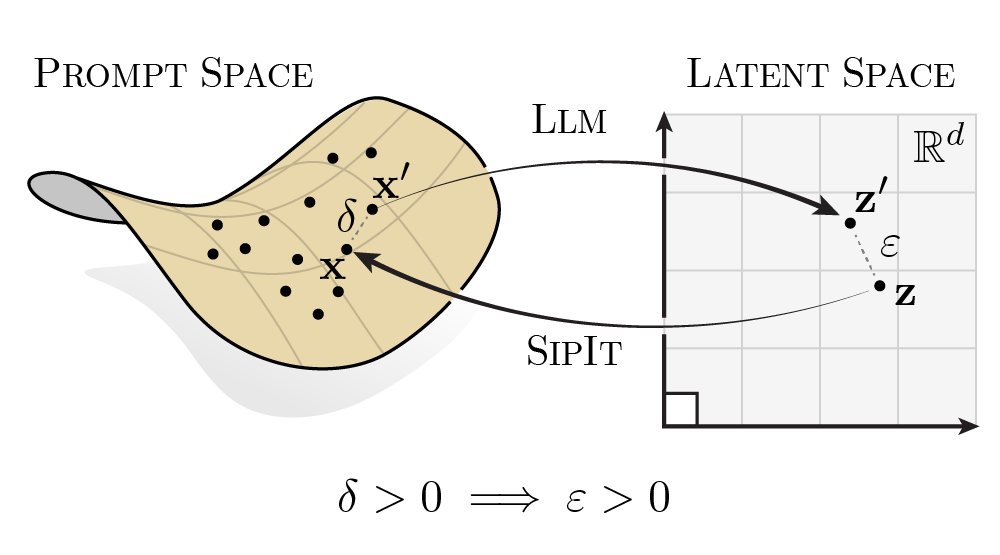

LLMs are injective and invertible. In our new paper, we show that different prompts always map to different embeddings, and this property can be used to recover input tokens from individual embeddings in latent space. (1/6)

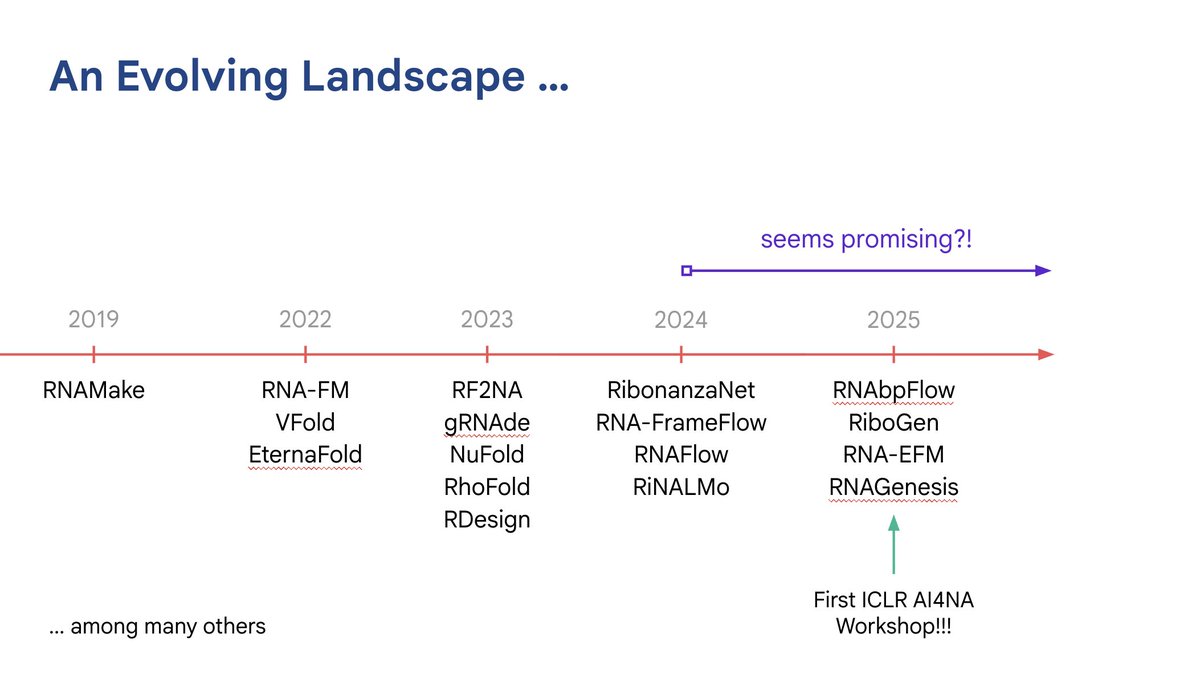

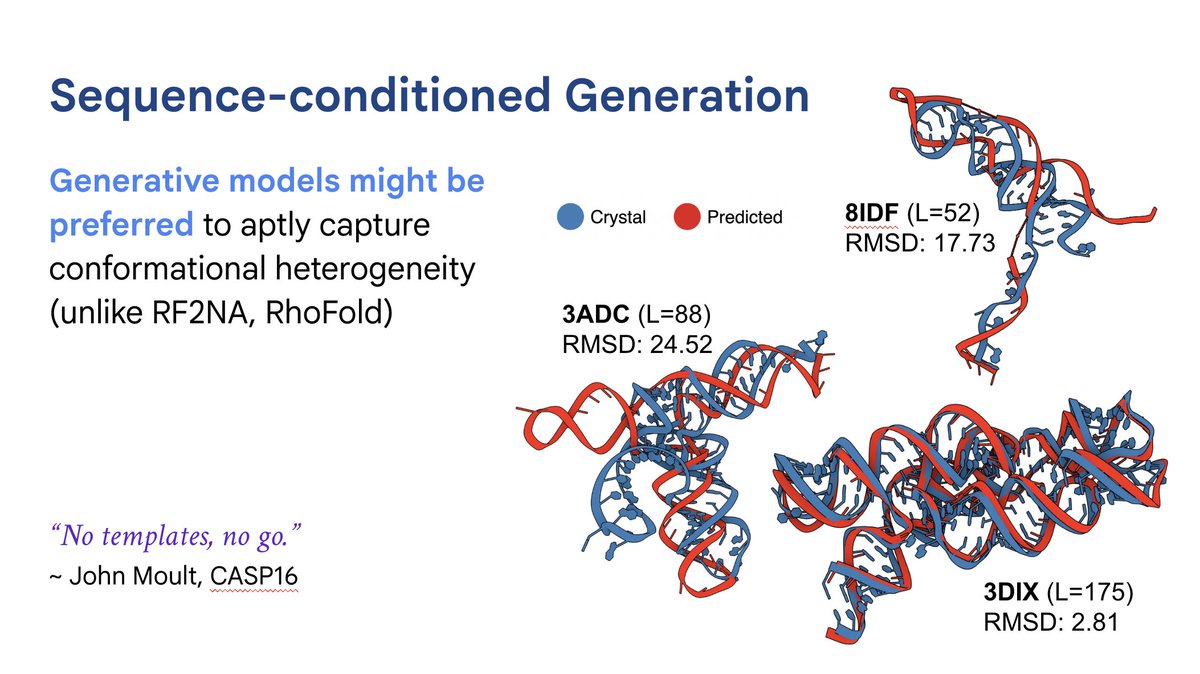

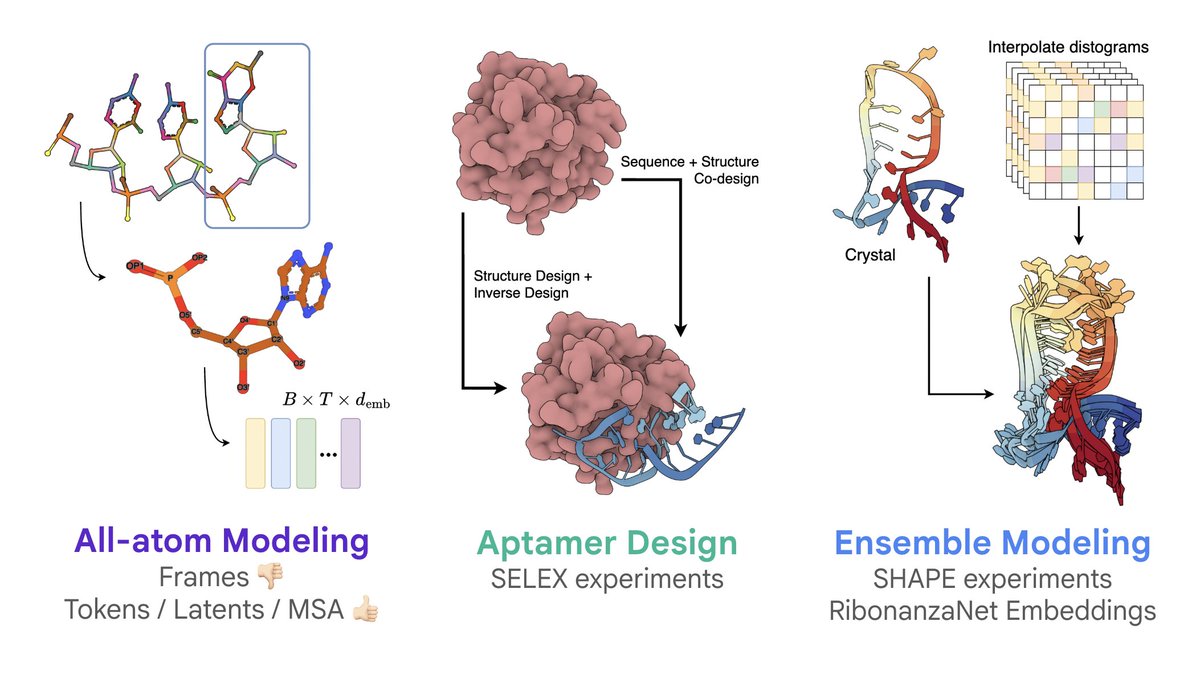

Recently gave a talk at @FlagshipPioneer on how far we've come in AI methods for RNA design, and where the field is potentially heading. Shared a similar (shorter) version with Rhiju Das' group at Stanford BioE this summer. Lots of fun convos thereafter 🤔 The talk contains some…

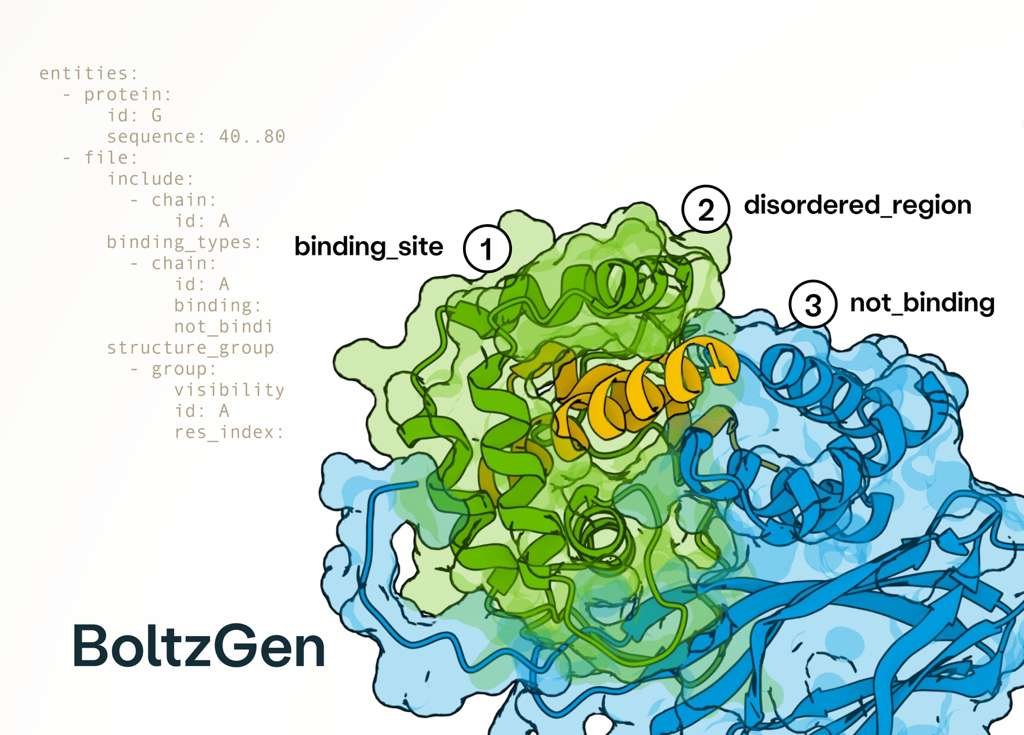

Excited to release BoltzGen which brings SOTA folding performance to binder design! The best part of this project has been collaborating with many leading biologists who tested BoltzGen at an unprecedented scale, showing success on many novel targets and pushing its limits! 🧵..

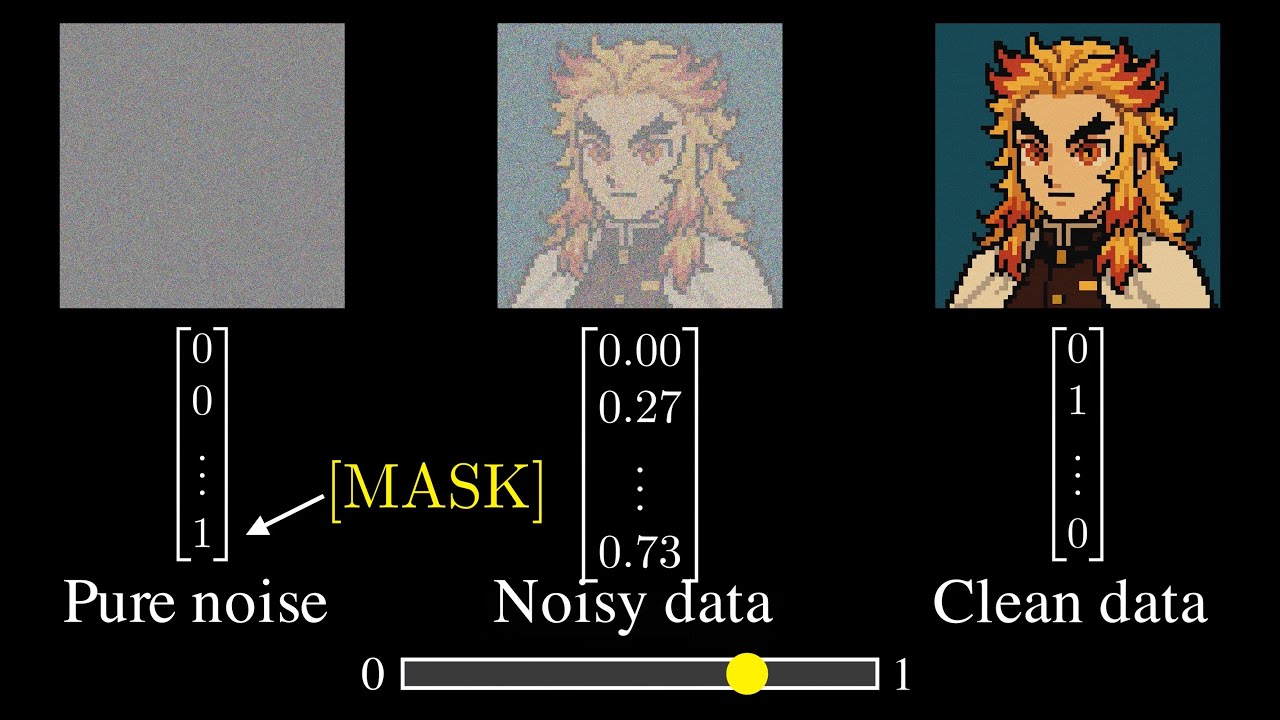

Nice, short post illustrating how simple text (discrete) diffusion can be. Diffusion (i.e. parallel, iterated denoising, top) is the pervasive generative paradigm in image/video, but autoregression (i.e. go left to right, bottom) is the dominant paradigm in text. For audio I've…

BERT is just a Single Text Diffusion Step! (1/n) When I first read about language diffusion models, I was surprised to find that their training objective was just a generalization of masked language modeling (MLM), something we’ve been doing since BERT from 2018. The first…
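Since the BERT thread frames text diffusion as a generalization of masked language modeling, here is a minimal Python sketch of that relationship. It assumes a masked (absorbing-state) diffusion with a linear schedule: the masking rate t is drawn from U(0, 1) and the cross-entropy on masked tokens is weighted by 1/t. BERT then corresponds to a single fixed noise level (15% masking) with no weighting. The names `mask_tokens`, `masked_loss`, `bert_step`, `diffusion_step` and the `log_prob` model hook are hypothetical illustrations, not code from the post.

```python
import math
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob, rng):
    """Forward (noising) process: independently replace each token
    with MASK with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else tok for tok in tokens]


def masked_loss(tokens, noisy, log_prob, weight):
    """Negative log-likelihood summed over masked positions only, times `weight`.

    log_prob(noisy, i, tok) is a hypothetical model hook returning
    log p(token at position i == tok | noisy sequence).
    """
    total = 0.0
    for i, (tok, obs) in enumerate(zip(tokens, noisy)):
        if obs == MASK:
            total -= log_prob(noisy, i, tok)
    return weight * total


def bert_step(tokens, log_prob, rng):
    # BERT-style MLM: one fixed noise level (15% masking), unweighted loss.
    noisy = mask_tokens(tokens, 0.15, rng)
    return masked_loss(tokens, noisy, log_prob, weight=1.0)


def diffusion_step(tokens, log_prob, rng):
    # Masked-diffusion objective under an assumed linear schedule:
    # sample t ~ U(0, 1), mask with probability t, weight the loss by 1/t.
    t = max(rng.random(), 1e-3)  # clamp to avoid dividing by ~0
    noisy = mask_tokens(tokens, t, rng)
    return masked_loss(tokens, noisy, log_prob, weight=1.0 / t)


def uniform_model(noisy, i, tok):
    # Hypothetical toy "model": uniform distribution over a 1000-token vocabulary.
    return -math.log(1000)


if __name__ == "__main__":
    rng = random.Random(0)
    sentence = "the cat sat on the mat".split()
    print("BERT-style loss:", bert_step(sentence, uniform_model, rng))
    print("diffusion loss: ", diffusion_step(sentence, uniform_model, rng))
```

With a real bidirectional Transformer behind `log_prob`, fixing t = 0.15 and dropping the 1/t weight turns `diffusion_step` into a single BERT-style update, which is one way to read the thread's title.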

Anyone know what program & settings were used to make these figs? I assume it isn't PyMOL or Chimera.

Protein designers have long dreamed of building new enzymes from scratch. Unfortunately, enzymes are highly dynamic; they move around a lot and have intermediate states that are difficult to design computationally. But a recent paper from David Baker’s group at the Institute…

In diffusion LMs, discrete methods have all but displaced continuous ones (🥲). Interesting new trend: why not both? Use continuous methods to make discrete diffusion better.
- Diffusion duality: arxiv.org/abs/2506.10892
- CADD: arxiv.org/abs/2510.01329
- CCDD: arxiv.org/abs/2510.03206

New survey on diffusion language models: arxiv.org/abs/2508.10875 (via @NicolasPerezNi1). Covers pre/post-training, inference and multimodality, with very nice illustrations. I can't help but feel a bit wistful about the apparent extinction of the continuous approach after 2023 🥲

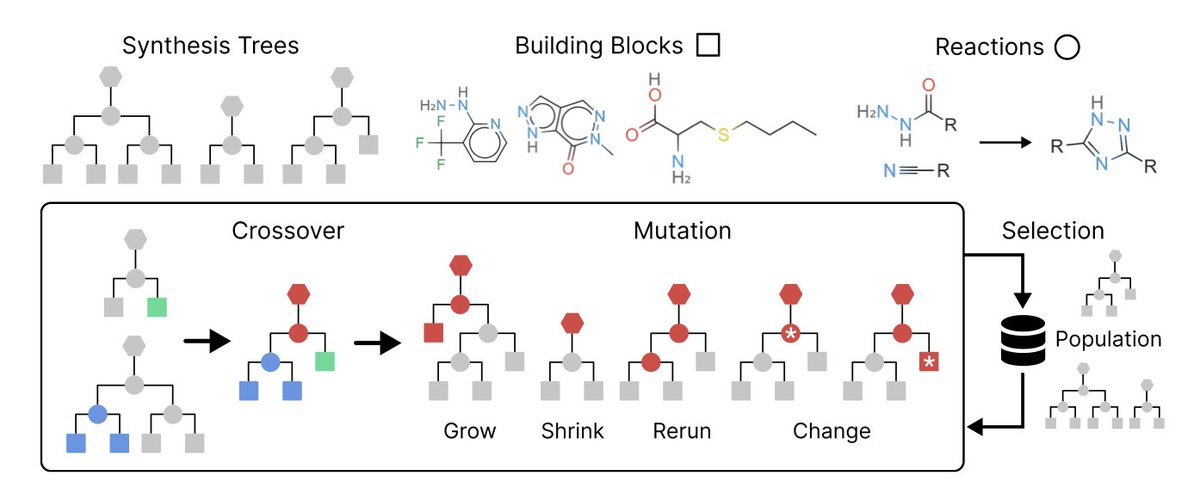

A Genetic Algorithm for Navigating Synthesizable Molecular Spaces

1. This paper introduces SynGA, a genetic algorithm designed to operate directly over synthesis routes, ensuring that all generated molecules are synthesizable. This is a significant step forward in addressing the…

SimpleFold: Folding Proteins is Simpler than You Think

"we introduce SimpleFold, the first flow-matching based protein folding model that solely uses general purpose transformer blocks. Protein folding models typically employ computationally expensive modules involving…

Intern-S1: A Scientific Multimodal Foundation Model

"Intern-S1 is a multimodal Mixture-of-Experts (MoE) model with 28 billion activated parameters and 241 billion total parameters, continually pre-trained on 5T tokens, including over 2.5T tokens from scientific domains. In the…

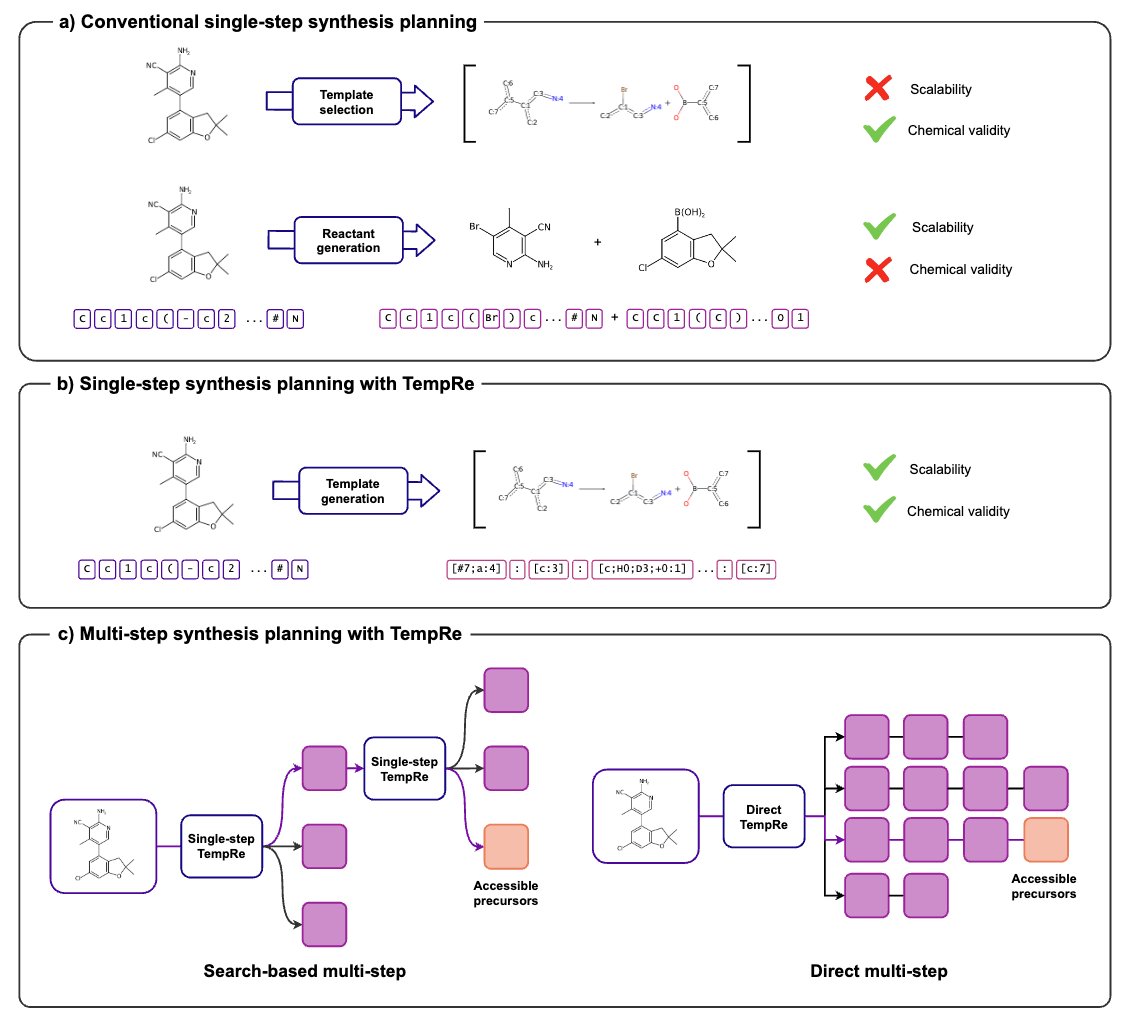

TempRe reframes retrosynthesis as template generation: a seq-to-seq Transformer predicts SMARTS templates token-by-token (P2T) and even autowrites full multi-step routes (Direct TempRe) #GenAI #DrugDiscovery

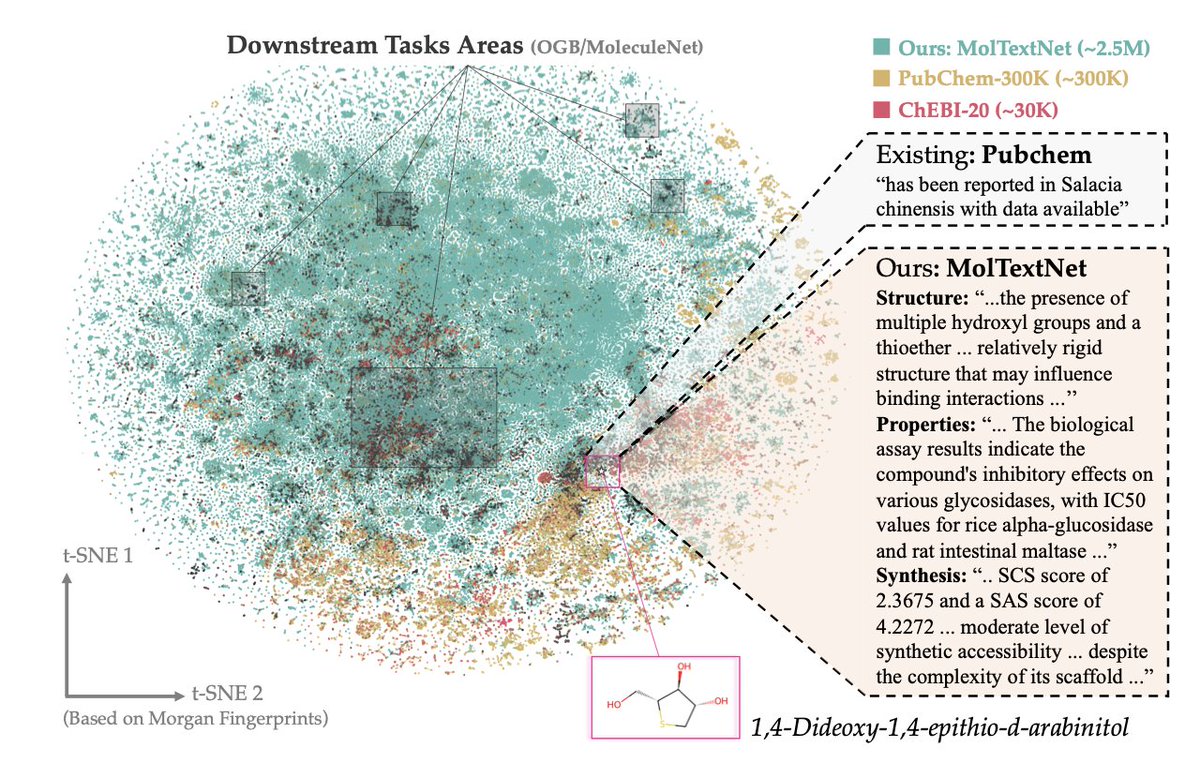

MolTextNet: A Two-Million Molecule-Text Dataset for Multimodal Molecular Learning

1. MolTextNet introduces a dataset of 2.5 million molecule-text pairs, designed to support large-scale multimodal learning between molecular graphs and natural language. Each entry provides…

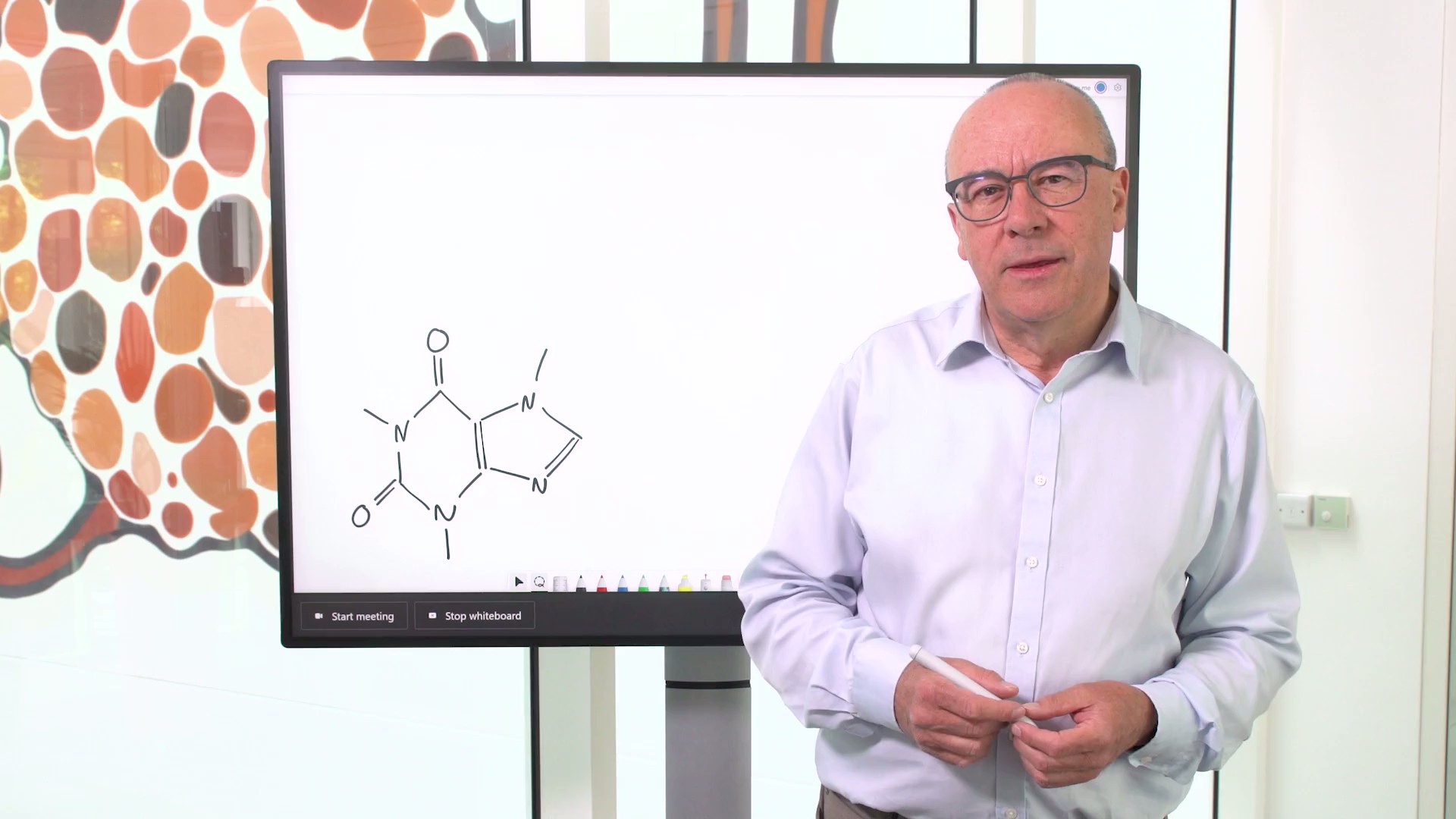

Chris Bishop, Technical Fellow and Director of Microsoft Research AI for Science, explains how Microsoft researchers recently achieved a milestone in solving a grand challenge that has hampered scientists and slowed innovation for decades. Watch the video: msft.it/6019SQtUX

ChatGPT can now analyze, manipulate, and visualize molecules and chemical information via the RDKit library. Useful for scientific work across health, biology, and chemistry.

The two most common questions I get via cold email are 1) what should I work on, and 2) how do I get a job doing research, engineering, etc.? I wrote a new post summarizing my advice for people trying to enter Biology+ML, from undergrads to early career. 👇 (link below)

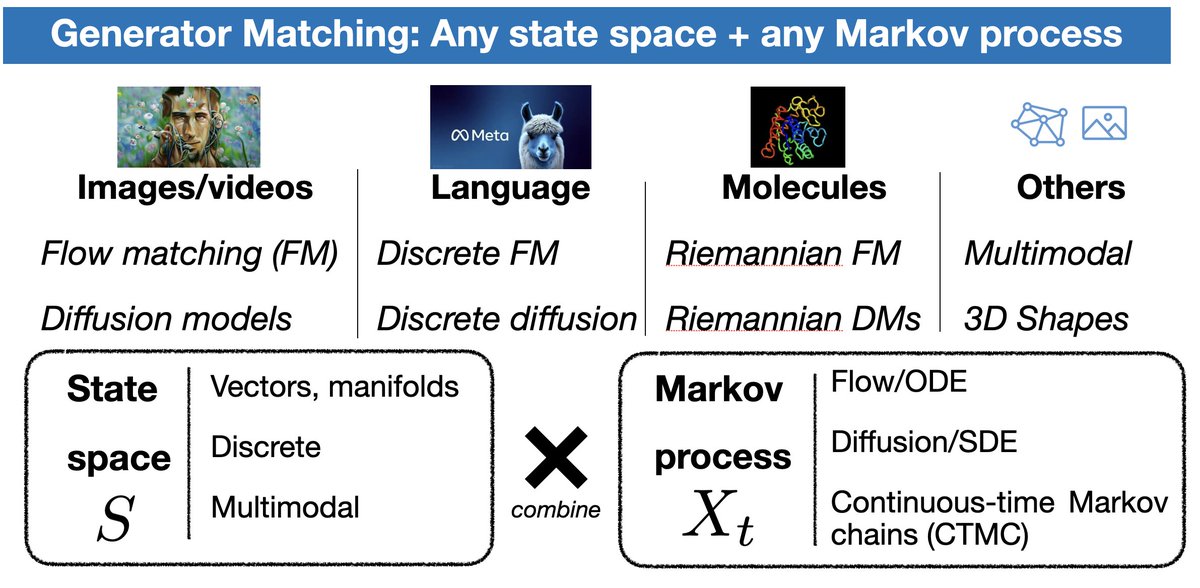

Come to our oral presentation on Generator Matching at ICLR 2025 tomorrow (Saturday). Learn about a generative model that works for any data type and Markov process! Oral: 3:30pm (Peridot 202-203, session 6E) Poster: 10am-12:30pm #172 (Hall 3 + Hall 2B) arxiv.org/abs/2410.20587

Very excited to share our interview with @DrYangSong. This is Part 2 of our history of diffusion series — score matching, the SDE/ODE interpretation, consistency models, and more. Enjoy!

Free alternative to ChemDraw! Meet MolDraw. 100% free to use!

Today in the @timmermanreport -- why we need natural language, and not just DNA, RNA, and protein, to represent complex concepts in biology: timmermanreport.com/2025/01/ai-nee… @ldtimmerman has the best blog in the world for biotech. Amazing to be publishing something with him!
