Workshop on Large Language Model Memorization

@l2m2_workshop

The First Workshop on Large Language Model Memorization.

📢 @aclmeeting notifications have been sent out, making this the perfect time to finalize your commitment. Don't miss the opportunity to be part of the workshop! 🔗 Commit here: openreview.net/group?id=aclwe… 🗓️ Deadline: May 20, 2025 (AoE) #ACL2025 #NLProc

L2M2 will be tomorrow at VIC, room 1.31-32! We hope you will join us for a day of invited talks, orals, and posters on LLM memorization. The full schedule and accepted papers are now on our website: sites.google.com/view/memorizat…

I'm psyched for my 2 *different* talks on Friday @aclmeeting: 1. @llm_sec (11:00): What does it mean for an AI agent to preserve privacy? 2. @l2m2_workshop (16:00): Emergent Misalignment thru the Lens of Non-verbatim Memorization (& phonetic to visual attacks!) Join us!

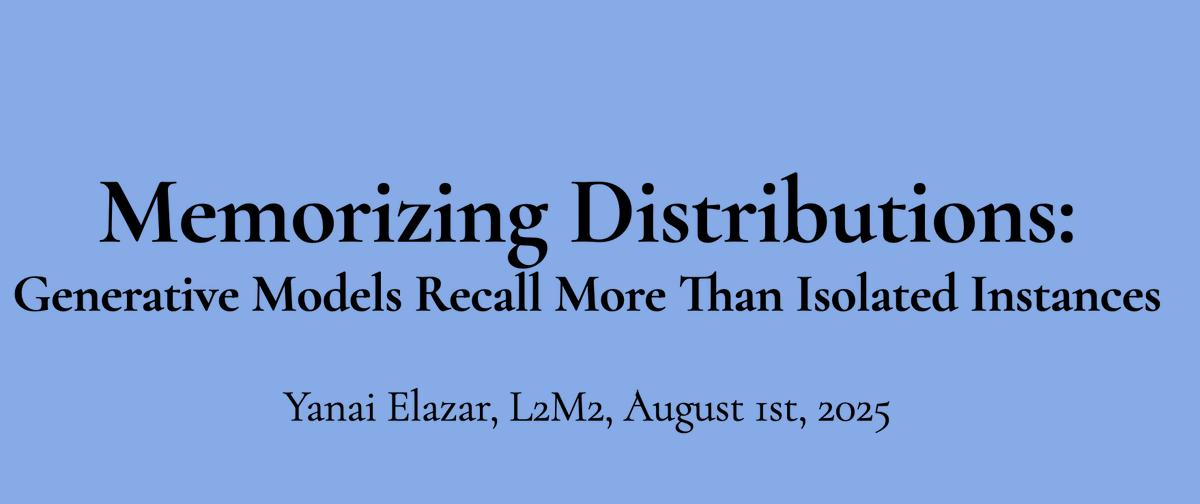

I'll be at #ACL2025 next week! Catch me at the poster sessions, eating sachertorte, schnitzel and speaking about distributional memorization at the @l2m2_workshop

L2M2 is happening this Friday in Vienna at @aclmeeting #ACL2025NLP! We look forward to the gathering of memorization researchers in the NLP community. Invited talks include: @yanaiela @niloofar_mire @rzshokri and see our website for the full program. sites.google.com/view/memorizat…

For years it’s been an open question — how much is a language model learning and synthesizing information, and how much is it just memorizing and reciting? Introducing OLMoTrace, a new feature in the Ai2 Playground that begins to shed some light. 🔦

Do language models just copy text they've seen before, or do they have generalizable abilities? ⬇️This new tool from Ai2 will be very useful for such questions! And allow me to plug our paper on this topic: We find that LLMs are mostly not copying! direct.mit.edu/tacl/article/d… 1/2

As infini-gram surpasses 500 million API calls, today we're announcing two exciting updates: 1. Infini-gram is now open-source under Apache 2.0! 2. We indexed the training data of OLMo 2 models. Now you can search in the training data of these strong, fully-open LLMs. 🧵 (1/4)

Hi all, reminder that our direct submission deadline is April 15th! We are co-located at ACL'25 and you can submit archival or non-archival. You can also submit work published elsewhere (non-archival) Hope to see your submission! sites.google.com/view/memorizat…

Want to know what training data has been memorized by models like GPT-4? We propose information-guided probes, a method to uncover memorization evidence in *completely black-box* models, without requiring access to 🙅‍♀️ Model weights 🙅‍♀️ Training data 🙅‍♀️ Token probabilities 🧵1/5

Adding or removing PII in LLM training can *unlock previously unextractable* info. Even if “John.Mccarthy” never reappears, enough Johns & Mccarthys during post-training can make it extractable later! New paper on PII memorization & n-gram overlaps: arxiv.org/abs/2502.15680

we show for the first time ever how to privacy audit LLM training. we give new SOTA methods that show how much models can memorize. by using our methods, you can know beforehand whether your model is going to memorize its training data, and how much, and when, and why! (1/n 🧵)

🎉 Happy to announce that the L2M2 workshop has been accepted at @aclmeeting! #NLProc #ACL2025 More details will follow soon. Stay tuned and spread the word! 📣
