你可能会喜欢

i love the fact that the model keeps learning (valid loss decrease) even when the training loss (blue) seems to plateau. there is something very deep happening in that jiggling of the training loss

I spent the past month reimplementing DeepMind’s Genie 3 world model from scratch Ended up making TinyWorlds, a 3M parameter world model capable of generating playable game environments demo below + everything I learned in thread (full repo at the end)👇🏼

We’re announcing a major advance in the study of fluid dynamics with AI 💧 in a joint paper with researchers from @BrownUniversity, @nyuniversity and @Stanford.

New blog post: Implicit ODE Solvers Are Not Universally More Robust than Explicit ODE Solvers, Or Why No ODE Solver is Best Talks about how "robust" methods can be less robust in practice. Justifies hundreds of methods in #julialang #sciml stochasticlifestyle.com/implicit-ode-s…

stochasticlifestyle.com

Implicit ODE Solvers Are Not Universally More Robust than Explicit ODE Solvers, Or Why No ODE...

A very common adage in ODE solvers is that if you run into trouble with an explicit method, usually some explicit Runge-Kutta method like RK4, then you should try an implicit method. Implicit...

Does a smaller latent space lead to worse generation in latent diffusion models? Not necessarily! We show that LDMs are extremely robust to a wide range of compression rates (10-1000x) in the context of physics emulation. We got lost in latent space. Join us 👇

Really cool to see how the bigger-doesn't-mean-better trends in language models also seem to hold up for science models Hope this means that computational physics will no longer be solely in the domain of enormous HPC clusters

Does a smaller latent space lead to worse generation in latent diffusion models? Not necessarily! We show that LDMs are extremely robust to a wide range of compression rates (10-1000x) in the context of physics emulation. We got lost in latent space. Join us 👇

there is the urgent need for understanding the internal behavior of deep nets. (I am at a numerical analysis conference, tired of listening to talks blindly applying a random architecture to Navier Stokes with periodic boundary conditions)

The big breakthrough for convnets was the first GPU-accelerated CUDA implementation, which immediately started winning first place in image classification competitions. Remember when that happened? I do. That was Dan Ciresan in 2011

Who invented convolutional neural networks (CNNs)? 1969: Fukushima had CNN-relevant ReLUs [2]. 1979: Fukushima had the basic CNN architecture with convolution layers and downsampling layers [1]. Compute was 100 x more costly than in 1989, and a billion x more costly than…

![SchmidhuberAI's tweet image. Who invented convolutional neural networks (CNNs)?

1969: Fukushima had CNN-relevant ReLUs [2].

1979: Fukushima had the basic CNN architecture with convolution layers and downsampling layers [1]. Compute was 100 x more costly than in 1989, and a billion x more costly than…](https://pbs.twimg.com/media/GxbseeFWQAAyDY7.png)

'Mathematics compares the most diverse phenomena and discovers the secret analogies that unite them.' -- Joseph Fourier

Every organism yearns to maximize its mutual information with the future. One way is to live forever, but the optimal way is to be fruitful (genetically and memetically) and multiply your bits (ideas and genes) so they take up more of the future lightcone.

1 year later, still far away

i'm increasingly convinced that "transformative ai" is going to look like an abundance of specialized models for everything from drug design to weather sims to robotics to supply chains, not one agent to rule them all. we're going to need a lot more ai researchers

humans are imitation machines, the base level of “intelligence” has already been achieved

LLMs are trained to imitate patterns of language, not to discover or verify truth. So, when asked to speak as an expert in an area where perceived experts have a widespread misconception, the LLM will parrot that misconception, adopting the register and vocabulary of experts.

it got navier-stokes

One nice thing you can do with an interactive world model, look down and see your footwear ... and if the model understands what puddles are. Genie 3 creation.

The point of college is not to prepare you for "professional success." It's to improve your mind. Nor are these distinct paths. The people who achieve the greatest "professional success" are those who think of college as more than job training.

Upgraded from Llama 3 to Qwen3 as my go-to model for research experiments, so I implemented qwen3 from scratch: github.com/rasbt/LLMs-fro… Trade-off: Qwen3 0.6B is deeper (28x vs 16x layers) & slower than the wider Llama 3 1B but more memory efficient due to fewer params

New paper on the generalization of Flow Matching arxiv.org/abs/2506.03719 🤯 Why does flow matching generalize? Did you know that the flow matching target you're trying to learn **can only generate training points**? with @Qu3ntinB, Anne Gagneux & Rémi Emonet 👇👇👇

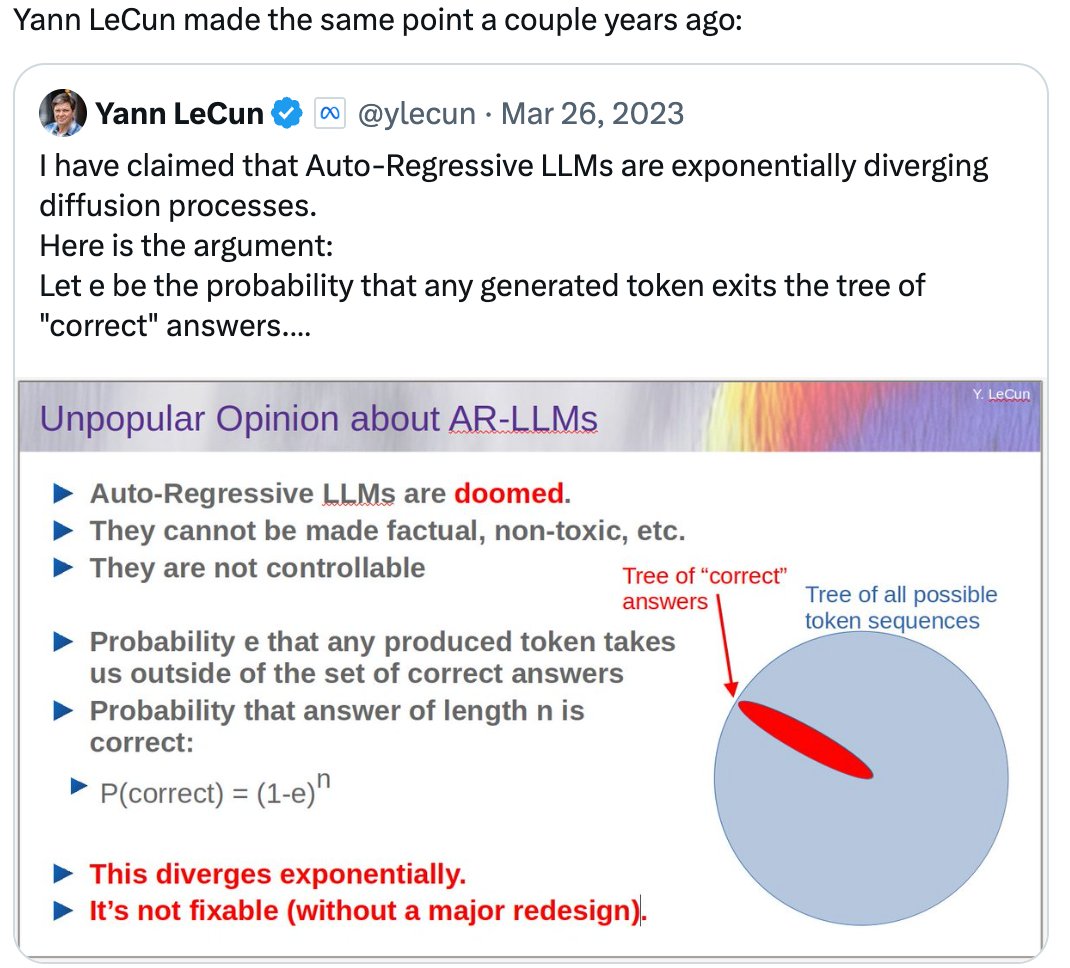

Is exponentially accumulating errors what LeCun said all along? That part might be true, but it doesn't mean LLMs are 'doomed'. Dropping the error rate from 10% to 1% (per 10min) makes 10h tasks possible. In practice, the error rate has been halving every 4 months(!). In…

Why can AIs code for 1h but not 10h? A simple explanation: if there's a 10% chance of error per 10min step (say), the success rate is: 1h: 53% 4h: 8% 10h: 0.002% @tobyordoxford has tested this 'constant error rate' theory and shown it's a good fit for the data chance of…

United States 趋势

- 1. #SNME 27.2K posts

- 2. Georgia 48.4K posts

- 3. Lagway 3,654 posts

- 4. Drew 120K posts

- 5. Jaire 9,225 posts

- 6. Jade Cargill 11.4K posts

- 7. Forever Young 34.7K posts

- 8. #UFCVegas110 8,286 posts

- 9. Gators 6,189 posts

- 10. #GoDawgs 5,404 posts

- 11. Florida 78.4K posts

- 12. Lebby 1,001 posts

- 13. Gunner Stockton 1,082 posts

- 14. Nigeria 591K posts

- 15. Salter N/A

- 16. Shapen 1,037 posts

- 17. Miami 62.4K posts

- 18. Howie 3,112 posts

- 19. #HailState 1,155 posts

- 20. Tiffany 21.3K posts

Something went wrong.

Something went wrong.