LTL-UvA

@ltl_uva

Language Technology Lab @UvA_Amsterdam

Key Finding 2: Observing that the model's self-knowledge becomes increasingly reliable at distinguishing misaligned from clean data at the token level, we propose self-correction, which leverages this self-knowledge to correct the training supervision.

I am happy to share that our paper "How to Learn in a Noisy World? Self-correcting the Real-World Data Noise in Machine Translation" was accepted by NAACL 2025. Paper Link: arxiv.org/pdf/2407.02208 #NLP #NAACL

Key Finding 1: We simulate the primary source of noise in parallel corpora, i.e., semantic misalignment, and show that widely used sentence-level pre-filters have limited effectiveness in detecting it. This underscores the need to handle data noise in a fine-grained way.

The paper explores the effect of kNN on ASR using Whisper in general, as well as on speaker adaptation and bias. kNN is a non-parametric method that adapts the output of a model to a domain by searching for neighbouring tokens in a datastore at each step.
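To make the kNN idea above concrete, here is a minimal sketch of one decoding step, assuming a kNN-LM-style setup: a datastore of (hidden-state key, next-token) pairs, a softmax over negative squared distances, and a fixed interpolation weight. The function names, the toy datastore, and the parameters `k`, `temperature`, and `lam` are illustrative assumptions, not taken from the paper or from Whisper.

```python
import math

def knn_distribution(query, datastore, k, vocab_size, temperature=1.0):
    """Turn the k nearest datastore entries into a distribution over tokens.

    datastore is a list of (key_vector, token_id) pairs (hypothetical layout).
    """
    if not datastore:
        raise ValueError("datastore must not be empty")
    # Squared L2 distance from the query to every key, nearest first.
    scored = sorted(
        ((sum((q - x) ** 2 for q, x in zip(query, key)), tok)
         for key, tok in datastore),
        key=lambda pair: pair[0],
    )[:k]
    # Softmax over negative distances; subtracting the minimum avoids underflow.
    d_min = scored[0][0]
    weights = [math.exp(-(d - d_min) / temperature) for d, _ in scored]
    total = sum(weights)
    probs = [0.0] * vocab_size
    for w, (_, tok) in zip(weights, scored):
        probs[tok] += w / total  # neighbours sharing a token pool their mass
    return probs

def interpolate(model_probs, knn_probs, lam):
    """Mix the model's distribution with the kNN distribution."""
    return [lam * kp + (1.0 - lam) * mp for mp, kp in zip(model_probs, knn_probs)]

# Toy example: 2-dimensional keys and a 3-token vocabulary (all values made up).
datastore = [([0.0, 0.0], 0), ([1.0, 0.0], 1), ([0.0, 1.0], 1), ([5.0, 5.0], 2)]
model_probs = [0.6, 0.3, 0.1]  # the base model prefers token 0
knn_probs = knn_distribution([0.9, 0.1], datastore, k=2, vocab_size=3)
mixed = interpolate(model_probs, knn_probs, lam=0.5)
best_token = max(range(len(mixed)), key=mixed.__getitem__)
```

In this toy case the nearest neighbours favour token 1, so the interpolated prediction moves away from the base model's first choice. A real system would replace the exhaustive search with an approximate nearest-neighbour index and tune `k`, `temperature`, and `lam` on held-out data.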

LTL News: Happy to announce that Maya's paper was accepted to NAACL 2025 (Findings) 🥳 #naacl #nlp Paper Link: arxiv.org/abs/2410.18850

We show empirically that LLMs fail to exploit grammatical explanations for translation; instead we find parallel examples mainly drive translation performance. While grammatical knowledge does not help translation, LLMs benefit from our typological prompt for linguistic tasks.

LTL News: Happy to announce that Seth's paper was accepted to ICLR (spotlight) 🥳 @sethjsa Paper Link: arxiv.org/abs/2409.19151

Our work “Can LLMs Really Learn to Translate a Low-Resource Language from One Grammar Book?” is now on arXiv! arxiv.org/abs/2409.19151 - in collaboration with @davidstap, @diwuNLP, @c_monz , and Khalil Sima'an from @illc_amsterdam and @ltl_uva 🧵

4. Representational Isomorphism and Alignment of Multilingual Large Language Models. We will release Di's paper later! #EMNLP2024 #NLProc

Language Technology Lab got four papers accepted for #EMNLP2024! Congrats to authors Kata Naszadi, Shaomu Tan, Baohao Liao @baohao_liao, Di Wu @diwuNLP 🥳🥳

3. How to identify intrinsic task modularity within multilingual translation networks? Check out Shaomu's paper: arxiv.org/abs/2404.11201

2. ApiQ: Finetuning of 2-Bit Quantized Large Language Model, check out Baohao's paper: arxiv.org/abs/2402.05147 #EMNLP2024

1. Can you learn the meaning of words from someone who thinks you are smarter than you are? Check out Kata's paper: arxiv.org/pdf/2410.05851 #EMNLP2024 #NLProc

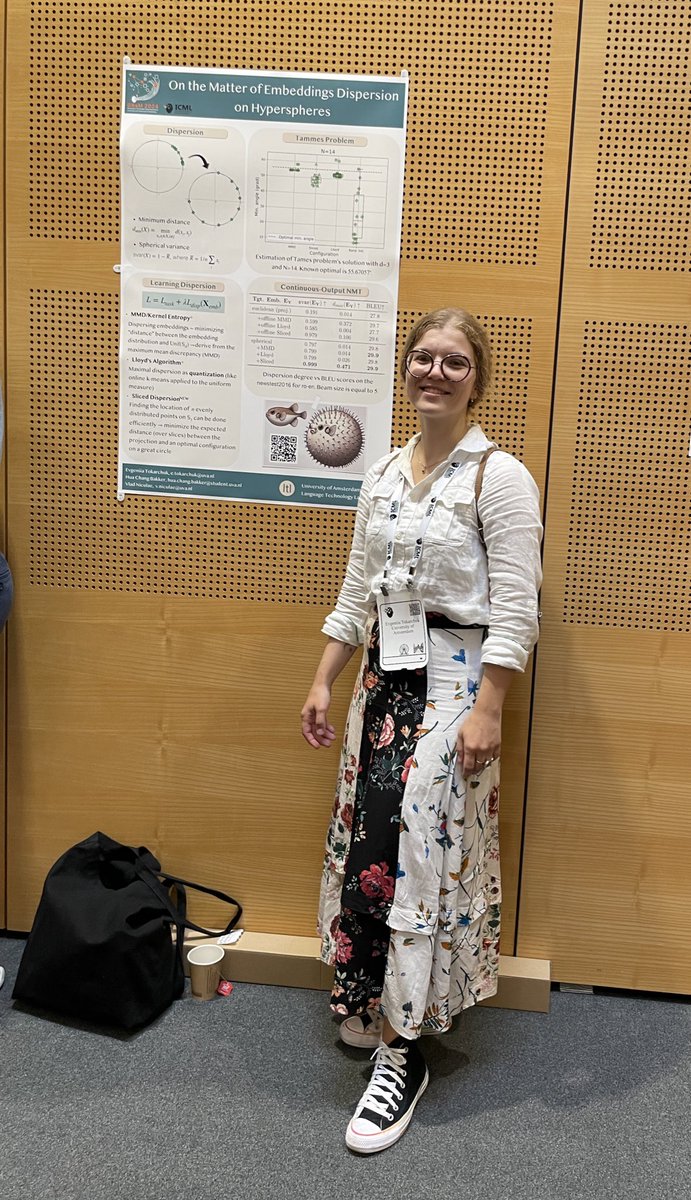

Just returned from MT Marathon 2024 in Prague - thanks to @ufal_cuni for organising a great week! Between the insightful talks and collaboration on a mini research project, I presented a poster of my recent work. And of course, we explored the sights of Prague too - in 30°C heat!

🚨 New paper 🚨 Our multilingual system for the WMT24 general shared task obtained: --- Constrained track: 6 🥇 3 🥈 1 🥉 --- Open & Constrained track: 1 🥇 2 🥈 2 🥉 A simple and effective pipeline to adapt LLMs to multilingual machine translation. Paper: arxiv.org/abs/2408.11512

Language Technology Lab at ACL🇹🇭! Busy poster presentation by @davidstap @diwuNLP #ACL2024 #ACL2024NLP

Inspiring day at GRaM @GRaM_org_ workshop! My only complaint: too short! I want more! 😁 Thanks to organizers for such a great experience ❤️ Amazing talks (personal favorites by @ninamiolane and by @bose_joey), great posters and panel session I genuinely enjoyed. #ICML2024

Come check our poster tomorrow at @GRaM_org_ @icmlconf if you want to discuss dispersion of text embeddings on hyperspheres! 27.07 at Poster session 2. #ICML2024
