Marco Ciccone

@mciccone_AI

Postdoctoral Fellow @VectorInst - Collaborative, Decentralized, Modular ML - Competition chair @NeurIPSConf 2021, 2022, 2023 - PhD @polimi ex @NVIDIA @NNAISENSE


🚨 Life update 🚨 I moved to Toronto 🇨🇦 and joined @VectorInst as a Postdoctoral Fellow to work with @colinraffel and his lab on collaborative, decentralized, and modular machine learning to democratize ML model development. Exciting times ahead! 🪿

🚨 Excited to give a tutorial on Model Merging next week at #NeurIPS2025 in San Diego! Join us on 📅Tue 2 Dec 9.30 am - 1 pm PST

Excited to be at @NeurIPSConf next week co-presenting our tutorial: "Model Merging: Theory, Practice, and Applications" 🔥 Proud to do this with my PhD advisor Colin Raffel, our research fellow @mciccone_AI, and an incredible panel of speakers 💙 #NeurIPS2025 #ModelMerging

I am excited to be organizing the 8th scaling workshop at @NeurIPSConf this year! Dec 5-6 | 5-8pm PT | Hard Rock Hotel San Diego Co-organized by @cerebras, @Mila_Quebec, and @mbzuai Register: luma.com/gy51vuqd

To put things in perspective on how crazy our field is:
- Yoshua Bengio has reached 1M citations over a career of almost 40 years
- The Transformer paper by itself has reached 200K citations in 8 years

I am at a point where I need a feature like "is it me or is it down" specifically for debugging multi-node and multi-GPU communications with NCCL

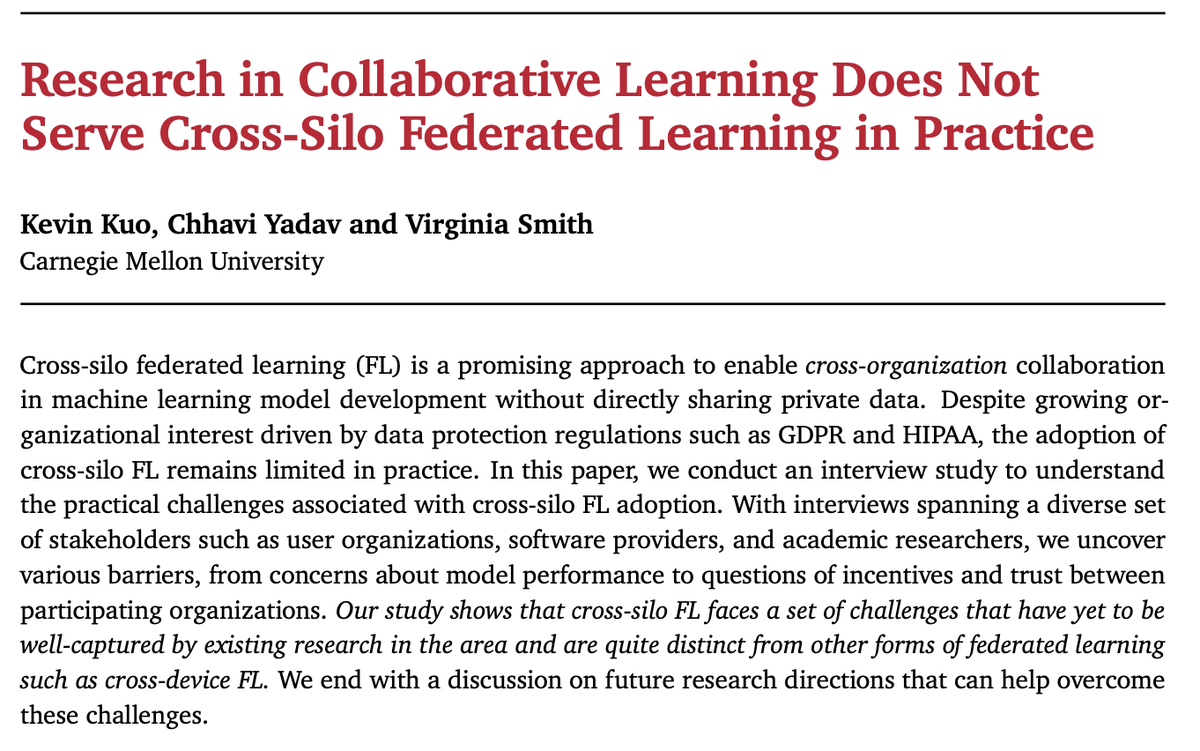

🚀 Federated Learning (FL) promises collaboration without data sharing. While Cross-Device FL is a success and deployed widely in industry, we don’t see Cross-Silo FL (collaboration between organizations) taking off despite huge demand and interest. Why could this be the case? 🤔…

Heading to #COLM2025 in beautiful Montreal 🍁 Excited to discuss distributed learning and modular approaches for mixing and merging specialized language models. Ping me if you are there!

It is so refreshing to see such an example of quality over quantity research in academia. Congrats @deepcohen!

This is the third, last, and best paper from my PhD. By some metrics, an ML PhD student who writes just three conference papers is "unproductive." But I wouldn't have had it any other way 😉 !

If you want to learn about KFAC this is the best place to start!

KFAC is everywhere, from optimization to influence functions. While the intuition is simple, implementation is tricky. We (@BalintMucsanyi, @2bys2, @runame_) wrote a ground-up intro with code to help you get it right. 📖 arxiv.org/abs/2507.05127 💻 github.com/f-dangel/kfac-…

💯 This is why we need modular and specialized models instead of generalist ones

GPT-5 is the most significant product release in AI history, but not for the reason you might think. What it signals is that we're moving from the "bigger model, better results" era to something much more nuanced. This is a genuine inflection point. The fact that people call a…

If your PhD advisor has a statement like this, you know you have made the right choice. Good job and good luck with your new lab @maksym_andr! "...We are not necessarily interested in getting X papers accepted at NeurIPS/ICML/ICLR. We are interested in making an impact..."

🚨 Incredibly excited to share that I'm starting my research group focusing on AI safety and alignment at the ELLIS Institute Tübingen and Max Planck Institute for Intelligent Systems in September 2025! 🚨 Hiring. I'm looking for multiple PhD students: both those able to start…

Despite the impressive output of @gabriberton (super-deserved results), it seems as good a time as any to remind ourselves that PhDs are not about the number of papers, and that people should prioritize learning how to conduct research rather than maximizing meaningless metrics.

A few numbers from my PhD:
- 8 first-author top-conference (CVPR/ICCV/ECCV) papers
- 100% acceptance rate per paper
- 80% acceptance rate per submission
- 1 invited long talk at a CVPR tutorial
- 5 top-conf demos (acceptance rate 100% vs ~30% average)
- ~2k GitHub stars

Conference networking be like

Not entirely true - better understanding of optimization issues in neural networks, residual connections, normalization layers… and in hindsight, ImageNet was clearly showing the way that data is all you need.

very surprising that fifteen years of hardcore computer vision research contributed ~nothing toward AGI except better optimizers we still don't have models that get smarter when we give them eyes

Nice parallel!

ML labs seem to have quite a few parallels with F1 teams. Off the top of my head:

Dan’s talk was a masterclass. Go watch the recording. Super clear, packed with results, and genuinely one of the most well-delivered talks I’ve seen in a while.

Happening in 30 minutes in West Ballroom A - looking forward to sharing our work on Distillation Scaling Laws!

6/ @JoshSouthern13 and I will be at #ICML2025, poster session on Tuesday. Stop by and chat if you're around! ... I would also be happy to meet up and chat about graphs, (graphs and) LLMs, and how to detect their hallucinations 😳 Feel free to reach out!

I'll discuss distributed learning on Saturday, July 12. First, I'll cover current methods needing high bandwidth, then next-generation methods for decentralized learning

Distributed Training in Machine Learning 🌍 Join us on July 12th as @Ar_Douillard explores key methods like FSDP, Pipeline & Expert Parallelism, plus emerging approaches like DiLoCo and SWARM, pushing the limits of global, distributed training. Learn more: tinyurl.com/9ts5bj7y

Even crazier is that the rmsprop algorithm was introduced by @geoffreyhinton in his neural networks course, and we cite his slides - so it's fine!

Fantastic work from @allen_ai - asynchronous training of MoEs on private datasets and a domain-aware router. Akin to cross-silo FL, but no synchronization and no fear of heterogeneity anymore on LLMs. Nice, clean, and of course modular!

The bottleneck in AI isn't just compute - it's access to diverse, high-quality data, much of which is locked away due to privacy, legal, or competitive concerns. What if there was a way to train better models collaboratively, without actually sharing your data? Introducing…
