Michael Dillon

@micdillon

Applied Scientist @ Amazon / Math Nerd


Releasing the Sparse Transformer, a network which sets records at predicting what comes next in a sequence — whether text, images, or sound. Improvements to neural 'attention' let it extract patterns from sequences 30x longer than possible previously: openai.com/blog/sparse-tr…
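The tweet doesn't spell out the attention pattern. Below is a minimal NumPy sketch of one plausible "strided" causal mask, assuming the scheme described in the Sparse Transformer paper: one head attends to the most recent `stride` positions and another attends to every `stride`-th earlier position. The helper `strided_sparse_mask` and its exact window convention are illustrative assumptions, not OpenAI's released code.

```python
import numpy as np


def strided_sparse_mask(n: int, stride: int) -> np.ndarray:
    """Boolean (n, n) mask; mask[i, j] is True if query i may attend to key j.

    Union of two causal patterns (a simplified reading of "strided" attention):
      * local:   the `stride` most recent positions, j in (i - stride, i]
      * strided: every stride-th earlier position, (i - j) % stride == 0
    """
    i = np.arange(n)[:, None]  # query positions, shape (n, 1)
    j = np.arange(n)[None, :]  # key positions, shape (1, n)
    causal = j <= i
    local = (i - j) < stride
    strided = (i - j) % stride == 0
    return causal & (local | strided)


n = 64
stride = int(np.sqrt(n))  # stride ~ sqrt(n) keeps each row at O(sqrt(n)) keys
mask = strided_sparse_mask(n, stride)
print(mask.sum(axis=1).max(), "keys per query vs", n, "for dense causal attention")
```

With a stride near √n, each query sees roughly 2√n keys instead of up to n, which is where the O(n√n) attention cost comes from.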

1. Compute matters, especially for RL agents, but neither convnets nor LSTMs were invented with a focus on scale.

Rich Sutton has a new blog post entitled “The Bitter Lesson” (incompleteideas.net/IncIdeas/Bitte…) that I strongly disagree with. In it, he argues that the history of AI teaches us that leveraging computation always eventually wins out over leveraging human knowledge.

One could argue that these loops are genuine topological features of the underlying ImageNet data, since neural nets are continuous maps and t-SNE/UMAP try to preserve neighborhood structure. (Counterargument: be cautious about drawing conclusions from t-SNE/UMAP layouts!)

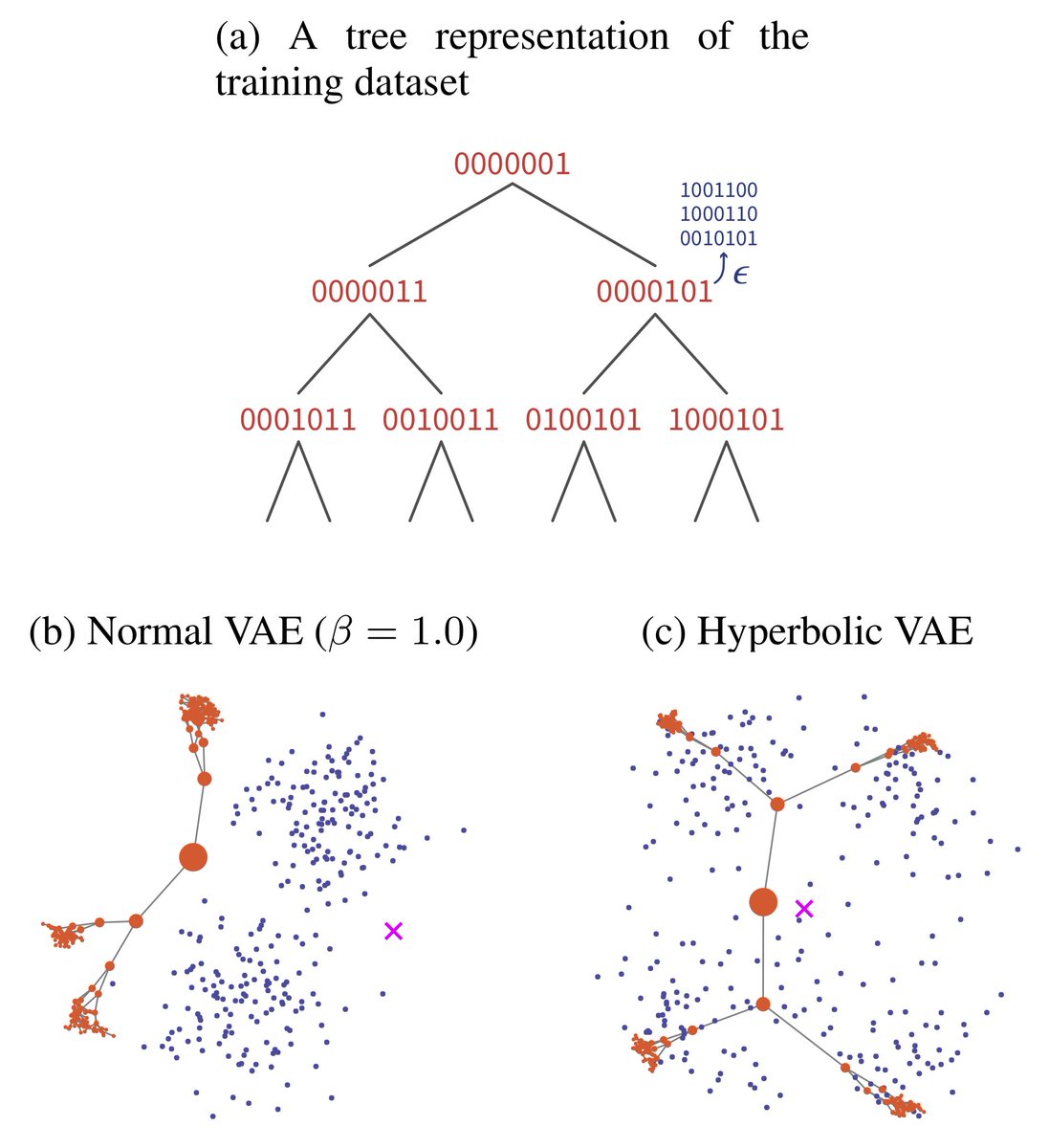

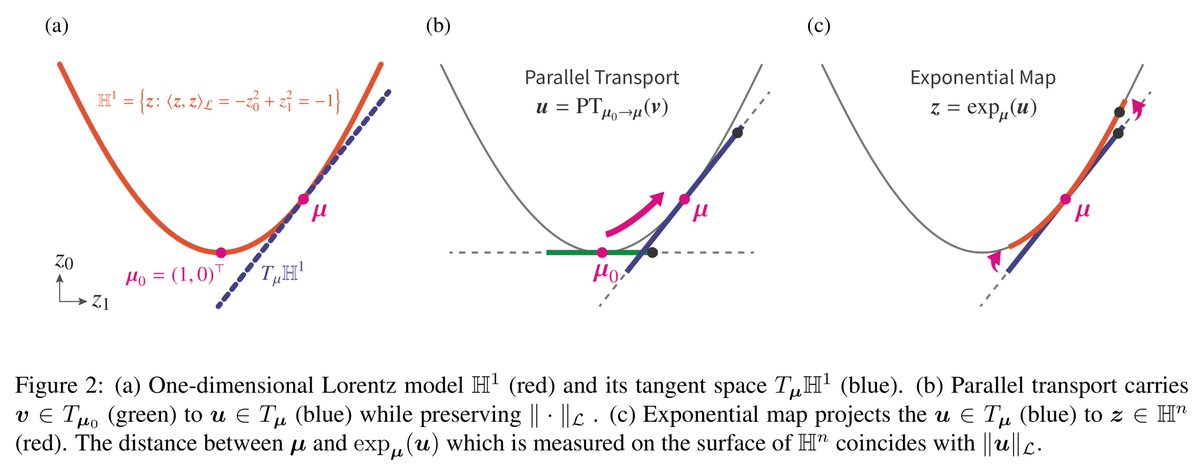

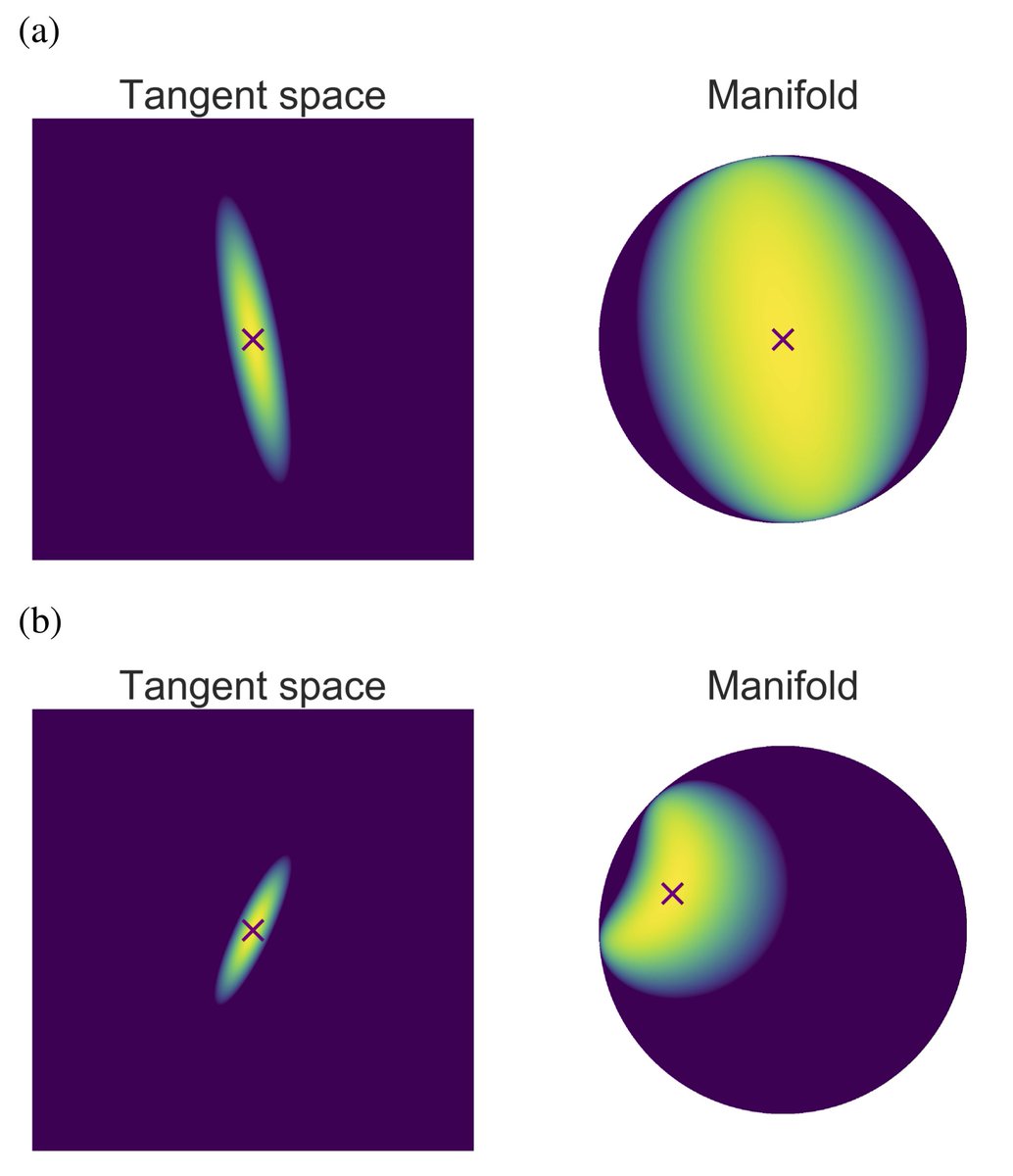

Our new paper is out! We proposed a Gaussian-like distribution on hyperbolic space whose density can be evaluated and differentiated analytically. Our Hyperbolic VAEs capture hierarchical structure well. w/ @guguchi_yama, @mooopan, @Masomatics. arxiv.org/abs/1902.02992
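A minimal NumPy sketch of how such a distribution can be sampled and scored, assuming the Lorentz (hyperboloid) model and the construction we understand the linked paper to use: draw a Euclidean Gaussian in the tangent space at the origin, parallel-transport it to the mean μ, then apply the exponential map. Because both maps are explicit, the change of variables gives the log-density in closed form. Function names and the example parameters are illustrative; this is not the authors' code.

```python
import numpy as np


def lorentz_inner(x: np.ndarray, y: np.ndarray) -> float:
    """Lorentzian inner product <x, y>_L = -x0*y0 + sum_i xi*yi."""
    return float(-x[0] * y[0] + np.dot(x[1:], y[1:]))


def sample_wrapped_normal(mu: np.ndarray, cov: np.ndarray, rng: np.random.Generator):
    """Draw one sample z on the hyperboloid H^n and return (z, log_prob).

    mu:  point on the hyperboloid, shape (n + 1,), with <mu, mu>_L = -1 and mu[0] > 0
    cov: covariance of the Euclidean Gaussian in the tangent space at the origin, (n, n)
    """
    n = cov.shape[0]
    mu0 = np.zeros(n + 1)
    mu0[0] = 1.0  # origin of the hyperboloid

    # 1. Euclidean Gaussian sample, lifted to the tangent space at mu0 as (0, v).
    v = rng.multivariate_normal(np.zeros(n), cov)
    v_tilde = np.concatenate([[0.0], v])

    # 2. Parallel transport from mu0 to mu (preserves the Lorentzian norm).
    alpha = -lorentz_inner(mu0, mu)
    u = v_tilde + lorentz_inner(mu, v_tilde) / (alpha + 1.0) * (mu0 + mu)

    # 3. Exponential map at mu pushes the tangent vector onto the hyperboloid.
    r = np.sqrt(max(lorentz_inner(u, u), 0.0))
    sinh_r_over_r = np.sinh(r) / r if r > 1e-12 else 1.0
    z = np.cosh(r) * mu + sinh_r_over_r * u

    # 4. Change of variables: log N(v; 0, cov) minus the log-det of the exp map.
    _, logdet = np.linalg.slogdet(cov)
    log_normal = -0.5 * (n * np.log(2 * np.pi) + logdet + v @ np.linalg.solve(cov, v))
    log_prob = log_normal - (n - 1) * np.log(sinh_r_over_r)
    return z, log_prob


rng = np.random.default_rng(0)
m = np.array([0.5, -1.0])
mu = np.concatenate([[np.sqrt(1.0 + m @ m)], m])  # lift m onto H^2
z, log_prob = sample_wrapped_normal(mu, 0.3 * np.eye(2), rng)
print(z, lorentz_inner(z, z), log_prob)  # <z, z>_L should be close to -1
```

Sampling is reparameterizable (v is the only source of randomness), so the same steps can be written in an autodiff framework to train a VAE with a hyperbolic latent space.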

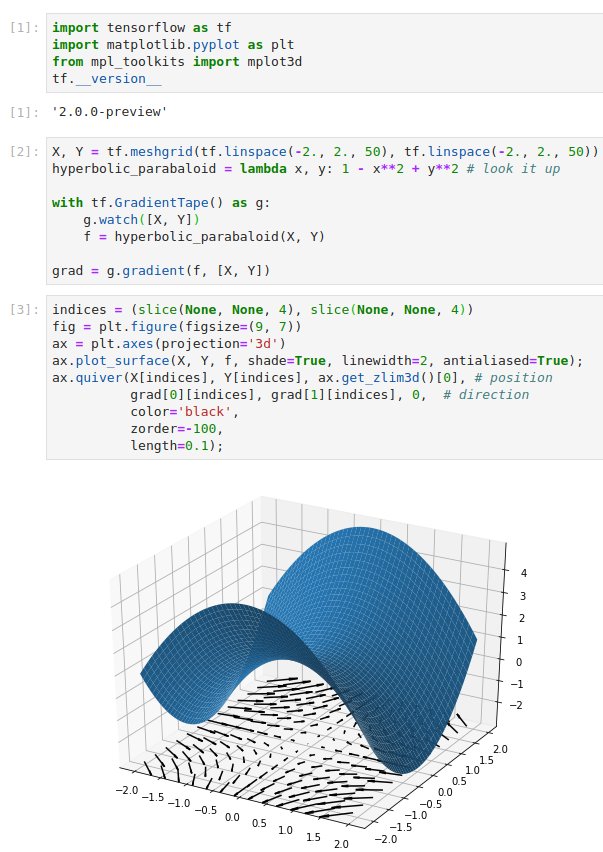

Working on a little differential geometry notebook with @TensorFlow 2.0 and @matplotlib's pleasant 3d interface.

Very nice disambiguation of statistical notation, thanks to @avehtari

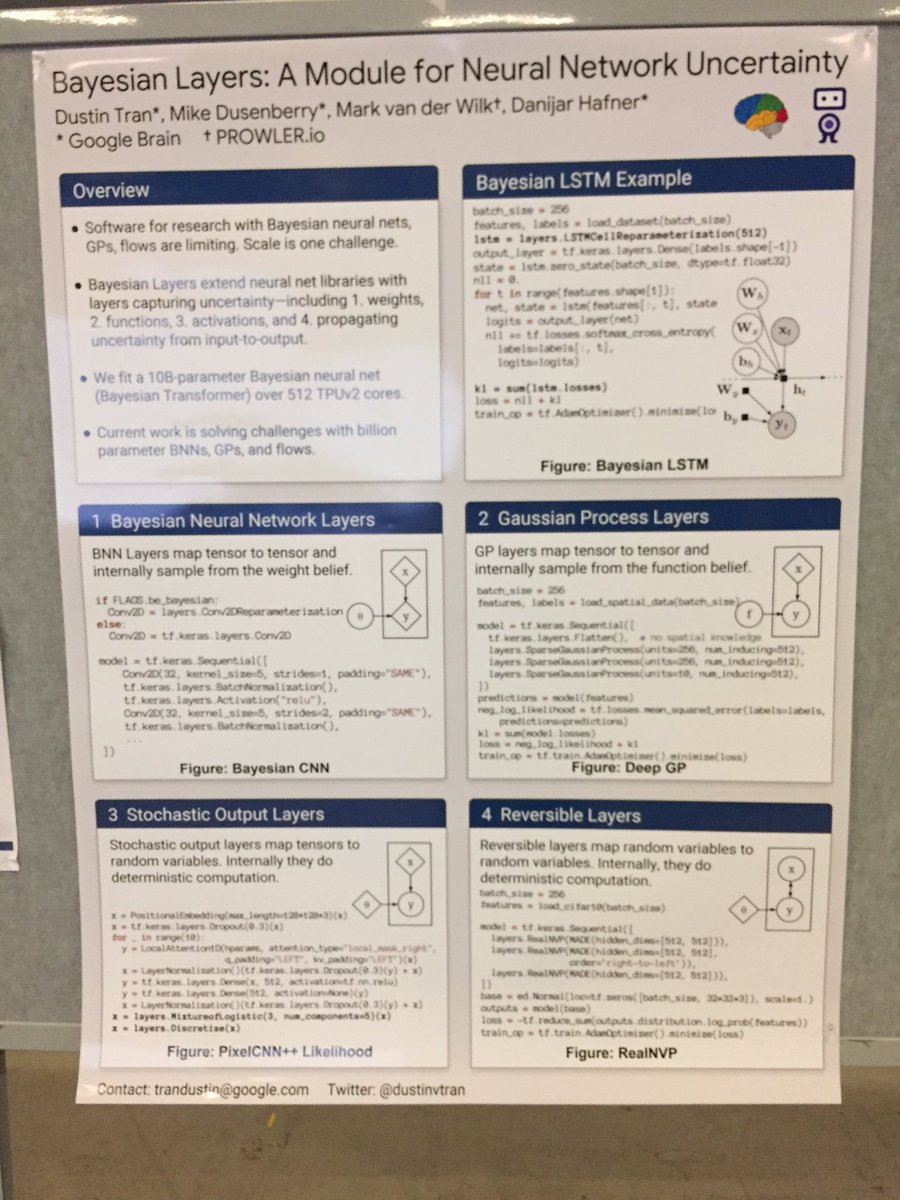

Interested in quickly experimenting with BNNs, GPs, and flows? Check out Bayesian Layers, a simple layer API for designing and scaling up architectures. #NeurIPS2018 Bayesian Deep Learning, happening now.

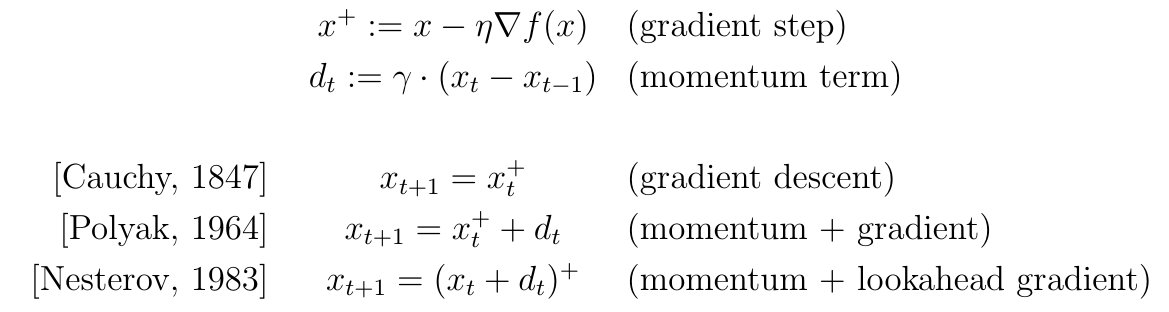

A fun way to describe Nesterov's momentum:
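As a concrete reference point, here is a short sketch of Nesterov's momentum in the common "look-ahead" form: evaluate the gradient at the point the momentum step is about to reach, then correct the velocity. The toy quadratic, step size, and momentum value are assumptions chosen purely for illustration.

```python
import numpy as np


def nesterov_minimize(grad, x0, lr=0.01, momentum=0.9, steps=500):
    """Nesterov momentum in the "look-ahead" form:
        v <- momentum * v - lr * grad(x + momentum * v)
        x <- x + v
    """
    x = np.asarray(x0, dtype=float).copy()
    v = np.zeros_like(x)
    for _ in range(steps):
        lookahead = x + momentum * v  # peek where the momentum is about to carry us
        v = momentum * v - lr * grad(lookahead)  # correct using the gradient there
        x = x + v
    return x


# Ill-conditioned quadratic f(x) = 0.5 * sum(h * x**2), minimized at x = 0.
h = np.array([1.0, 50.0])
x_final = nesterov_minimize(lambda x: h * x, x0=[1.0, 1.0])
print(x_final)
```

The only difference from classical (heavy-ball) momentum is where the gradient is evaluated: at `x + momentum * v` rather than at `x`.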

We just open-sourced a suite of ODE solvers in PyTorch: github.com/rtqichen/torch… Everything happens on the GPU and is differentiable. Now you can use ODEs in your deep learning models! Credit to @rtqichen.

So far we used ODEs for image classification, time series models, and density estimation: arxiv.org/abs/1806.07366. We implemented backprop using the adjoint method, which has constant memory cost. This let us scale ODE-based normalizing flows to SOTA: arxiv.org/abs/1810.01367
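A toy, pure-Python sketch of the adjoint idea on a scalar linear ODE dz/dt = θz with loss L = z(T), where the exact gradient dL/dθ = z0·T·e^{θT} is known. It follows the general recipe as we understand it from the linked paper (integrate the state, the adjoint a(t) = dL/dz(t), and the parameter gradient backwards in time together), but the fixed-step RK4 solver and all function names are illustrative assumptions, not the torchdiffeq API.

```python
import math


def rk4_step(f, t, y, dt):
    """One classical Runge-Kutta step for dy/dt = f(t, y); y is a list of floats."""
    k1 = f(t, y)
    k2 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + dt, [yi + dt * ki for yi, ki in zip(y, k3)])
    return [yi + dt / 6 * (a + 2 * b + 2 * c + d) for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def rk4_solve(f, y0, t0, t1, steps=1000):
    """Fixed-step RK4 from t0 to t1 (t1 < t0 integrates backwards in time)."""
    dt = (t1 - t0) / steps
    y, t = list(y0), t0
    for _ in range(steps):
        y = rk4_step(f, t, y, dt)
        t += dt
    return y


def adjoint_grad(theta, z0, T):
    """dL/dtheta for dz/dt = theta * z and loss L = z(T), via the adjoint method.

    Only z(T) is kept from the forward pass; z(t) is recomputed backwards alongside
    the adjoint a(t) = dL/dz(t), so memory does not grow with the number of steps.
    """
    zT = rk4_solve(lambda t, y: [theta * y[0]], [z0], 0.0, T)[0]

    def augmented(t, s):
        z, a, _ = s
        return [theta * z,   # dz/dt = f(z, theta)
                -a * theta,  # da/dt = -a * df/dz
                -a * z]      # dg/dt = -a * df/dtheta

    # Start at t = T with a(T) = dL/dz(T) = 1 and g(T) = 0, then integrate back to t = 0.
    _, _, grad = rk4_solve(augmented, [zT, 1.0, 0.0], T, 0.0)
    return grad


theta, z0, T = 0.5, 2.0, 1.0
print(adjoint_grad(theta, z0, T), z0 * T * math.exp(theta * T))  # numeric vs analytic
```

The backward pass stores a fixed-size augmented state rather than every intermediate solver step, which is the source of the constant memory cost mentioned above.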

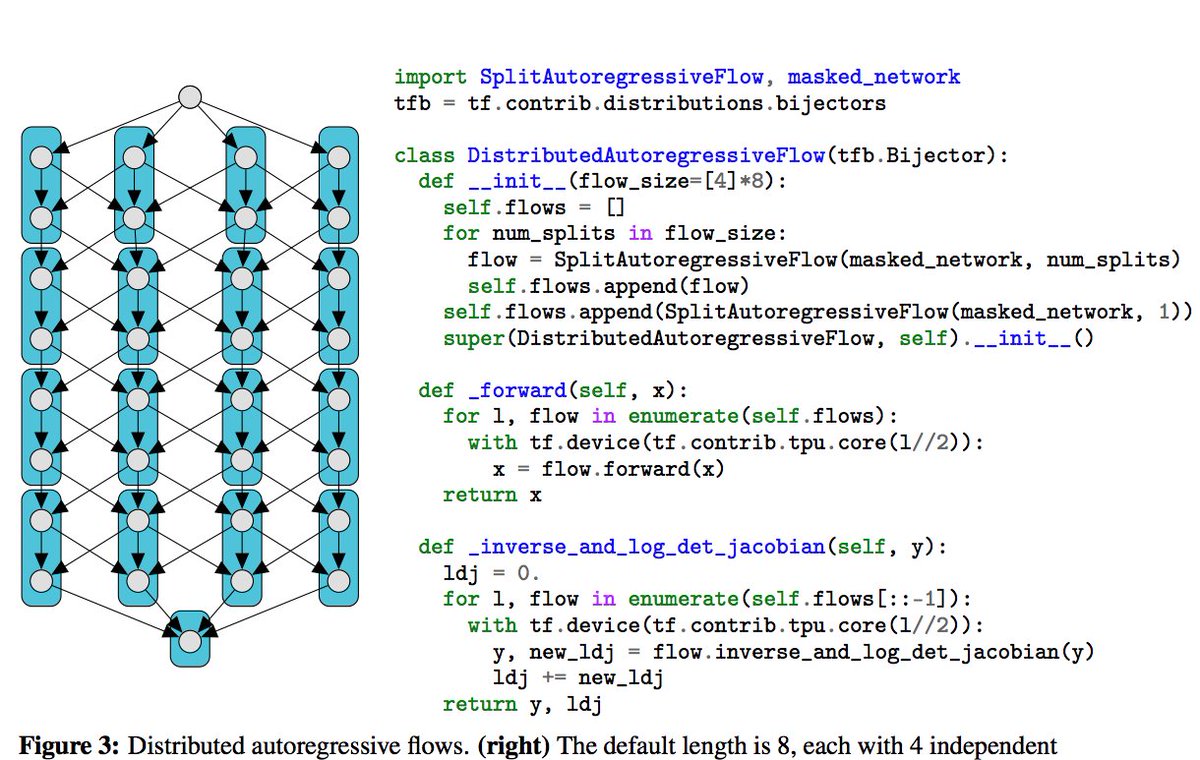

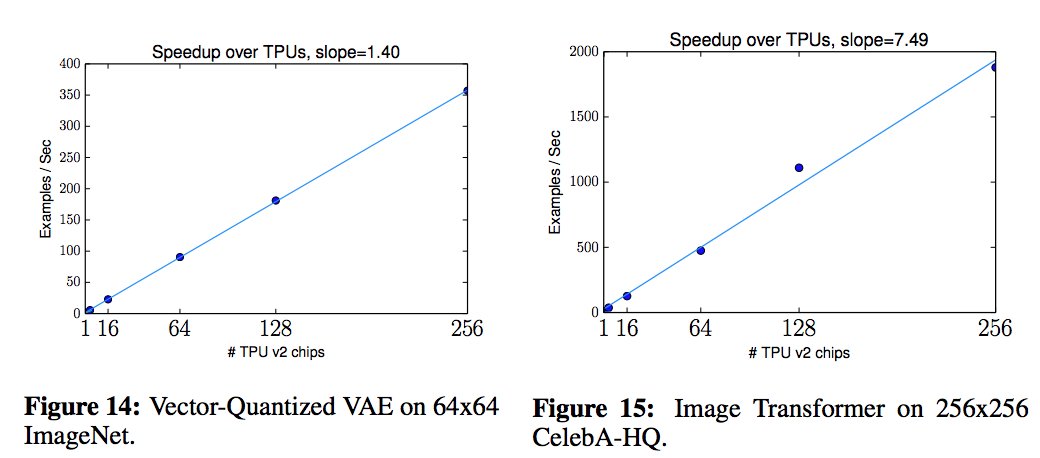

"Simple, Distributed, and Accelerated Probabilistic Programming". The #NIPS2018 paper for Edward2. Scaling probabilistic programs to 512 TPUv2 cores and 100+ million parameter models. arxiv.org/abs/1811.02091 github.com/google-researc…

#SundayClassicPaper📜: A Decision-based View of Causality. Lots of work going on in causality, so useful to reflect on this view defining causation in terms of unresponsiveness, and on Howard-form influence diagrams (aistats95). 🍂🎃 citeseerx.ist.psu.edu/viewdoc/downlo…

The videos from the 3 lectures I gave at #MLSS2018 are online🌱. If you watch any part of the 4.5 hours, leave me some feedback: what's missing, what should be left out or reordered, etc. Video 1: youtu.be/BgZ_UFsjCD4 Video 2: youtu.be/YdwbVgEsg2M Video 3: youtu.be/B_F8PtU2XMU

YouTube: MLSS 2018 Madrid - Shakir Mohamed 3

The attendees at #mlss2018 Madrid were among the most engaged audiences I've ever had: so many questions and thoughts😻. I gave 3 lectures on 'Planting the Seeds of Probabilistic Thinking'. 🌱🎲 See the slides here: shakirm.com/slides/MLSS201…

Thought he’d just focus on Kavanaugh. Instead he gave the best response on rape culture I’ve ever heard from a man! I aspire to be this reflective. Damn! #CancelKavanaugh #StopKanavaugh

Yordan Zaykov at #PROBPROG: "Infer.NET is now open-source." That's incredible news for the probabilistic programming community! github.com/dotnet/infer

Taming VAEs: A theoretical analysis of their properties and behaviour in the high-capacity regime. We also argue for a different way of training these models for robust control of key properties. It was fun thinking about this with @fabiointheuk arxiv.org/abs/1810.00597

New book on probabilistic programming on arXiv. I’m sure the authors @hyang144 @jwvdm @frankdonaldwood will welcome feedback. arxiv.org/abs/1809.10756
