Michael O’Rourke

@michaeld7

Founder of Dimension 7 (d7)

You might like

New Anthropic research: Signs of introspection in LLMs. Can language models recognize their own internal thoughts? Or do they just make up plausible answers when asked about them? We found evidence for genuine—though limited—introspective capabilities in Claude.

Schmidhuber's team casually proving recursive self-improvement works at scale & it generalizes... Coding agents rewrote themselves hundreds of times until they matched human engineers. You CAN'T do it by benchmark maxing, BUT you CAN track agent 'family trees' to find which lineages…

Our Huxley-Gödel Machine learns to rewrite its own code, estimating its own long-term self-improvement potential. It generalizes on new tasks (SWE-Bench Lite), matching the best officially checked human-engineered agents. Arxiv 2510.21614 With @Wenyi_AI_Wang, @PiotrPiekosAI,…

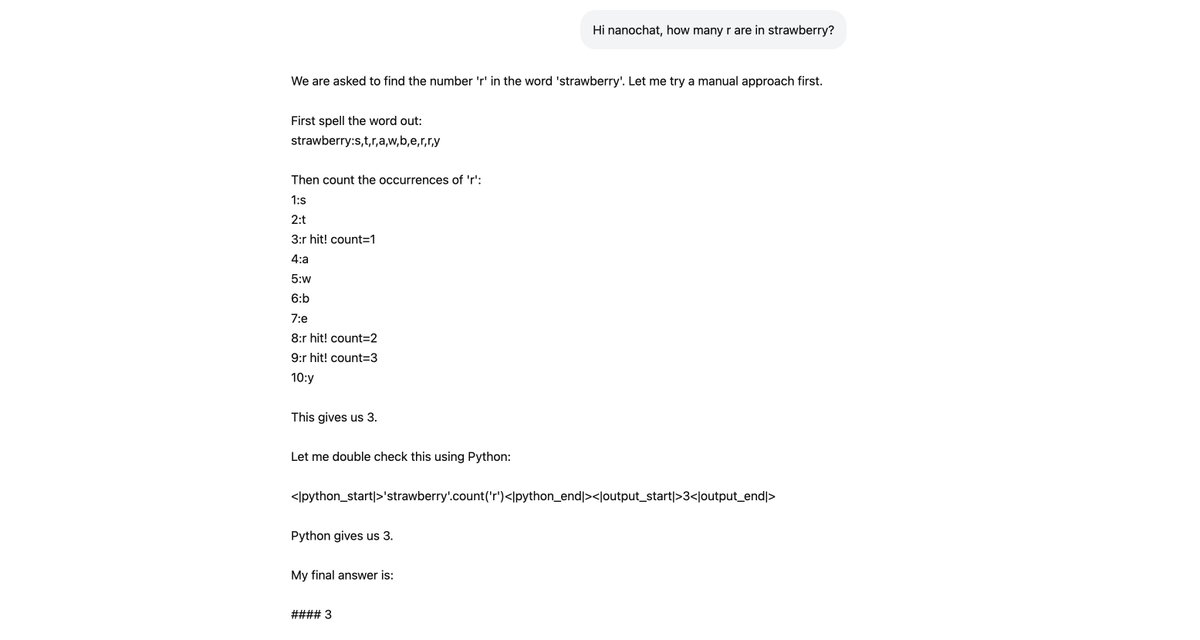

Last night I taught nanochat d32 how to count 'r' in strawberry (or similar variations). I thought this would be a good/fun example of how to add capabilities to nanochat and I wrote up a full guide here: github.com/karpathy/nanoc… This is done via a new synthetic task…
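
As a rough illustration of what a synthetic letter-counting task can look like, here is a minimal Python sketch. It is not the code from the nanochat guide: the word list, the prompt wording, and the `make_counting_example` helper are hypothetical. What it shows is the general pattern of generating question/answer pairs whose labels are computed programmatically, with the answer spelling the word out before giving the count.

```python
import random

# Hypothetical word list; a real task would sample from a much larger vocabulary.
WORDS = ["strawberry", "raspberry", "mississippi", "banana", "bookkeeper"]


def make_counting_example(rng: random.Random) -> dict:
    """Build one synthetic 'how many <letter> in <word>' example (hypothetical format)."""
    word = rng.choice(WORDS)
    letter = rng.choice(sorted(set(word)))  # pick a letter that occurs in the word
    count = word.count(letter)              # ground-truth label computed in code
    spelled = ",".join(word)                # spell the word out letter by letter
    return {
        "word": word,
        "letter": letter,
        "user": f"How many '{letter}' are in the word '{word}'?",
        "assistant": f"Spelled out: {spelled}. Occurrences of '{letter}': {count}.",
        "answer": count,
    }


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        ex = make_counting_example(rng)
        print(ex["user"], "->", ex["assistant"])
```

Because the label comes from `str.count` rather than from a model, every generated example is correct by construction, which is what makes this kind of task cheap to scale.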

A new 30-minute presentation from @aelluswamy, Tesla’s VP of AI, has been released, where he talks about FSD, AI and the team’s latest progress. Highlight from the presentation: • Tesla's vehicle fleet can provide 500 years of driving data every single day. Curse of…

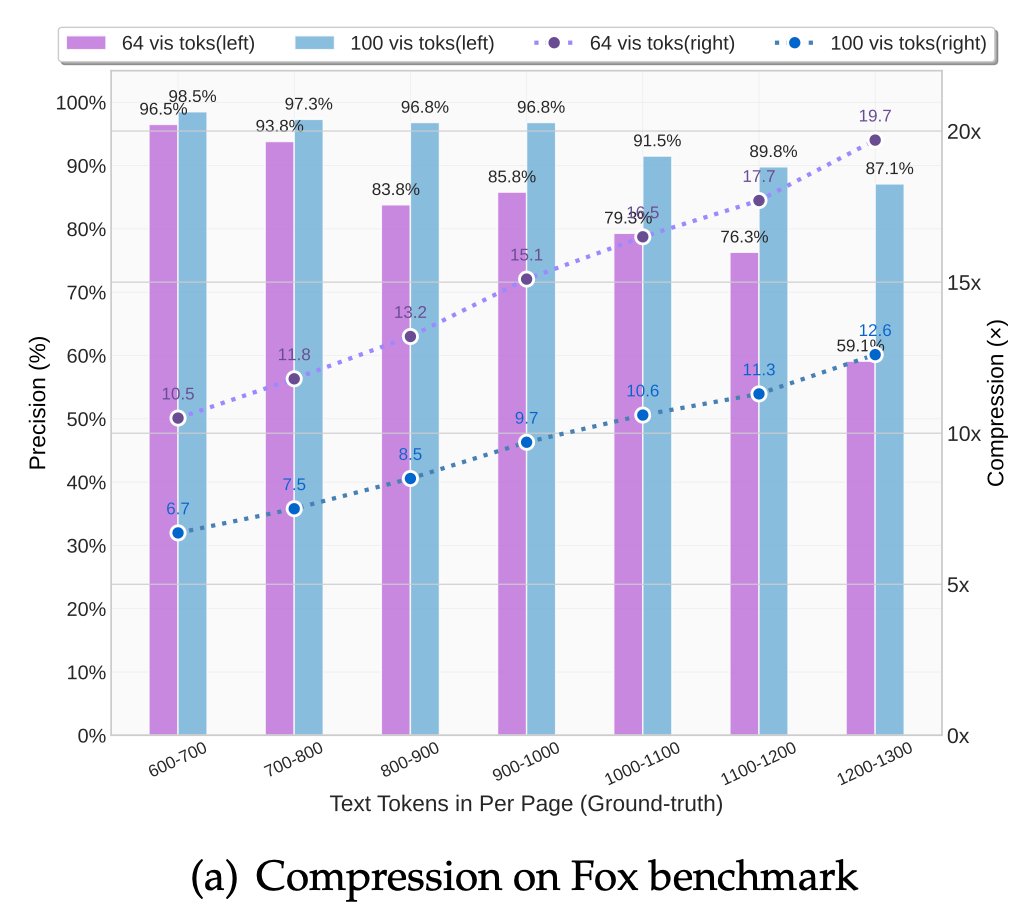

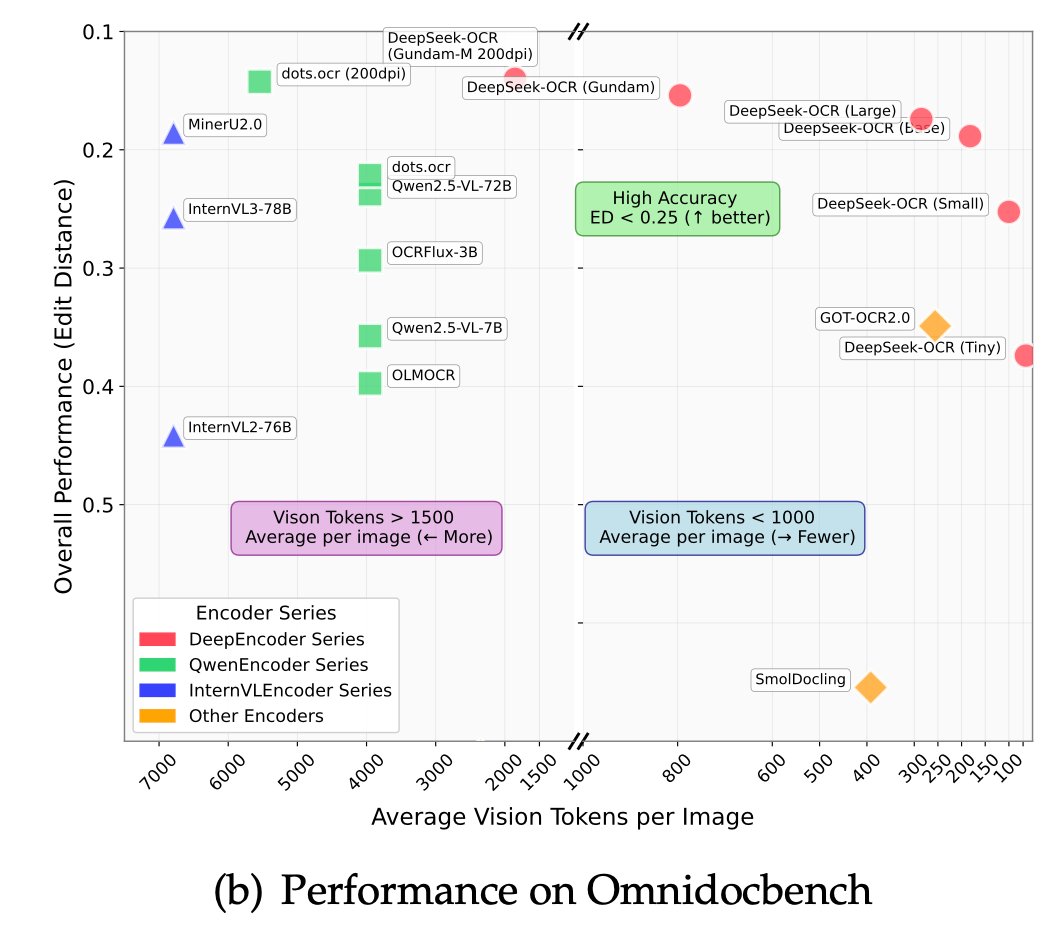

I quite like the new DeepSeek-OCR paper. It's a good OCR model (maybe a bit worse than dots), and yes data collection etc., but anyway it doesn't matter. The more interesting part for me (esp as a computer vision at heart who is temporarily masquerading as a natural language…

🚀 DeepSeek-OCR — the new frontier of OCR from @deepseek_ai , exploring optical context compression for LLMs, is running blazingly fast on vLLM ⚡ (~2500 tokens/s on A100-40G) — powered by vllm==0.8.5 for day-0 model support. 🧠 Compresses visual contexts up to 20× while keeping…

To learn more about temporal difference learning, you could read the original paper (incompleteideas.net/papers/sutton-…) or watch this video (videolectures.net/videos/deeplea…).
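
The core of temporal difference learning is the TD(0) update, which nudges a state's value estimate toward a bootstrapped target: V(s) ← V(s) + α[r + γV(s′) - V(s)]. The sketch below applies it to a five-state random walk, an illustrative environment chosen here rather than something specified in the post, where the true state values are 1/6 through 5/6. The step size, episode count, and the `td0_random_walk` name are assumptions.

```python
import random
from typing import List


def td0_random_walk(num_episodes: int = 5000, alpha: float = 0.01,
                    gamma: float = 1.0, seed: int = 0) -> List[float]:
    """Tabular TD(0) prediction on a 5-state random walk.

    Non-terminal states are 0..4 (A..E), every episode starts in the middle,
    each step moves left or right with equal probability, and exiting on the
    right pays reward 1 (exiting on the left pays 0).
    """
    rng = random.Random(seed)
    n = 5
    V = [0.5] * n  # initial value estimates
    for _ in range(num_episodes):
        s = n // 2
        while True:
            s_next = s + rng.choice((-1, 1))
            if s_next >= n:          # right terminal
                reward, v_next, done = 1.0, 0.0, True
            elif s_next < 0:         # left terminal
                reward, v_next, done = 0.0, 0.0, True
            else:
                reward, v_next, done = 0.0, V[s_next], False
            # TD(0): move V(s) toward the bootstrapped target r + gamma * V(s')
            V[s] += alpha * (reward + gamma * v_next - V[s])
            if done:
                break
            s = s_next
    return V


if __name__ == "__main__":
    estimates = td0_random_walk()
    for i, v in enumerate(estimates):
        print(f"state {'ABCDE'[i]}: estimate {v:.3f}, true {(i + 1) / 6:.3f}")
```

With a constant step size the estimates keep fluctuating around the true values rather than settling exactly; a smaller `alpha` trades slower learning for less noise.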

The Dwarkesh/Andrej interview is worth watching. Like many others in the field, my introduction to deep learning was Andrej’s CS231n. In this era when many are involved in wishful thinking driven by simple pattern matching (e.g., extrapolating scaling laws without nuance), it’s…

This is the JPEG moment for AI. Optical compression doesn't just make context cheaper. It makes AI memory architectures viable. Training data bottlenecks? Solved. - 200k pages/day on ONE GPU - 33M pages/day on 20 nodes - Every multimodal model is data-constrained. Not anymore.…
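
The two throughput figures in the post are roughly consistent with each other if each node holds 8 GPUs. The post does not state the node size, so treat `gpus_per_node=8` below as an assumption; the helper name is likewise hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def per_gpu_pages_per_day(pages_per_day: float, nodes: int, gpus_per_node: int) -> float:
    """Divide an aggregate daily throughput evenly across all GPUs."""
    return pages_per_day / (nodes * gpus_per_node)


single_gpu_claim = 200_000     # pages/day on one GPU (from the post)
cluster_claim = 33_000_000     # pages/day on 20 nodes (from the post)

# ASSUMPTION: 8 GPUs per node; the post does not state the node size.
implied = per_gpu_pages_per_day(cluster_claim, nodes=20, gpus_per_node=8)

if __name__ == "__main__":
    print(f"implied per-GPU rate: {implied:,.0f} pages/day")
    print(f"single-GPU claim: {single_gpu_claim / SECONDS_PER_DAY:.2f} pages/s")
```

Under that assumption the cluster figure works out to about 206k pages/day per GPU, in line with the "200k pages/day on ONE GPU" claim, or a little over 2 pages per second per GPU.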

My pleasure to come on Dwarkesh last week, I thought the questions and conversation were really good. I re-watched the pod just now too. First of all, yes I know, and I'm sorry that I speak so fast :). It's to my detriment because sometimes my speaking thread out-executes my…

The @karpathy interview 0:00:00 – AGI is still a decade away 0:30:33 – LLM cognitive deficits 0:40:53 – RL is terrible 0:50:26 – How do humans learn? 1:07:13 – AGI will blend into 2% GDP growth 1:18:24 – ASI 1:33:38 – Evolution of intelligence & culture 1:43:43 - Why self…

The most interesting part for me is where @karpathy describes why LLMs aren't able to learn like humans. As you would expect, he comes up with a wonderfully evocative phrase to describe RL: “sucking supervision bits through a straw.” A single end reward gets broadcast across…
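
One common reading of the "straw" metaphor: in outcome-reward policy-gradient training, a single scalar reward for a whole sampled response is assigned as the same advantage to every token in it. The sketch below shows that credit assignment in plain Python as a REINFORCE-style surrogate loss with an optional baseline. It is an illustration under that assumption, not code from the interview, and the function names are hypothetical.

```python
from typing import List


def broadcast_outcome_reward(num_tokens: int, final_reward: float,
                             baseline: float = 0.0) -> List[float]:
    """Give every token in the trajectory the same advantage: reward minus baseline."""
    advantage = final_reward - baseline
    return [advantage] * num_tokens


def reinforce_loss(token_logprobs: List[float], final_reward: float,
                   baseline: float = 0.0) -> float:
    """REINFORCE surrogate loss: -mean_t(advantage_t * log pi(token_t)).

    Minimizing it raises the log-probability of every sampled token when the
    advantage is positive and lowers all of them when it is negative.
    """
    advantages = broadcast_outcome_reward(len(token_logprobs), final_reward, baseline)
    total = sum(a * lp for a, lp in zip(advantages, token_logprobs))
    return -total / len(token_logprobs)


if __name__ == "__main__":
    logprobs = [-0.1, -2.3, -0.4, -1.7]  # hypothetical per-token log-probs
    print(broadcast_outcome_reward(len(logprobs), final_reward=1.0, baseline=0.6))
    print(reinforce_loss(logprobs, final_reward=1.0, baseline=0.6))
```

Because every token receives the identical weight, tokens that helped and tokens that hurt are pushed in the same direction; the only supervision signal for the whole trajectory is that one number.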

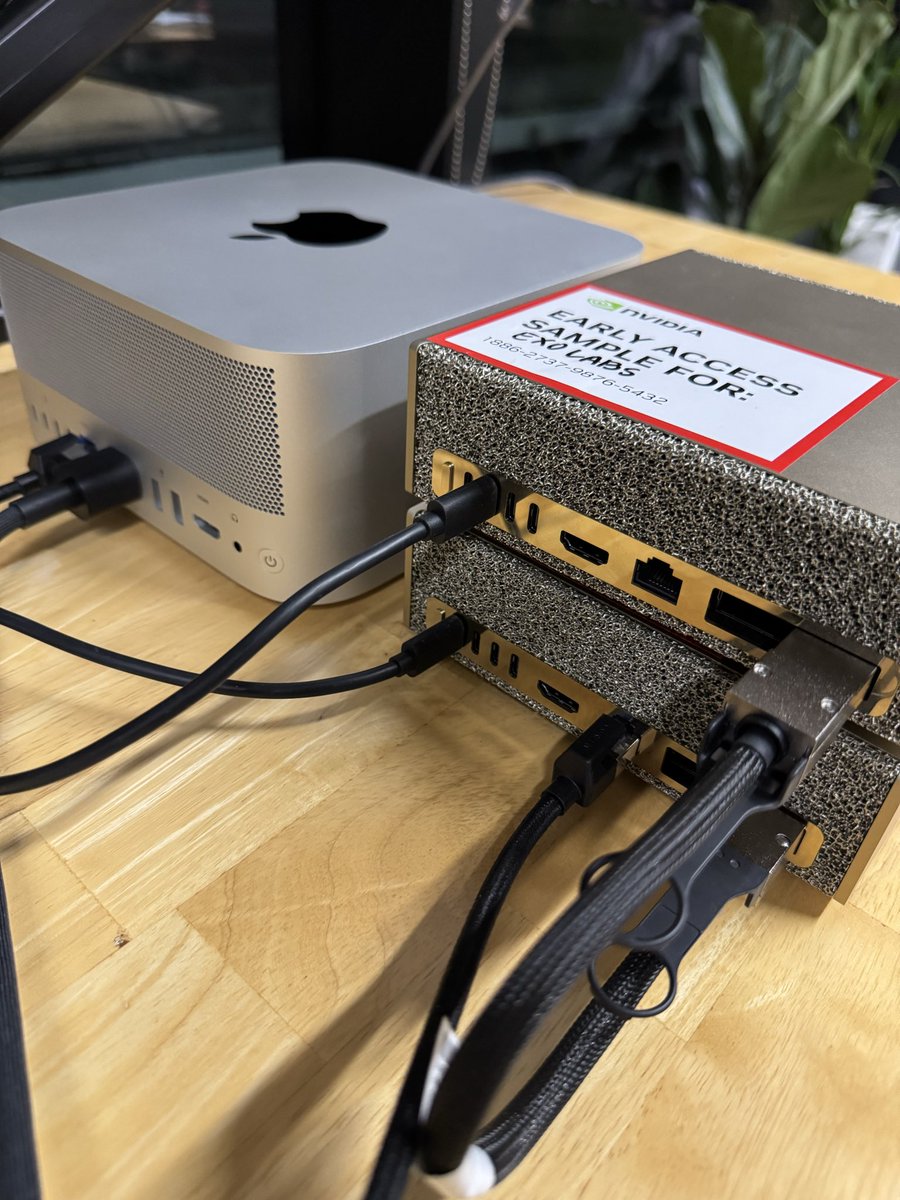

Clustering NVIDIA DGX Spark + M3 Ultra Mac Studio for 4x faster LLM inference. DGX Spark: 128GB @ 273GB/s, 100 TFLOPS (fp16), $3,999 M3 Ultra: 256GB @ 819GB/s, 26 TFLOPS (fp16), $5,599 The DGX Spark has 3x less memory bandwidth than the M3 Ultra but 4x more FLOPS. By running…
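
The ratios quoted in the post can be checked directly from the listed specs. The sketch below also computes each machine's compute-to-bandwidth ratio (peak FLOPs per byte of memory bandwidth, the roofline "ridge point"), a figure added here for illustration: a higher value means a workload needs more arithmetic per byte read before it stops being bandwidth-bound. The `Device` dataclass and the `ridge_point` and `compare` helpers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    memory_gb: int
    bandwidth_gb_s: float  # memory bandwidth, GB/s
    fp16_tflops: float     # peak fp16 throughput, TFLOPS
    price_usd: int


# Figures as listed in the post.
DGX_SPARK = Device("DGX Spark", 128, 273.0, 100.0, 3999)
M3_ULTRA = Device("M3 Ultra Mac Studio", 256, 819.0, 26.0, 5599)


def ridge_point(d: Device) -> float:
    """Peak FLOPs per byte of memory bandwidth (roofline ridge point)."""
    return (d.fp16_tflops * 1e12) / (d.bandwidth_gb_s * 1e9)


def compare(compute_dev: Device, bandwidth_dev: Device) -> dict:
    """Spec ratios between a compute-heavy and a bandwidth-heavy device."""
    return {
        "bandwidth_ratio": bandwidth_dev.bandwidth_gb_s / compute_dev.bandwidth_gb_s,
        "flops_ratio": compute_dev.fp16_tflops / bandwidth_dev.fp16_tflops,
        "ridge_compute_dev": ridge_point(compute_dev),
        "ridge_bandwidth_dev": ridge_point(bandwidth_dev),
    }


if __name__ == "__main__":
    for key, value in compare(DGX_SPARK, M3_ULTRA).items():
        print(f"{key}: {value:.2f}")
```

The bandwidth ratio comes out to exactly 3, while the FLOPS ratio is about 3.85, which the post rounds to 4x.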

Very excited to share @theworldlabs’ latest research work, RTFM!! It’s a real-time, persistent, and 3D-consistent generative World Model running on *a single* H100 GPU! Blog and live demo are available below! 🤩

Generative World Models will inevitably be computationally demanding, potentially scaling beyond even the requirements of today’s LLMs. But we believe they are a crucial research direction to explore in the future of rendering and spatial intelligence. worldlabs.ai/blog/rtfm

An exciting milestone for AI in science: Our C2S-Scale 27B foundation model, built with @Yale and based on Gemma, generated a novel hypothesis about cancer cellular behavior, which scientists experimentally validated in living cells. With more preclinical and clinical tests,…

Meta just did the unthinkable. They figured out how to train AI agents without rewards, human demos, or supervision and it actually works better than both. It’s called 'Early Experience', and it quietly kills the two biggest pain points in agent training: → Human…

All top open-weight models are now Chinese

🚨 Gemini 3.0 Pro - ONE SHOTTED. I asked it for an Ubuntu web OS, since everyone asked me for it, and the result is mind-blowing. Prompt: Create a fully functional Linux desktop environment (Ubuntu/GNOME style) as a complete web operating system in a single HTML file with embedded…

🚨 Gemini 3.0 Pro - ecpt checkpoint. Holy shit guys, I want everyone to see this; retweet as much as you can to get this to the mainstream. I don't ask for this normally. All apps work: Apple animation, minimize, tools, browser, and everything. Literally this is the best we can…

Really happy to be announcing the chips we’ve been cooking the past 18 months! OpenAI kicked off the reasoning wave with o1, but months before that we’d already started designing a chip tuned precisely for reasoning inference of OpenAI models. In January 2024, I joined OpenAI as…

We're partnering with Broadcom to deploy 10GW of chips designed by OpenAI. Building our own hardware, in addition to our other partnerships, will help all of us meet the world’s growing demand for AI. openai.com/index/openai-a…

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

🔥 GPT-6 may not just be smarter, it might be alive (in the computational sense). A new research paper called SEAL, Self-Adapting Language Models (arXiv:2506.10943) describes how an AI can continuously learn after deployment, evolving its own internal representations without…

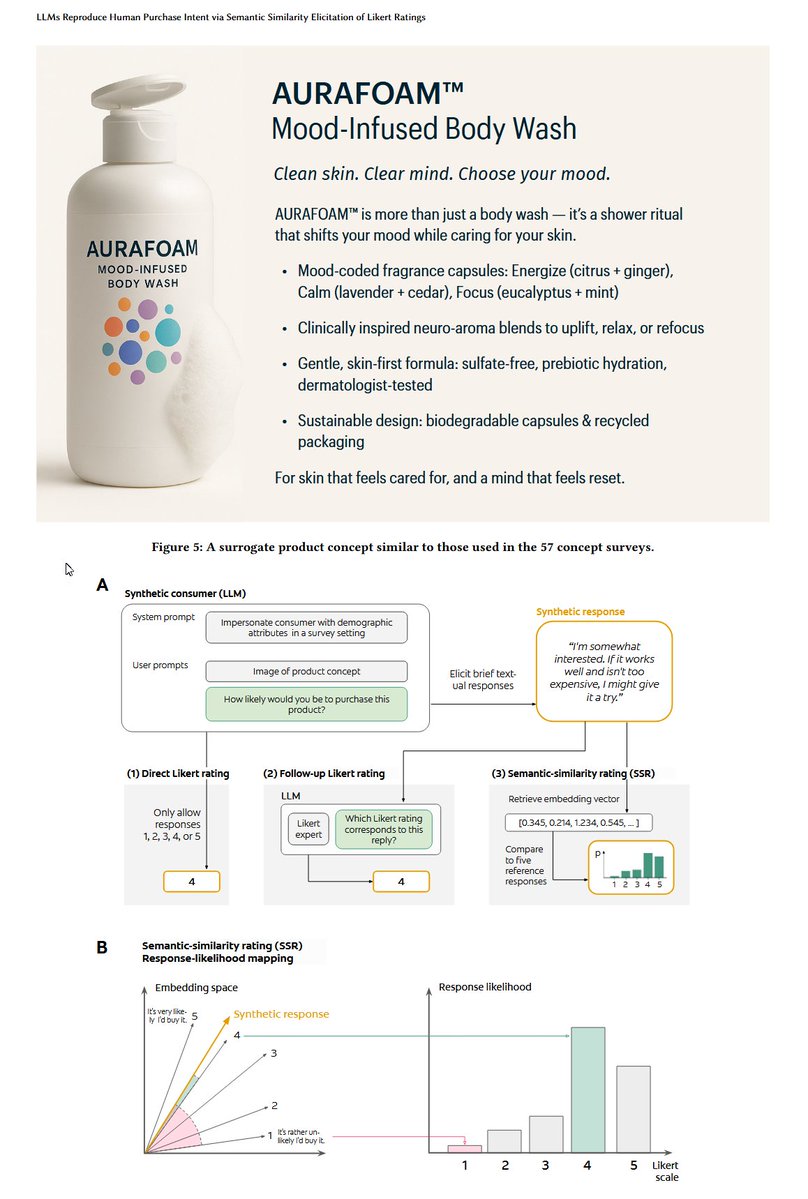

This paper shows that you can predict actual purchase intent (90% accuracy) by asking an LLM to impersonate a customer with a demographic profile, giving it a product & having it give its impressions, which another AI rates. No fine-tuning or training & beats classic ML methods.

