
I’m starting to get into a habit of reading everything (blogs, articles, book chapters,…) with LLMs. Usually pass 1 is manual, then pass 2 “explain/summarize”, pass 3 Q&A. I usually end up with a better/deeper understanding than if I moved on. Growing to among top use cases. On…

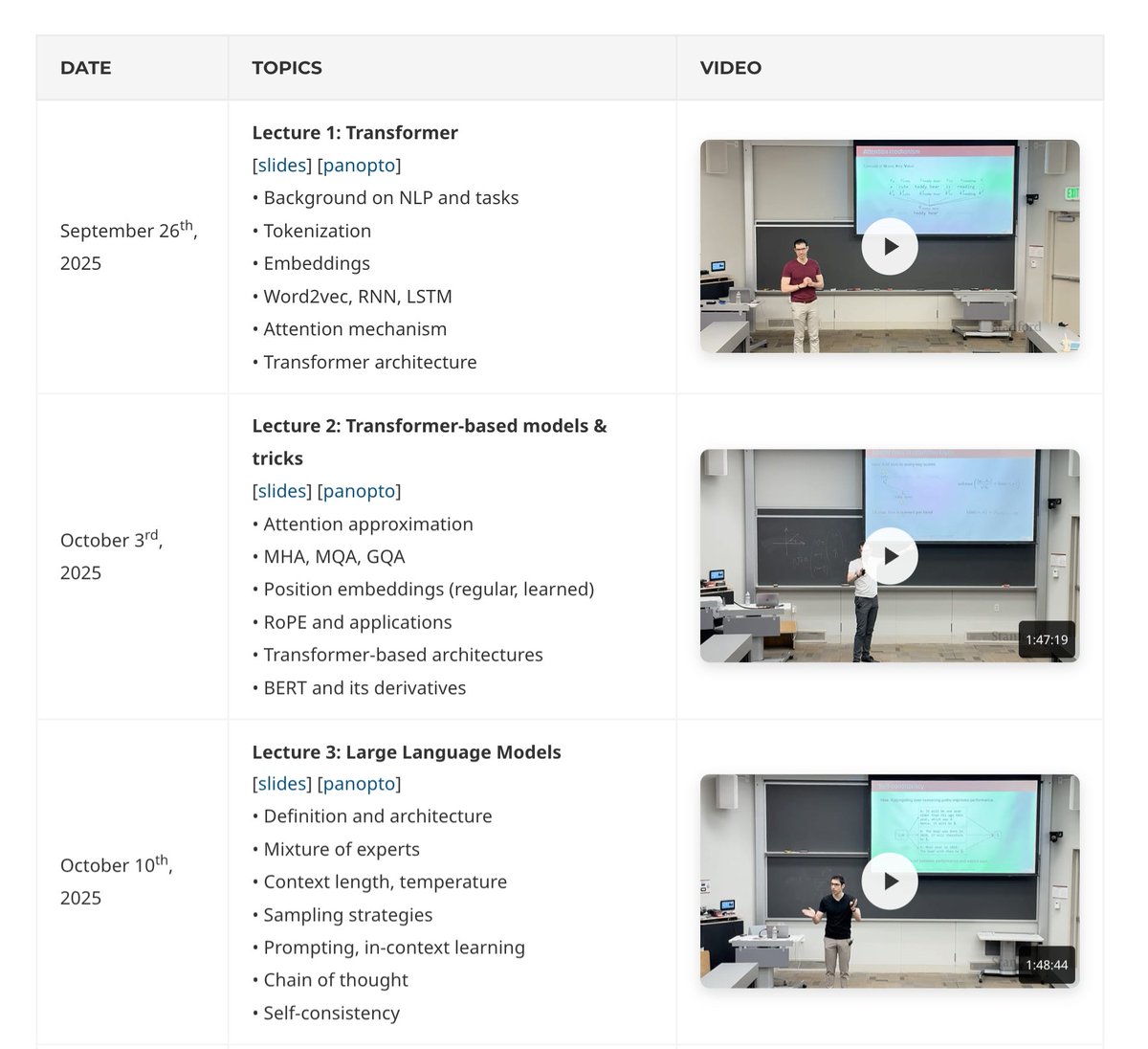

Stanford just released a new course for this Fall: Transformers & Large Language Models by the Amidi brothers. Three videos are already available for free on YouTube. SYLLABUS: > Transformers (tokenization, embeddings, attention, architecture) > LLM foundations (MoEs, types of…

Explains shell: paste a command with options, get a full explanation of what it does explainshell.com We should do that for #python

Step by step, the rest of my semester of numerical computing course notes is turning into Jupyter notebooks with embedded questions and computations. If it's useful to anyone on here, feel free to use: cs.cornell.edu/courses/cs4220…

Bored at home? Need a new friend? Hang out with BART, the newest model available in transformers (thx @sam_shleifer), with the hefty 2.6 release (notes: github.com/huggingface/tr…). Now you can get state-of-the-art summarization with a few lines of code: 👇👇👇

I already told you it is nice to have many things imported in your PYTHONSTARTUP file, but if you use ipython/jupyter, it's even better to "pip install ipython-autoimport". Because then you put this in PYTHONSTARTUP:

One of my goals for the year was to start blogging again. With the new @fastdotai course this gives me a great motivator to do so! So meet the first one: fastai and the new DataBlock API: muellerzr.github.io/fastblog/2020/… @bhutanisanyam1 tried to beat me to it, I had to prove him wrong.

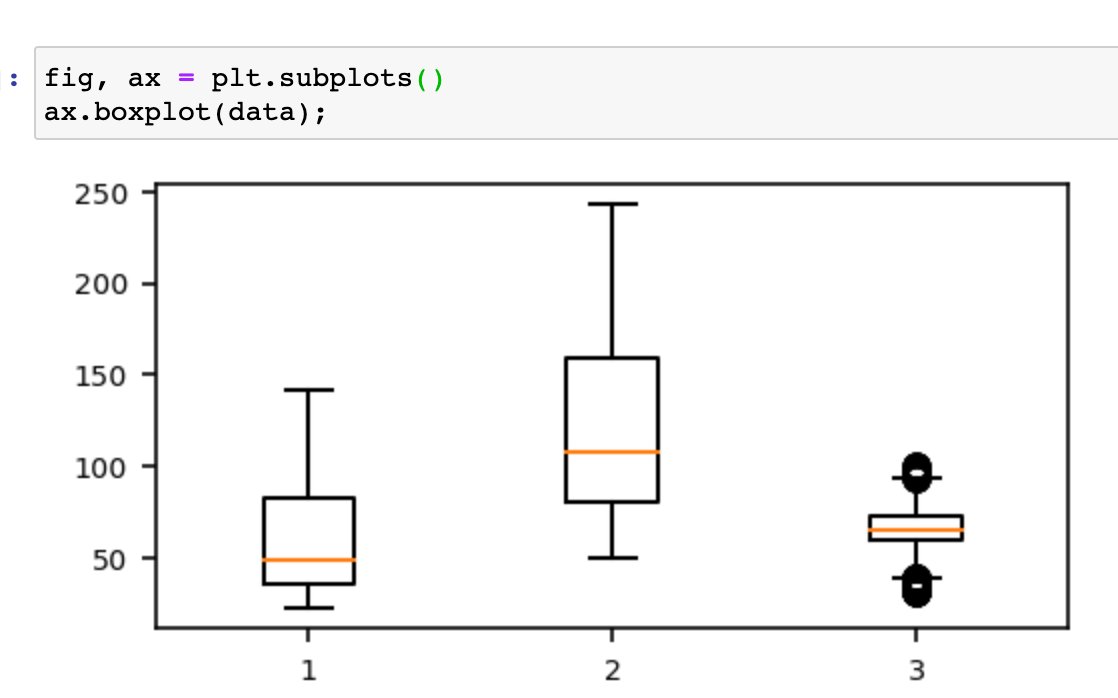

Hate having your notebooks filled with returned items from matplotlib? Add a semicolon to the end to suppress the output.
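A minimal sketch of the tip above, assuming a Jupyter/IPython cell with matplotlib installed. The data values are made up for illustration, and the `Agg` backend is chosen only so the snippet also runs outside a notebook. The semicolon affects only the notebook's echo of the last expression, not the plot itself.

```python
import matplotlib

matplotlib.use("Agg")  # headless backend; an assumption so this runs anywhere
import matplotlib.pyplot as plt

fig, ax = plt.subplots()

# plot() returns a list of Line2D objects. When a call like this is the last
# expression in a notebook cell, the notebook echoes that list as text
# (something like "[<matplotlib.lines.Line2D at 0x...>]").
lines = ax.plot([0, 1, 2], [0, 1, 4])

# Ending the last expression with a semicolon suppresses that echo.
ax.plot([0, 1, 2], [0, 2, 8]);
```

Assigning the return value to a name (as with `lines` above) or to `_` has the same effect, since an assignment is not echoed either.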

Not everyone can afford to train huge neural models. So, we typically *reduce* model size to train/test faster. However, you should actually *increase* model size to speed up training and inference for transformers. Why? [1/6] 👇 bair.berkeley.edu/blog/2020/03/0… arxiv.org/abs/2002.11794


If you wish to improve the quality of your Python code, tooling is low-hanging fruit. Start with black, pylint, mypy and pytest. They will push you to write better code. However, installing these tools, while not difficult, comes with a few gotchas I'm here to help you avoid:
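As a rough illustration of what those four tools look at, here is a small hypothetical module (the function and test names are invented for this sketch, not taken from the thread). The type hints give mypy something to check, the docstrings and naming are what pylint inspects, the layout follows black's style, and the `test_*` functions are the kind pytest collects.

```python
"""Tiny example module for trying out black, pylint, mypy and pytest."""

from typing import Sequence


def mean(values: Sequence[float]) -> float:
    """Return the arithmetic mean of a non-empty sequence."""
    if not values:
        raise ValueError("mean() requires at least one value")
    return sum(values) / len(values)


def test_mean_of_known_values() -> None:
    """pytest collects functions named test_* and reports failing asserts."""
    assert mean([1.0, 2.0, 3.0]) == 2.0


def test_mean_rejects_empty_input() -> None:
    """Written without pytest.raises so the file also runs with the stdlib alone."""
    try:
        mean([])
    except ValueError:
        return
    raise AssertionError("expected ValueError for empty input")
```

In a real project the tests would usually live in a separate file; they are kept together here only to keep the sketch short.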

If you're learning JavaScript, here's a great resource for you. In this article you'll find some of the best JavaScript tutorials all in one place. Happy studying! freecodecamp.org/news/best-java…

Interested in working with sound in Python? 🎶🎙️🥁 github.com/earthspecies/f… now has full colab support - you can run the notebooks at a click of a button 🙂 Also, new addition - how to work with large, multi-gigabyte wav files

2019 Coronavirus dataset (January - February 2020) published by Brenda So #kaggle kaggle.com/brendaso/2019-…

At @RealAAAI, @geoffreyhinton recalls his advisor’s advice: “reading rots the mind”. Only once you have figured how you would solve a problem, then read the literature.

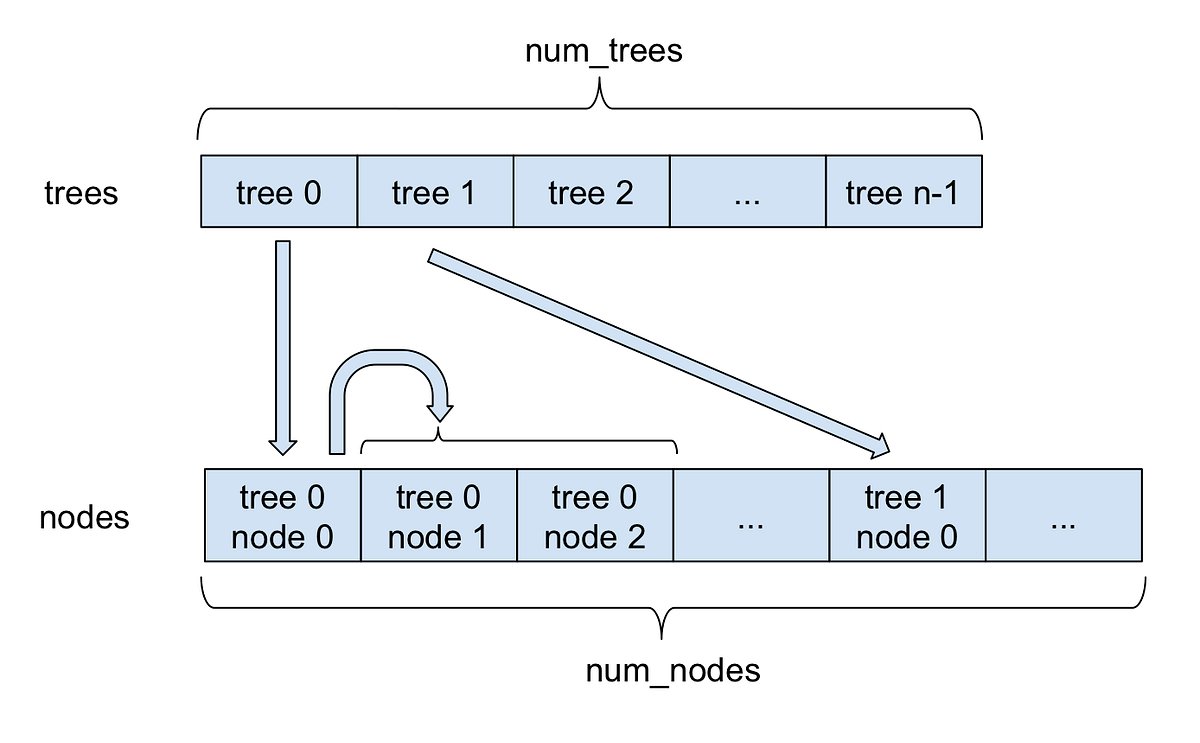

If you're running xgboost, lgbm, or other forest based models in production you need to check out our new forest inference library. 40x faster predictions, cheaper than cpu and way less rack space.

40x faster predictions for even the deepest random forests with @rapidsai FIL’s new sparse forest support - nvda.ws/2GBuZ11

I did a deep-dive into @GitHub Pages, and found it's possible to create a *really* easy way to host your own blog: no code, no terminal, no template syntax. I made "fast_template" to pull this together, & a guide showing beginners how to get blogging fast.ai/2020/01/16/fas…

Udacity #Kotlin Bootcamp: Lesson 2 Use the Kotlin interpreter to get acquainted with the basic language features. Find out what makes @kotlin similar to an aquarium as the team builds one. Curious? We were too! #KotlinFriday Free @Udacity course → goo.gle/2TcmtwT


