polymorpheus

@polymorph3us

junior dev | happy to be here | LOTR | functions | types

Holy... You're telling me Gemini 3 made this? Which would take a human UI/UX and frontend engineer weeks of work…

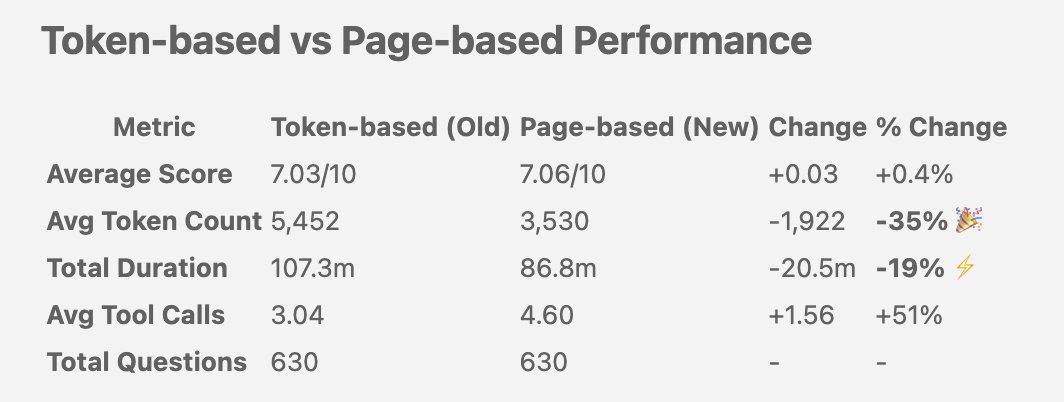

Switched Context7 from token-based to page-based retrieval. Here’s what changed:

- ✅ 35% fewer tokens (5.4K → 3.5K)
- ⚡ 19% faster responses
- 🎯 Accuracy maintained at 7/10

630 questions benchmarked. Same quality, dramatically better efficiency. Results below.

Amazing test of Gemini 3’s multimodal reasoning capabilities: try generating a threejs voxel art scene using only an image as input. Prompt: "I have provided an image. Code a beautiful voxel art scene inspired by this image. Write threejs code as a single-page…"

We’ve been putting Gemini 3 Pro through real design tests in Figma Make: holiday projects, extreme aesthetic swings, and system-level tasks. It handled the fundamentals reliably and showed meaningful range in layout, style, and interaction. The pattern is clear: AI widens the…

Introducing the Parallel FindAll API. Create an entire dataset with a single search query. For example: "Find all dental practices in Ohio with a 4+ star review on Google" parallel.ai/blog/introduci…

the question I get the most at every conference or meetup: "what even are durable objects?" I spent the past 2 years building almost exclusively with durable objects, and today I'm compiling my notes and favourite patterns into a single blog post boristane.com/blog/what-are-…

Effect + DOs is going to be a blast. We're focused on this @alchemy_run with alchemy-effect, but aim to take it even further with universal, cross-cloud, type-safe bindings. Cloudflare at the root is perfect. In their words: "the Connectivity Cloud"

i hope yall are ready for me to be insufferable on the tl about durable objects

The most useful Category Theory abstractions:

1. Functor
2. Monad
3. Applicative
4. Semigroup
5. Monoid

Honestly, if we can make at least these ideas mainstream, that would be good enough. Other common useful abstractions:

1. Contravariant
2. Profunctor
3. Bifunctor
4. Lenses…
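For readers new to these terms, here is a minimal TypeScript sketch of two of the abstractions named above, Monoid and Functor. It is an illustration only: every name in it (`Monoid`, `concatAll`, `Option`, `map`) is hypothetical and is not taken from the post or from any particular library.

```typescript
// Hypothetical names throughout; an illustration, not any library's API.

// A Monoid is an associative combine operation plus an identity element.
interface Monoid<A> {
  empty: A;
  concat: (x: A, y: A) => A;
}

const sum: Monoid<number> = { empty: 0, concat: (x, y) => x + y };
const str: Monoid<string> = { empty: "", concat: (x, y) => x + y };

// Fold a list with any Monoid; an empty list yields the identity element.
function concatAll<A>(m: Monoid<A>, xs: A[]): A {
  return xs.reduce((acc, x) => m.concat(acc, x), m.empty);
}

// A Functor lets you map a function over a wrapped value.
// Option is a small example: a value that may be absent.
type Option<A> = { tag: "none" } | { tag: "some"; value: A };

const some = <A>(value: A): Option<A> => ({ tag: "some", value });
const none: Option<never> = { tag: "none" };

function map<A, B>(fa: Option<A>, f: (a: A) => B): Option<B> {
  return fa.tag === "some" ? some(f(fa.value)) : none;
}
```

With these definitions, `concatAll(sum, [1, 2, 3])` evaluates to `6`, and `map(some(2), (n) => n + 1)` yields a `some` holding `3`, while mapping over `none` stays `none`.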

I used Monoids, Applicative Functors, Contravariant and Comonads to design a composable logging library. I used Profunctors, Isomorphisms and Categories to design a correct-by-construction decoding library. There's so much power in leveraging foundational abstractions.

Currently using the best of everything:

- Package Manager → @bunjavascript
- Frontend → @tan_stack Start
- Authentication → @better_auth
- Backend → @convex
- Blob storage → @Cloudflare R2
- Payments → @polar_sh / @autumnpricing
- Email → @resend
- Observability → @Sentry +…

Introducing group chat on Poe: a new way to collaborate with any AI and anyone you know, all in a single conversation. Now available to all users worldwide, groups can interact with any of the 200+ text, image, video, or audio models on Poe, plus any creator-made bots. (1/8)

Open sourcing InScene + InScene Annotate - a pair of LoRAs for steering in-scene image generation w/ QwenEdit. You can use a combination of prompts + annotations to generate consistent images inside a scene. In beta but v. powerful. Model + workflow below, training data soon.

"I am a full-on Cloudflare Zero Trust with Warp convert, and while I still have Tailscale running in parallel, almost everything I do now is going through Zero Trust tunnels." Great writeup on Cloudflare Tunnels from @dvcrn! david.coffee/cloudflare-zer…

when people diagnose “lack of agency” the real issue is lack of playfulness. fix playfulness and agency follows naturally. trying to "will" agency into your life as if you’re somehow broken only perpetuates the problem

my only advice to people who ask me for advice: you must learn to tell simple compelling stories. this skill will compound like crazy in every facet of your life. that’s it.

getting in just under the wire happy to present offworld.sh as my submission to the @tan_stack @convex hackathon 🫡 search for any public github repo to view its AI generated analysis (or kick one off!), including overall project summary, architecture details…

Debugging CI/CD pipelines locally was too complex & expensive to solve. AI tools changed that. We built Magnolia—run GitHub Actions, GitLab CI, and Forgejo pipelines locally. github.com/tuist/magnolia Not feature-complete yet, but open to contributions. No more push-pray-wait.

Kiro is generally available 👻 Specs made ‘planning first’ the default for AI assisted dev. Now Kiro IDE adds property based tests to check if your code actually matches your Spec. Real signals, not vibes. Plus a new Kiro CLI and full team support through AWS IAM Identity…

supervision-0.27.0 lets you parse and visualize Qwen3-VL object detection results. prompt: "person between albert and marie". to answer this, Qwen3 needs prior visual knowledge of Marie Curie and Albert Einstein, and it needs to understand reference terms like "between".

how many taxis do you see in this image? Qwen3-VL is so good at recognition, multi-target grounding, and understands spatial relations. it blows my mind.
