#approximateinference search results

#MonteCarlo methods are #approximateInference techniques using stochastic simulation through sampling. The general idea is to draw independent samples from distr p(x) and approximate the expectation using sample averages.#LawOfLargeNumbers cs.cmu.edu/~epxing/Class/… #readingOfTheDay

#VariationalInference is a deterministic #approximateInference. We approximate true posterior p(x) using a tractable distr q(x) found by minimizing *reverse* #KLDivergence KL(q||p). Note: #KLDivergence is asymmetric: KL(p||q)≠KL(q||p). #readingOfTheDay people.csail.mit.edu/dsontag/course…

AABI 2023 is accepting nominations for reviewers, invited speakers, panelist, and future organizing committee members. Let us know who you'd like to hear from! Self-nominations accepted. forms.gle/gBZUQsmXgNFmLC… #aabi #bayes #approximateinference #machinelearning #icml2023

We are happy to announce Turing.jl: an efficient library for general-purpose probabilistic #MachineLearning and #ApproximateInference, developed by researchers at @Cambridge_Uni. turing.ml cc: @Cambridge_CL, @OxfordStats, @CompSciOxford

Our paper "Linked Variational AutoEncoders for Inferring Substitutable and Supplementary Items" was accepted at #wsdm2019 #DeepLearning #ApproximateInference #VariationalAutoencoder #RecommenderSystem

#VariationalInference is a deterministic #approximateInference. We approximate true posterior p(x) using a tractable distr q(x) found by minimizing *reverse* #KLDivergence KL(q||p). Note: #KLDivergence is asymmetric: KL(p||q)≠KL(q||p). #readingOfTheDay people.csail.mit.edu/dsontag/course…

When the prior isn't conjugate to the likelihood, the posterior cannot be solved analytically even for simple two-node #BN. Thus, #approximateInference is needed. Note: conjugacy is not a concern when calculating the posterior of discrete random variables. #readingOfTheDay

When the prior isn't conjugate to the likelihood, the posterior cannot be solved analytically even for simple two-node #BN. Thus, #approximateInference is needed. Note: conjugacy is not a concern when calculating the posterior of discrete random variables. #readingOfTheDay

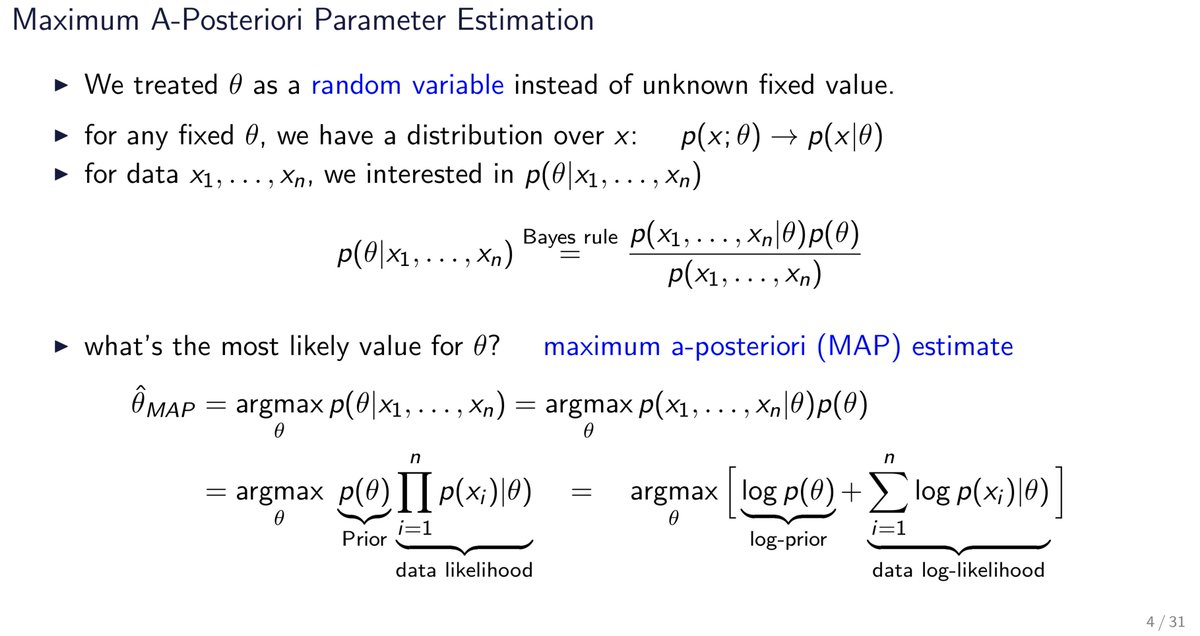

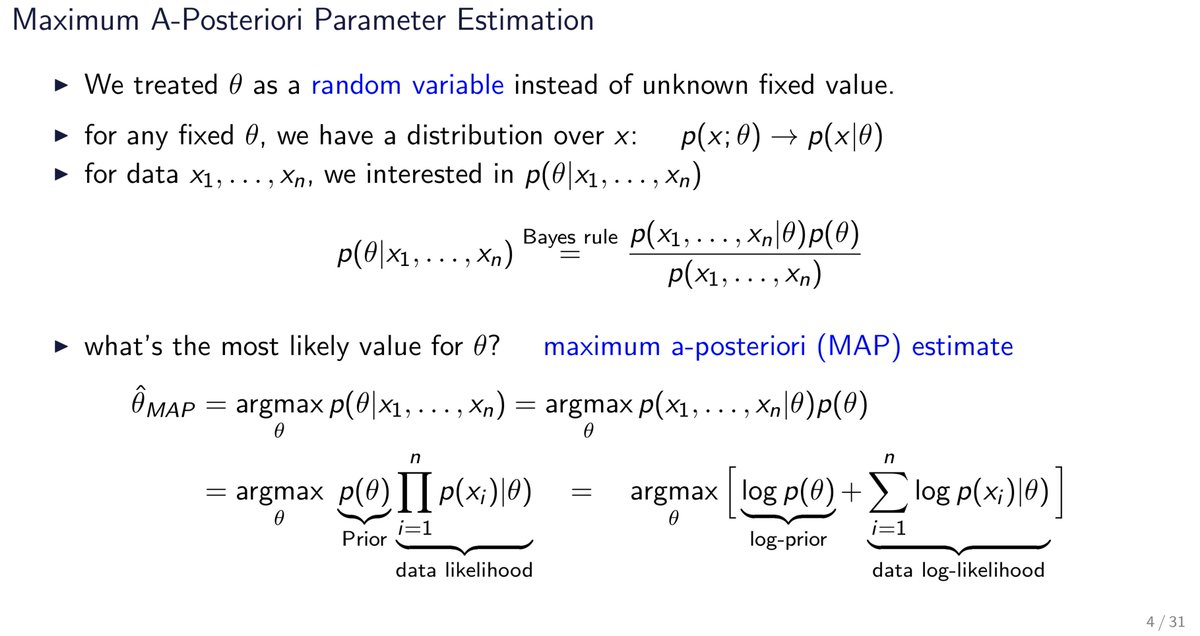

One way to learn the parameter θ of a #BN is #MaximumAPosteriori. We treat θ as a #randomVariable I/O unknown fixed value (as using MLE) and find the estimate that points to the highest peak of its distr. given data (the posterior distr.). #readingOfTheDay cvml.ist.ac.at/courses/PGM_W1…

AABI 2023 is accepting nominations for reviewers, invited speakers, panelist, and future organizing committee members. Let us know who you'd like to hear from! Self-nominations accepted. forms.gle/gBZUQsmXgNFmLC… #aabi #bayes #approximateinference #machinelearning #icml2023

We are happy to announce Turing.jl: an efficient library for general-purpose probabilistic #MachineLearning and #ApproximateInference, developed by researchers at @Cambridge_Uni. turing.ml cc: @Cambridge_CL, @OxfordStats, @CompSciOxford

#MonteCarlo methods are #approximateInference techniques using stochastic simulation through sampling. The general idea is to draw independent samples from distr p(x) and approximate the expectation using sample averages.#LawOfLargeNumbers cs.cmu.edu/~epxing/Class/… #readingOfTheDay

#VariationalInference is a deterministic #approximateInference. We approximate true posterior p(x) using a tractable distr q(x) found by minimizing *reverse* #KLDivergence KL(q||p). Note: #KLDivergence is asymmetric: KL(p||q)≠KL(q||p). #readingOfTheDay people.csail.mit.edu/dsontag/course…

#VariationalInference is a deterministic #approximateInference. We approximate true posterior p(x) using a tractable distr q(x) found by minimizing *reverse* #KLDivergence KL(q||p). Note: #KLDivergence is asymmetric: KL(p||q)≠KL(q||p). #readingOfTheDay people.csail.mit.edu/dsontag/course…

When the prior isn't conjugate to the likelihood, the posterior cannot be solved analytically even for simple two-node #BN. Thus, #approximateInference is needed. Note: conjugacy is not a concern when calculating the posterior of discrete random variables. #readingOfTheDay

When the prior isn't conjugate to the likelihood, the posterior cannot be solved analytically even for simple two-node #BN. Thus, #approximateInference is needed. Note: conjugacy is not a concern when calculating the posterior of discrete random variables. #readingOfTheDay

One way to learn the parameter θ of a #BN is #MaximumAPosteriori. We treat θ as a #randomVariable I/O unknown fixed value (as using MLE) and find the estimate that points to the highest peak of its distr. given data (the posterior distr.). #readingOfTheDay cvml.ist.ac.at/courses/PGM_W1…

Our paper "Linked Variational AutoEncoders for Inferring Substitutable and Supplementary Items" was accepted at #wsdm2019 #DeepLearning #ApproximateInference #VariationalAutoencoder #RecommenderSystem

#MonteCarlo methods are #approximateInference techniques using stochastic simulation through sampling. The general idea is to draw independent samples from distr p(x) and approximate the expectation using sample averages.#LawOfLargeNumbers cs.cmu.edu/~epxing/Class/… #readingOfTheDay

#VariationalInference is a deterministic #approximateInference. We approximate true posterior p(x) using a tractable distr q(x) found by minimizing *reverse* #KLDivergence KL(q||p). Note: #KLDivergence is asymmetric: KL(p||q)≠KL(q||p). #readingOfTheDay people.csail.mit.edu/dsontag/course…

#VariationalInference is a deterministic #approximateInference. We approximate true posterior p(x) using a tractable distr q(x) found by minimizing *reverse* #KLDivergence KL(q||p). Note: #KLDivergence is asymmetric: KL(p||q)≠KL(q||p). #readingOfTheDay people.csail.mit.edu/dsontag/course…

When the prior isn't conjugate to the likelihood, the posterior cannot be solved analytically even for simple two-node #BN. Thus, #approximateInference is needed. Note: conjugacy is not a concern when calculating the posterior of discrete random variables. #readingOfTheDay

Something went wrong.

Something went wrong.

United States Trends

- 1. Northern Lights 35.9K posts

- 2. #DWTS 49.9K posts

- 3. #Aurora 7,370 posts

- 4. Justin Edwards 2,048 posts

- 5. Louisville 17.2K posts

- 6. #RHOSLC 6,324 posts

- 7. Andy 60.6K posts

- 8. Creighton 1,970 posts

- 9. #OlandriaxHarpersBazaar 4,566 posts

- 10. Gonzaga 2,598 posts

- 11. Lowe 12.5K posts

- 12. #GoAvsGo 1,420 posts

- 13. Oweh 2,029 posts

- 14. Kentucky 25.3K posts

- 15. JT Toppin N/A

- 16. Celtics 12.2K posts

- 17. Elaine 40.8K posts

- 18. Robert 100K posts

- 19. Dylan 31K posts

- 20. Go Cards 2,737 posts