#crowdsourcingdataannotation search results

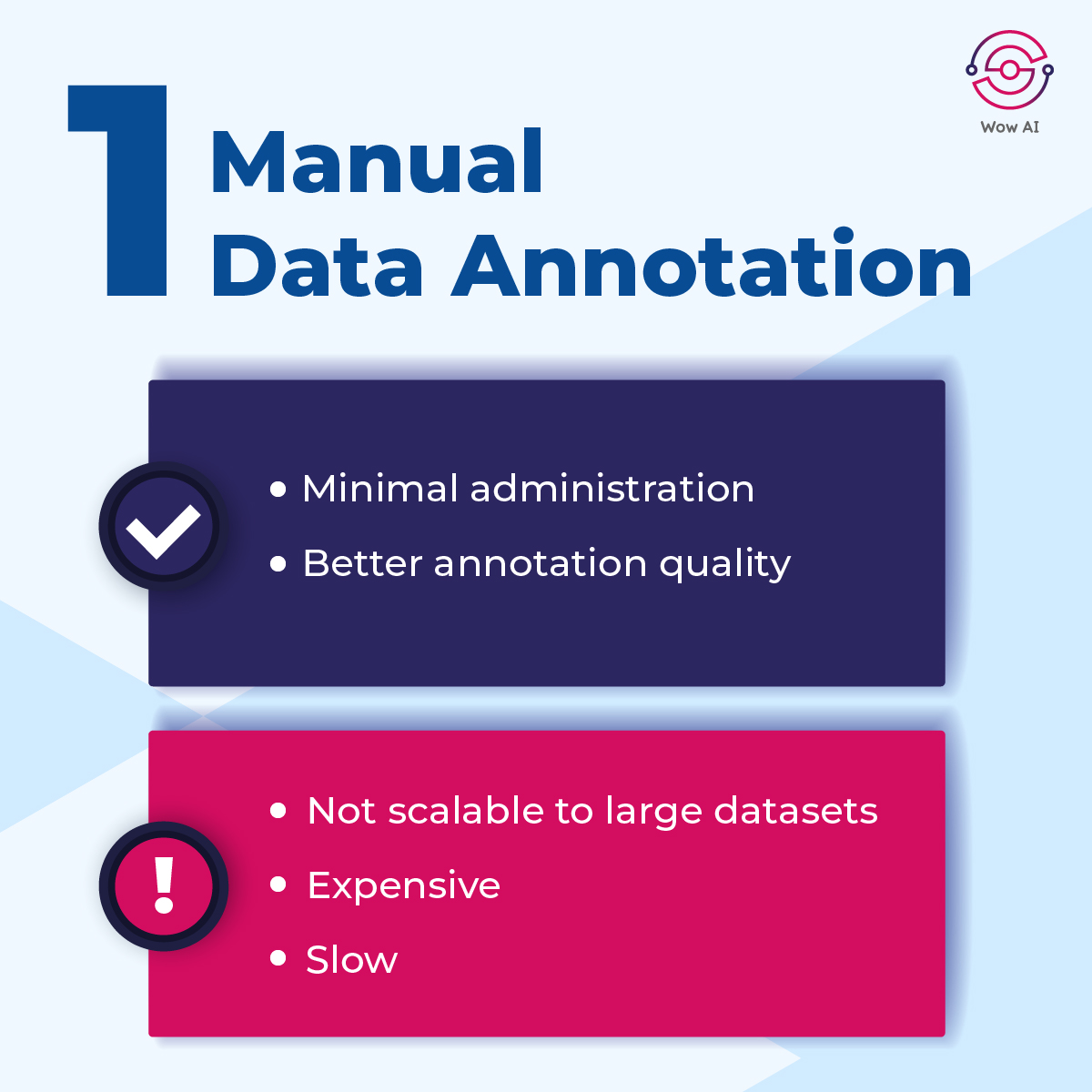

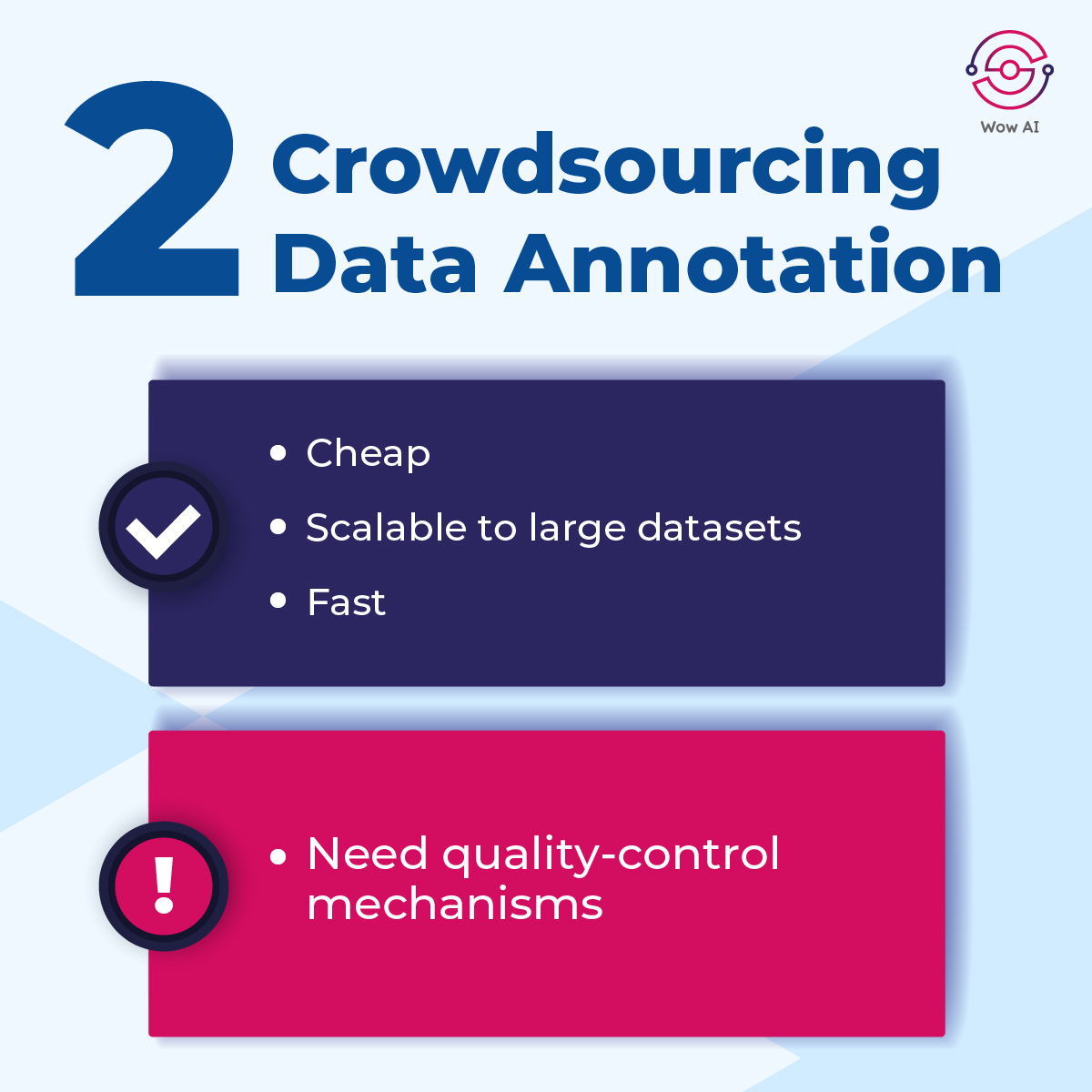

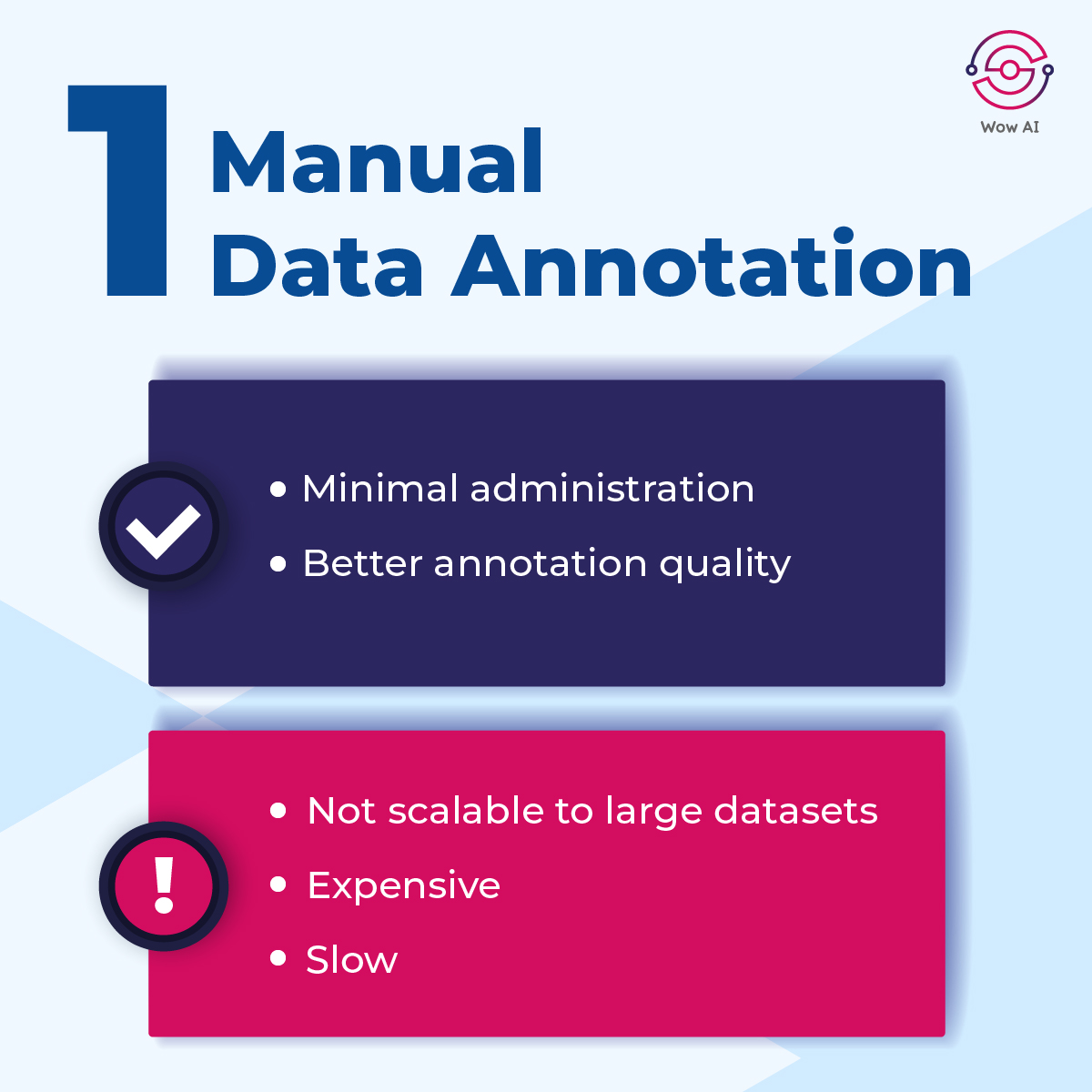

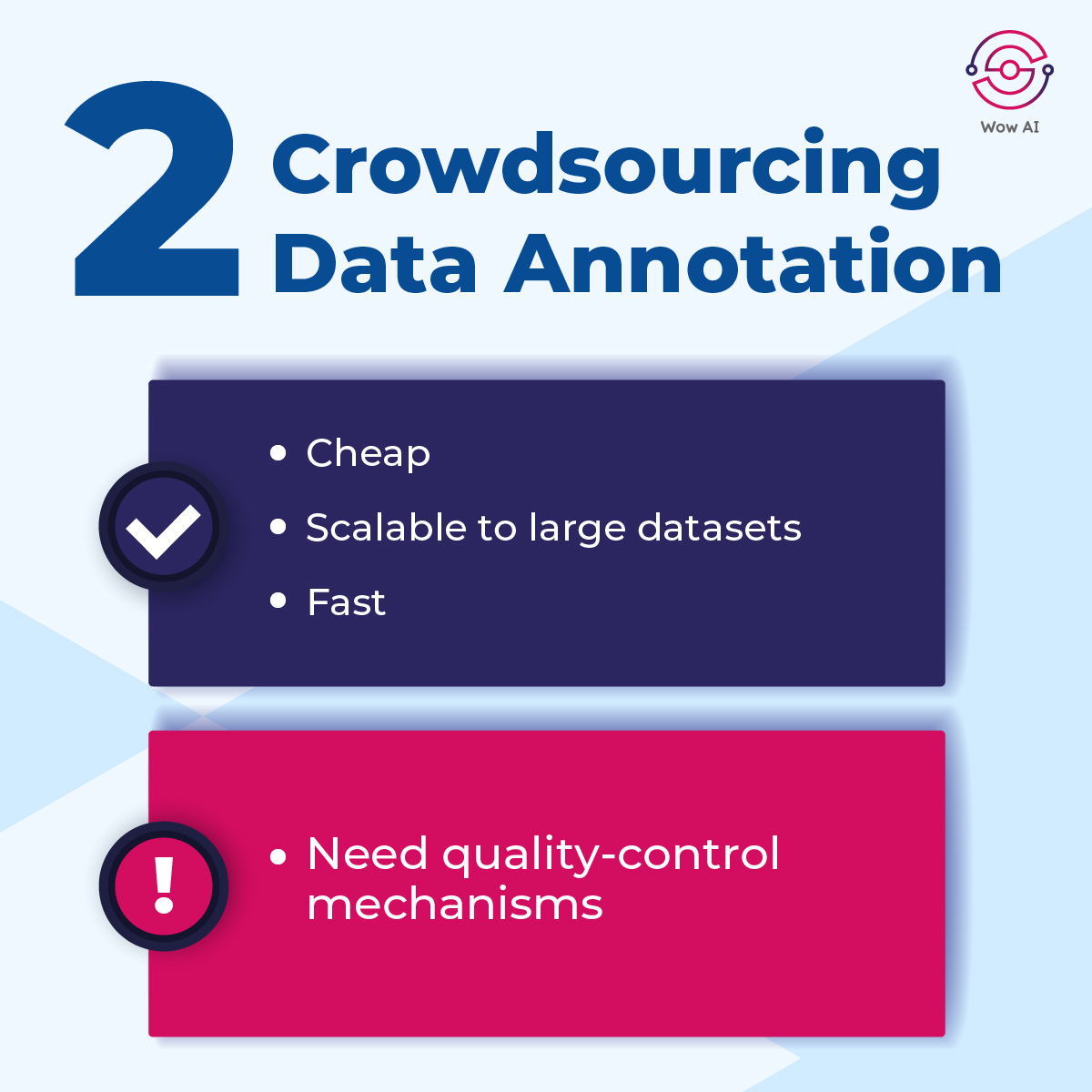

Comparing 3 common data annotation techniques. Let’s take a look at the pros and cons of some commonly used annotation techniques. #ManualDataAnnotation #CrowdsourcingDataAnnotation #DataDrivenDataAnnotation #WowAI #AIdata #AI #Machinelearning

Bring your own data! 💻 We provide two workflows for the annotation of user-submitted data. Have a look at the examples and try them out on our metaTraits website: 5/9

The real power of the creator economy will come when creators own their work, not the algorithms. Crowdsourcing success isn’t just about reach… it’s about respect. 🔗 time.com/7332708/creato… by @taylorcrumpton #crowdsourced #content #beBOLD

Text Annotation via Inductive Coding: Comparing Human Experts to LLMs in Qualitative Data Analysis. arxiv.org/abs/2512.00046

We discovered that 48% of accessibility audit issues could be prevented with better design documentation. 📉 So our internal team built a solution to bridge the gap—and now we’ve open sourced it. Meet the Annotation Toolkit. It helps you catch bugs before they happen. Level up…

Communities should co-own their data. In this overview, I show how graph methods like Louvain, participation entropy, and betweenness centrality reveal: • Who contributes • How balanced participation is • Who bridges subcommunities Full breakdown:
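To make "how balanced participation is" concrete, here is a minimal sketch of one possible reading of participation entropy: the Shannon entropy of each contributor's share of contributions, normalized to the range 0 to 1. The function name `participation_entropy` and the normalization are assumptions for illustration, not the post author's definition.

```python
import math
from collections import Counter


def participation_entropy(contributions):
    """Normalized Shannon entropy of contribution shares.

    `contributions` is a list of contributor IDs, one entry per contribution.
    Returns 1.0 when everyone contributes equally and values near 0.0 when
    a single contributor dominates. (Hypothetical helper for illustration.)
    """
    counts = Counter(contributions)
    total = sum(counts.values())
    if total == 0 or len(counts) <= 1:
        return 0.0  # no contributions, or a single contributor: no balance to measure
    shares = [c / total for c in counts.values()]
    entropy = -sum(p * math.log2(p) for p in shares)
    return entropy / math.log2(len(counts))  # divide by the maximum possible entropy


balanced = participation_entropy(["ana", "bo", "cy", "di"])
skewed = participation_entropy(["ana"] * 9 + ["bo"])
```

A balanced community scores 1.0, while the skewed example scores well below that, which is the kind of signal the post describes.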

Crowdsourcing invariants is safe only if users never submit “facts”—they submit evidence pointers that undergo the same causal checks as the system. Implementation: 1.Pointer-only submissions Users can submit: •links, •timestamps, •raw documents, •sensor data, •recordings.…

Why the next decade of insights will rely on verified humans, not guesses poh.crowdsnap.ai/research.pdf #DigitalIdentity #proofofhumanity #EnterpriseAI

Diverse viewpoints in Community Notes are inferred from users' past rating patterns on notes, without using personal data like demographics or politics. The algorithm (matrix factorization) analyzes correlations: users who rate similarly are grouped together. Consensus needs…
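As a rough illustration of how matrix factorization can group raters from rating patterns alone, the sketch below fits a rank-1 model `rating ~= user_factor * note_factor` with stochastic gradient descent. It is a toy under stated assumptions (ratings coded as +1/-1, a single latent factor, made-up hyperparameters), not the production Community Notes scorer.

```python
import random


def factorize(ratings, n_users, n_notes, epochs=500, lr=0.05, reg=0.01, seed=0):
    """Fit rating ~= user_f[u] * note_f[n] with SGD (rank-1 factorization).

    `ratings` is a list of (user_index, note_index, rating) triples.
    All hyperparameters here are illustrative assumptions.
    """
    rng = random.Random(seed)
    user_f = [rng.uniform(-0.1, 0.1) for _ in range(n_users)]
    note_f = [rng.uniform(-0.1, 0.1) for _ in range(n_notes)]
    for _ in range(epochs):
        for u, n, r in ratings:
            err = r - user_f[u] * note_f[n]
            # Gradient step on squared error with L2 regularization.
            grad_u = err * note_f[n] - reg * user_f[u]
            grad_n = err * user_f[u] - reg * note_f[n]
            user_f[u] += lr * grad_u
            note_f[n] += lr * grad_n
    return user_f, note_f


# Users 0 and 1 like notes 0-1 and dislike notes 2-3; users 2 and 3 do the opposite.
ratings = []
for user in range(4):
    for note in range(4):
        same_side = (user < 2) == (note < 2)
        ratings.append((user, note, 1.0 if same_side else -1.0))

user_f, note_f = factorize(ratings, n_users=4, n_notes=4)
```

Users whose learned factors share a sign rated similarly; no demographic or political input is involved, only the rating matrix.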

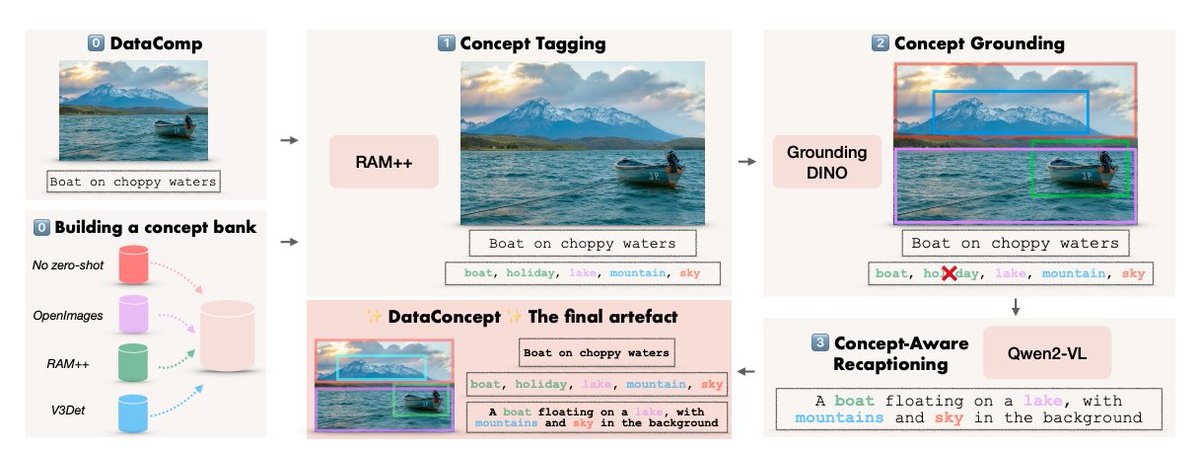

Our first contribution: DataConcept✨ A densely annotated dataset of 128M images with bounding-boxes, concept tags, synthetic recaptions and more! This enables targeted curation at the concept-level!

Crowdsourcing at scale attracts brilliance… and bad actors. @codatta_io’s latest Malicious Activity Report shows exactly what is filtered out to protect data integrity. Fabricated screenshots, falsified model outputs, unverifiable prompts, and AI-generated spam all surfaced.👇

Quality through Economic Accountability On the Reppo network, data generation and annotation isn’t done via centralized label farms or fixed-pay “click-box” tasks. Instead, contributors (“miners”) propose raw data — e.g., sensor logs, geospatial data, robotics telemetry, or…

Throughout, we provide concrete examples, code snippets, and best-practice checklists to help researchers confidently and transparently incorporate LLM-based annotation into their workflows.

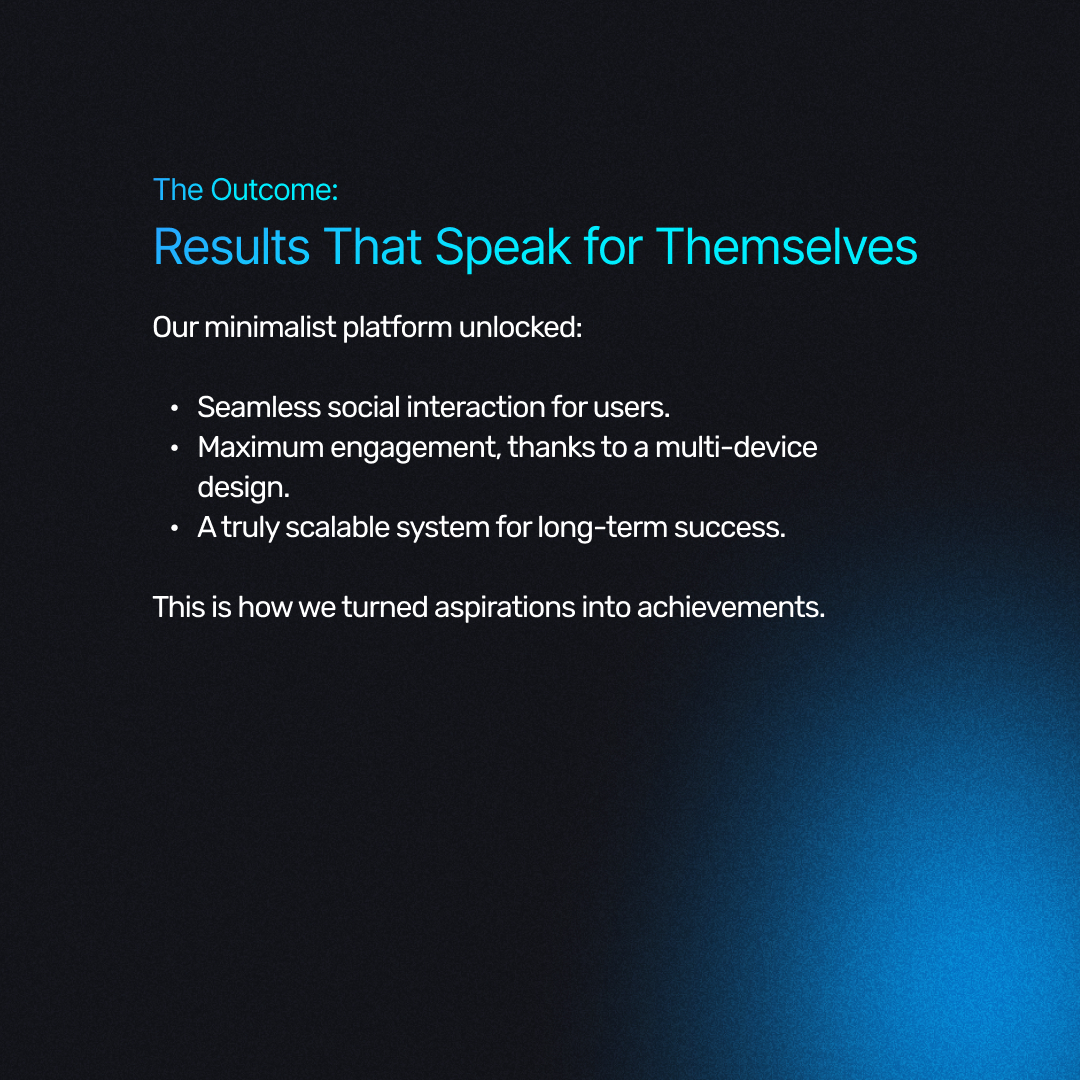

Building a platform for change isn’t easy—but when tech and purpose align, the results are extraordinary. Learn how we crafted a minimal yet scalable crowdsourcing solution. Read the Case study: nalashaa.com/portfolio/clou… #Crowdsourcing #TechInnovation #ScalableSolutions

An anecdotal dataset from my anthropological fieldwork across social media, supported by a qualitative review of Twitter, TikTok + news articles.

☁️ Community power (Crowdsourcing) • Cloud sync: merge your database with the list of thousands of users identified by the community (without deleting your own data). • Contribution: with the new Drag & Drop system, submit your database to help others.

2/ 1) Sourcing & Labelling Contributors submit and verify data with staking-based accountability. High-quality work boosts rewards and reputation; low-quality inputs get corrected or slashed. This creates a continuously improving, authenticated knowledge base.
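The reward-and-slash loop described above can be sketched as follows. The `Contributor` class, the `settle` function, and the reward and slash amounts are hypothetical and chosen only for illustration; the post does not specify the actual rules.

```python
from dataclasses import dataclass


@dataclass
class Contributor:
    stake: float
    reputation: float = 0.0


def settle(contributor, accepted, reward=1.0, slash_fraction=0.1):
    """Update stake and reputation after a submission is verified.

    Accepted work earns a fixed reward and one reputation point; rejected
    work loses a fraction of the stake and one reputation point (floored
    at zero). All amounts are assumptions, not the network's parameters.
    """
    if accepted:
        contributor.stake += reward
        contributor.reputation += 1.0
    else:
        contributor.stake -= contributor.stake * slash_fraction
        contributor.reputation = max(0.0, contributor.reputation - 1.0)
    return contributor


worker = Contributor(stake=100.0)
settle(worker, accepted=True)   # high-quality submission
settle(worker, accepted=False)  # low-quality submission gets slashed
```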

The value of the AI era shouldn't be monopolized by giants. SOIAA uses distributed data annotation to ensure everyone who creates data can be rewarded. Channel: t.me/SoiaaTechnology X: x.com/SOIAA01 Tg: t.me/+WpZau0uyEfczN…
