#diffusivememristor search results

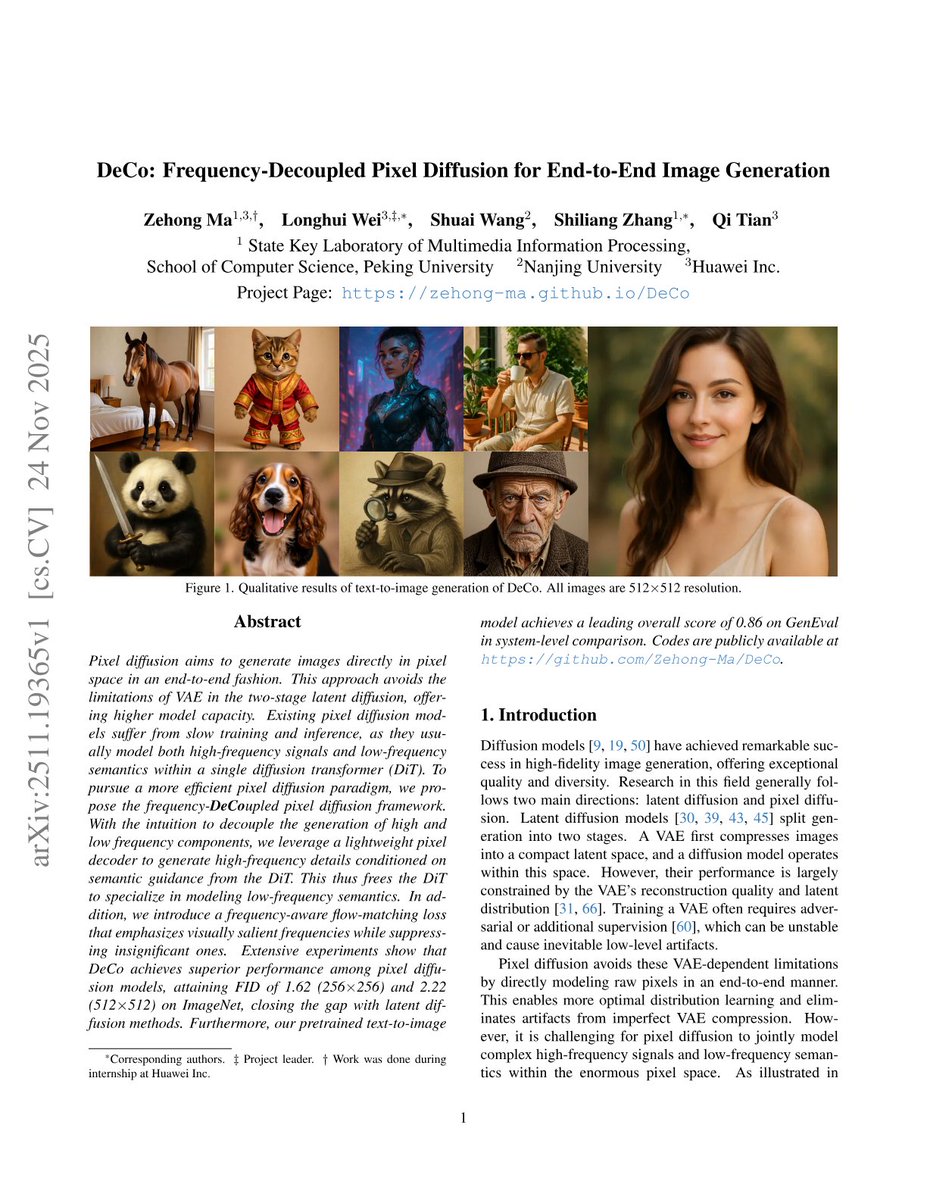

DeCo splits pixel diffusion: a DiT models low-frequency semantics while a small decoder adds high-frequency detail, with a frequency-aware loss, improving efficiency and approaching latent diffusion FID. Probably useful for researchers and engineers; probably not for...

Diffusion models add noise to data and learn to reverse it for generation, like in AI image creators. This paper proves strong bounds on how "heat" (a diffusion-like process) behaves on binary (Boolean) spaces, resolving a 1989 math puzzle almost fully. It's groundbreaking as it…
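The "add noise, then learn to reverse it" idea in the post above can be sketched with the usual Gaussian forward process. This is a minimal illustration only: the linear beta schedule, the step count, and the names `alpha_bar_schedule` and `add_noise` are assumptions, not taken from the post or the paper it summarizes (which concerns heat flow on Boolean spaces, not this continuous setup).

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps

rng = random.Random(0)
alpha_bars = alpha_bar_schedule(1000)
x0 = [1.0, -1.0, 0.5]                       # made-up "clean" data point
xt, eps = add_noise(x0, alpha_bars[500], rng)

# If the noise eps were known exactly, the clean point could be recovered.
# A trained model would only estimate eps from xt, so this is the ideal case.
ab = alpha_bars[500]
x0_recovered = [(x - math.sqrt(1.0 - ab) * e) / math.sqrt(ab)
                for x, e in zip(xt, eps)]
```

The last step is where learning would come in: a network is typically trained to predict `eps` from `xt`, and generation runs the recovery step repeatedly from pure noise.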

ah dont take it personally, i was shit-posting / rage-baiting ppl who are trying to use the "what happens when you lose your paintbrush?" point as "what if chatGPT goes down"? and actually, tbh, if you have a reasonable gaming PC, its v easy to set up stable diffusion.

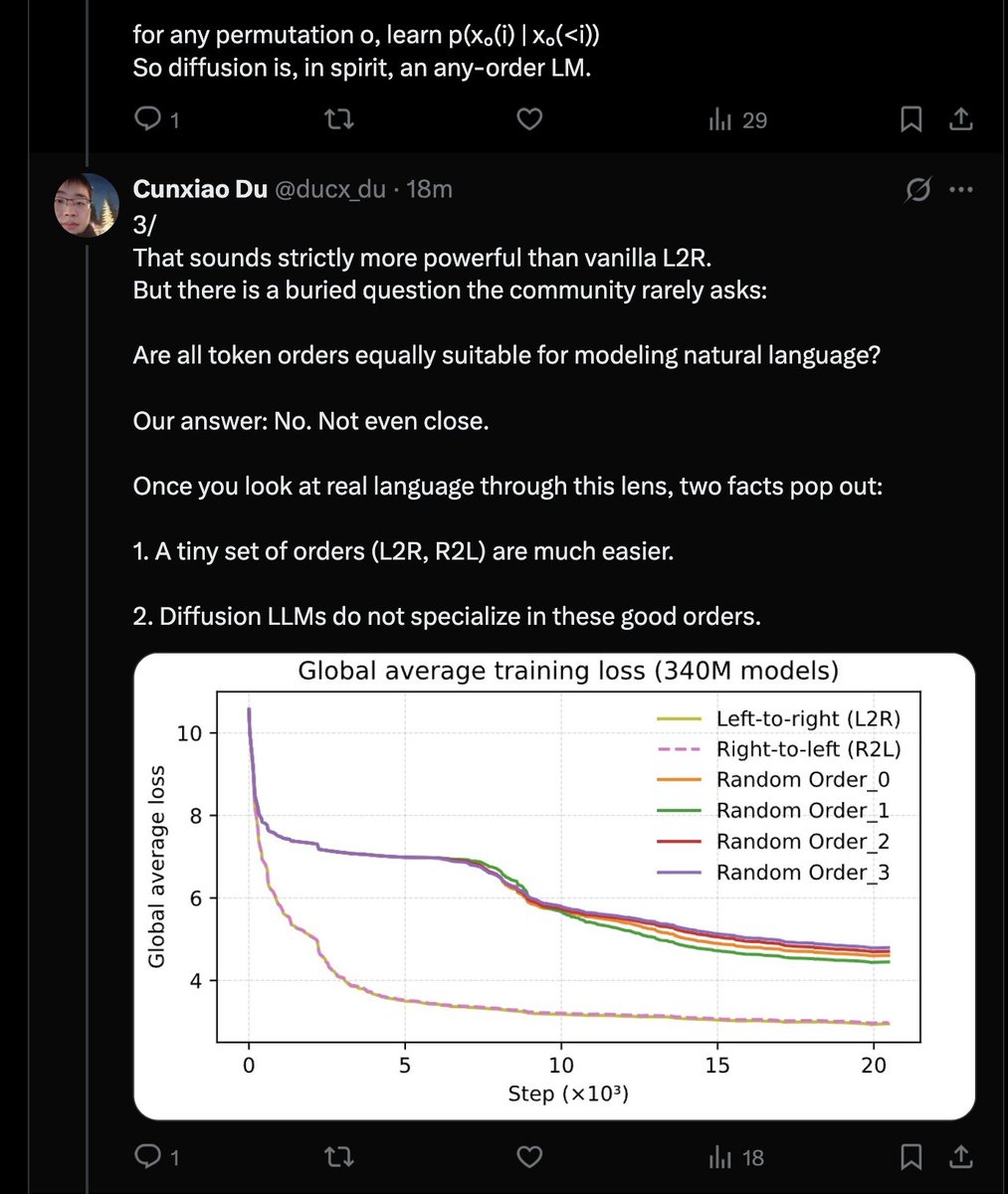

Extremely powerful narrative. Diffusion models by default make no sense because language is Markovian and L2R *or* *R2L* orders are strictly superior. It appears that the only sane way to train DLLMs is with log-sum loss.

Diffusion LLMs (DLLM) can do “any-order” generation, in principle, more flexible than left-to-right (L2R) LLM. Our main finding is uncomfortable: ➡️ In real language, this flexibility backfires: DLLMs become worse probabilistic models than the L2R / R2L AR LMs. This…

2/ To unpack this, we start from a simple lens: Diffusion LLMs ≈ Any-Order Language Models. Masked diffusion predicts arbitrary masked tokens from arbitrary visible ones. This is equivalent to learning all autoregressive factorizations over permutations of token order: for any…
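The "any-order" view in the post above can be checked on a toy example. The sketch below assumes a made-up joint distribution over three binary tokens and computes exact conditionals from it; it is not the authors' code, and all names (`joint`, `conditional`, `chain_rule_prob`) are hypothetical.

```python
import itertools

# Hypothetical toy joint distribution over 3 binary tokens (weights are made up).
weights = {
    (0, 0, 0): 4, (0, 0, 1): 1, (0, 1, 0): 2, (0, 1, 1): 3,
    (1, 0, 0): 1, (1, 0, 1): 5, (1, 1, 0): 2, (1, 1, 1): 2,
}
total = sum(weights.values())
joint = {seq: w / total for seq, w in weights.items()}

def marginal(visible):
    """Probability that the tokens at the given positions take the given values."""
    return sum(p for seq, p in joint.items()
               if all(seq[i] == v for i, v in visible.items()))

def conditional(pos, value, visible):
    """p(x_pos = value | visible tokens): what a masked predictor would estimate."""
    return marginal({**visible, pos: value}) / marginal(visible)

def chain_rule_prob(seq, order):
    """Multiply the conditionals along one generation order."""
    prob, visible = 1.0, {}
    for pos in order:
        prob *= conditional(pos, seq[pos], visible)
        visible[pos] = seq[pos]
    return prob

seq = (1, 0, 1)
probs_by_order = {order: chain_rule_prob(seq, order)
                  for order in itertools.permutations(range(3))}
```

With exact conditionals, every one of the six orders yields the same sequence probability. A learned model only approximates these conditionals, so different orders can disagree, which is the setting the thread is concerned with.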

Except that isn't how this technology works. A diffusion model is a latent map of weights that represents an AI's generalization of concepts. The training process would be more accurately compared to a human learning to draw by drawing what they see.

Just shipped text diffusion support in Transformer Lab! Text diffusion models have been getting more attention lately. They generate text by iteratively denoising a noisy or masked sequence, rather than predicting the next token like autoregressive models. Researchers are…

🌀 Diffusion language models have rapidly become one of the most important alternatives to autoregressive generation, and this new blog distills 🗞️six key papers🗞️ tracing the shift from left-to-right decoding to parallel, bidirectional refinement. starc.institute/blogs/diffusio… ✴️…

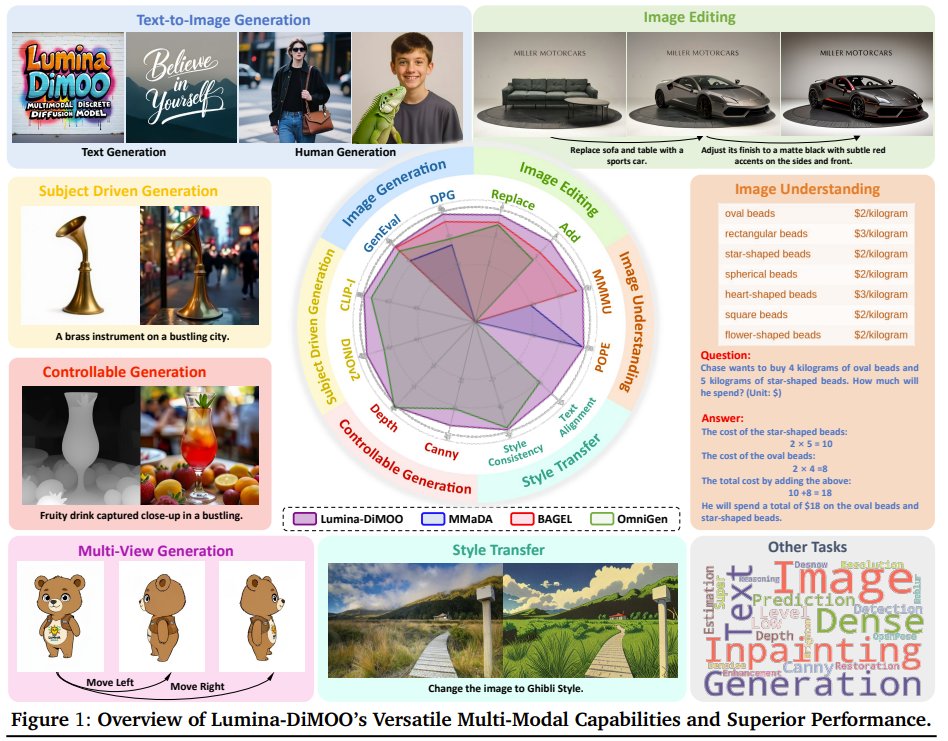

One discrete diffusion model could handle every multimodal task end to end? Lumina DiMOO is a fully discrete diffusion model for generation and understanding across text and images. It delivers faster sampling than AR and hybrid systems while supporting text to image, image…

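This was the clearest explainer video on diffusion models I've seen. By training on pairs of the cat-like set in image space and a dataset that strays slightly outside it, the model gets a rough sense of the distance between a given noisy image and the cat set. Subtracting that distance from the noisy image recovers a point inside the cat set.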
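A toy version of the "subtract the distance to the set" intuition from the post above, assuming the set is the unit circle in 2D rather than cat images. Here the distance is known in closed form; in a real diffusion model it would be something a network learns to estimate. The function names are hypothetical.

```python
import math
import random

def distance_to_circle(point):
    """Signed distance from a 2D point to the unit circle (stand-in for the 'cat set')."""
    return math.hypot(*point) - 1.0

def denoise(point):
    """Move the point back along the radial direction by its distance to the set."""
    norm = math.hypot(*point)
    d = distance_to_circle(point)
    return tuple(c - d * c / norm for c in point)

rng = random.Random(0)
angle = rng.uniform(0.0, 2.0 * math.pi)
clean = (math.cos(angle), math.sin(angle))             # a point in the set
noisy = tuple(c + rng.gauss(0.0, 0.3) for c in clean)  # pushed slightly outside it
restored = denoise(noisy)                              # lands back on the circle
```

Note that `restored` lies on the circle but is generally not the original `clean` point, which matches the post's wording: the step extracts a point in the set, not necessarily the one you started from.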

Well, that isn't how this works. A diffusion model is not a collection of imagery. It is a latent map of weights representing an AI's generalization of concepts. The training process would be more accurately compared to a human learning to draw by drawing what they see.

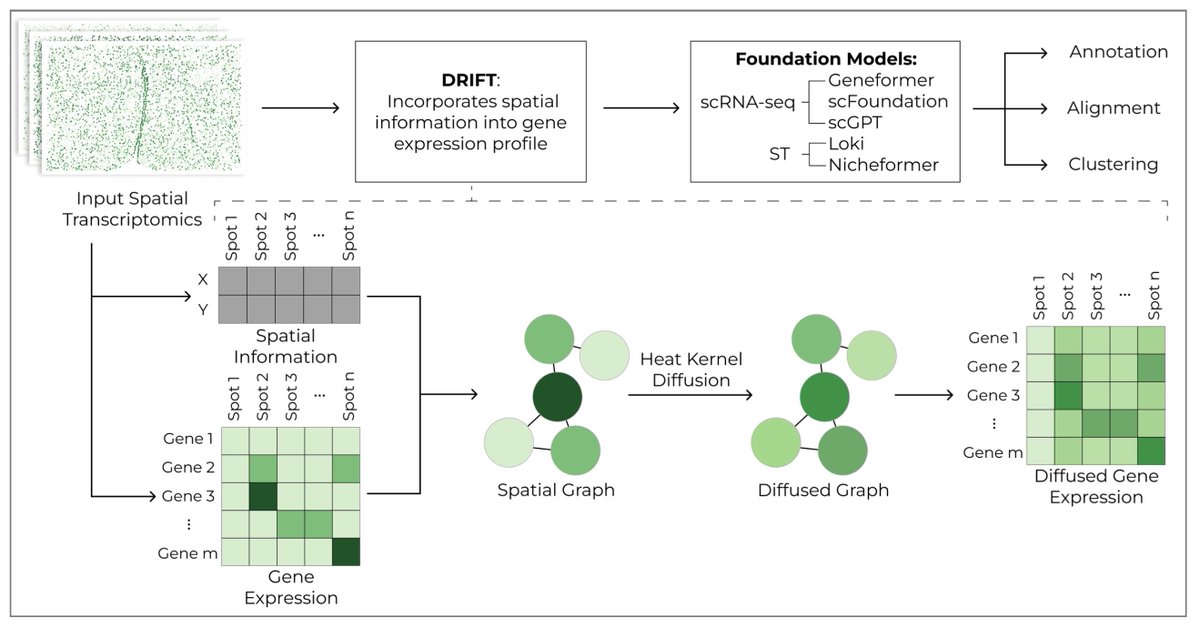

DRIFT: Diffusion-based Representation Integration for Foundation Models Improves Spatial Transcriptomics Analysis biorxiv.org/content/10.110…

[LG] Breaking the Bottleneck with DiffuApriel: High-Throughput Diffusion LMs with Mamba Backbone V Singh, O Ostapenko, P Noël, T Scholak [Mila – Quebec AI Institute & ServiceNow Research] (2025) arxiv.org/abs/2511.15927

![fly51fly's tweet image](https://pbs.twimg.com/media/G6T_b_ca4AAnUnF.png)

![fly51fly's tweet image](https://pbs.twimg.com/media/G6T_dWabsAAoqm0.jpg)

![fly51fly's tweet image](https://pbs.twimg.com/media/G6T_dW0bIAAEZHF.jpg)

![fly51fly's tweet image](https://pbs.twimg.com/media/G6T_eoxaQAAzVV_.jpg)

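3. Diffusion policy is an algorithm for the paradigm called imitation learning. This is the most intuitive way to use machine learning in robotics. You collect pairs of observations and the actions the robot should perform. Train a model on those pairs with supervised learning, and the robot reproduces the same behavior.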
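The supervised recipe described above (collect observation/action pairs, then fit a model) can be sketched as plain behavior cloning. This is deliberately not Diffusion Policy itself, which models actions with a denoising process; it is a linear least-squares stand-in, and the expert rule, data, and names are all made up for illustration.

```python
import random

rng = random.Random(0)

# Hypothetical expert: action = 2.0 * observation + 0.5, plus small noise.
observations = [rng.uniform(-1.0, 1.0) for _ in range(200)]
actions = [2.0 * o + 0.5 + rng.gauss(0.0, 0.01) for o in observations]

def fit_linear_policy(obs, act):
    """Closed-form least squares for action = w * observation + b."""
    n = len(obs)
    mean_o = sum(obs) / n
    mean_a = sum(act) / n
    cov = sum((o - mean_o) * (a - mean_a) for o, a in zip(obs, act))
    var = sum((o - mean_o) ** 2 for o in obs)
    w = cov / var
    b = mean_a - w * mean_o
    return w, b

w, b = fit_linear_policy(observations, actions)

def policy(observation):
    """The cloned policy: map a new observation to an action."""
    return w * observation + b
```

Calling `policy(0.3)` returns an action close to what the hypothetical expert would have chosen for that observation.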

Multimodal Diffusion Language Models for Thinking-Aware Editing and Generation #HackerNews github.com/tyfeld/MMaDA-P…

It can't do what you claim it does, because a diffusion model is not a collection of imagery. It is a latent map of weights representing an AI's generalization of concepts. It would be more accurate to compare training AI to a human learning to draw by drawing what they see.

Gemini diffusion will get rid of the slowness issue. And I think Inception's Mercury dllm is currently underrated.

