#interpretableml search results

The first version of my online book on Interpretable Machine Learning is out! christophm.github.io/interpretable-… I am very excited to release it. It's a guide for making machine learning models explainable. #interpretableML #iml #ExplainableAI #xai #MachineLearning #DataScience

Giving a talk on Explainable AI in Healthcare at @CTSICN in an hour #responsibleAI #InterpretableML #explainableAI

We are having a mini-session in #causality at #pbdw2019 with first two talks by Rich Caruana and Yi Luo! #interpretableML #radonc @UMichRadOnc @MSFTResearch

Relying on XGBoost/RF feature importances to interpret your model? Read this first towardsdatascience.com/interpretable-… You are probably reporting misleading conclusions #XAI #interpretableML

Our paper "NoiseGrad: enhancing explanations by introducing stochasticity to model weights" has been accepted at #AAAI2022 🎉 See you (fingers crossed) in Canada 🇨🇦 arxiv.org/abs/2106.10185 #ML #InterpretableML #XAI

Looking forward to the 1st #XAI day webinar tomorrow, Sept. 3rd, at @DIAL_UniCam. Many thanks to the speakers @grau_isel, Eric S. Vorm and @leilanigilpin who will talk about the essentials of #interpretableML and its applications.

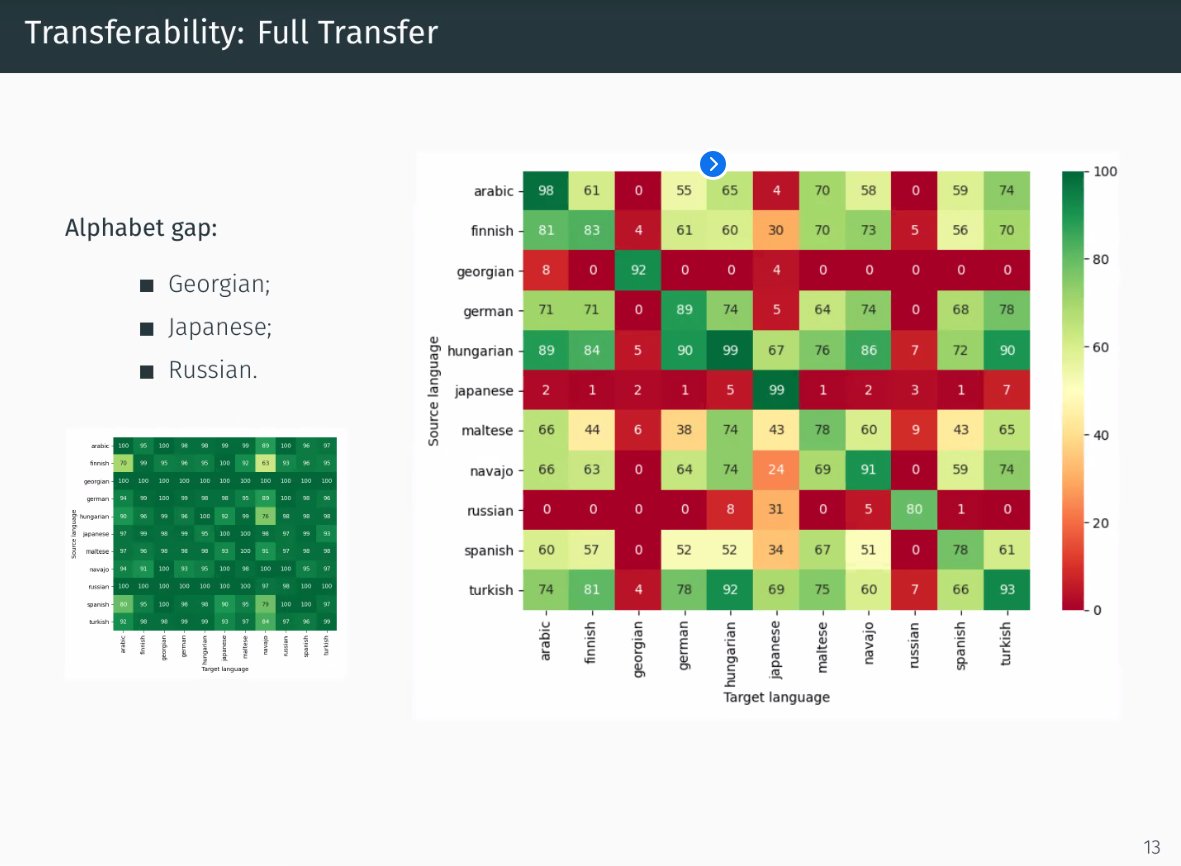

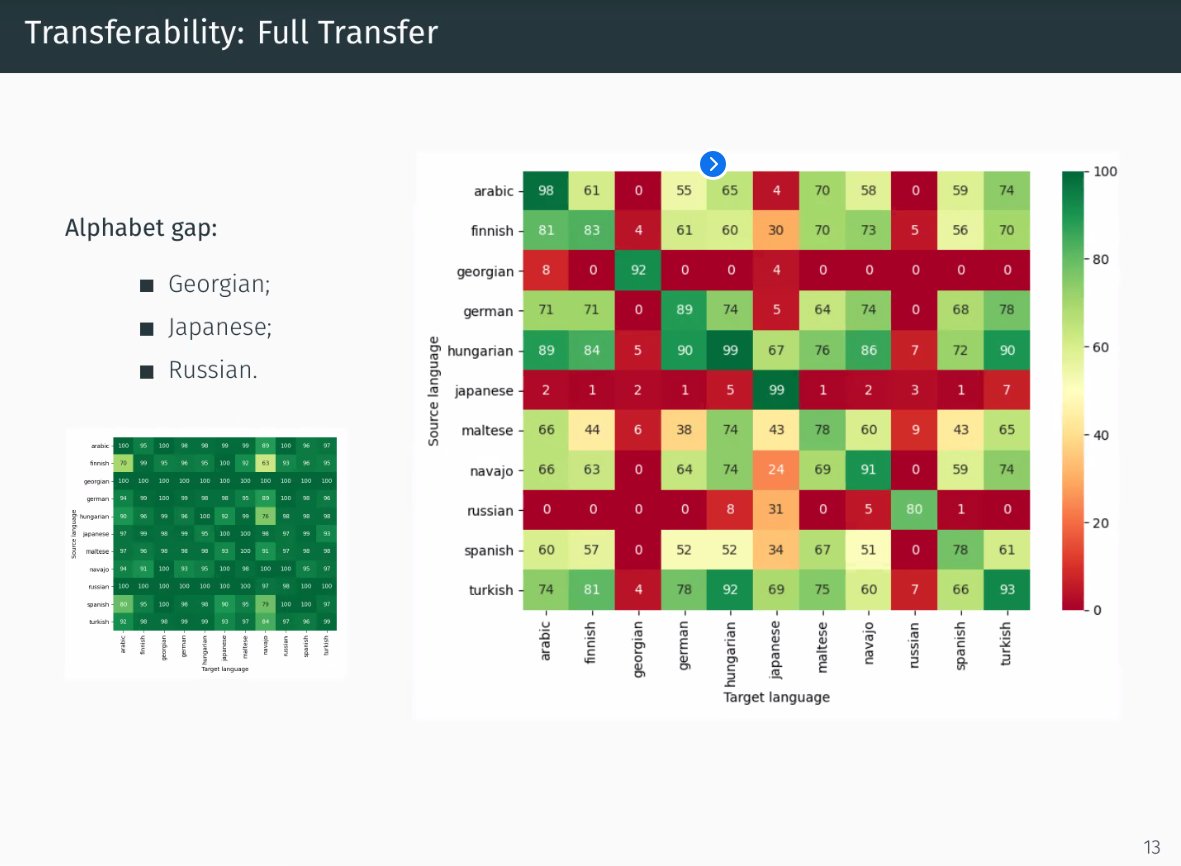

The first session of oral presentations has concluded, with very interesting talks from A. Himmelhuber (Siemens) and M. Couceiro (U. Lorraine) on GNN explanations and the transferability of analogies learned via DNNs. #AIMLAI @ECMLPKDD 2021. #xai #interpretableML

I've created a short demo in #rstats on how to enforce monotonic constraints in H2O #AutoML / Stacked Ensembles. #InterpretableML #xai Thanks to @Navdeep_Gill_ for the partial dependence plots! @h2oai 👉 gist.github.com/ledell/91beb92…
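Partial dependence, which the demo uses to visualize the constraints, is the model's average prediction as one feature is swept over a grid while the other features keep their observed values. The sketch below computes it in plain Python and checks whether the curve is non-decreasing, which is what an increasing monotonic constraint should produce. The toy model, data, and function names are hypothetical; this is not H2O's API.

```python
# Minimal partial dependence sketch (hypothetical model and data, not H2O's API).
from typing import Callable, List, Sequence

Row = List[float]


def partial_dependence(
    predict: Callable[[Sequence[Row]], List[float]],
    data: Sequence[Row],
    feature: int,
    grid: Sequence[float],
) -> List[float]:
    """Average prediction over `data` with column `feature` set to each grid value."""
    curve = []
    for value in grid:
        # Copy every row, overwriting only the feature of interest.
        modified = [row[:feature] + [value] + row[feature + 1:] for row in data]
        preds = predict(modified)
        curve.append(sum(preds) / len(preds))
    return curve


def is_non_decreasing(values: Sequence[float]) -> bool:
    """True if each value is at least as large as the one before it."""
    return all(a <= b for a, b in zip(values, values[1:]))


def toy_model(rows: Sequence[Row]) -> List[float]:
    # Hypothetical model: increasing in feature 0 (slope 2 + x1**2 > 0).
    return [2.0 * r[0] + r[0] * r[1] ** 2 - abs(r[1]) for r in rows]


data = [[0.0, 1.0], [1.0, -2.0], [2.0, 0.5]]
grid = [0.0, 0.5, 1.0, 1.5, 2.0]
pd_curve = partial_dependence(toy_model, data, feature=0, grid=grid)
print(pd_curve, is_non_decreasing(pd_curve))
```

The check only goes one way: averaging can hide non-monotone behavior in individual rows, so a rising partial dependence curve is consistent with an enforced constraint but does not prove it.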

#KDD2019 keynote speaker @CynthiaRudin on #InterpretableML: recidivism models all perform about the same, and complicated models are preferred because they are profitable #explainableAI @kdd_news

.@aghaei_sina will be presenting our paper on strong formulations for optimal classification trees tomorrow at #MIP2020 — joint work with the one and only @GomezAndres8 #MIPforMachineLearning #InterpretableML

#ECDA2018 @hnfpb @RealGabinator thanks! Nice talk and nice overview about explanation methods in #DNN. #InterpretableML

We have 2 open #PhD positions where fellows will work with 24/7 recordings of physical activity: one focusing on #InterpretableML and one on the risk of common non-communicable diseases. Plz share the post in your network & help us find talented students: s.ntnu.no/labda

#AIMLAI @ECMLPKDD 2021 has started. Right now Prof Zhou (@zhoubolei) from CUHK is giving a keynote highlighting the efforts of his team towards making deep AI models think like humans. #xai #interpretableml #ai

A sensitivity analysis of a regression model of ocean temperature @RachelaFurner @DanJonesOcean @EmilyShuckburgh et al → doi.org/10.1017/eds.20… #OceanTemperature #DataScience #InterpretableML #ML #Oceanography #RegressionModel #ClimateModels #ClimateScience #MachineLearning

Yes, we did it again :-) Benedikt Bönninghoff, Robert Nickel and I took 1st place again in the 2021 PAN@CLEF author identification challenge pan.webis.de/clef21/pan21-w… #interpretableML #machinelearning @HGI_Bochum @CASA_EXC @ika_rub @ruhrunibochum

NoiseGrad allows one to enhance local explanations of #ML models by introducing stochasticity to the weights. However, it can also improve global explanations! Check it out yourself! #XAI #InterpretableML github.com/understandable…
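As the tweet describes, NoiseGrad perturbs the model's weights rather than its inputs and aggregates the explanations of the perturbed models. The sketch below illustrates that idea under stated assumptions: multiplicative Gaussian weight noise with mean 1 and standard deviation `sigma`, a hypothetical one-layer logistic model f(x) = sigmoid(w · x), and the plain input gradient as the base explanation. Parameter values and function names are illustrative and are not taken from the paper's code.

```python
# NoiseGrad-style sketch: average an explanation over copies of the model whose
# weights are multiplied by Gaussian noise (assumed N(1, sigma**2)).
import math
import random
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(weights: Sequence[float], x: Sequence[float]) -> List[float]:
    """Gradient of sigmoid(w . x) with respect to x: sigmoid'(w . x) * w."""
    s = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
    return [s * (1.0 - s) * w for w in weights]


def noisegrad(
    weights: Sequence[float],
    x: Sequence[float],
    sigma: float = 0.2,
    n_samples: int = 50,
    seed: int = 0,
) -> List[float]:
    rng = random.Random(seed)
    total = [0.0] * len(weights)
    for _ in range(n_samples):
        # Multiplicative noise on every weight, then explain the perturbed model.
        noisy = [w * rng.gauss(1.0, sigma) for w in weights]
        for i, g in enumerate(input_gradient(noisy, x)):
            total[i] += g
    # Mean explanation over the noisy models.
    return [t / n_samples for t in total]


w = [1.5, -0.5, 0.0]
x = [0.2, 1.0, 3.0]
print(input_gradient(w, x))
print(noisegrad(w, x))
```

With `sigma=0.0` the result reduces to the ordinary gradient, and a weight that is exactly zero stays zero under multiplicative noise, so its attribution stays zero too.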

#shapleyvalue for #ML model `additive feature attribution` Semantics, pros, cons (shots from "#interpretableML book") Related concepts ⤷ #FeatureExtraction ⤷ #FeatureAttribution ⤷ #FeatureSelection ⤷ #ModelValidation Resources gist (growing): bit.ly/31XGNUs
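To make the "additive feature attribution" idea concrete, the sketch below computes exact Shapley values for a small hypothetical model by averaging each feature's marginal contribution over all coalitions of the other features. It assumes that an absent feature is replaced by a fixed baseline value; that is one convention among several (others average over a background dataset instead), and the function and model names are made up for this example.

```python
# Exact Shapley values by enumerating all coalitions; cost grows as 2**n,
# so this is only practical for a handful of features.
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def shapley_values(
    f: Callable[[List[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features in the coalition take their value from x; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def model(z: List[float]) -> float:
    # Hypothetical model: one additive term plus one interaction.
    return 3.0 * z[0] + 2.0 * z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
```

Up to floating-point rounding, feature 0 receives its additive contribution of 3 and the interaction term of 12 is split equally between features 1 and 2 (6 each). The attributions sum to f(x) - f(baseline) = 15, which is the efficiency property behind the additive view.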

2nd release this week: version 0.2 of the interpretable ML book 💪 christophm.github.io/interpretable-… New chapters: - shapley value - on explanations - short stories Reworked: - RuleFit - interpretability And I could use some help drawing cats: bit.ly/2spQDRL 🐱

Create better pictograms for explaining the Shapley value · Issue #20 · christophM/interpretable-...

They are hand drawn and I am not super happy with them. Help to improve those is appreciated. The location in the book: https://github.com/christophM/interpretable-ml-book/blob/master/chapters/05.6...

RT SHAP for Categorical Features dlvr.it/SSYRVn #interpretableml #machinelearning #shap #datascience #explainableai
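Because Shapley-based attributions are additive, one common way to report a single attribution for a one-hot-encoded categorical feature is to sum the SHAP values of its indicator columns. This is a general sketch, not necessarily the method of the linked article. It assumes one-hot columns named `<feature>=<level>` (a hypothetical naming scheme) and SHAP values for one row already available as a dict; the numbers are invented.

```python
# Collapse per-column SHAP values for one row into one value per original feature.
from collections import defaultdict
from typing import DefaultDict, Dict


def group_one_hot(shap_row: Dict[str, float], sep: str = "=") -> Dict[str, float]:
    grouped: DefaultDict[str, float] = defaultdict(float)
    for column, value in shap_row.items():
        # "color=red" -> "color"; columns without the separator keep their name.
        feature = column.split(sep, 1)[0]
        grouped[feature] += value
    return dict(grouped)


# Hypothetical SHAP values for a single row (illustrative numbers only).
row = {"age": 0.30, "color=red": 0.10, "color=blue": -0.05, "color=green": 0.0}
print(group_one_hot(row))
```

Summing leaves the row's total attribution unchanged, so the grouped values still add up to the same offset from the model's expected output.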

Paper: nature.com/articles/s4200… Code: github.com/ohsu-cedar-com… #InterpretableML #AIinCancer #SingleCell #MultiOmics #InterpretableAI #CancerGenomics #OpenScience #Bioinformatics #ComputationalBiology #MachineLearning

#CVPR2025 #TrustworthyML #InterpretableML #GenAI #AI #MachineLearning #AIResearch #DeepLearning #ComputerVision

Our #ICLR paper, “Efficient & Accurate Explanation Estimation with Distribution Compression” made the top 5.1% of submissions and was selected as a Spotlight! Congrats to the first author @hbaniecki #xAI #interpretableML Paper: arxiv.org/abs/2406.18334

🚨 Are you at #INFORMS2024? Don't miss our session on Emerging Trends in Interpretable Machine Learning today at 2:15 PM! 🌟 Our speakers will dive into theoretical and applied aspects of interpretability and model multiplicity. 💡#InterpretableML #TrustworthyAI #Multiplicity

Special thanks to our supporting institutions: @UMI_Lab_AI @unipotsdam @LeibnizATB @bifoldberlin @FraunhoferHHI @TUBerlin #InterpretableML #MechInterp #ExplainableAI

#ICML #BayesianDeepLearning #InterpretableML #FoundationModel Ever wondered what concepts vision foundation models (e.g., ViTs) learn and use to make predictions?

🚀Just published our new paper in @EarthsFutureEiC! 🌍 We propose how #InterpretableML can be more broadly and effectively integrated into geoscientific research, highlighting key do's and don'ts when using IML for process understanding. Check it out: agupubs.onlinelibrary.wiley.com/doi/full/10.10…

#BayesianDeepLearning #InterpretableML #ConceptInterpretation #HumanAICollaboration Achieving the balance between accuracy and interpretability in machine learning models is a notable challenge. Models that are accurate often lack interpretability,

🌟 Seeking Postdoc Position in Interpretable Machine Learning! 🤖🔍 Strong ML background, eager to contribute to cutting-edge research. Looking for opportunities to collaborate and make an impact. #InterpretableML #Postdoc #AIResearch #phdchat

Two of my PRs are now merged into PySR: you can now use the "min", "max", and "round" operators without any explicit SymPy mapping. PySR is a Python interface to a Julia backend for symbolic regression #interpretableML github.com/MilesCranmer/P…

GitHub - MilesCranmer/PySR: High-Performance Symbolic Regression in Python and Julia

We have in mind several directions to further investigate the topic in the future and we are excited about it, so we encourage and welcome any feedback or exchange of ideas on the paper’s topic! #deeplearning #explainableAI #interpretableML /5

#ICML2023 #BayesDL #InterpretableML Can we train self-interpretable time series models that generate actionable explanations? Come check out our Counterfactual Time Series (CounTS) in oral session C2, 3:00-4:30 pm on July 27, and in the poster session, 11:00 am-1:30 pm on July 25, Hall 1.

2️⃣ "Interpretable Machine Learning: A Guide for Making Black Box Models Explainable" by Christoph Molnar. Explore techniques to understand and interpret complex machine learning models, ensuring transparency and trust in AI systems. #InterpretableML #ExplainableAI

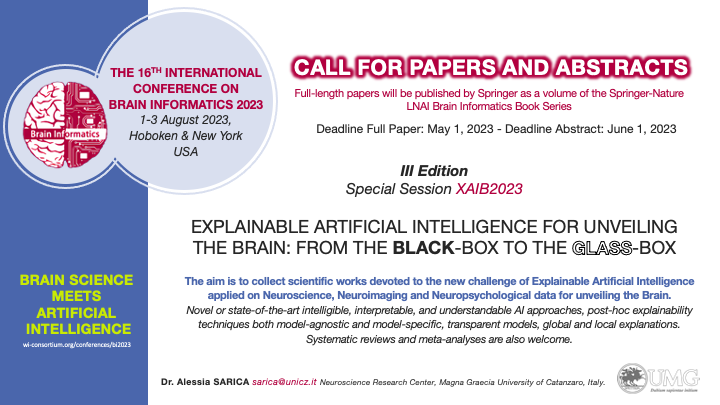

CALL FOR PAPERS AND ABSTRACTS “Explainable Artificial Intelligence For Unveiling The Brain: From The Black-Box To The Glass-Box” BrainInformatics2023 #explainableAI #explainableML #interpretableML #XAI #ArtificialIntelligence #MachineLearning #neuroscience #neuroimaging #brain

The application of machine learning (ML) models is growing exponentially, but I have run into this several times. #Data #InterpretableML #BlackBoxModels #ArtificialIntelligence

RT AI Explainability Requires Robustness dlvr.it/SDtxqw #machinelearning #explainableai #interpretableml #adversarialattack

Interpreting predictions made by a black box model with SHAP: bit.ly/3iO1vPT. Amazing work @scottlundberg! This kind of tool opens a whole new world of possibilities for practical AI applications. #interpretableML #MachineLearning #DataScience #SHAP #Python

RT Analysing NYC Yellow Taxi Trip Records with InterpretML dlvr.it/Sgs913 #interpretableai #interpretableml #datascience #machinelearning

RT The Relationship between Interpretability and Fairness dlvr.it/SS655s #interpretableml #datascience #explainableai #algorithmfairness
