#blackboxnlp search results

Modern neural networks can generalize compositionally given the correct dataset. How is this possible? If you're attending #blackboxnlp today at #emnlp, stop by our poster on "Discovering the Compositional Structure of Vector Representations" at 1:30-3p and 7-8:30p.

Cute bracketing and an important question in Leila Wehbe's talk (Language representations in human brains and artificial neural networks). I'm partial to the answer "definitely!" #BlackboxNLP #emnlp2018

Does multilingual BERT know how to translate? Can we neutralize language from its representations? Check out our new #BlackboxNLP paper: "It’s not Greek to mBERT: Inducing Word-Level Translations from Multilingual BERT", joint work with @ravfogel @yanaiela and @yoavgo (1/4)

One of the first papers I contributed to was published at #BlackboxNLP 📃, what an honor to, 6 years later, give a keynote at this wonderful venue 🧡 Thanks for having me! #EMNLP2025

Our second keynote of the day has just started! @vernadankers is now presenting "Memorization: Myth or Mystery?"

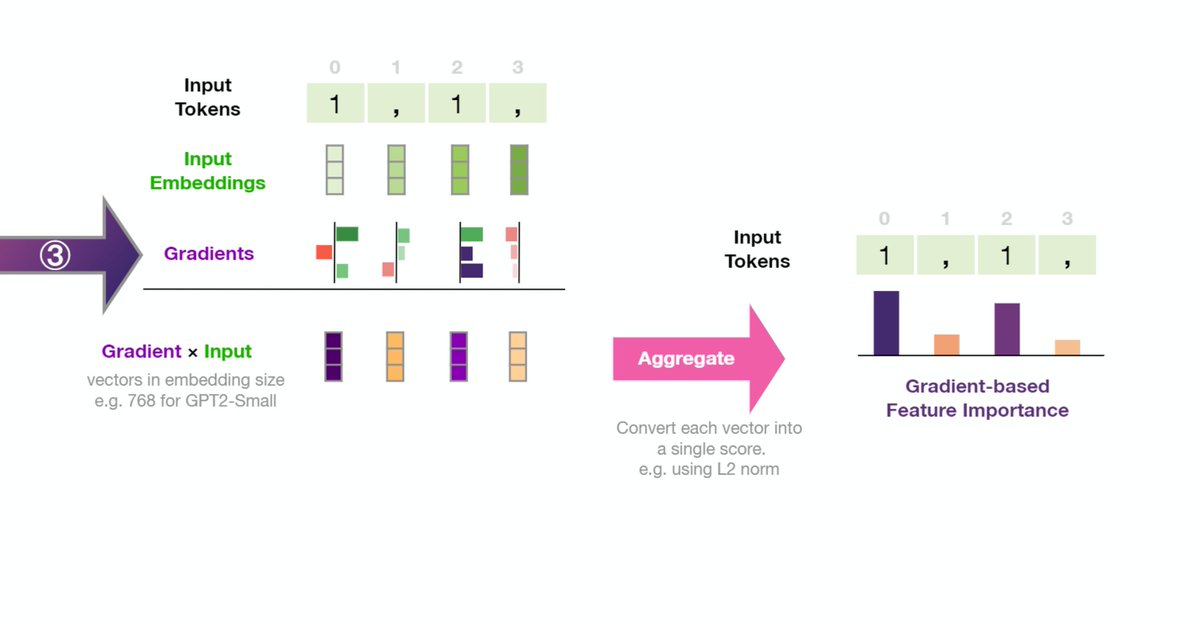

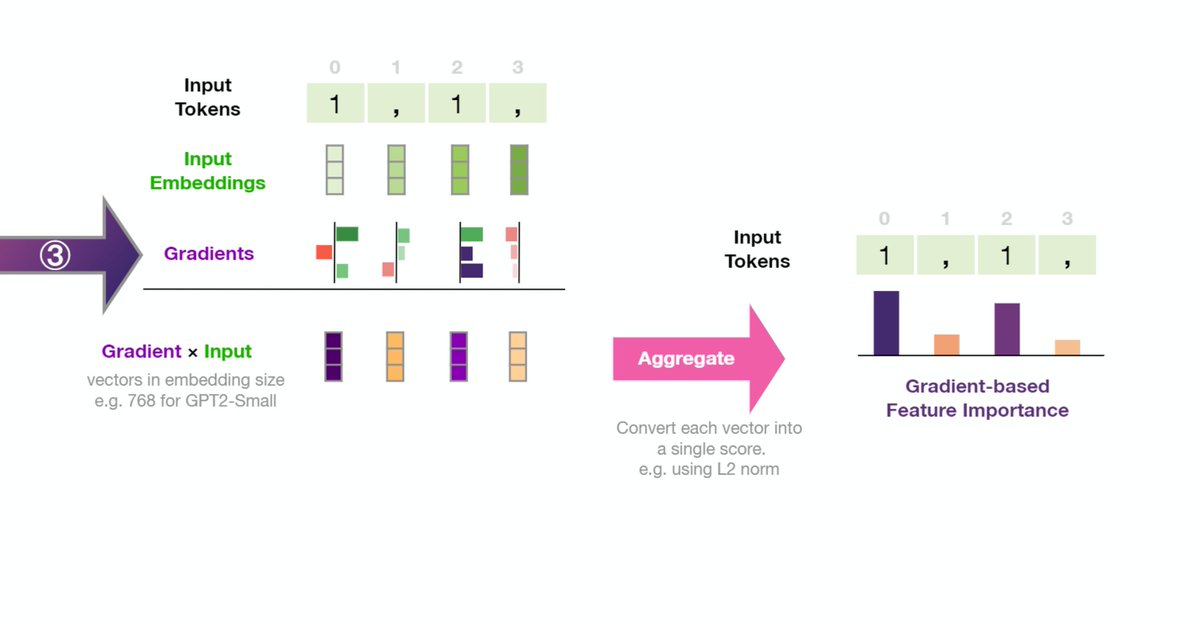

So many fascinating ideas at yesterday's #blackboxNLP workshop at #emnlp2020. Too many bookmarked papers. Some takeaways: 1- There's more room to adopt input saliency methods in NLP. With Grad*input and Integrated Gradients being key gradient-based methods.

RNN LMs can learn some but not all island constraints (E. Wilcox, R. Levy, T. Morita & R. Futrell). Subject islands are hard. Yet unanswered Q (paraphrased M. Baroni): does gradience suggest islands aren't grammatical, but are instead likely processing? #BlackboxNLP #emnlp2018

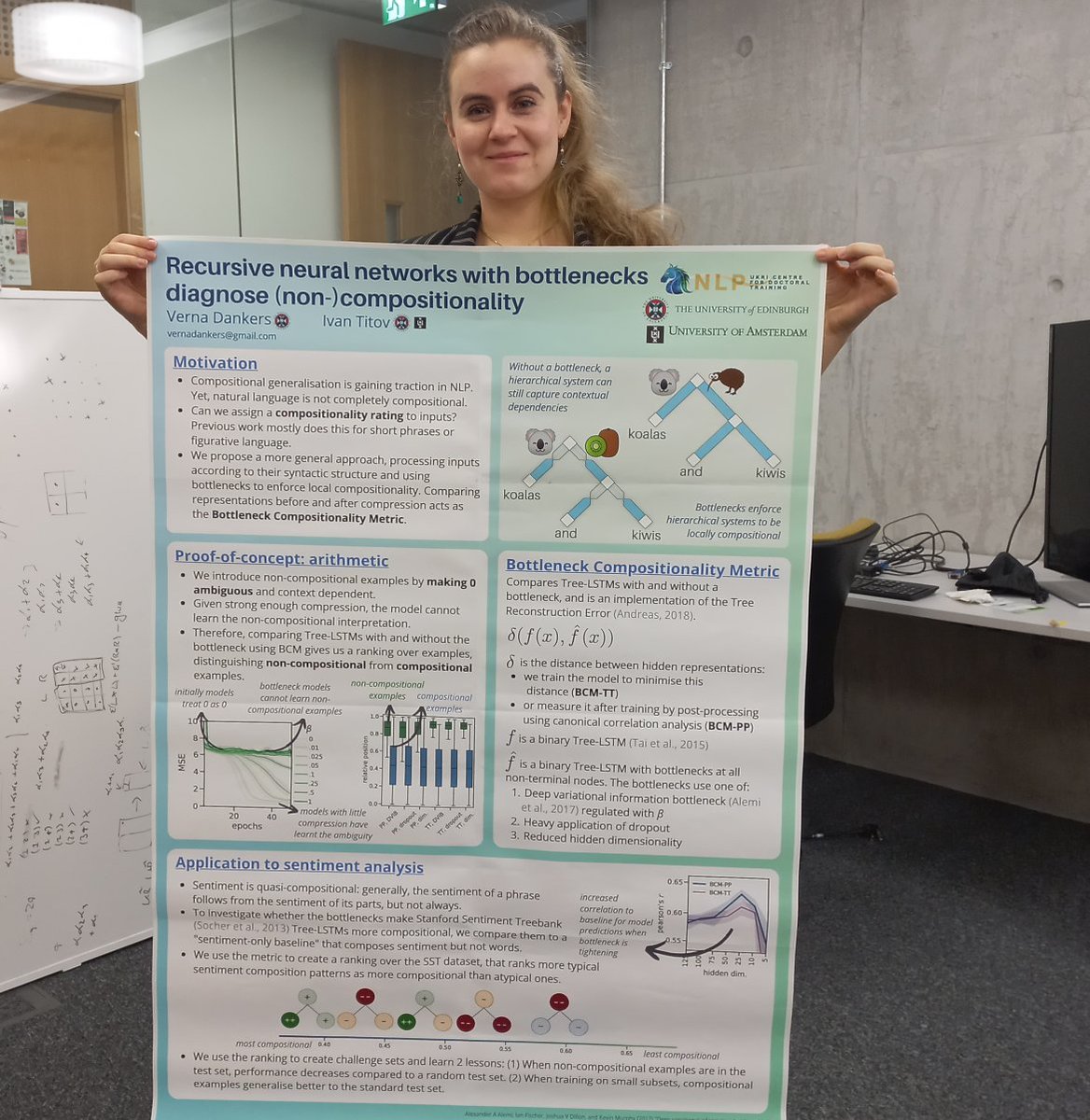

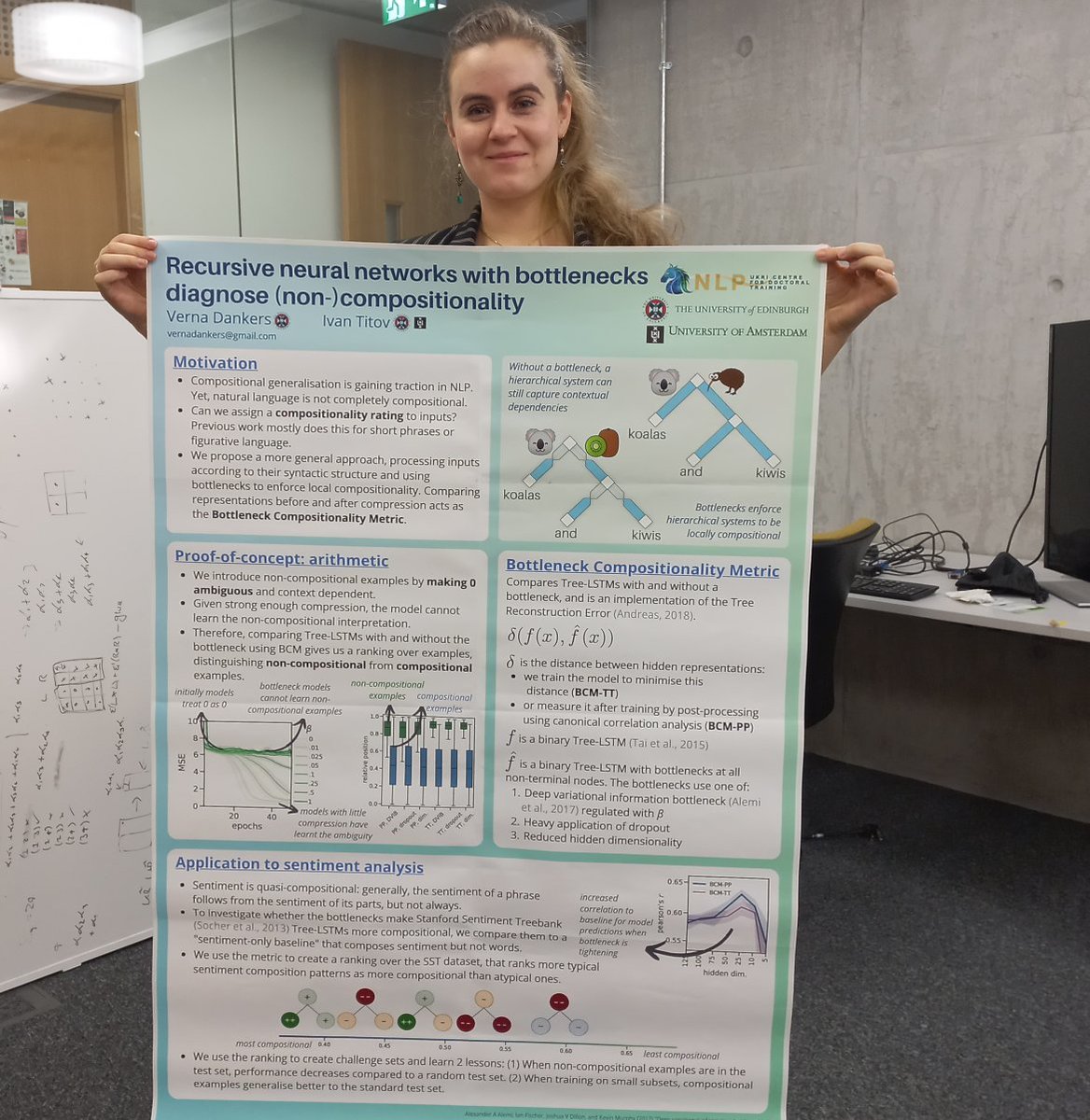

Coming to you live from Edinburgh, my #emnlp2022 Findings poster and I, during the virtual poster session of #BlackboxNLP at 4PM in Abu Dhabi (noon UTC). Find me at stand 492 in Gathertown to learn more about the Bottleneck Compositionality Metric @iatitov and I propose!

You can remove most of the question's words and still get a correct answer on SQuAD #EMNLP2018 #blackboxnlp

Heading to the #EMNLP2025 @BlackboxNLP Workshop this Sunday? Don’t miss @nfelnlp and @lkopf_ml's poster on "Interpreting Language Models Through Concept Descriptions: A Survey" 📄 aclanthology.org/2025.blackboxn… 📅 Nov 9, 11:00-12:00 @ Hall C #BlackboxNLP #XAI #Interpretapility

WHAT COULD THEY POSSIBLY BE USING INSTEAD OF DIAGNOSTIC CLASSIFIERS??? Drop by my poster at #BlackboxNLP and find out!

Check out our new #BlackboxNLP paper "What Does BERT Look At? An Analysis of BERT's Attention" with @ukhndlwl @omerlevy @chrmanning! arxiv.org/abs/1906.04341 Among other things, we show that BERT's attention corresponds surprisingly well to aspects of syntax and coreference.

Presenting a poster today at #BlackboxNLP? You are welcome to present in either poster session, or both! See the full program on our website: blackboxnlp.github.io/2025/

blackboxnlp.github.io

BlackboxNLP 2025

The Eighth Workshop on Analyzing and Interpreting Neural Networks for NLP

Presenting tomorrow at #BlackboxNLP? Share your papers and posters in the comments 👇

Only 3 days left for direct submissions to #BlackboxNLP, don't miss it! 🚀

📢 Call for Papers! 📢 #BlackboxNLP 2025 invites the submission of archival and non-archival papers on interpreting and explaining NLP models. 📅 Deadlines: Aug 15 (direct submissions), Sept 5 (ARR commitment) 🔗 More details: blackboxnlp.github.io/2025/call/

Submit your work to #BlackboxNLP 2025!

Just 5 days left to submit your method to the MIB Shared Task at #BlackboxNLP! Have last-minute questions or need help finalizing your submission? Join the Discord server: discord.gg/n5uwjQcxPR

📝 Technical report guidelines are out! If you're submitting to the MIB Shared Task at #BlackboxNLP, feel free to take a look to help you prepare your report: blackboxnlp.github.io/2025/task/

Also it has been an honor to contribute to the CL community as an Area Chair/Reviewer, co-organizer of #BlackboxNLP (2023–2025), and tutorial presenter at #EACL2024 (and upcoming #Interspeech2025).

Just 10 days to go until the results submission deadline for the MIB Shared Task at #BlackboxNLP! If you're working on: 🧠 Circuit discovery 🔍 Feature attribution 🧪 Causal variable localization now’s the time to polish and submit! Join us on Discord: discord.gg/n5uwjQcxPR

⏳ Three weeks left! Submit your work to the MIB Shared Task at #BlackboxNLP, co-located with @emnlpmeeting Whether you're working on circuit discovery or causal variable localization, this is your chance to benchmark your method in a rigorous setup!

Working on feature attribution, circuit discovery, feature alignment, or sparse coding? Consider submitting your work to the MIB Shared Task, part of this year’s #BlackboxNLP We welcome submissions of both existing methods and new or experimental POCs!

The wait is over! 🎉 Our speakers for #BlackboxNLP 2025 are finally out!

🚨 Excited to announce two invited speakers at #BlackboxNLP 2025! Join us to hear from two leading voices in interpretability: 🎙️ Quanshi Zhang (Shanghai Jiao Tong University) 🎙️ Verna Dankers (McGill University) @vernadankers @QuanshiZhang

Working on circuit discovery? Consider submitting your work to the MIB Shared Task, part of #BlackboxNLP, co-located with @emnlpmeeting 🔍 The goal: benchmark existing MI methods and identify promising directions to precisely and concisely recover causal pathways in LMs >>

Have you heard about our shared task? 📢 Mechanistic Interpretability (MI) is quickly advancing, but comparing methods remains a challenge. This year, as a part of #BlackboxNLP at @emnlpmeeting, we're introducing a shared task to rigorously evaluate MI methods in LMs 🧵

Since people have been asking - the #blackboxNLP workshop will return this year, to be held with #emnlp2025. This workshop is all about interpreting and analyzing NLP models (and yes, this includes LLMs). More details soon, follow @BlackboxNLP

Excited about the release of MIB, a mechanistic Interpretability benchmark! Come talk to us at #iclr2025 and consider submitting to the leaderboard. We’re also planning a shared task around it at #blackboxNLP this year, located with #emnlp2025

Lots of progress in mech interp (MI) lately! But how can we measure when new mech interp methods yield real improvements over prior work? We propose 😎 𝗠𝗜𝗕: a Mechanistic Interpretability Benchmark!

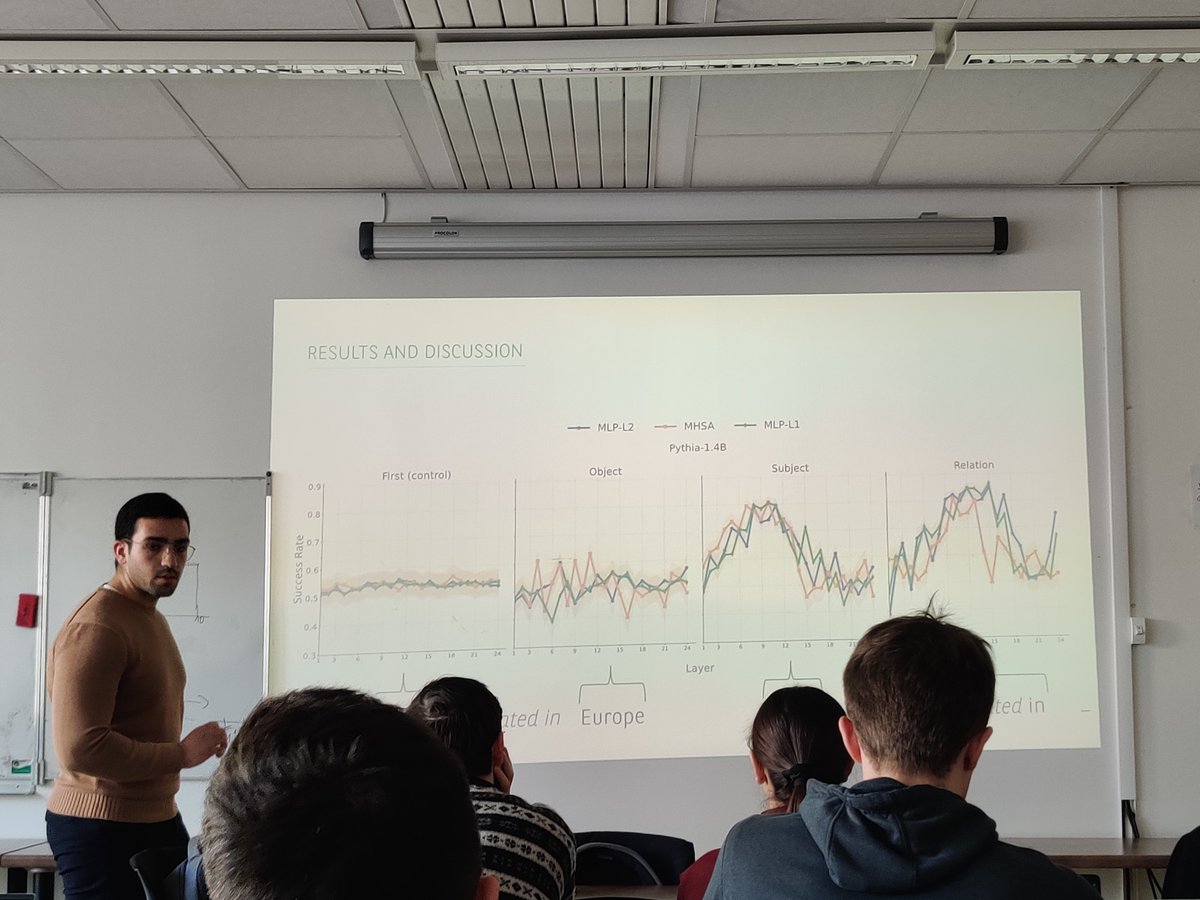

Today, Zineddine TIGHIDET (@Zineddine___t) presented his work on Probing Language Models on Their Knowledge Source, published in the BlackboxNLP workshop at EMNLP! 👏 Check it out here: arxiv.org/pdf/2410.05817 #EMNLP #BlackboxNLP

Machine rationales are generated to explain LM behavior, but to what extent can they be *utilized* to improve LMs’ OOD generalization? 🤔 Our 🏥ER-Test paper (#EMNLP2022 Findings + #BlackboxNLP) investigates this question! 🔍 🧵👇 [1/n]

![BrihiJ's tweet image](https://pbs.twimg.com/media/FjZ7AAYVIAEnSYZ.jpg)

just finished presenting my poster:) "Can neural networks understand monotonicity reasoning?" paper: aclweb.org/anthology/W19-… data (coming soon!) : github.com/verypluming/MED at fantastic #BlackboxNLP #ACL2019 !

Happy to share our paper "Unsupervised Distillation of Syntactic Information from Contextualized Word Representations", accepted to #BlackboxNLP - a joint work with @yanaiela*, Jacob Goldberger and @yoavgo. arxiv.org/abs/2010.05265 (1/4)
