#convolutionalvoltammetry search results

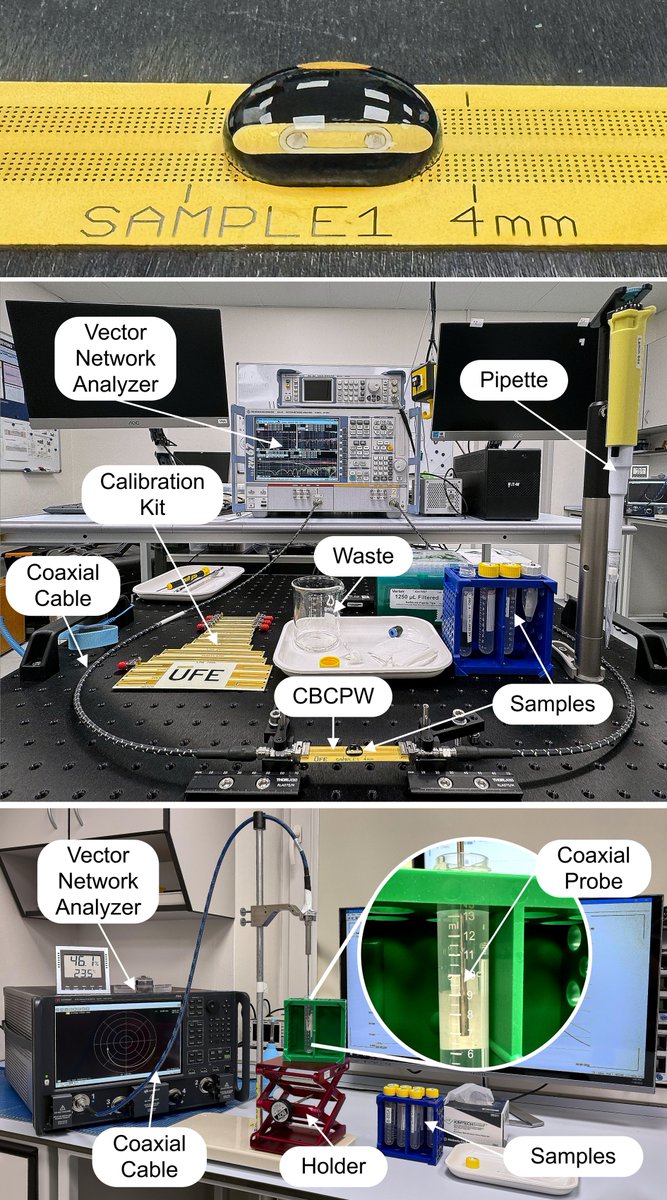

🔊🔬⚡We validated our new complex-permittivity extraction method using a simple, robust PCB-based CBCPW—no microfluidics needed. Testing amino-acid solutions up to 50 GHz showed highly reliable, repeatable results.🔎📖👉ieeexplore.ieee.org/document/10973…

🔊🔬⚡In our recently published work, we present a fast, broadband method to extract complex #dielectric #permittivity from μL-scale #biomolecular samples using a CBCPW. It offers accurate, sample-efficient characterization for #biomedical #research. 🔎📖👉ieeexplore.ieee.org/document/10973…

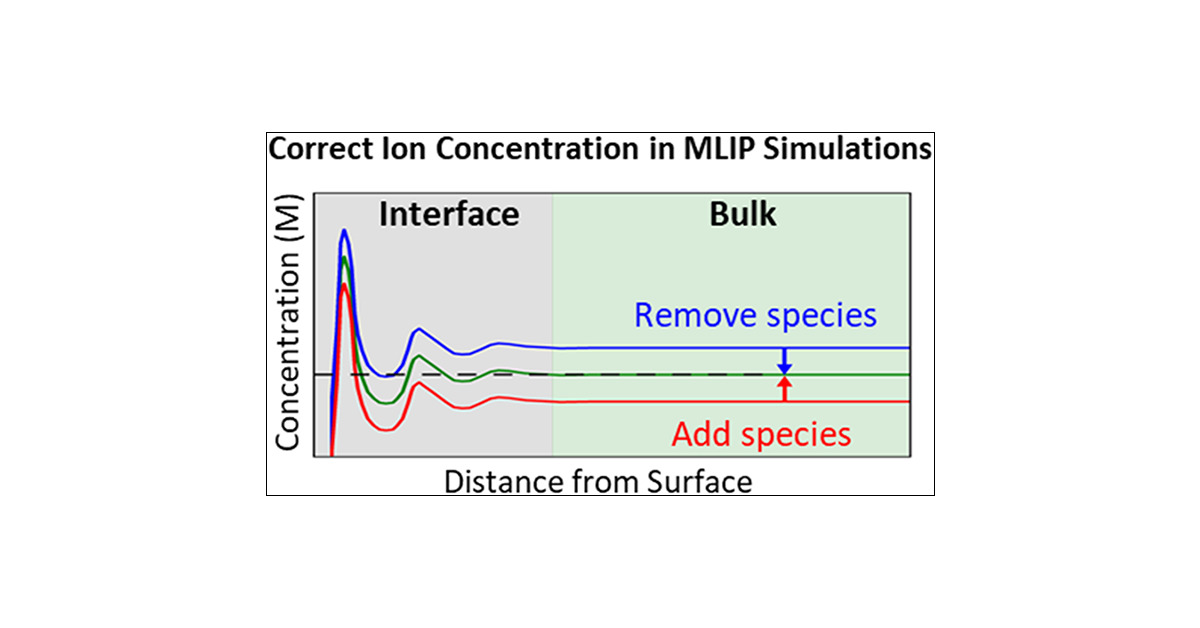

Interfacial ion concentrations can differ from bulk concentrations, which affects electrocatalysis, among other things. We show a simple method to connect to the correct bulk concentration in interface simulations using machine learning interatomic potentials. pubs.acs.org/doi/10.1021/ac…

also like voltage to build sustained pressure for flow of electrons all the way down to less charged area

The variances add like resistors in parallel (convolution behaves like resistors in series). As a result, the posterior ends up with lower variance than both the prior and the observation!
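A minimal sketch of the claim above, assuming one-dimensional Gaussians: multiplying two Gaussian densities (prior times likelihood) combines variances like resistors in parallel, while convolving them (adding independent variables) combines variances like resistors in series. The function names and the example numbers are illustrative, not from the original post.

```python
def product_variance(var_a, var_b):
    """Variance of the renormalised product of two Gaussian densities
    (resistors in parallel: precisions add)."""
    return 1.0 / (1.0 / var_a + 1.0 / var_b)


def convolution_variance(var_a, var_b):
    """Variance of the convolution of two Gaussian densities
    (resistors in series: variances add)."""
    return var_a + var_b


# Hypothetical example values
prior_var, obs_var = 4.0, 1.0
post_var = product_variance(prior_var, obs_var)
sum_var = convolution_variance(prior_var, obs_var)

print(post_var)  # smaller than both 4.0 and 1.0
print(sum_var)   # larger than both
```

Because precisions (inverse variances) add in the product, the posterior variance is always below the smaller of the two inputs.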

Oldies but goldies: B Cabral, L C Leedom, Imaging Vector Fields Using Line Integral Convolution, 1993. Line integral convolution is an anisotropic filtering which averages values along streamlines. Can be used for visualization of vector fields by diffusing noise.…

Daily Deep Learning! Time ~ 8:44
- Convolutions with Multiple Input / Output Channels
- 1x1 Convolutions as Dimensionality Reduction
- MaxPool, AvgPool, StochasticPool, FractionalMaxPool
- More Eigenvalues/Eigenvectors/Eigendecomposition
- SVD, A = UΣVᵀ
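To make the "1x1 convolutions as dimensionality reduction" item concrete, here is a small pure-Python sketch. It assumes a channels-first layout `[C_in][H][W]` and a hypothetical helper `conv1x1`; a 1x1 convolution is then just a per-pixel linear map across channels, so picking fewer output channels than input channels reduces the channel dimension.

```python
def conv1x1(x, weights):
    """Apply a 1x1 convolution.

    x:       nested list shaped [C_in][H][W]
    weights: nested list shaped [C_out][C_in]
    returns: nested list shaped [C_out][H][W]
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    return [
        [
            [sum(w_row[c] * x[c][i][j] for c in range(c_in)) for j in range(w)]
            for i in range(h)
        ]
        for w_row in weights
    ]


# Illustrative 3-channel, 2x2 input
x = [
    [[1, 2], [3, 4]],
    [[10, 20], [30, 40]],
    [[100, 200], [300, 400]],
]
# Two output channels: the sum of all inputs, and a copy of channel 2
weights = [[1, 1, 1], [0, 0, 1]]

y = conv1x1(x, weights)
print(len(x), "->", len(y), "channels")
print(y[0])
```

The spatial size is untouched; only the channel count changes from 3 to 2.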

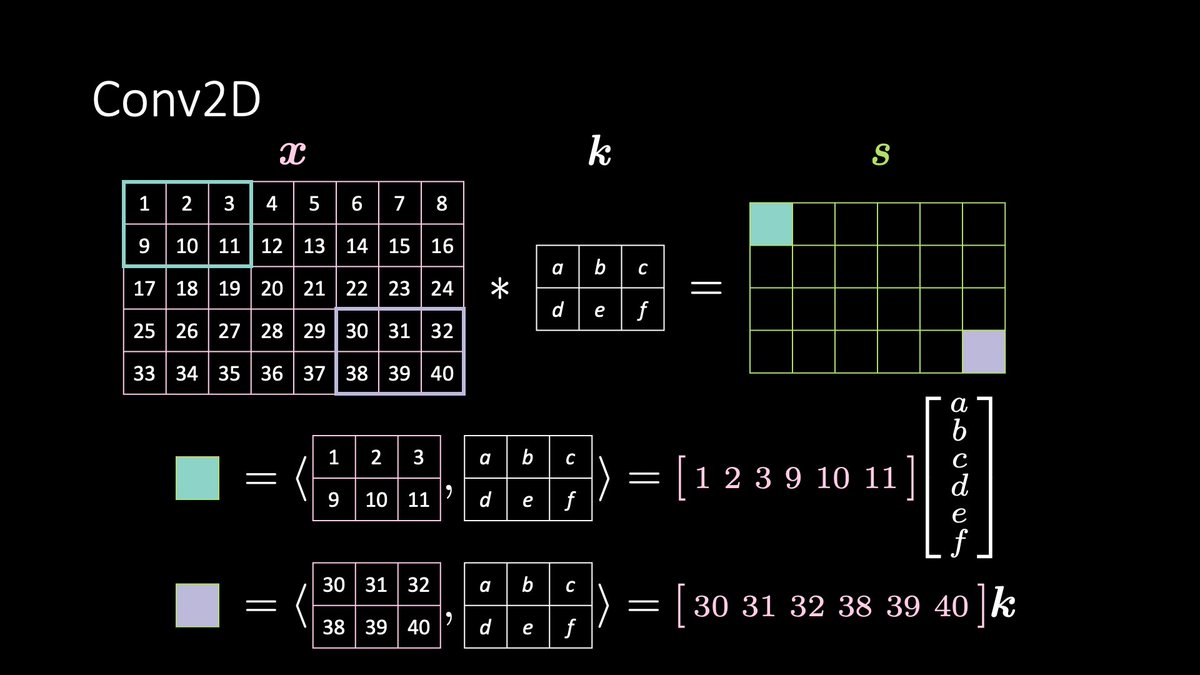

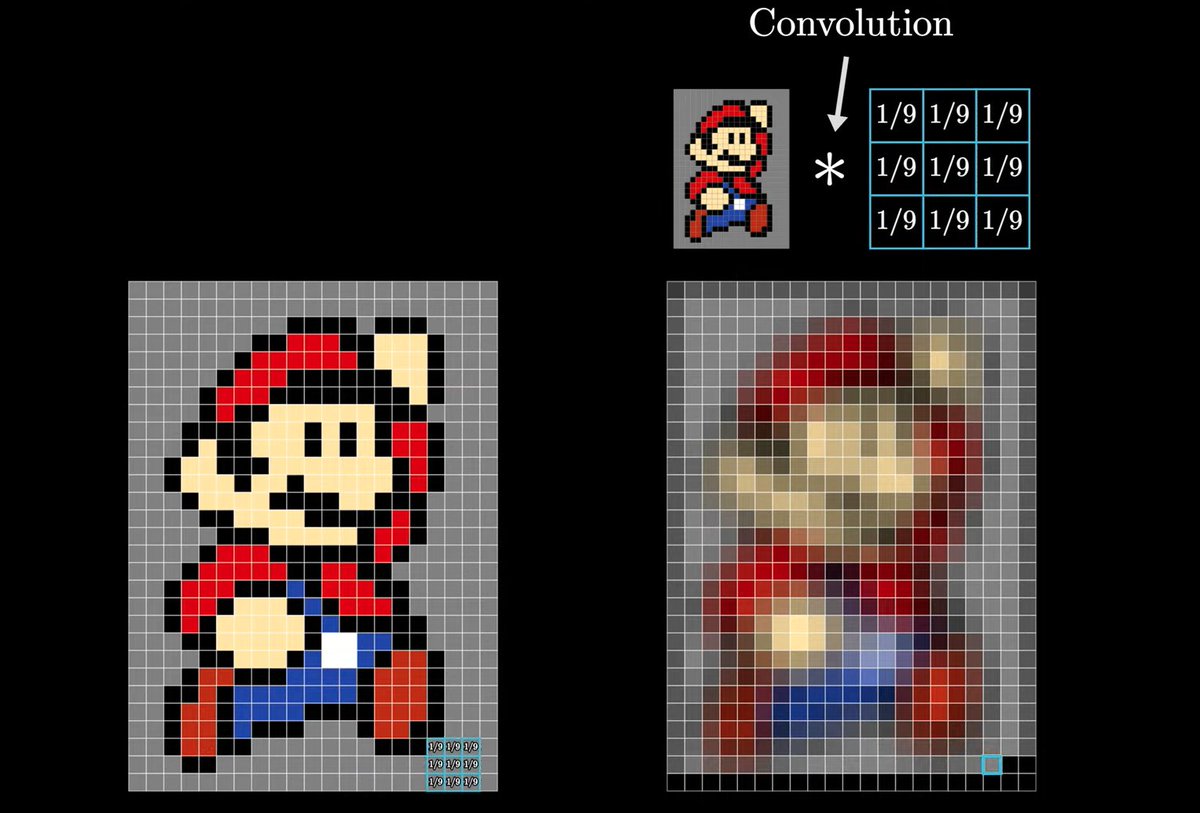

Deep Learning Math: Convolutions. A mathematical procedure used in image processing and neural networks, particularly CNNs. Convolutions are useful tools for analyzing and manipulating visual data because we can apply different kernels to enhance different aspects of an image,

Thought experiment: ViTs work great for 224^2 images, but what if you had a 1 million^2 pixel one? You'd either use conv, or you'd patchify and process each patch with a ViT using shared weights, which is essentially conv. The moment I realized a convnet isn't an architecture; it's a way of thinking.

A short post on the best architectures for real-time image and video processing. TL;DR: use convolutions with stride or pooling at the low levels, and stick self-attention circuits at higher levels, where feature vectors represent objects. PS: ready to bet that Tesla FSD uses…

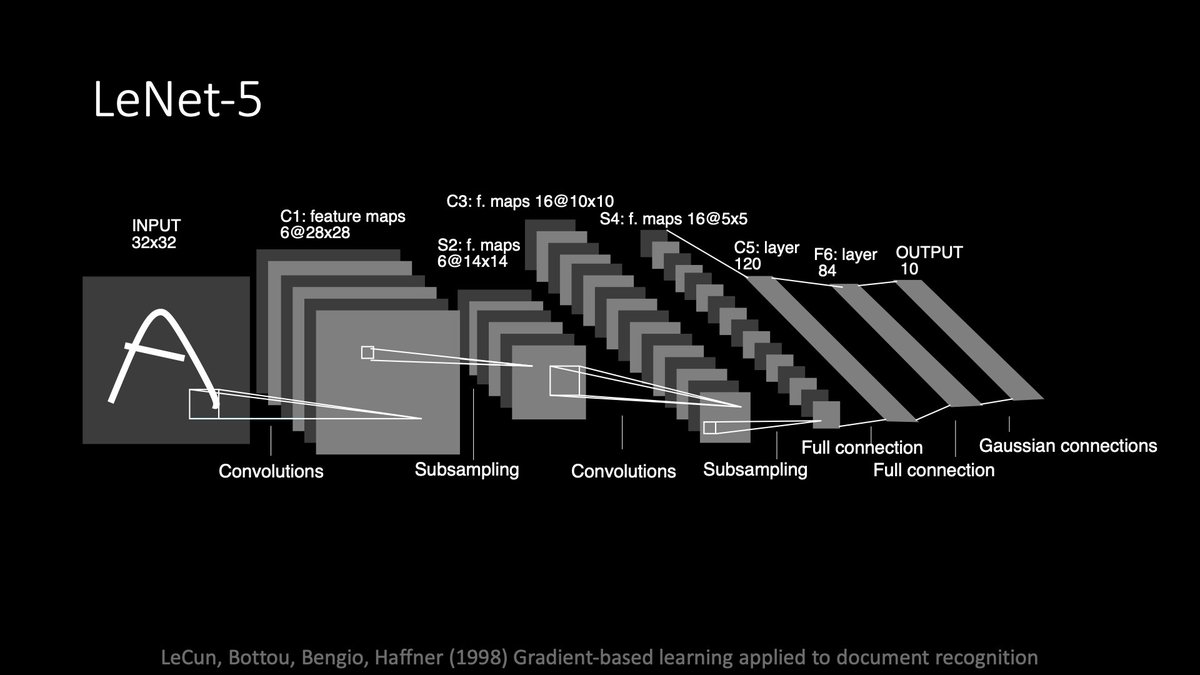

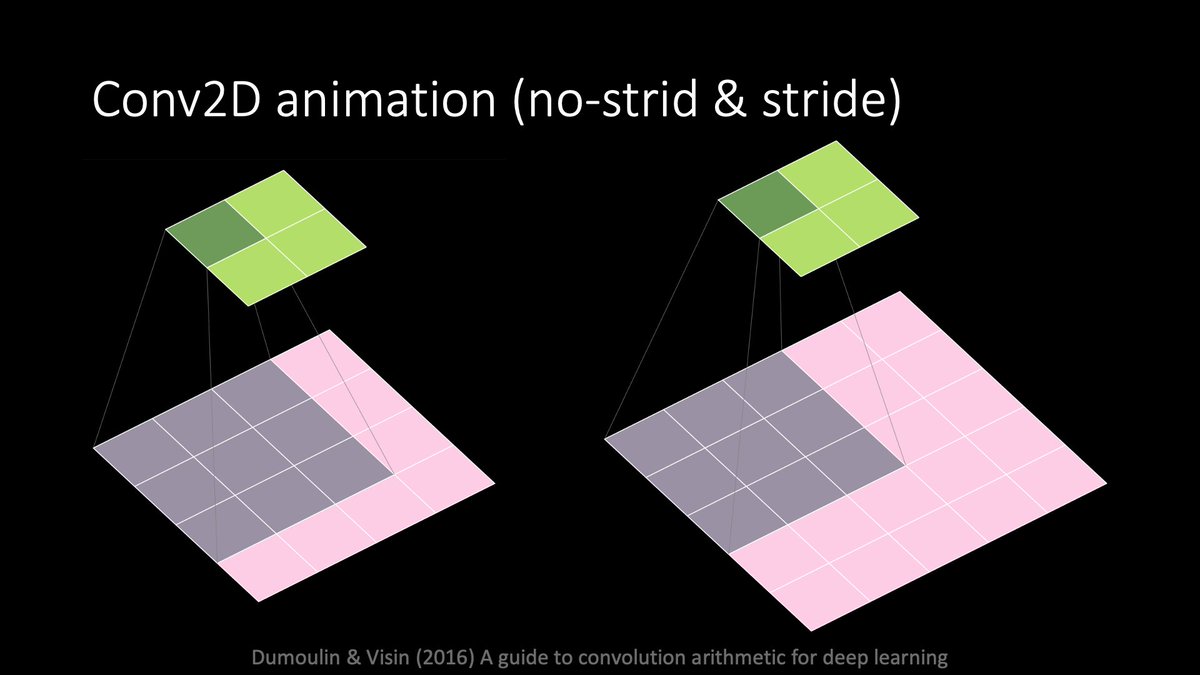

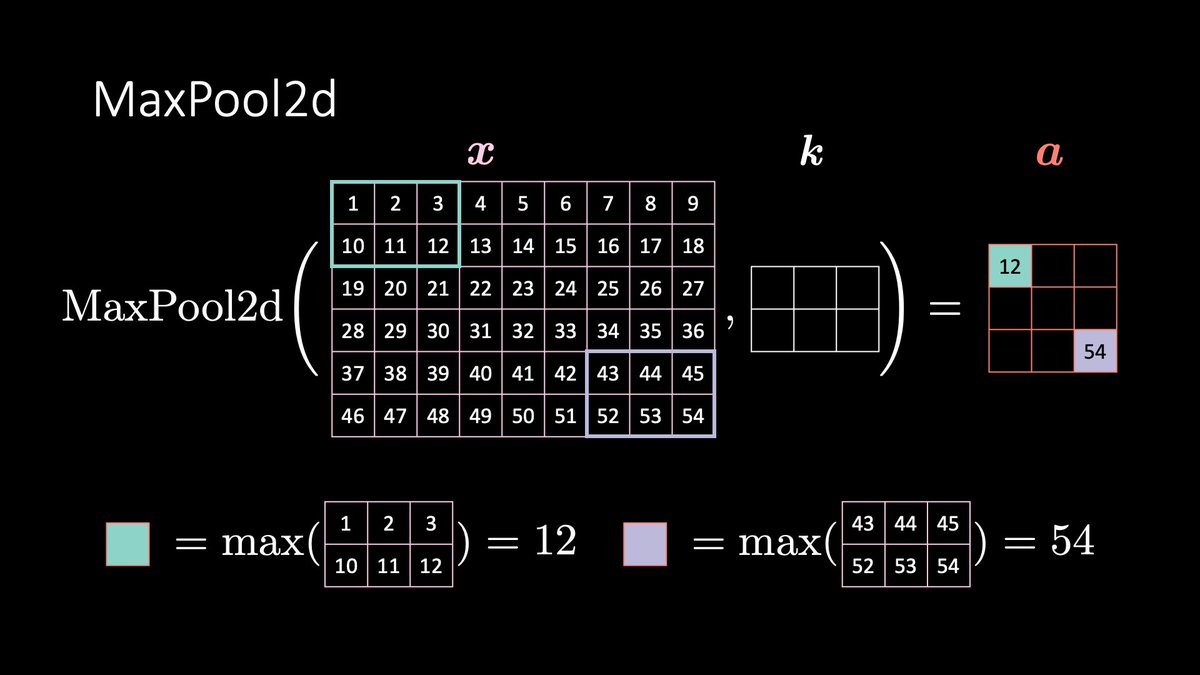

Convolutional nets iterate 2D convolutions, non-linearities, and 2D max-pooling. 🤓 The convolution, a ‘running’ projection of a portion of the image onto the kernel, is a form of pattern matching. The max-pool preserves the maximum match while decimating (subsampling) the image.
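The pipeline described above can be sketched in plain Python, under a few assumptions: a single channel, no padding, stride 1, and (as in most deep learning libraries) a "convolution" that is really cross-correlation with no kernel flip. The image and kernel are made up for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image and take the dot product at each
    position (cross-correlation, 'valid' region only)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise non-linearity."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """Keep the strongest match in each 2x2 block, halving the resolution."""
    return [
        [
            max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
            for j in range(0, len(fmap[0]) - 1, 2)
        ]
        for i in range(0, len(fmap) - 1, 2)
    ]


# A 5x5 image containing one bright 2x2 blob
image = [
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
kernel = [[1, 1], [1, 1]]  # pattern to match: a 2x2 bright blob

matches = relu(conv2d_valid(image, kernel))  # 4x4 map of match scores
pooled = max_pool2x2(matches)                # 2x2 map after decimation
print(matches)
print(pooled)
```

The largest score sits where the kernel overlaps the blob exactly, and pooling keeps that score while discarding its precise position within the 2x2 block.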

- Potentiometry - measures difference in voltage at a constant current
- Coulometry - measures electricity at a fixed potential
- Amperometry - measures current flow produced by an oxidation-reduction reaction
- Voltammetry - measures current after a potential is applied

Convolution is used in many applications of science, engineering, maths, and notably in deep learning. @3blue1brown's explanation of convolutions is the best I've seen. Wow! You should check this one out. youtu.be/KuXjwB4LzSA

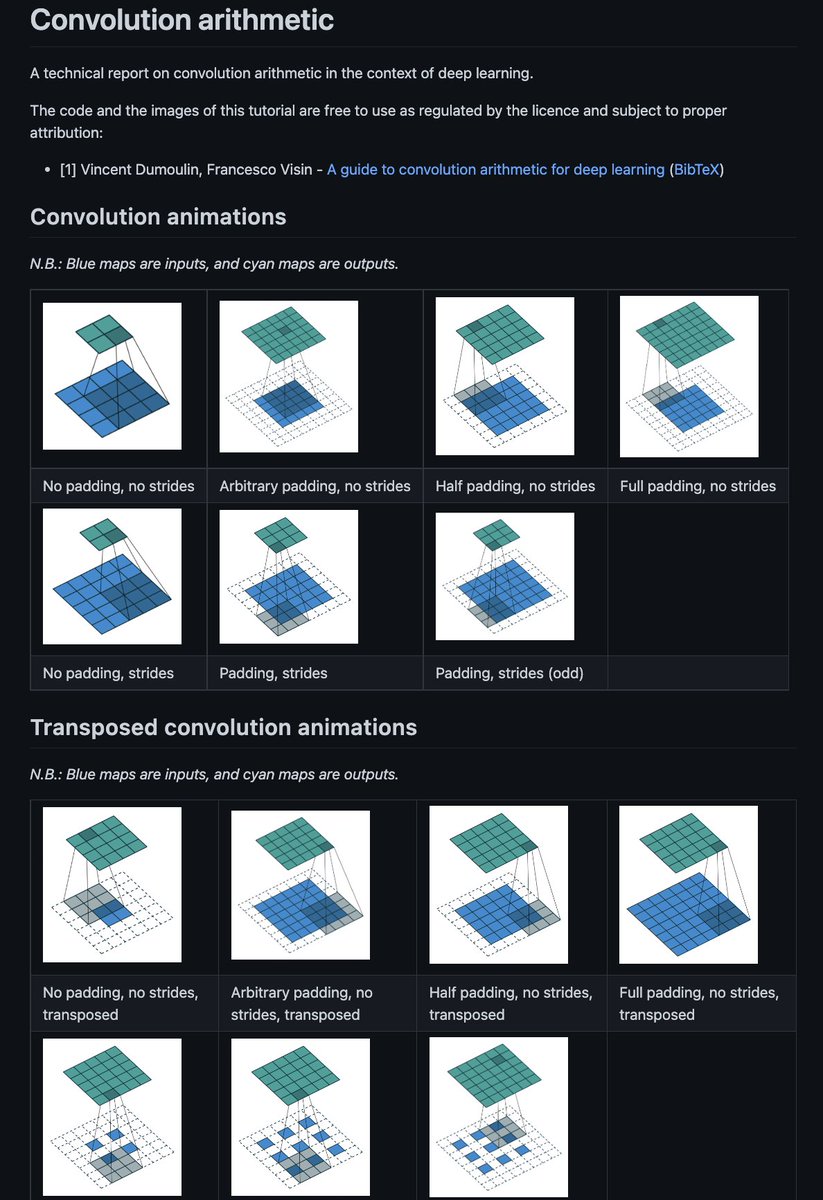

Convolutional Arithmetic: really nice and intuitive animations of different convolutional operations that are used in deep learning, such as normal convolution, transposed convolution, and dilated convolution (most popular in DeepLab). github.com/vdumoulin/conv…
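The arithmetic behind those animations boils down to output-size formulas along each spatial axis. The sketch below uses the standard relations for input size `i`, kernel size `k`, stride `s`, zero padding `p`, and dilation `d`; the function names are hypothetical and not taken from the linked repository.

```python
def conv_output_size(i, k, s=1, p=0, d=1):
    """Output length of a (possibly strided, padded, dilated) convolution."""
    return (i + 2 * p - d * (k - 1) - 1) // s + 1


def transposed_conv_output_size(i, k, s=1, p=0, d=1):
    """Output length of a transposed convolution (no extra output padding)."""
    return (i - 1) * s - 2 * p + d * (k - 1) + 1


plain = conv_output_size(5, 3)                  # no padding, unit stride
strided = conv_output_size(5, 3, s=2, p=1)      # padding 1, stride 2
dilated = conv_output_size(7, 3, d=2)           # dilation 2
upsampled = transposed_conv_output_size(3, 3)   # reverses the 'plain' shape

print(plain, strided, dilated, upsampled)
```

A dilated kernel of size `k` covers `d * (k - 1) + 1` input positions, which is why the dilation term appears in both formulas.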

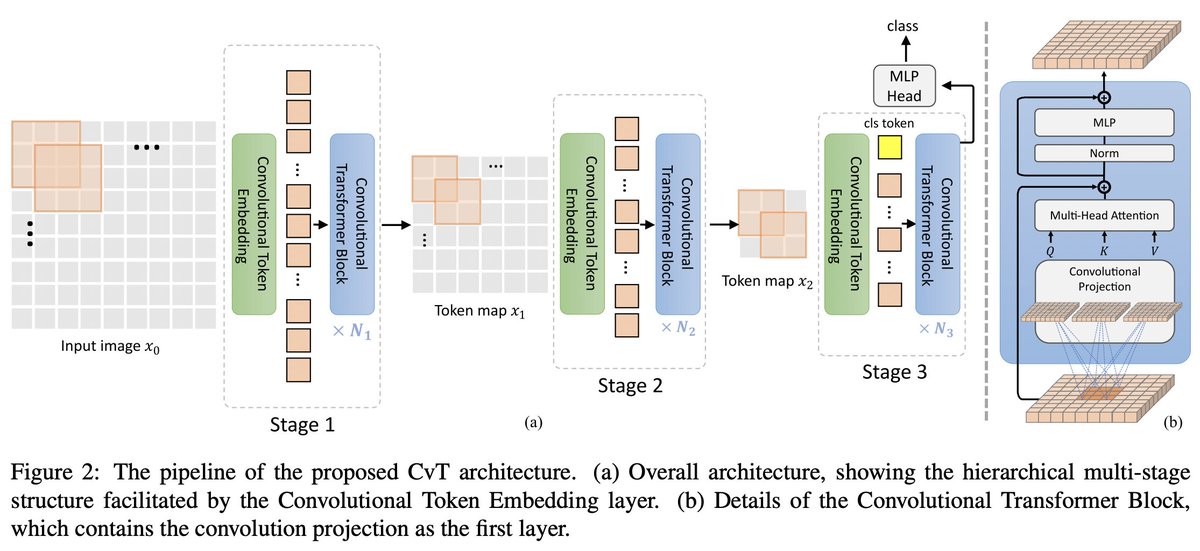

CvT (Convolutional vision Transformer) is now available in 🤗 Transformers. CvT improves the original ViT in performance+efficiency, by introducing convolutions to yield the best of both designs. Pos encodings can be safely removed, enabling fine-tuning on high resolutions🔥

After many hours of filming, editing, and debating with other electrochemists, we are proud to show you our latest YouTube installment. An Introduction to our favorite electrochemistry technique. Cyclic Voltammetry. Happy Friday everybody! youtube.com/watch?v=wLCXvg…

Do you want to understand convolutions? This page is full of great animated examples! It covers all important convolution parameters and the authors also have a paper explaining the details. github.com/vdumoulin/conv…

I've been exploring some visualizers of convolution. Here, a signal (grey) is convolved with a gaussian kernel (red) - meaning we "slide" the kernel across the signal, computing each output value (green) by multiplying the kernel with the underlying signal (blue).
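A rough sketch of the sliding operation described above, using only the standard library. The kernel radius, `sigma`, and the impulse test signal are assumptions chosen for illustration; samples outside the signal are treated as zero.

```python
import math


def gaussian_kernel(radius, sigma):
    """Sampled Gaussian with 2*radius + 1 taps, normalised to sum to 1."""
    weights = [math.exp(-(k * k) / (2 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_same(signal, kernel):
    """Discrete convolution with zero padding, output the same length as
    the input: y[n] = sum_k kernel[k] * signal[n + radius - k]."""
    radius = len(kernel) // 2
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            m = n + radius - k  # index into the signal (kernel is flipped)
            if 0 <= m < len(signal):
                acc += w * signal[m]
        out.append(acc)
    return out


kernel = gaussian_kernel(radius=2, sigma=1.0)
signal = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]  # a single impulse
smoothed = convolve_same(signal, kernel)
print(smoothed)
```

Convolving an impulse simply reproduces the kernel centred on the impulse, which is a handy sanity check for any convolution routine.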

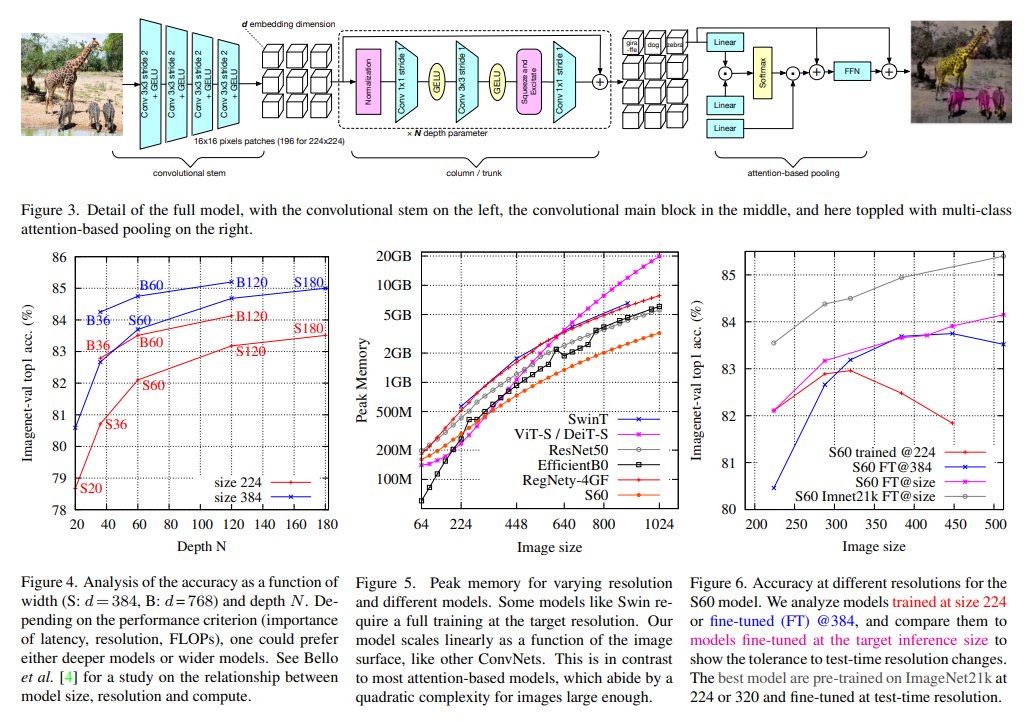

Another element in the ConvNet / Transformer / Conv+Trans: a paper from FAIR-Paris+Sorbonne+Inria using a ConvNet:
- 4 Conv, stride=2, no pooling
- N Res blocks
- 1 transformer block in lieu of the final pooling.

Beats ViT in accuracy, gflops & mem. arxiv.org/abs/2112.13692

I trained a CNN w/o pooling on MNIST and produced a colored visualization of the feature maps you get when you translate a digit on an input canvas. This illustrates the equivariance property of convolutions. More info (why convolutions?) in a blog post: medium.com/@chriswolfvisi…
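The equivariance property can be checked numerically. This is a simplified 1D sketch with circular (wrap-around) boundaries, which is an assumption made so that the property holds exactly; the post itself concerns 2D feature maps of a trained CNN. Shifting the input and then convolving gives the same result as convolving and then shifting the output.

```python
def circular_conv(signal, kernel):
    """Cross-correlate the kernel with the signal, wrapping at the edges."""
    n = len(signal)
    return [
        sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
        for i in range(n)
    ]


def shift(signal, steps):
    """Circularly translate the signal to the right by `steps` samples."""
    n = len(signal)
    k = steps % n
    return signal[n - k:] + signal[:n - k]


# Illustrative integer data so equality is exact
signal = [0, 1, 3, 2, 0, 0, 5, 0]
kernel = [1, -2, 1]

shift_then_conv = circular_conv(shift(signal, 3), kernel)
conv_then_shift = shift(circular_conv(signal, kernel), 3)
print(shift_then_conv == conv_then_shift)
```

With zero padding instead of wrap-around, the equality still holds away from the borders but can break near the edges.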

We have open sourced the code for our paper: Singular values of convolution layers (arxiv.org/abs/1805.10408): github.com/brain-research… The code is capable of finding all SVs, imposing the operator norm bound, and plotting all SVs for any deep conv model. @philipmlong

GitHub - brain-research/conv-sv: The Singular Values of Convolutional Layers
