#probabilityboundsanalysis search results

This figure is insane. The extreme underrepresentation of z-values between -2 and 2 (|z| ≈ 1.96 corresponds to the commonly used two-sided p-value threshold of 0.05) demonstrates publication bias, p-hacking, etc., with a sharp edge near the threshold. Source: arxiv.org/abs/2009.09440
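For readers who want to check the link between the z-value cutoff and the 0.05 threshold, here is a minimal sketch. It assumes a standard normal test statistic and a two-sided test; the function name `two_sided_p_value` is made up for illustration and does not come from the linked paper.

```python
import math

def two_sided_p_value(z):
    """Two-sided p-value for a z-statistic, assuming a standard normal null."""
    # P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for Z ~ N(0, 1).
    return math.erfc(abs(z) / math.sqrt(2.0))

# |z| = 1.96 sits almost exactly on the 0.05 threshold,
# and |z| = 2 falls just below it.
for z in (1.0, 1.96, 2.0):
    print(z, round(two_sided_p_value(z), 4))
```

Under these assumptions, results with |z| below about 2 are the "non-significant" ones, which is the region the post describes as underrepresented.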

Sketch dump ★ these are some studies I did recently~ I tried some perspective stuff, pretty happy with them!

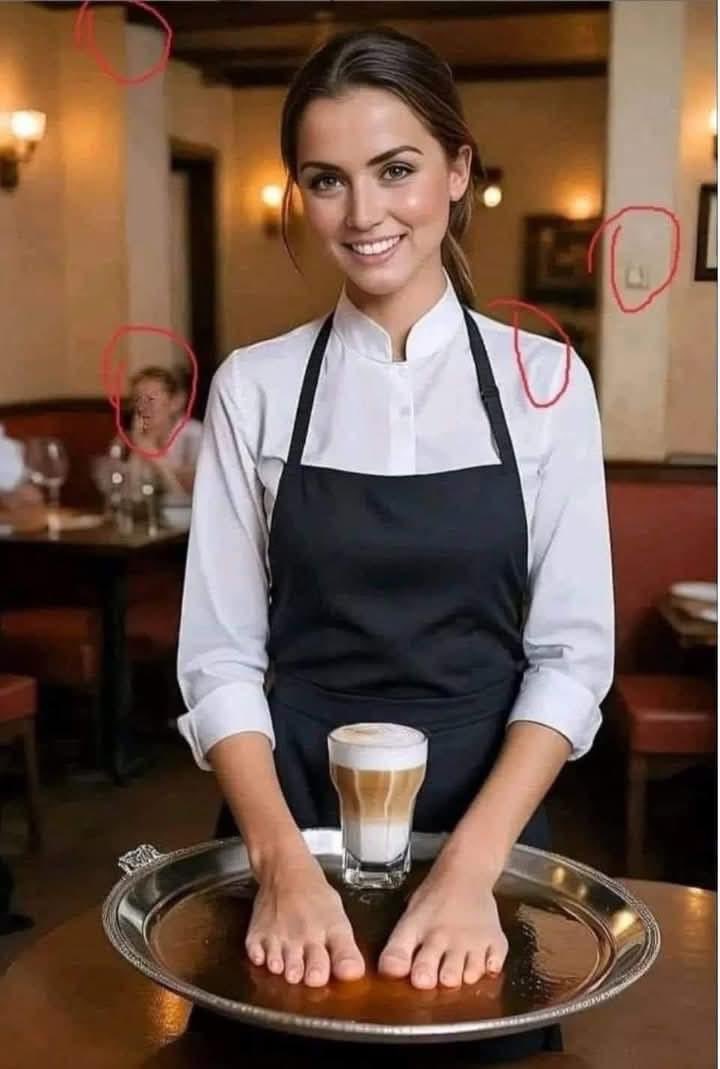

People often ask me how to know for sure if an image was created by artificial intelligence. So I marked in the attached photo (in the red circles) some clues that make me suspect that it is a fabricated photo, although it cannot be said with absolute certainty in this case.

Neural networks can be vulnerable to adversarial noise. We demonstrate the remarkable effectiveness of interval bound propagation in training provably robust image classifiers: arxiv.org/abs/1810.12715
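A rough sketch of the core idea behind interval bound propagation, not the paper's implementation: an input box `[lower, upper]` is pushed through an affine layer and a ReLU, giving guaranteed bounds on every activation. The helper names `ibp_linear` and `ibp_relu`, the weights, and the perturbation radius are all made up for illustration.

```python
def ibp_linear(lower, upper, weights, bias):
    """Propagate the box [lower, upper] through y = W x + b."""
    out_lower, out_upper = [], []
    for row, b in zip(weights, bias):
        lo = hi = b
        for w, l, u in zip(row, lower, upper):
            # A non-negative weight maps the lower input to the lower output;
            # a negative weight swaps the roles of the two endpoints.
            if w >= 0:
                lo += w * l
                hi += w * u
            else:
                lo += w * u
                hi += w * l
        out_lower.append(lo)
        out_upper.append(hi)
    return out_lower, out_upper

def ibp_relu(lower, upper):
    """ReLU is monotone, so it can be applied to each endpoint separately."""
    return [max(l, 0.0) for l in lower], [max(u, 0.0) for u in upper]

# Hypothetical input x = [1.0, -1.0] with an L-infinity perturbation of 0.5.
x, eps = [1.0, -1.0], 0.5
lower = [v - eps for v in x]
upper = [v + eps for v in x]

W = [[1.0, -1.0], [2.0, 1.0]]  # illustrative weights
b = [0.0, -1.0]                # illustrative bias

lower, upper = ibp_relu(*ibp_linear(lower, upper, W, b))
print(lower, upper)
```

Every perturbed input inside the box is guaranteed to produce activations inside the printed bounds. Stacking these steps layer by layer yields bounds on the output logits, which is what a robustness certificate checks.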

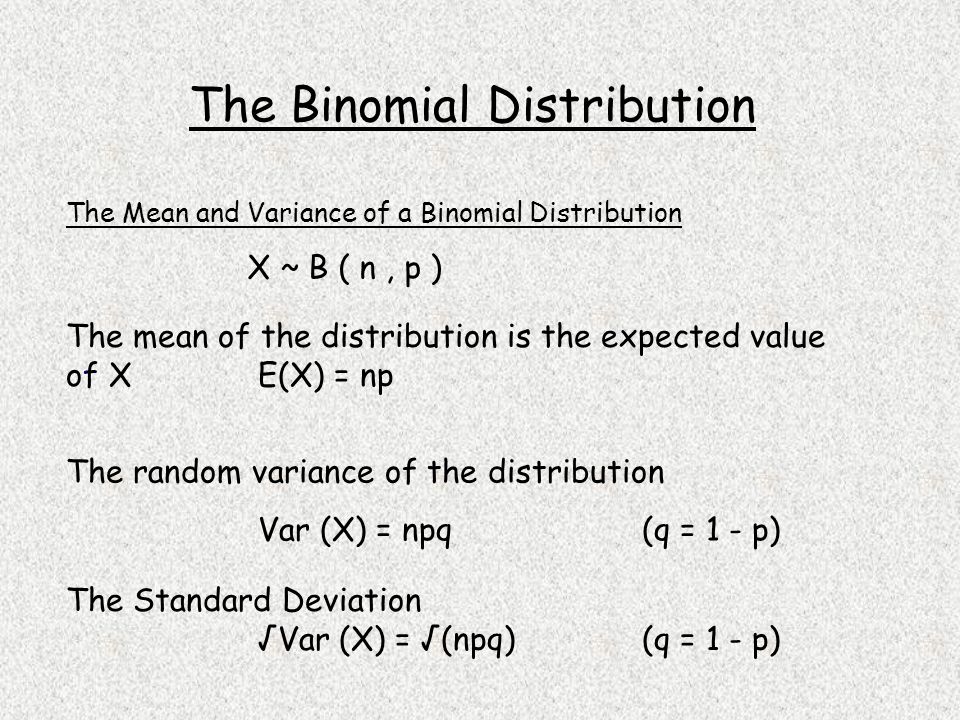

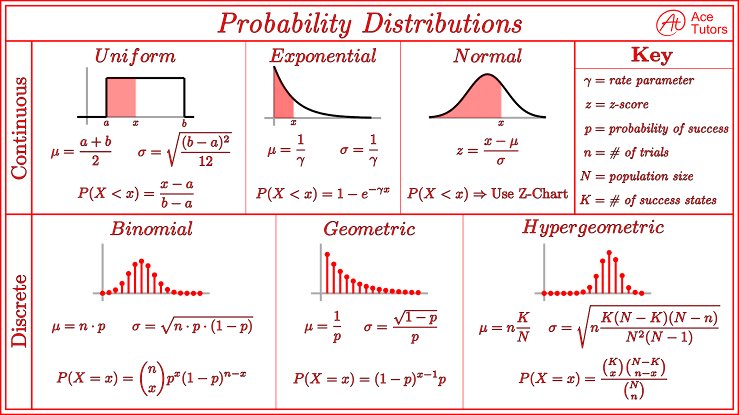

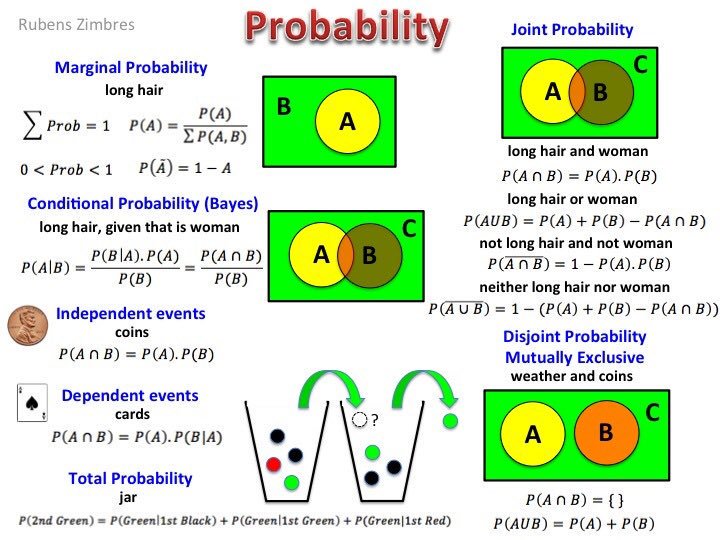

A Cheat Sheet on #Probability for #DataScientists: bit.ly/2sRLTjC #abdsc #Statistics #DataScience #BigData

Hypothesis Test Statistics & Confidence Intervals! Image credit: Professor Jahn

It's getting harder and harder to tell if an image was generated by artificial intelligence. I've circled some clues here that might mean this is AI.

Factorization Criterion for Sufficient Statistics.
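For reference, the criterion (the Fisher–Neyman factorization theorem) can be stated as follows. The symbols $f$, $g$, $h$, and $T$ are the usual textbook notation and are not taken from the post's image.

$$
T(X) \text{ is sufficient for } \theta
\iff
f(x_1, \dots, x_n; \theta) = g\big(T(x_1, \dots, x_n); \theta\big)\, h(x_1, \dots, x_n)
$$

Here $g$ depends on the data only through $T$, and $h$ does not depend on $\theta$. As a quick example, for i.i.d. Bernoulli($\theta$) observations the joint mass function is $\theta^{\sum_i x_i}(1-\theta)^{n - \sum_i x_i}$, so $T = \sum_i x_i$ is sufficient with $h \equiv 1$.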

For years I've shown this 2x2 grid in talks on infinite width networks, but with just a big ❓ in the upper-left. No longer! In arxiv.org/abs/2206.07673 we characterize wide Bayesian neural nets in parameter space. This fills a theory gap, and enables *much* faster MCMC sampling.

i have a solution in 85 bytes. tested with [ 0, 0, 1, 1, 1, 2, 3, 3, 3, 3 ]

![EmanueleRodola's tweet image. i have a solution in 85 bytes. tested with [ 0, 0, 1, 1, 1, 2, 3, 3, 3, 3 ]](https://pbs.twimg.com/media/G5OMnMVXEAErUj7.jpg)

![EmanueleRodola's tweet image. i have a solution in 85 bytes. tested with [ 0, 0, 1, 1, 1, 2, 3, 3, 3, 3 ]](https://pbs.twimg.com/media/G5OMrPqWcAAbw64.jpg)

![EmanueleRodola's tweet image. i have a solution in 85 bytes. tested with [ 0, 0, 1, 1, 1, 2, 3, 3, 3, 3 ]](https://pbs.twimg.com/media/G5ONF-aXwAAushd.png)

A clever way to compute probabilities without computing integrals.

Maximum Likelihood Estimation for Uniform random variable
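A short version of the standard derivation, assuming the model is $X_1, \dots, X_n \sim \text{Uniform}(0, \theta)$ i.i.d. The post does not say which parametrization its image uses, so this is one common case rather than necessarily the one shown.

$$
L(\theta) = \prod_{i=1}^{n} \frac{1}{\theta}\, \mathbf{1}\{0 \le x_i \le \theta\}
= \theta^{-n}\, \mathbf{1}\{\theta \ge x_{(n)}\},
\qquad x_{(n)} = \max_i x_i
$$

Because $\theta^{-n}$ is strictly decreasing in $\theta$ and the likelihood is zero for $\theta < x_{(n)}$, it is maximized at the smallest admissible value, so $\hat{\theta}_{\text{MLE}} = x_{(n)}$. Setting the derivative of the log-likelihood to zero does not work here, since the maximum sits on the boundary of the support.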
