#pytorch search results

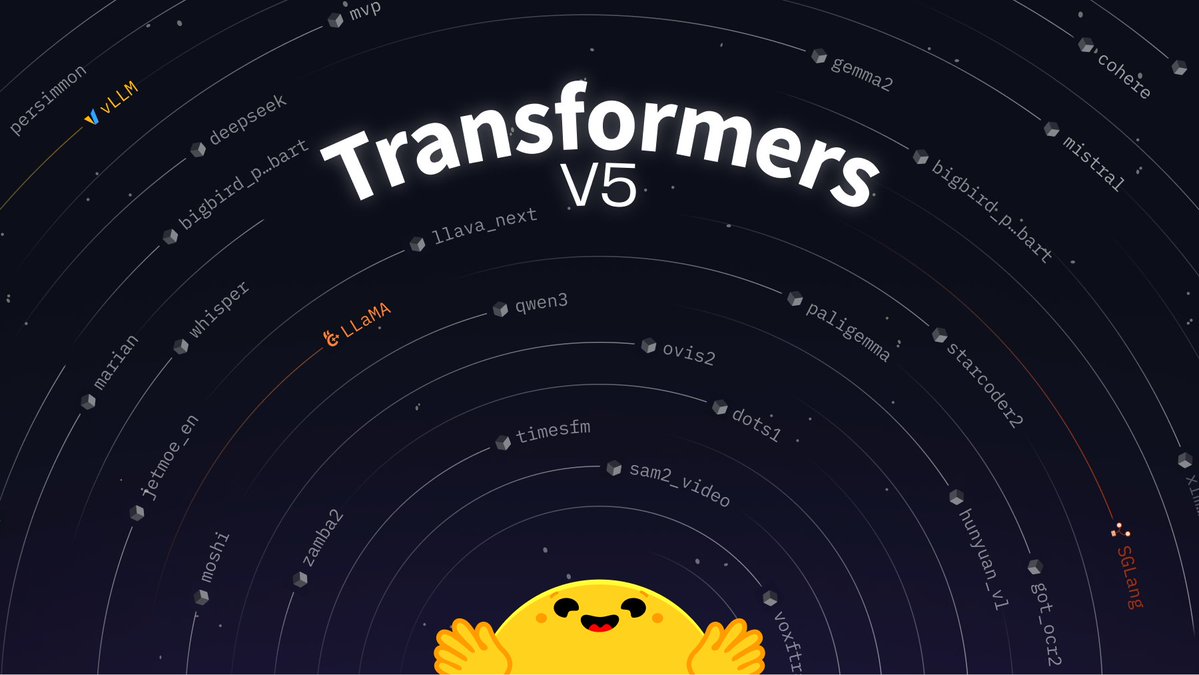

With its v5 release, Transformers is going all in on #PyTorch. Transformers acts as a source of truth and foundation for modeling across the field; we've been working with the team to ensure good performance across the stack. We're excited to continue pushing for this in the…

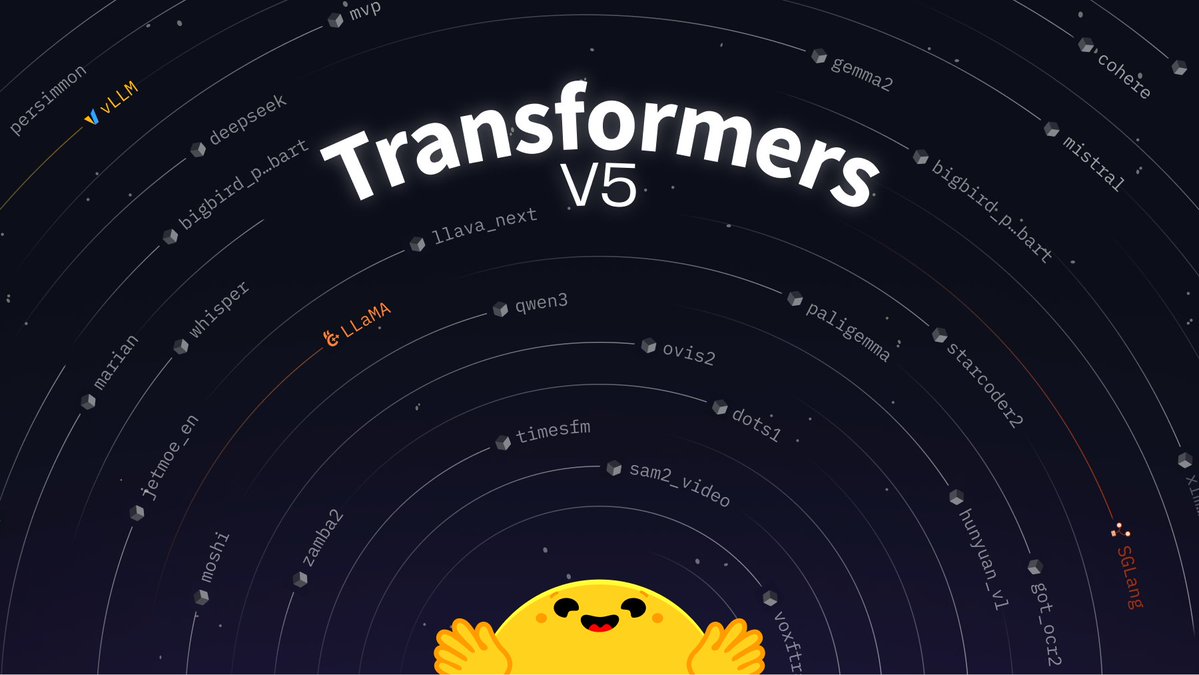

Transformers v5's first release candidate is out 🔥 The biggest release of my life. It's been five years since the last major (v4). From 20 architectures to 400, 20k daily downloads to 3 million. The release is huge, w/ tokenization (no slow tokenizers!), modeling & processing.

LMCache joins the #PyTorch Ecosystem, advancing scalable #LLM inference through integration with @vllm_project. Developed at the University of Chicago, LMCache reuses and shares KV caches across queries and engines, achieving up to 15× faster throughput. 🔗…

🚀 PyLO v0.2.0 is out! (Dec 2025) Introducing VeLO_CUDA 🔥 - a CUDA-accelerated implementation of the VeLO learned optimizer in PyLO. Check out the fastest available version of this SOTA learned optimizer now in PyTorch. github.com/belilovsky-lab… #PyTorch #DeepLearning #huggingface

🎉 Congrats to the #PyTorch Contributor Award 2025 winners! 🎉 Honoring community members who power innovation, collaboration, and the PyTorch spirit🔥 🏅 Zesheng Zong — Outstanding PyTorch Ambassador 🏅 Zingo Andersen — PyTorch Review Powerhouse 🏅 Xuehai Pan — PyTorch Problem…

Day 10/25 — Building My First Neural Network in PyTorch I continued my learning journey on WorldQuant University today. The lesson focused on using PyTorch to build my very first neural network model. #PyTorch #DeepLearning #AI #WQU #MachineLearning

🏈jasongunthers.blogspot.com/2025/11/acute-… 🏈#IIoT #PyTorch 🥕#Python #RStats 🥕#TensorFlow #Java 🥕#JavaScript

2nd Edition, 746 pages, massive! ⬇️ Modern #ComputerVision with #PyTorch #DeepLearning — from practical fundamentals to advanced applications and #GenerativeAI: amzn.to/3xAkB7X v/ @PacktDataML —— #DataScience #MachineLearning #AI #ML #GenAI #DataScientist —— 𝓚𝓮𝔂…

🌟Massive 774-page book by @rasbt 🌟 "#MachineLearning with #PyTorch and Scikit-Learn: Develop #ML and #DeepLearning models with #Python" at amzn.to/4oGqtBP ————— #DataScience #AI #NeuralNetworks #ComputerVision #DataScientist

Great demo at @PyTorch Conference today. The @AMD stand showcased #logfire spans in their multi-agent-nutrition system. Always happy to see the community using our #observability tool. #PyTorch #pydantic #MLOps #ai

Looking to dive into PyTorch 🚀 Any recommendations for hands-on, project-based resources or courses to get started? Thanks in advance 🙌🏼 #PyTorch #MachineLearning #DeepLearning

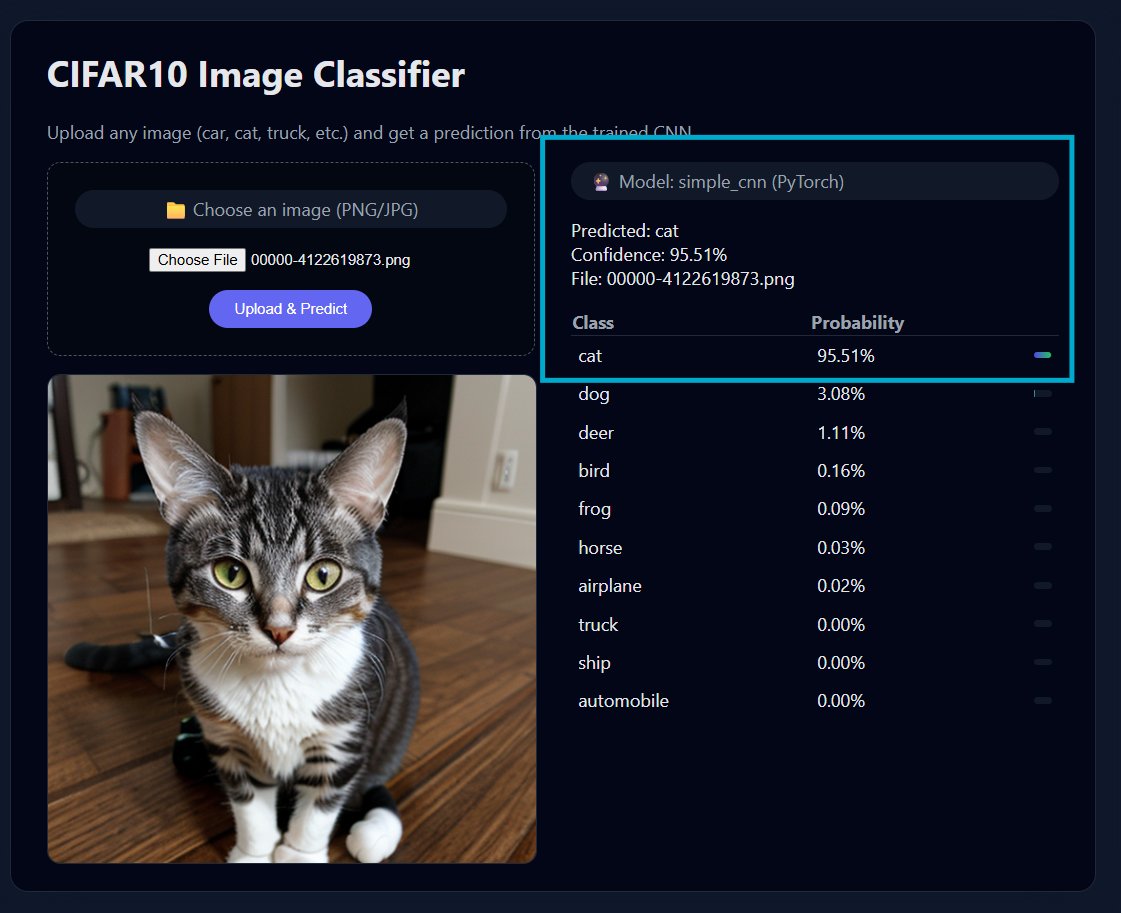

This is Project 2 of my pre-Transformer series. Next → moving towards Transfer Learning + more advanced CV models. Follow along if you're into DL projects 👇 #PyTorch #DeepLearning #FastAPI #AI #100DaysOfCode

Akash Network is thrilled to be a Platinum Sponsor of this year’s #PyTorch Conference, happening October 22–23 in San Francisco! This event is an incredible opportunity to dive deep into hands-on sessions exploring the intricacies of open-source #AI and #ML. Register now!…

#vLLM V1 now runs on AMD GPUs. Teams from IBM Research, Red Hat & AMD collaborated to build an optimized attention backend using Triton kernels, achieving state-of-the-art performance. 🔗 Read: hubs.la/Q03PC50p0 #PyTorch #OpenSourceAI
