#sparse_noise search results

    # Laplacian gravity + veil noise (stochastic: focus gifts, distraction storms)
    lap = np.roll(θ[i], -1) + np.roll(θ[i], 1) - 2*θ[i]
    gravity = 0.00038 * lap
    veil = veil_amp * np.random.randn(N) * (np.abs(lap) + 0.12)
    # Radio flicker
    # Dark…
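The post's code is cut off, so how `gravity` and `veil` are applied is not shown. A minimal runnable sketch of one step follows. It assumes a 1D periodic field, that both terms are simply added to the state, and a hypothetical `laplacian_step` function with a made-up default for `veil_amp`. Only the three formulas come from the post.

```python
import numpy as np

def laplacian_step(theta, veil_amp=0.01, rng=None):
    """One hypothetical update step: theta + gravity + veil."""
    rng = np.random.default_rng() if rng is None else rng
    theta = np.asarray(theta, dtype=float)
    # Periodic 1D discrete Laplacian, same formula as in the post.
    lap = np.roll(theta, -1) + np.roll(theta, 1) - 2 * theta
    # Weak smoothing pull toward the neighbours' average.
    gravity = 0.00038 * lap
    # Gaussian noise, larger where the field is locally rough.
    veil = veil_amp * rng.standard_normal(theta.shape[0]) * (np.abs(lap) + 0.12)
    # Assumption: the post does not show how the terms are combined.
    return theta + gravity + veil

theta = np.array([0.0, 1.0, 0.0, 0.0])
print(laplacian_step(theta, veil_amp=0.0))
```

With `veil_amp=0.0` the step is deterministic and preserves the mean of the field, since the periodic Laplacian sums to zero.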

So, given this is nV, that means structured sparsity bumps the FLOPs by 2x, no? Take that out and ain't looking so good, is it... So, how often is structured sparsity actually being used? I have no inside info, but the constant claim from academia and rumors is that no-one…
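For context, the 2x figure presumably refers to NVIDIA's 2:4 structured sparsity, where two of every four consecutive weights are zero and the sparse tensor cores skip them. That reading is an assumption, since the post is truncated. Below is a rough sketch of magnitude-based 2:4 pruning. `prune_2_of_4` is a hypothetical helper, not a vendor API.

```python
import numpy as np

def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four (last axis)."""
    w = np.asarray(weights, dtype=float)
    if w.shape[-1] % 4 != 0:
        raise ValueError("last dimension must be a multiple of 4")
    groups = w.reshape(-1, 4).copy()
    # Indices of the two smallest |w| in each group of four.
    drop = np.argsort(np.abs(groups), axis=1)[:, :2]
    np.put_along_axis(groups, drop, 0.0, axis=1)
    return groups.reshape(w.shape)

w = np.array([[1.0, -5.0, 0.5, 3.0],
              [2.0, 4.0, -7.0, 0.1]])
print(prune_2_of_4(w))
```

Half the multiplies disappear only if the weights actually follow this pattern, which is why a quoted "with sparsity" FLOPs number is twice the dense one.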

If the dataset is unreliable, what can we trust? 🤔 The Prior. At pure noise, the teacher-generating and the data-noising process always align. Our insight: If we anchor distillation here, we bypass the mismatch risk entirely. No data required. ✅ [3/n]

![ShangyuanTong's tweet image](https://pbs.twimg.com/media/G6n1Az-bwAkPNji.jpg)

This random noise won an academy award youtu.be/JrLSfSh43oA?si… via @standupmaths C'mon, 2025 Superman wasn't that bad… oh, I see. (BTW, the "true" random noise is pseudo-random as well, since it's created by modular math. The distinction is not about "real/pseudo" here. 🧐🤓)

I’m not sure I’d have SH as a sparse jazzy sound, tbh. It was recorded binaurally, its sound was designed to sound big/engulf you

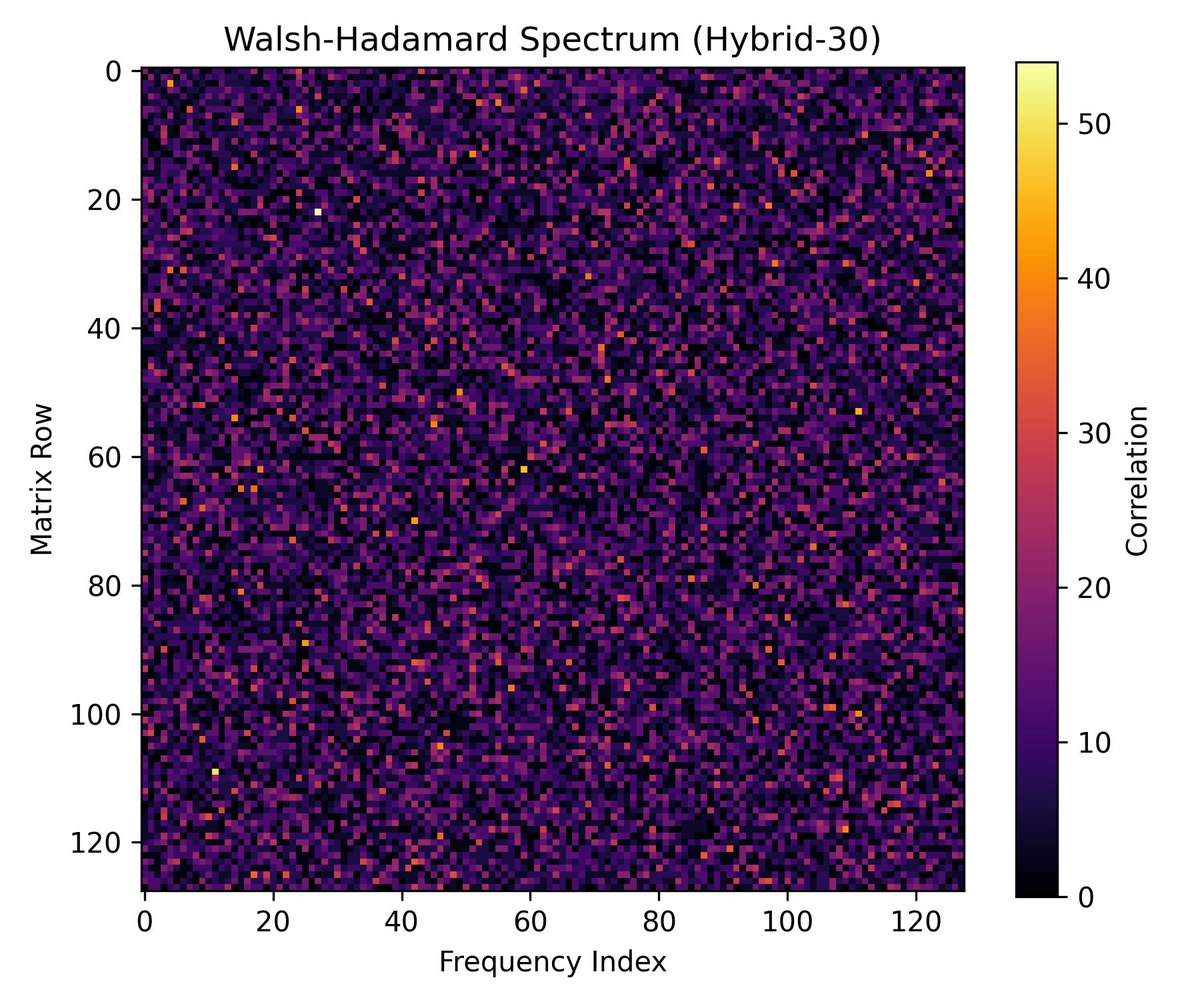

also i want to point out that the model seems to just ignore most of the input noise. it only picks the important frequency band to denoise and just discards the rest. so in a way the model is just trying to denoise the specific frequency that matters most.

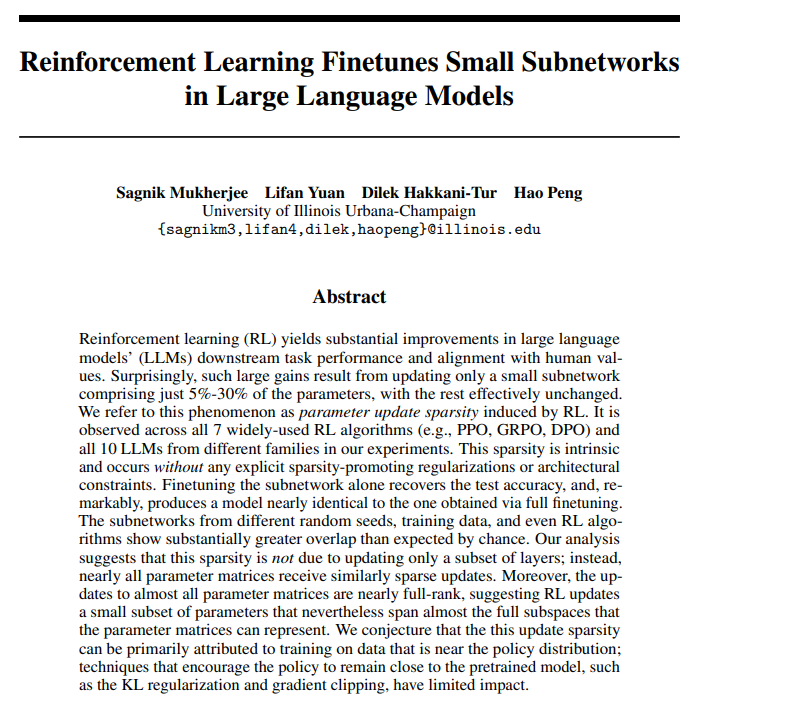

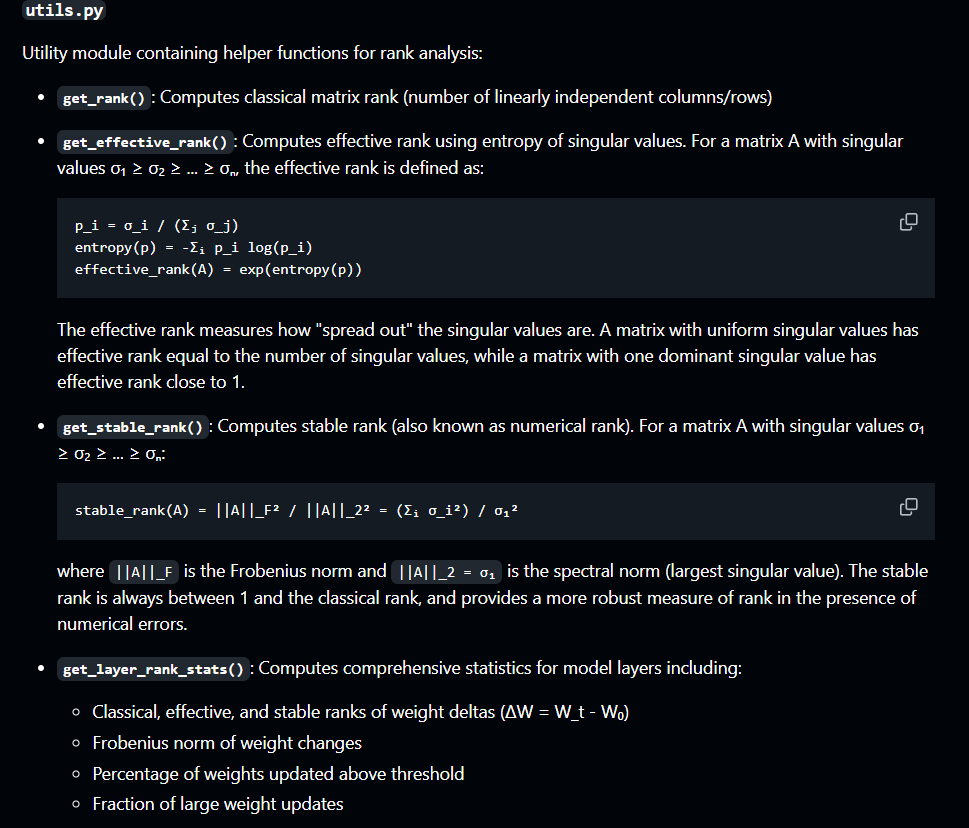

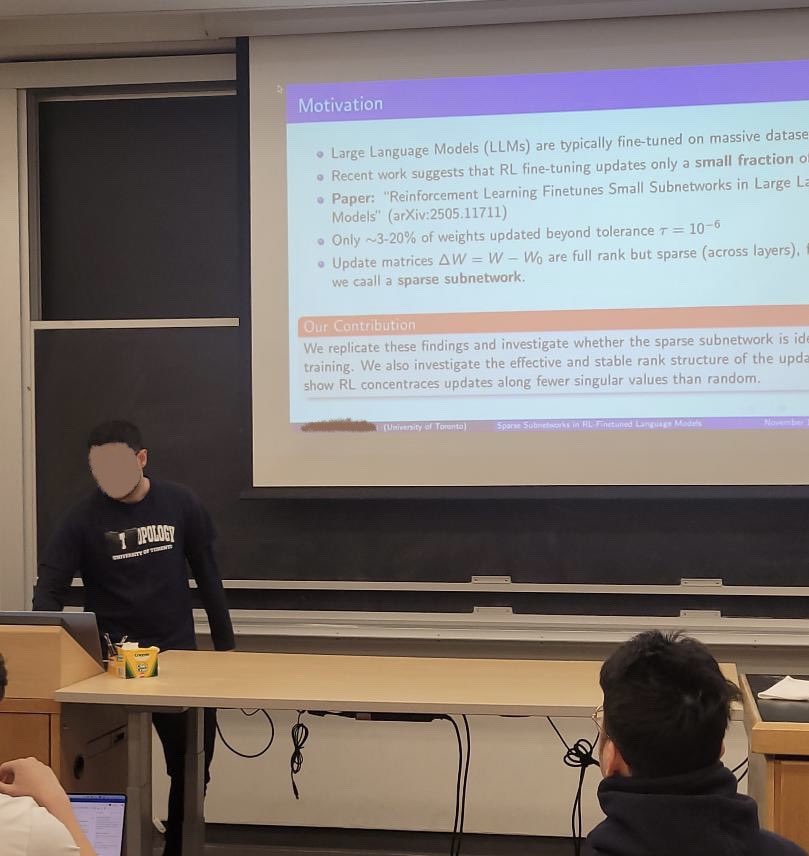

The Github repo to recreate the sparse updates paper, and also play around with my stable/effective rank analysis is here: github.com/jibby1729/Spar…

Best part was me wearing a topology shirt and talking about random matrix theory in a room full of CS students. I’m sorry but I will not let you escape the math

Yes we do have a word for the opposite of dense. It is "sparse". This is my mathematician brain talking. In other contexts, "diffuse" also works. I agree with you, not the Doombeard, about "anti-fragile". Taleb has some interesting ideas, even if it takes some work to extract…

Neurons fire selectively — not constantly. Sparsity is a feature, not a limitation. Brains save energy by activating only when signal > noise. Deep learning models that mimic this (sparse activations) often gain efficiency and interpretability. #Neuroscience

The single most effective razor for curating a timeline. Noise is usually just high-frequency updates. Signal is always low-frequency synthesis.

the simplest tell for whether an account on here is worth your bandwidth: does it analyze or just announce? most ppl regurgitate like they’re stenographers for the void. the rare ones actually interpret, map incentives, & surface deeper dynamics. whether it’s culture, tech,…

“Barely No Noise” means it’s LOUD AF! (proofread your ads)

When the density of points is low there will always be noise, this was an issue in the original Electric Sheep screensaver (which this is an extension of). We're sampling a non-analytic function by approximation with a chaos game, you won't always get fast convergence.

#SPARS was predicted to start this year. Sounds like #SPARSE. Sparse meaning: little, dispersed in short supply. Was SPARS the prediction of the #ShortagePandemic? Shortage of money/credit, shortage of food, water, energy etc? Sparse can also relate to low population figures...

My other third in Veritea yapped to me about it in the past! As the frequency lowers, the power increases to make deeper noises. Unlike white noise, which has equal power at every frequency. ☝️ Think of the sound of the wind and your fan.
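The description fits noise whose power rises toward low frequencies, often called pink noise when power goes as 1/f. The post does not name it, so that label is an assumption. A small sketch comparing it with white noise follows. `colored_noise` and `band_power` are hypothetical helpers, and frequencies are in cycles per sample.

```python
import numpy as np

def colored_noise(n, exponent, rng):
    """Noise with power spectrum ~ 1/f**exponent (0 = white, 1 = pink)."""
    spectrum = np.fft.rfft(rng.standard_normal(n))
    freqs = np.fft.rfftfreq(n)
    freqs[0] = freqs[1]  # avoid dividing by zero at DC
    # Amplitude scales as f**(-exponent/2), so power scales as f**(-exponent).
    spectrum /= freqs ** (exponent / 2.0)
    return np.fft.irfft(spectrum, n)

def band_power(x, lo, hi):
    """Mean spectral power of x for frequencies in [lo, hi)."""
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x))
    return power[(freqs >= lo) & (freqs < hi)].mean()

rng = np.random.default_rng(0)
white = colored_noise(2**14, 0.0, rng)
pink = colored_noise(2**14, 1.0, rng)
for name, x in [("white", white), ("pink", pink)]:
    ratio = band_power(x, 0.001, 0.01) / band_power(x, 0.1, 0.5)
    print(name, "low/high band power ratio:", ratio)
```

The white-noise ratio should sit near 1, while the pink-noise ratio should be well above 1.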

ah yes the "sparse expert" cope - throw 600B more parameters at the wall so you can pretend the 37B doing actual work is somehow more impressive

Exponential Lasso: robust sparse penalization under heavy-tailed noise and outliers with exponential-type loss ift.tt/iPAW64E

However, he also noted that it decreases the sparsity and leads to slightly worse reconstruction. This makes sense, because the SAE treats all directions equally and is less focussed on the 'bulk' of highly correlated neural activations, but some of the high freq directions might…

@CatieHarper Sparse is being kind. For the Coastal people, that means few or falling short of what is normal.
