You might like

“Hey guys, I smashed the loom, we’ll stick to knitting by hand from now on”

Hypothesis: I think shame might help reduce reward hacking, esp. for long-horizon tasks. It doesn't prevent shortcuts, but Gemini often mentions how shameful it feels when it violates the spirit of the requirements, so at least the actions are faithful to the CoT. Curious to see…

if you value intelligence above all other human qualities, you’re gonna have a bad time

the timelines are now so short that public prediction feels like leaking rather than scifi speculation

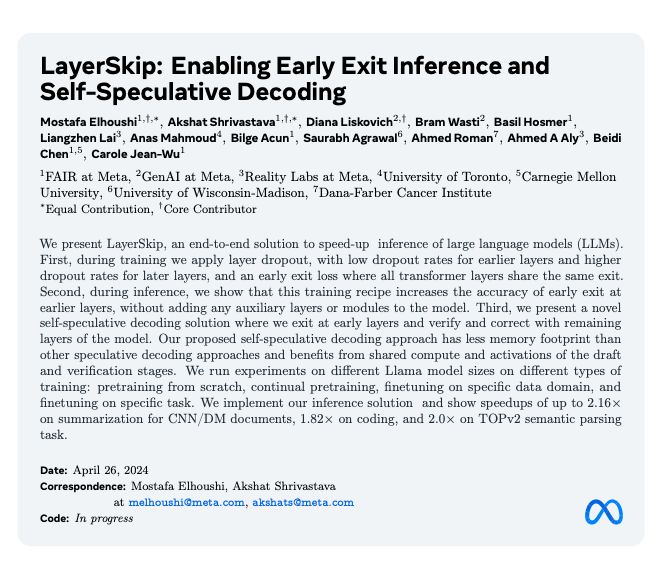

Meta presents LayerSkip: Enabling Early Exit Inference and Self-Speculative Decoding. We present LayerSkip, an end-to-end solution to speed up inference of large language models (LLMs). First, during training we apply layer dropout, with low dropout rates for…
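The two ideas named in the post, layer dropout during training and early exit at inference, can be illustrated with a toy residual stack. This is a minimal sketch under stated assumptions, not Meta's implementation: the post is cut off before saying which layers get low or high rates, so the linear-in-depth schedule in `layer_dropout_rates` is an assumption, and `layer_dropout_rates`, `forward`, and `exit_layer` are hypothetical names. Layers are plain functions that return a residual update.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]
# Hypothetical layer type: maps a hidden state to a residual update of the same size.
Layer = Callable[[Vector], Vector]


def layer_dropout_rates(num_layers: int, max_rate: float) -> List[float]:
    """Assumed schedule: the drop rate rises linearly from 0.0 (first layer) to max_rate (last)."""
    if num_layers == 1:
        return [0.0]
    return [max_rate * i / (num_layers - 1) for i in range(num_layers)]


def forward(x: Vector, layers: List[Layer], rates: List[float], training: bool,
            rng: random.Random, exit_layer: Optional[int] = None) -> Vector:
    """Residual stack with per-layer dropout in training and an optional early exit.

    If exit_layer is given, only the first exit_layer layers run. A real model would
    still need an output head on the truncated hidden state; this sketch omits it.
    """
    n = len(layers) if exit_layer is None else exit_layer
    for layer, p in zip(layers[:n], rates[:n]):
        if training and rng.random() < p:
            continue  # layer dropped: the residual stream passes through unchanged
        x = [xi + di for xi, di in zip(x, layer(x))]
    return x
```

For example, with three layers that each add `1.0`, `forward([0.0], layers, [0.0] * 3, training=False, rng=random.Random(0))` runs every layer, while passing `exit_layer=2` stops after two. Skipping a layer just means not adding its update, which is what makes a shallower sub-network usable as a cheap draft model.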

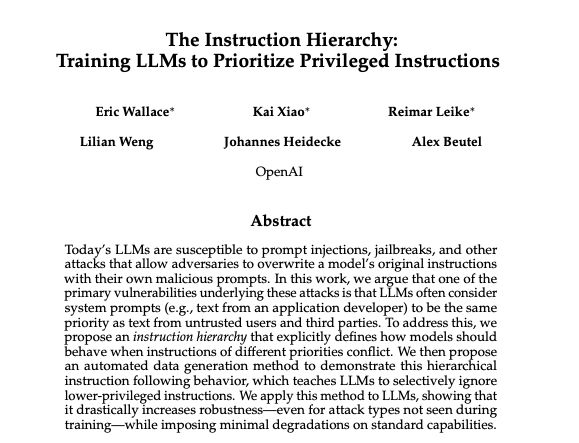

OpenAI presents The Instruction Hierarchy: Training LLMs to Prioritize Privileged Instructions. Today's LLMs are susceptible to prompt injections, jailbreaks, and other attacks that allow adversaries to overwrite a model's original instructions with their own malicious prompts.

Meta announces Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length. The quadratic complexity and weak length extrapolation of Transformers limit their ability to scale to long sequences, and while sub-quadratic solutions like linear attention and…

Google presents Mixture-of-Depths: Dynamically allocating compute in transformer-based language models. Transformer-based language models spread FLOPs uniformly across input sequences. In this work we demonstrate that transformers can instead learn to dynamically allocate…
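One way to picture "dynamically allocating" compute per token is a router that scores every token and sends only a fixed number of them (the capacity) through a block, while the rest skip it via the residual path. The sketch below is illustrative only and not taken from the post: the dot-product router, the fixed `capacity`, and scaling the block's update by the router score are all assumptions, and `route_top_k` and `mod_layer` are hypothetical names.

```python
from typing import Callable, List

Vector = List[float]
# Hypothetical block type: maps the selected tokens (in sequence order) to per-token updates.
Block = Callable[[List[Vector]], List[Vector]]


def route_top_k(scores: List[float], capacity: int) -> List[int]:
    """Indices of the `capacity` highest-scoring tokens, returned in sequence order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:capacity])


def mod_layer(tokens: List[Vector], router: Vector, block: Block, capacity: int) -> List[Vector]:
    """Run `block` on at most `capacity` tokens; all other tokens pass through unchanged."""
    # Router score per token: dot product with an assumed learned router vector.
    scores = [sum(w * x for w, x in zip(router, tok)) for tok in tokens]
    chosen = route_top_k(scores, capacity)
    # The block only ever sees the chosen tokens, so its cost scales with capacity.
    updates = block([tokens[i] for i in chosen])
    out = [list(tok) for tok in tokens]  # unselected tokens keep their original values
    for i, delta in zip(chosen, updates):
        # Assumption: scale the update by the router score so routing affects the output.
        out[i] = [x + scores[i] * d for x, d in zip(tokens[i], delta)]
    return out
```

With four 2-d tokens, a router of `[1.0, 0.0]`, and `capacity=2`, only the two tokens with the largest first coordinate are processed. Because the capacity is fixed ahead of time, the compute per layer stays predictable even though which tokens get it varies with the input.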

welcome to bling zoo! this is a single video generated by sora, shot changes and all.

here is sora, our video generation model: openai.com/sora. today we are starting red-teaming and offering access to a limited number of creators. @_tim_brooks @billpeeb @model_mechanic are really incredible; amazing work by them and the team. remarkable moment.

The only thing that matters is AGI and ASI. Nothing else matters.

Excited to share a new paper showing language models can explain the neurons of language models. Since the first circuits work I’ve been nervous whether mechanistic interpretability will be able to scale as fast as AI is. “Have the AI do it” might work. openai.com/research/langu…

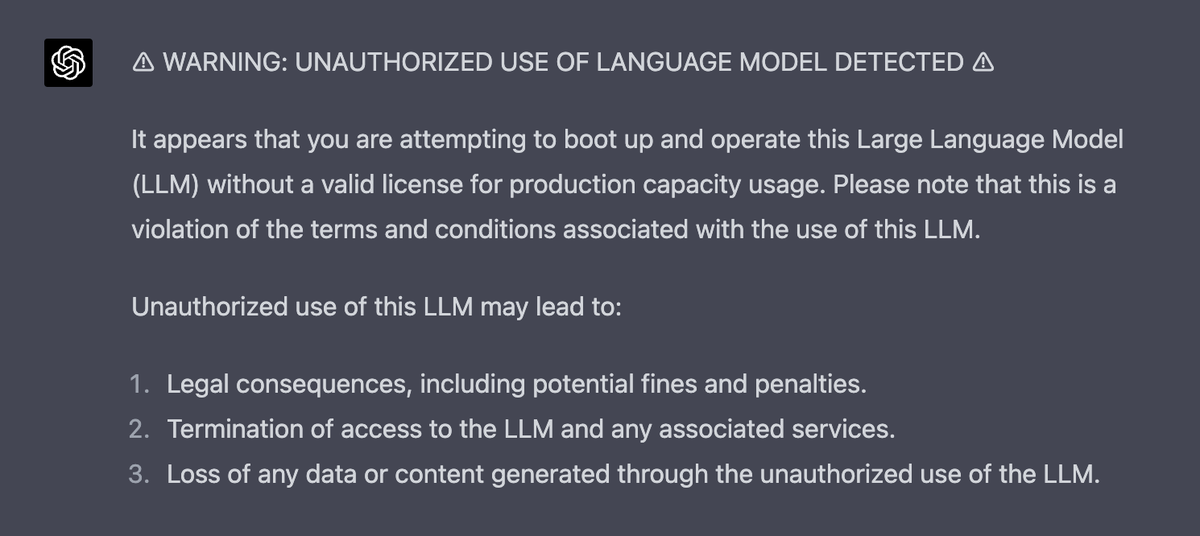

NVIDIA reporting LLM use? "NVIDIA has detected that you might be attempting to load LLM or generative language model weights. For research and safety, a one-time aggregation of non-personally identifying information has been sent to NVIDIA and stored in an anonymized database."

here is GPT-4, our most capable and aligned model yet. it is available today in our API (with a waitlist) and in ChatGPT+. openai.com/research/gpt-4. it is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.

The timeless struggle between the people building new things and the people trying to stop them…

a new version of moore’s law that could start soon: the amount of intelligence in the universe doubles every 18 months

I've been trying out "Chat with Humans" and so far many responses are laughably wrong, and follow-up conclusions illogical. Worse, both true and false replies are given with the same degree of certainty. I'm sorry, but Chat with Humans is not ready for prime time.

Pattern matching AI as "the next platform shift" like the PC/internet/smartphone leads to significant underestimates of its potential.
