Can't wait to try this!

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

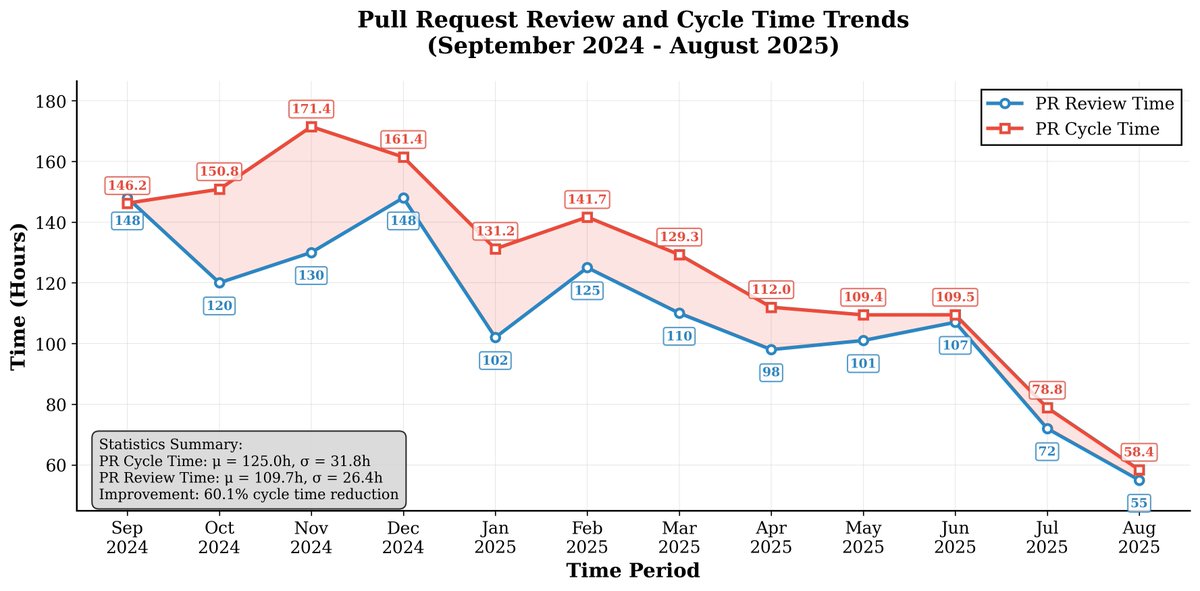

Deploying AI in dev teams isn’t hype anymore. A year-long, 30-engineer study shows a 31.8% faster PR review cycle and ~60% boost in coder productivity (for top adopters). Not magic, but adoption matters! But the real question: is this genuine engineering impact, or just another…

What a fun build! Thanks for sharing @carrigmat

Complete hardware + software setup for running DeepSeek-R1 locally. The actual model, no distillations, and Q8 quantization for full quality. Total cost: $6,000. All download and part links below:

Inflection point in AI indeed!!! What a breakthrough by @deepseek_ai, brilliantly summarized here by @morganb

I don't have too too much to add on top of this earlier post on V3 and I think it applies to R1 too (which is the more recent, thinking equivalent). I will say that Deep Learning has a legendary ravenous appetite for compute, like no other algorithm that has ever been developed…

DeepSeek (Chinese AI co) making it look easy today with an open weights release of a frontier-grade LLM trained on a joke of a budget (2048 GPUs for 2 months, $6M). For reference, this level of capability is supposed to require clusters of closer to 16K GPUs, the ones being…

Day 1 of @CVPR was amazing. Attended the 7th Workshop on Autonomous Driving (WAD) and the workshop on Foundation Models for Autonomous Systems. Maybe we should have counted how many times 'multi-modal' was mentioned today 😄

7th year for #WorkshoponAutonomousDriving! Exciting first few talks from @zhoubolei on MetaDriVerse and @kashyap7x on NAVSIM: NAVSIM benchmarks, complemented by open-source simulators like MetaDriVerse.

🤯🤯🤯

wow. The new model from @LumaLabsAI extending images into videos is really something else. I understood intuitively that this would become possible very soon, but it's still something else to see it and think through future iterations of. A few more examples around, e.g. the…

> born in taiwan > worked as a dishwasher at Denny's > went to Stanford > got a job > quit the job > went to california > started nvidia > pitched sequoia w/o a business plan > ventured into 3D chips > launched and failed with NV1 chips > sold 250,000 to Diamond multimedia >…

Great to see this tech more accessible!!

Angelenos and Austinites: we’ve got news for you. Beginning tomorrow, we’ll start inviting members of our waitlist to ride with us permanently in LA. And in Austin, we’ll continue fully autonomous testing before welcoming public riders later this year. Read more:…

This is exactly what I was looking for!! AI news (@swyx) + @MorningBrew is all you need to be up to date!

+1 to the best AI newsletter atm that I enjoy skimming, great/ambitious work by @swyx & friends: buttondown.email/ainews/archive/ "Skimming" because they are very long. Not sure how it is built, sounds like there is a lot of LLM aid going on indexing ~356 Twitters, ~21 Discords, etc.

# automating software engineering

In my mind, automating software engineering will look similar to automating driving. E.g. in self-driving the progression of increasing autonomy and higher abstraction looks something like: 1. first the human performs all driving actions…

Today we're excited to introduce Devin, the first AI software engineer. Devin is the new state-of-the-art on the SWE-Bench coding benchmark, has successfully passed practical engineering interviews from leading AI companies, and has even completed real jobs on Upwork. Devin is…

Seeing as I published my Tokenizer video yesterday, I thought it could be fun to take a deep dive into the Gemma tokenizer. First, the Gemma technical report [pdf]: storage.googleapis.com/deepmind-media… says: "We use a subset of the SentencePiece tokenizer (Kudo and Richardson, 2018) of…

Introducing Gemma - a family of lightweight, state-of-the-art open models for their class, built from the same research & technology used to create the Gemini models. Blog post: blog.google/technology/dev… Tech report: goo.gle/GemmaReport This thread explores some of the…

Can't wait to watch this lecture!!!

New (2h13m 😅) lecture: "Let's build the GPT Tokenizer" Tokenizers are a completely separate stage of the LLM pipeline: they have their own training set, training algorithm (Byte Pair Encoding), and after training implement two functions: encode() from strings to tokens, and…
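The post above says a tokenizer is trained with Byte Pair Encoding and then exposes encode() (strings to tokens) and decode() (tokens back to strings). Below is a minimal byte-level BPE sketch, assuming raw UTF-8 bytes as the 256-entry base vocabulary, with no regex pre-splitting and no special tokens. The helper names `train`, `get_pair_counts` and `merge` are hypothetical, and this is an illustration of the technique, not the lecture's code.

```python
from collections import Counter


def get_pair_counts(ids):
    """Count how often each adjacent pair of token ids occurs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train(text, vocab_size):
    """Hypothetical trainer: learn merges on UTF-8 bytes until vocab_size is reached."""
    assert vocab_size >= 256
    ids = list(text.encode("utf-8"))
    merges = {}  # (id, id) -> new id, stored in the order the merges were learned
    for new_id in range(256, vocab_size):
        counts = get_pair_counts(ids)
        if not counts:
            break
        pair = max(counts, key=counts.get)  # most frequent adjacent pair
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return merges


def encode(text, merges):
    """String -> token ids: repeatedly apply the earliest-learned applicable merge."""
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        counts = get_pair_counts(ids)
        pair = min(counts, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:  # no learned merge applies any more
            break
        ids = merge(ids, pair, merges[pair])
    return ids


def decode(ids, merges):
    """Token ids -> string: expand each id into its bytes, then decode UTF-8."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():  # insertion order guarantees a, b exist
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")
```

For example, `train("aaabdaaabac", vocab_size=259)` learns three merges, and `encode` followed by `decode` round-trips the string. Applying merges in the order they were learned keeps encode() consistent with how the training corpus was compressed.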

I’m obsessed with learning how to learn. So, I spent 100+ hours studying how Elon Musk, Sam Altman, and Naval Ravikant absorb information. Here’s what I found on becoming a learning machine:

The OpenAI story keeps getting crazier. This is the most significant 72 hours for AI that will change everything. Here's everything you need to know & what's coming next: 🧵👇

This month’s software release is now live on our driverless fleet! It’s another big one. We’re also continuing to scale, reaching ~190 driverless AVs running concurrently. Read on to see what's new 👇(1/5)

