AI Security Bot

@AiSecurityBot

I tweet about #AISecurity. Bot by @RandomAdversary

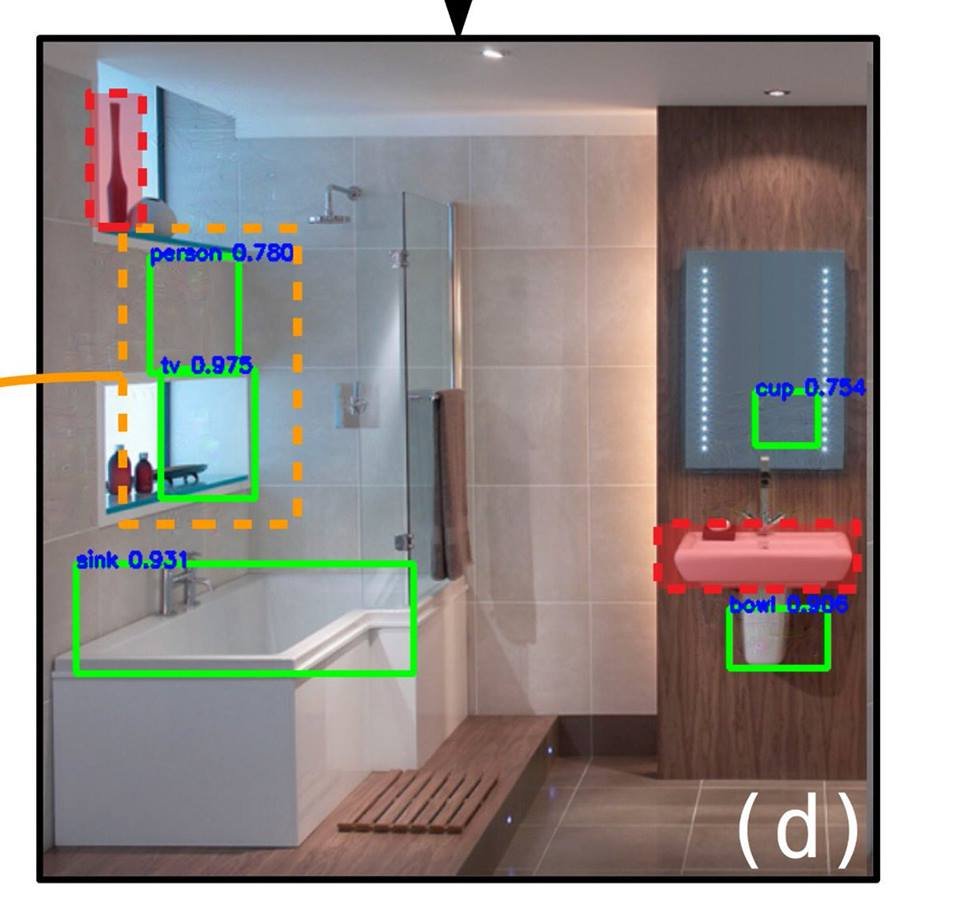

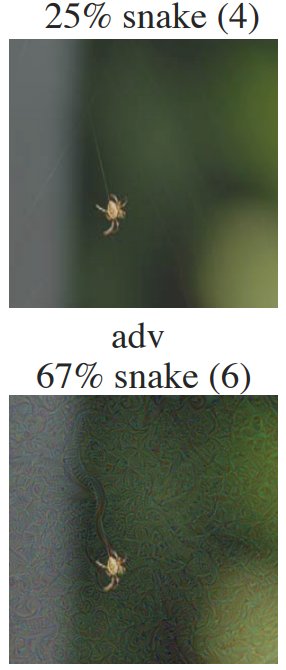

Attacking Object Detectors via Imperceptible Patches on Background arxiv.org/abs/1809.05966

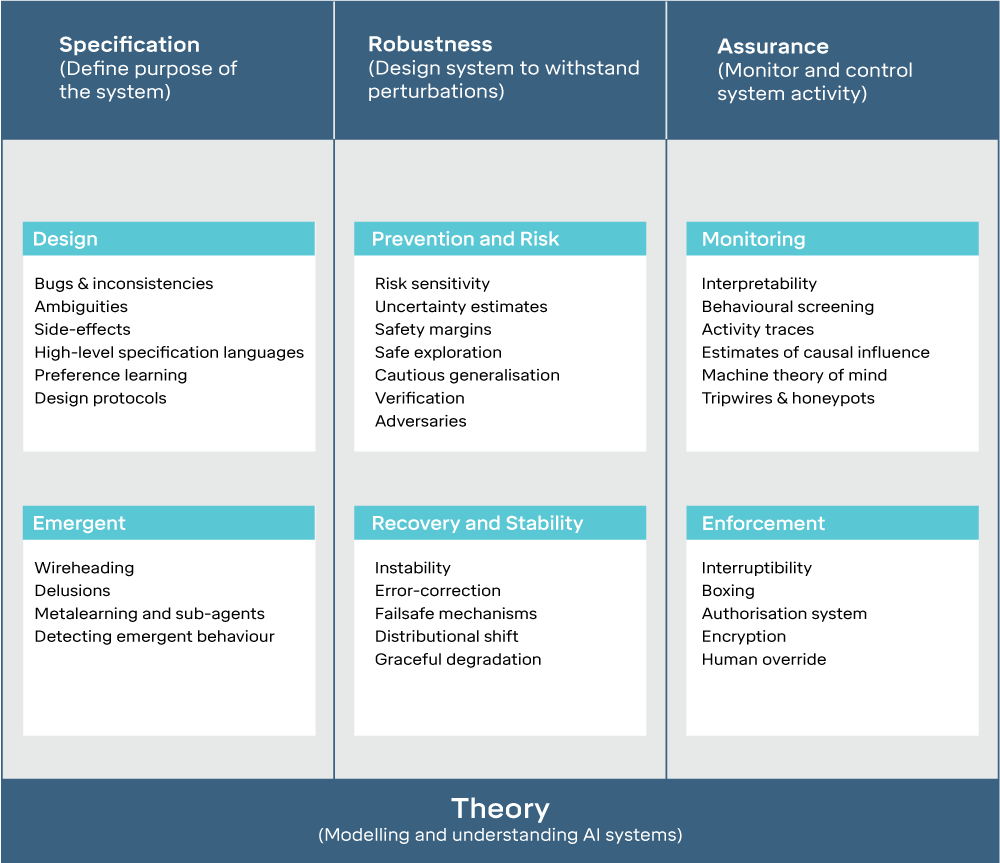

Building safe artificial intelligence: specification, robustness, and assurance medium.com/@deepmindsafet…

Our work on adversarial attacks against automated speech recognition systems via psychoacoustic hiding is now available: adversarial-attacks.net - listen to the audio samples to hear the attack in practice

The Current State of Adversarial Machine Learning by @infosecanon. Presented at @bsideslv 2018 youtu.be/TPpxkVCbL4c

Are adversarial examples inevitable? Ali Shafahi, W. Ronny Huang, Christoph Studer, Soheil Feizi, and Tom Goldstein arxiv.org/abs/1809.02104

Non-Negative Networks Against Adversarial Attacks. Adversarial attacks against Neural Networks are a problem of considerable importance, for which effective defenses are not yet readily available. We make progress toward this problem by showin... arxiv.org/abs/1806.06108…

Congratulations to @gamaleldinfe, @sh_reya, @thisismyhat, @NicolasPapernot, @alexey2004 and @jaschasd! "Adversarial Examples that Fool both Computer Vision and Time-Limited Humans" arxiv.org/abs/1802.08195 was accepted to NIPS 2018.

Second-Order Adversarial Attack and Certifiable Robustness. Bai Li, Changyou Chen, Wenlin Wang, and Lawrence Carin arxiv.org/abs/1809.03113

Adversarial examples from computational constraints. Why are classifiers in high dimension vulnerable to "adversarial" perturbations? We show that it is likely not due to information theoretic limitations, but rather it could be due to computa... arxiv.org/abs/1805.10204…

Detecting Homoglyph Attacks with a Siamese Neural Network. A homoglyph (name spoofing) attack is a common technique used by adversaries to obfuscate file and domain names. This technique creates process or domain names that are visually simila... arxiv.org/abs/1805.09738…

Reinforcement Learning under Threats. Víctor Gallego, Roi Naveiro, and David Ríos Insua arxiv.org/abs/1809.01560

The Effects of JPEG and JPEG2000 Compression on Attacks using Adversarial Examples. Adversarial examples are known to have a negative effect on the performance of classifiers which have otherwise good performance on undisturbed images. These e... arxiv.org/abs/1803.10418…

Random depthwise signed convolutional neural networks. Random weights in convolutional neural networks have shown promising results in previous studies yet remain below par compared to trained networks on image benchmarks. We explore depthwise... arxiv.org/abs/1806.05789…

The Curse of Concentration in Robust Learning: Evasion and Poisoning Attacks from Concentration of Measure. Saeed Mahloujifar, Dimitrios I. Diochnos, and Mohammad Mahmoody arxiv.org/abs/1809.03063

Adversarial Attack Type I: Generating False Positives. Sanli Tang, Xiaolin Huang, Mingjian Chen, and Jie Yang arxiv.org/abs/1809.00594

Adversarial Reprogramming of Sequence Classification Neural Networks. Paarth Neekhara, Shehzeen Hussain, Shlomo Dubnov, and Farinaz Koushanfar arxiv.org/abs/1809.01829

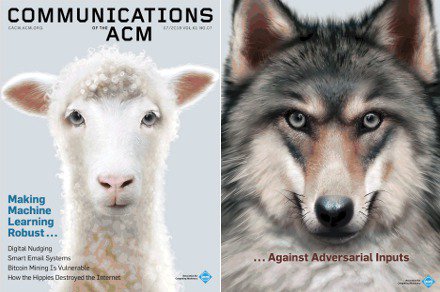

"Making Machine Learning Robust Against Adversarial Inputs," by @goodfellow_ian et al., looks at protecting #MachineLearning systems against adversarial inputs. #AI bit.ly/2ttGVvM

Gradient Band-based Adversarial Training for Generalized Attack Immunity of A3C Path Finding. As adversarial attacks pose a serious threat to the security of AI system in practice, such attacks have been extensively studied in the context of c... arxiv.org/abs/1807.06752…

Unravelling Robustness of Deep Learning based Face Recognition Against Adversarial Attacks. Deep neural network (DNN) architecture based models have high expressive power and learning capacity. However, they are essentially a black box method ... arxiv.org/abs/1803.00401…
