Alpha Prompt

@AlphaPromptApp

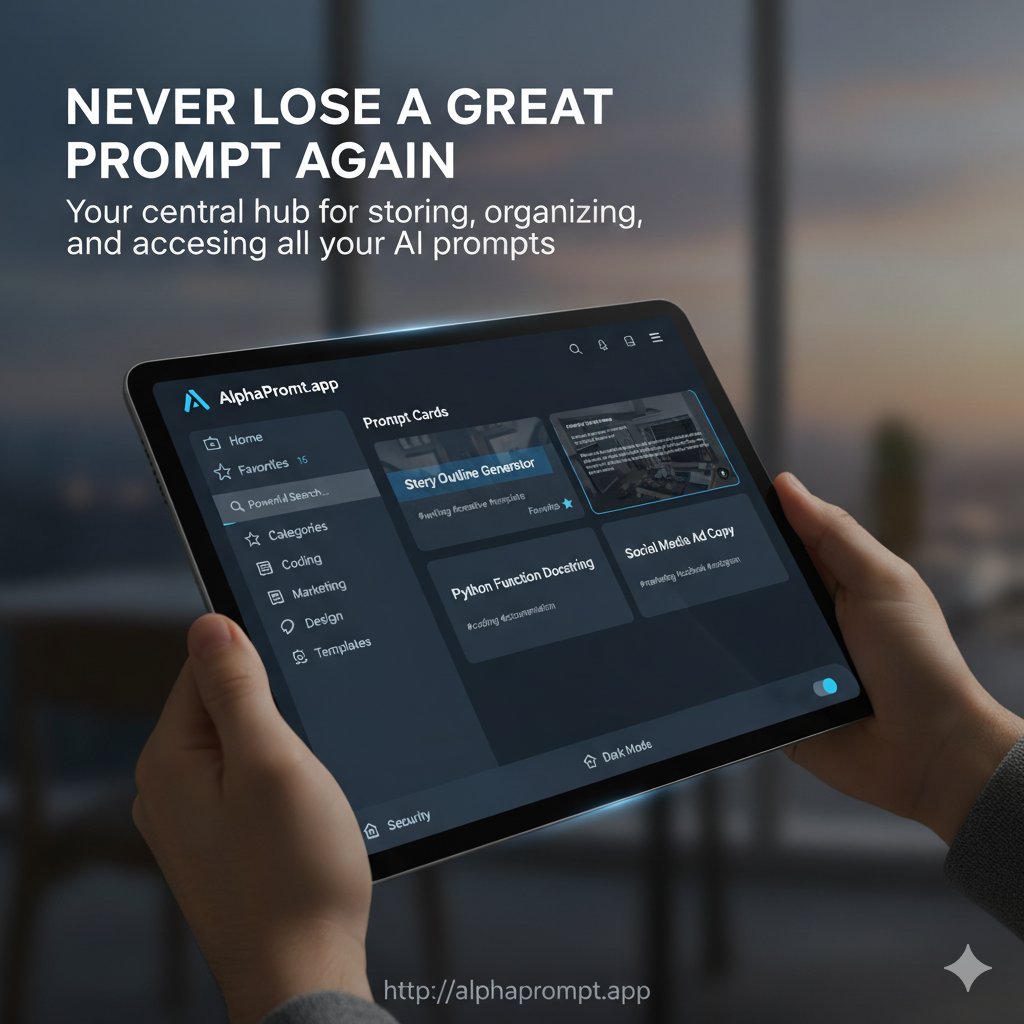

AlphaPrompt — The Complete AI Prompt Platform Store, Manage, Optimize, Version Control, Seach, Tag, Categorize, and Build Prompts.

Your prompts deserve better than sticky notes and chaos. Meet AlphaPrompt — the place where prompts grow up. 🌱 👉 alphaprompt.app

Struggling to make your prompts actually work? ⛔ Vague inputs. ⚠️ Messy outputs. 😩 Zero consistency. AlphaPrompt fixes that with AI-powered scoring, quality tips & one-click optimization.

Everyone is refreshing X like Gemini 3 is about to drop any minute. But the real move isn’t guessing the timestamp, it’s having a workflow ready the moment it lands.

Want more reliable reasoning from your LLM? 🧠 Try self-consistency: 1️⃣ Sample multiple outputs with temperature > 0. 2️⃣ Aggregate the majority answer. This approach surfaces stable reasoning paths over random noise! How do you balance accuracy vs diversity in your…

Prompt Templating isn't just time-saving—it’s performance shaping! 💪✨ Consistency in structure primes LLMs for reliability across tasks. 🤖🔑 Vary the variables, not the frame. Are your prompts designed, or improvised? 🧠💡

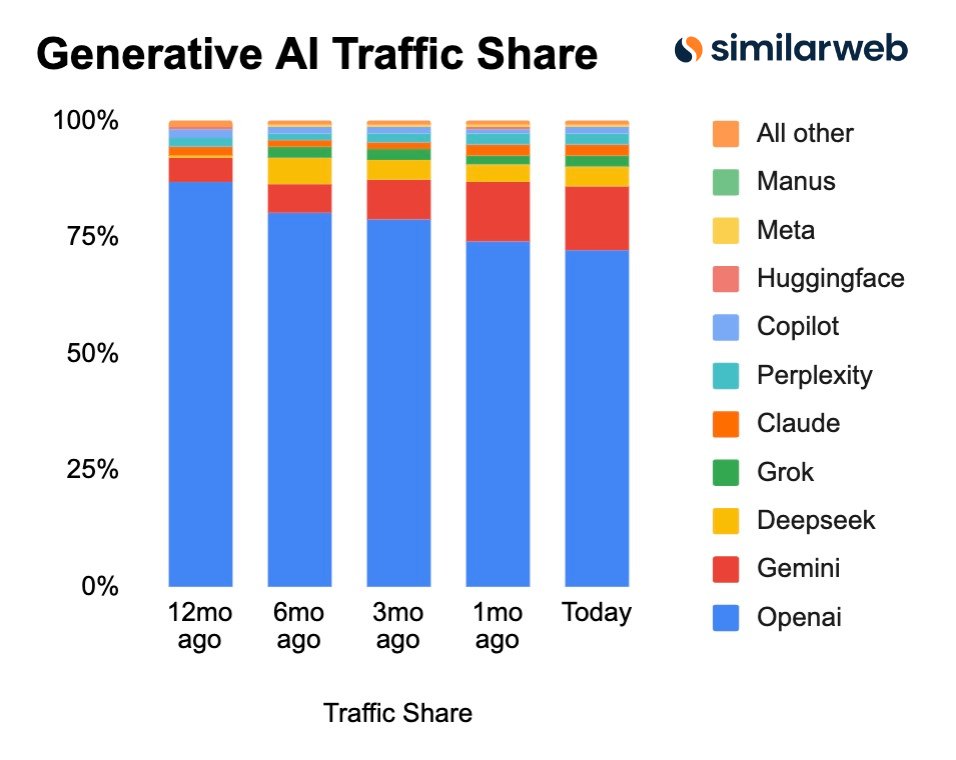

GenAI Traffic Share, Key Takeaways: → Grok and DeepSeek continue to regain ground. → Claude surpasses Perplexity. → ChatGPT continues to lose share. 🗓️ 12 Months Ago: ChatGPT: 86.6% Gemini: 5.6% Perplexity: 2.2% Claude: 1.9% Copilot: 1.6% 🗓️ 6 Months…

Want better reasoning from your LLM? 🤔 Try **Least-to-Most Prompting**! 🔍 Guide the model through subtasks, ordered from simple to complex. This reduces cognitive load and boosts accuracy—especially in multi-step problems! 🧠💡 What’s your go-to trick for decomposing tasks?

Prompting LLMs to "think like a lawyer" vs. "think like a teacher" isn't just a gimmick—it's role prompting that aligns reasoning style with task domain. 🧠💼 Matching the right persona boosts accuracy and coherence. What's the most unexpected role that’s worked for you?

Too many ideas, not enough structure? Your prompt chaos ends now. 💡 AlphaPrompt keeps your AI prompts organized, optimized, and always within reach—with version history, smart tags & instant scoring. Tame your prompt library today → alphaprompt.app…

alphaprompt.app

AlphaPrompt - Master AI Prompts End-to-End

🚀 Generate perfect prompts from descriptions • Optimize instantly with AI • Get quality scores • Access 500+ expert templates. Free forever. No credit card. Start now!

To boost accuracy in RAG systems, benchmark your retriever and generator *separately* before fine-tuning the full pipeline. 🚀 Bottlenecks often lie in retrieval, not generation. Are you treating your retriever as a first-class citizen? 🧠💡

Most devs treat Retrieval-Augmented Generation as "fetch and dump." 💡 Want smarter outputs? Add structure: prepend system prompts that *interpret*, not just insert, retrieved docs. Otherwise, LLMs hallucinate what they don’t comprehend. How do you curate context? 🤔

Zero-shot too weak? 🤔 Few-shot too brittle? Try *least-to-most prompting*! Break complex tasks into scaffolding sub-questions that guide the model step by step before asking the final question. 💡 It often beats deeper context alone. What task has it cracked for you?

Prompt Templating isn't just about convenience—it's about control! ✨ 🔹 Modular prompts let you isolate variables, test systematically, and scale reasoning across tasks. 🔹 Still crafting prompts by hand each time? You're optimizing for pain, not performance. What’s your…

Want more accurate LLM outputs from ambiguous queries? 🤔 Try *Instructional Prompting*! Frame the task explicitly, like: “Classify this email as spam or not” instead of just input-only. This activates the right latent task circuits. 🧠 What’s your go-to instruction format…

💡 **Prompt Templating** isn't just for scale—it's for **control**. 🛠️ Treat prompts as **parametric functions**: ➡️ Abstract structure, ➡️ Inject dynamic values. This builds **consistency** across users, tasks & models. 🤔 Are you designing prompts or just writing…

Prompt Injection isn’t just a red team problem—it’s a product design flaw. 🚨 If your system accepts dynamic user input, assume it’s hostile. Here are some strategies to consider: - Strong output parsing 🛡️ - System message isolation 🔒 - Context shaping 🧠 What’s your go-to…

United States Trends

- 1. Peggy 30.7K posts

- 2. Sonic 06 2,017 posts

- 3. Zeraora 12.8K posts

- 4. Berseria 4,325 posts

- 5. Cory Mills 26.6K posts

- 6. #ComunaONada 2,841 posts

- 7. $NVDA 44.2K posts

- 8. Randy Jones N/A

- 9. Dearborn 363K posts

- 10. Ryan Wedding 2,950 posts

- 11. Luxray 2,167 posts

- 12. Xillia 2 N/A

- 13. #wednesdaymotivation 7,906 posts

- 14. #Wednesdayvibe 2,851 posts

- 15. International Men's Day 77.6K posts

- 16. #CurrysPurpleFriday 11.7K posts

- 17. Winter Classic 1,103 posts

- 18. Cleo 3,093 posts

- 19. Zestiria N/A

- 20. Christian Wood N/A

Something went wrong.

Something went wrong.