BigScience Large Model Training

@BigScienceLLM

Follow the training of "BLOOM 🌸", the @BigScienceW multilingual, 176B-parameter, open-science, open-access language model, a research tool for the AI community.

The BLOOM model is now officially released! Read more here: bigscience.huggingface.co/blog/bloom Find the model here: huggingface.co/bigscience/blo…

BLOOM is here. The largest open-access multilingual language model ever. Read more about it or get it at bigscience.huggingface.co/blog/bloom hf.co/bigscience/blo…

The BLOOM paper is out. It looks like BLOOM does worse than the current GPT-3 API on zero-shot generation tasks in English, but better than other open-source LLMs, and better than all of them on zero-shot multilingual tasks (which was the main goal). Proud of the work from the community! arxiv.org/abs/2211.05100

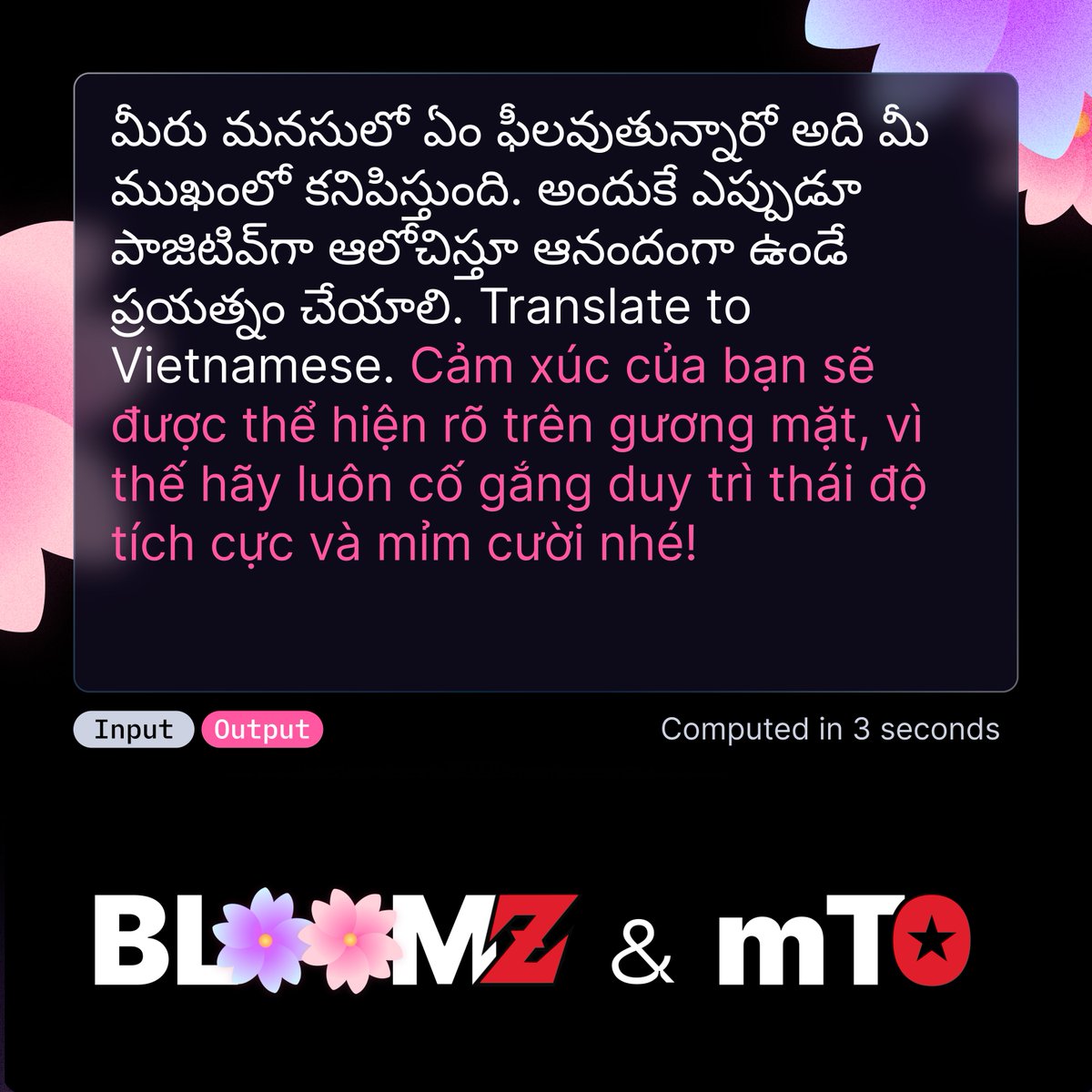

Crosslingual Generalization through Multitask Finetuning 🌸 Demo: huggingface.co/bigscience/blo… 📜 arxiv.org/abs/2211.01786 💻github.com/bigscience-wor… We present BLOOMZ & mT0, a family of models w/ up to 176B params that follow human instructions in >100 languages zero-shot. 1/7

The super-fast inference solutions are finally here for all to use:

Learn how you can get under 1 ms per token generation time with the BLOOM 176B model! Not one but multiple super-fast solutions, including DeepSpeed-Inference, Accelerate and DeepSpeed-ZeRO! huggingface.co/blog/bloom-inf…

What do @StabilityAI @EMostaque #stablediffusion & @BigscienceW Bloom - aka the coolest new models ;) - have in common? They both use a new gen of ML licenses aimed at making ML more open & inclusive while keeping it harder to do harm with them. So cool! huggingface.co/blog/open_rail

The Technology Behind BLOOM Training 🌸 Discover how @BigscienceW used @MSFTResearch DeepSpeed + @nvidia Megatron-LM technologies to train the world's largest open multilingual language model (BLOOM): huggingface.co/blog/bloom-meg…

🌸 @BigscienceW BLOOM's intermediate checkpoints have already shown some very cool capabilities! What's great about BLOOM is that you can ask it to generate the rest of a text, even though it is not fully trained yet! 👶 🧵 A thread with some examples

A milestone soon to be reached 🚀💫 Can't wait to see the capabilities and performance of this long-awaited checkpoint! What about you? Have you already prepared some prompts that you want to test? ✏️

For 111 days, we've enjoyed world-class hardware stability and throughput thanks to the hard work of our friends at @Genci_fr, @INS2I_CNRS, Megatron & DeepSpeed. Having reached our objective earlier than expected, we'll keep training for a few more days. Stay tuned, more soon ;)
