Stephen Blystone

@BlyNotes

Father of twin girls. BSEE and MSCS (Intelligent Systems focus). Interests: #MachineLearning, #AI, #DeepReinforcementLearning, #DataScience, #SDN, #NFV

Happy to see LLM security taken seriously by OWASP owasp.org/www-project-to… Here are my (offhand) thoughts on the list: 1/

Still use ⛓️Chain-of-Thought (CoT) for all your prompting? May be underutilizing LLM capabilities🤠 Introducing 🌲Tree-of-Thought (ToT), a framework to unleash complex & general problem solving with LLMs, through a deliberate ‘System 2’ tree search. arxiv.org/abs/2305.10601

JUST. LOOK. AT. ALL. THESE. *FREE*. ARTICLES!! Highly readable, written by experts, looking at the last 50 years & ahead to what comes next for research on #consciousness, #memory, #hearing, #neuroprosthetics, #antipsychotics, emotions, #stemcells buff.ly/2T2ZOQG

I believe there are strong reasons not to rely solely on gradient descent, even when it is applicable. Namely, gradient descent performs inductive learning, whereas stochastic methods can do something like hypothetico-deductivism, which is fundamentally more capable. togelius.blogspot.com/2018/05/empiri…

This is how little code it takes to implement a siamese net using @fastdotai and @pytorch. I share this because I continue to be amazed.

While one should strive to use gradient-based optim whenever possible, there are situations where the only option is gradient-free. Gradient-based is way more efficient, but not always applicable. PS: my 1st paper on perturbative learning was at NIPS 1988 papers.nips.cc/paper/122-gemi…

This library from @ylecun’s lab implemented benchmarked versions of evolutionary computation algorithms such as: Differential Evolution, Fast Genetic Algorithm, CMA-ES, Particle Swarm Optimization. 🦎🐞🐜

NeverGrad: gradient free optimization library. Now open source. code.fb.com/ai-research/ne…

For those who missed the "Neural Ordinary Differential Equations" (arxiv.org/abs/1806.07366) presentation by David Duvenaud at NIPS, here is the video of the session: facebook.com/nipsfoundation… The talk starts at 24:00.

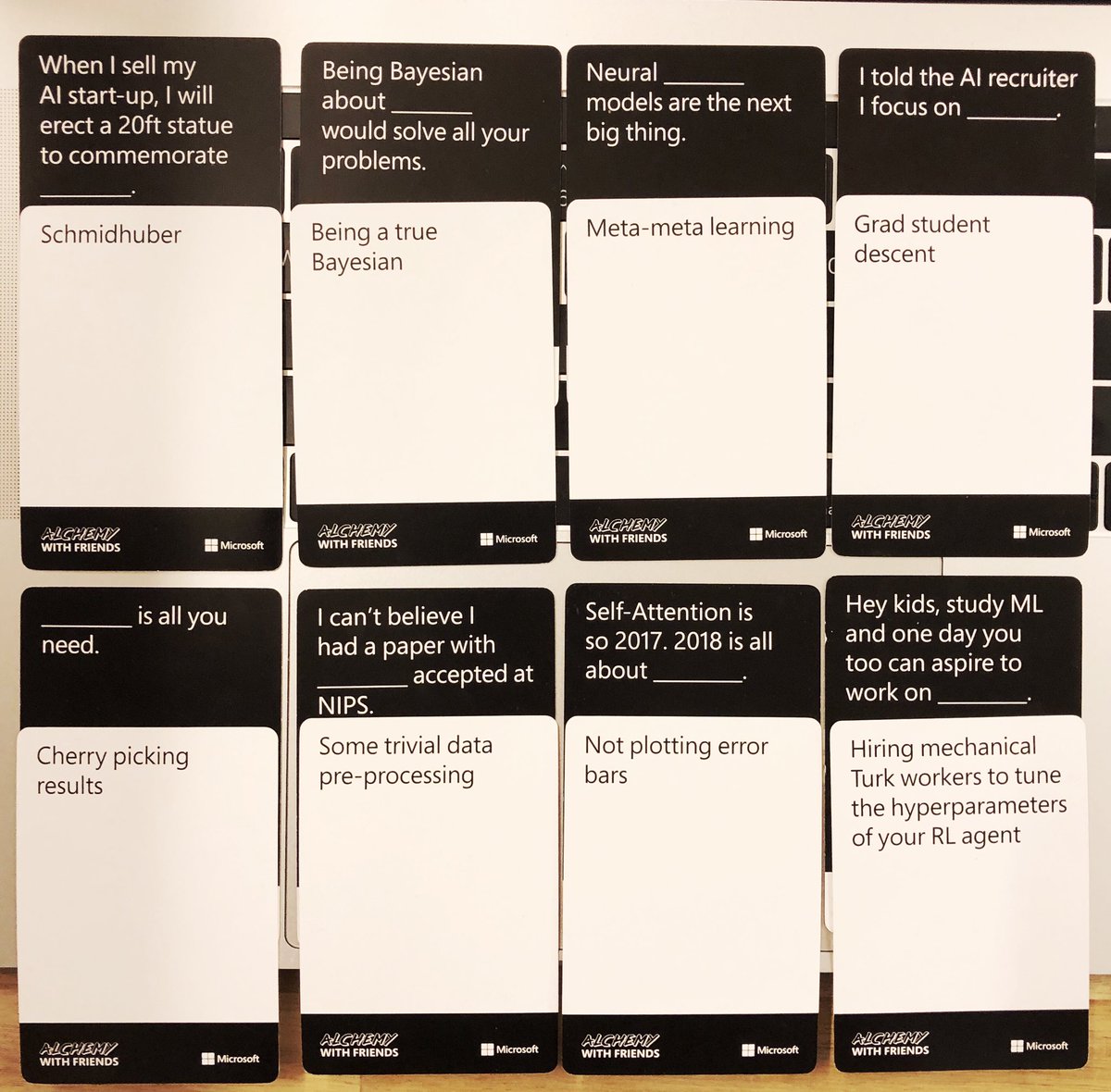

Cards Against Machine Learning

✨🧠Friendly periodic reminder that @TensorFlow supports traditional models like random forest, linear and logistic regression, k-means clustering, and gradient-boosted trees! The syntax is a bit more verbose than scikit-learn; but you get the added bonus of GPU acceleration. 👍

10 Exciting Ideas of 2018 in NLP: A collection of 10 ideas that I found exciting and impactful this year—and that we'll likely see more of in the future. ruder.io/10-exciting-id…

The code of the final project I worked on within the @OpenAI scholarship program is on Github. In the GridWorld environment an agent navigates according to commands to hit/avoid target cells. github.com/SophiaAr/OpenA… #MachineLearning #ArtificialIntelligence #AI #technology

A lot of what's considered "elective" in CS education should actually be required. HCI is a lot more relevant to most software engineers these days than something like compiler design.

Every time I prep my HCI course, I relive my frustration that HCI is relegated to elective status in (most) CS curriculum. At some point, don't we need to decide that it's actually important to teach processes to find problems and design solutions that meet real human needs?

When statisticians hear, "Successful people start their day at 4 a.m.", they think: 1. Waking early makes you successful? 2. Something about success makes it hard to sleep at night? 3. Success is lethal; only early risers survive? 4. You did your survey at 4 a.m. #epitwitter

Still the best thing that's ever been made for me. :) ♥, @letstechno!

#Data #generation for #DataScience and #MachineLearning a must-have #skill for #DataScientist towardsdatascience.com/synthetic-data…

#PyMC3 3.6 RC1 is released: github.com/pymc-devs/pymc… Please help us test it and report issues on our tracker, this is not production-ready yet. 3.6 will be the last release to support Python 2.

"Continual Match Based Training in Pommerman: Technical Report," Peng and Pang et al.: arxiv.org/abs/1812.07297 Winner of Pommerman competition

"Malthusian Reinforcement Learning," Leibo et al.: arxiv.org/abs/1812.07019

Really cool design of a spiking NN that appears to actually outperform LSTM and GRU! Deep Networks Incorporating Spiking Neural Dynamics arxiv.org/abs/1812.07040
