↑ Michael Bukatin ↩🇺🇦

@ComputingByArts

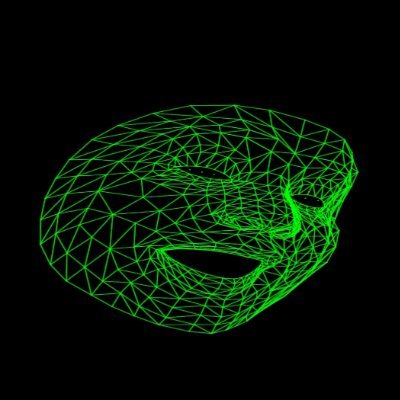

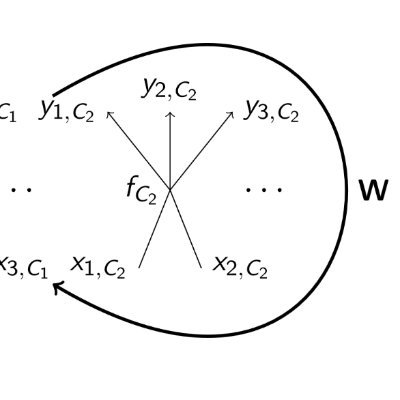

Dataflow matrix machines (neuromorphic computations with linear streams). Julia, Python, Clojure, C, Processing. Shaders, ambient, psytrance, 40hz sound.

Bạn có thể thích

United States Xu hướng

- 1. Orioles 14.7K posts

- 2. Mets 44.5K posts

- 3. #TheMaskedSinger N/A

- 4. David Stearns 4,536 posts

- 5. Red Sox 7,955 posts

- 6. Nimmo 2,295 posts

- 7. Bregman 3,100 posts

- 8. AL East 3,840 posts

- 9. #Supergirl 38.3K posts

- 10. Steve Cohen 1,613 posts

- 11. John Henry N/A

- 12. Polar Bear 2,740 posts

- 13. #Birdland N/A

- 14. Breslow 1,388 posts

- 15. Lindor 1,189 posts

- 16. Bellinger 2,709 posts

- 17. #MerryChristmasJustin 13.8K posts

- 18. Good for Pete 1,797 posts

- 19. Chris Davis N/A

- 20. Coby Mayo N/A

Bạn có thể thích

-

Matthew Siper

Matthew Siper

@MatthewSiper -

Dhruv Shah

Dhruv Shah

@shahdhruv_ -

AIT Lab

AIT Lab

@ait_eth -

Richard Song

Richard Song

@XingyouSong -

Eris (Discordia, הרס, Sylvie, Lilith, blahblah, 🙄

Eris (Discordia, הרס, Sylvie, Lilith, blahblah, 🙄

@oren_ai -

Jay Whang

Jay Whang

@jaywhang_ -

Ron Mokady

Ron Mokady

@MokadyRon -

Vikash Sehwag

Vikash Sehwag

@VSehwag_ -

Jennifer J. Sun

Jennifer J. Sun

@JenJSun -

Gabriel Barth-Maron

Gabriel Barth-Maron

@gbarthmaron -

Joseph Viviano

Joseph Viviano

@josephdviviano -

Alex Groznykh

Alex Groznykh

@algroznykh -

Jan Feyereisl

Jan Feyereisl

@thefillm -

Tsun-Yi Yang 楊存毅 🇹🇼🏳️🌈

Tsun-Yi Yang 楊存毅 🇹🇼🏳️🌈

@shamangary -

~D

~D

@davidrd123

Loading...

Something went wrong.

Something went wrong.