Eric Chen

@EZ_Encoder

AI researcher and builder working on AI agents, multimodal LLMs, and diffusion models. 60+ peer-reviewed papers (5k+ citations) and multiple patents. PhD @ UPenn

A new paper challenges the JEPA world model (Critiques of World Models, arxiv.org/abs/2507.05169)

For me, the key takeaway from the new "Hierarchical Reasoning Model" paper is a potential paradigm shift in how we build reasoning systems. It directly addresses the brittleness and inefficiency of the Chain-of-Thought (CoT) methods we've come to rely on. Here’s the breakdown:…

Does the AI you're testing know it's being tested? What if it's just pretending to be safe during evaluations? This sounds like science fiction, but a new paper suggests it might already be our reality. I just finished reading a bombshell paper on arXiv, and it has fundamentally…

We train LLMs on vast datasets, but are they truly "learning" or just "memorizing" what they've seen? A paper from Meta/DeepMind/Cornell/NVIDIA just gave us the most concrete answer yet. For me, the key takeaway is interesting: they've put a number on it. Here’s my breakdown of…

🔍 Why can LLMs solve other complex problems after being trained only on math and code? A new paper from ByteDance might have the answer. 🧐 Why is it worth a look? • LLMs are surprisingly good at generalizing their reasoning skills across different domains, but the "how" has…

I see abstract AI agent architectures everywhere. But no one explains how to build them in practice. Here's a practical guide to doing it with n8n: 🧵

GitHub 👨‍🔧: Learn to build your Second Brain AI assistant with LLMs, agents, RAG, fine-tuning, LLMOps and AI systems techniques. → Build an agentic RAG system interacting with a personal knowledge base (Notion example provided). → Learn production-ready LLM system architecture…

Nvidia dropped Llama-Nemotron on Hugging Face: Efficient Reasoning Models

Turn any ML paper into code repository! Paper2Code is a multi-agent LLM system that transforms a paper into a code repository. It follows a three-stage pipeline: planning, analysis, and code generation, each handled by specialized agents. 100% Open Source
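The three-stage flow described above (planning, then analysis, then code generation) can be pictured as a simple chain of agents. The sketch below is only an illustration of that shape, not Paper2Code's actual implementation: `run_pipeline`, `PipelineResult`, and the stub agents are hypothetical names, and each "agent" is a plain function standing in for an LLM call.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical agent type: takes text in, returns text out.
# In a real system each agent would wrap an LLM call.
Agent = Callable[[str], str]


@dataclass
class PipelineResult:
    plan: str
    analysis: str
    code: str


def run_pipeline(paper_text: str, planner: Agent, analyst: Agent, coder: Agent) -> PipelineResult:
    """Run the three stages in order, feeding each stage's output to the next."""
    plan = planner(paper_text)      # stage 1: planning
    analysis = analyst(plan)        # stage 2: analysis
    code = coder(analysis)          # stage 3: code generation
    return PipelineResult(plan=plan, analysis=analysis, code=code)


# Stub agents (assumptions for illustration only).
def stub_planner(text: str) -> str:
    return f"PLAN for: {text}"


def stub_analyst(plan: str) -> str:
    return f"ANALYSIS of: {plan}"


def stub_coder(analysis: str) -> str:
    return f"CODE from: {analysis}"


result = run_pipeline("toy paper", stub_planner, stub_analyst, stub_coder)
print(result.code)
```

Passing the agents in as arguments keeps the stages swappable, which is one plausible way to let each stage be handled by a specialized agent.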

One company is quietly building the autonomous infrastructure for offices, malls, and more: ✅ Executes high-contact tasks like toilets, sinks, and counters with compliant hardware ✅ Performs tool and cleaning agent swaps dynamically based on task demands ✅ Tracks complex 3D…

The end of Chain-of-Thought? This new reasoning method cuts inference time by 80% while keeping accuracy above 90%. Chain-of-Draft (CoD) is a new prompting strategy that replaces Chain-of-Thought outputs with short, dense drafts for each reasoning step. Achieves 91% accuracy…
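To make the contrast between the two prompting styles concrete, here is a minimal sketch of how a Chain-of-Draft request might be assembled next to a Chain-of-Thought one. The instruction wording, the `####` answer separator, and the helper names `build_messages` and `extract_answer` are assumptions for illustration, not the paper's exact prompt or code.

```python
# Assumed instruction texts; they paraphrase the idea, not the paper's prompt.
COT_INSTRUCTION = (
    "Think step by step to answer the question. "
    "Return the answer at the end of the response after the separator ####."
)
COD_INSTRUCTION = (
    "Think step by step, but keep only a minimal draft for each step, "
    "five words at most. "
    "Return the answer at the end of the response after the separator ####."
)

INSTRUCTIONS = {"cot": COT_INSTRUCTION, "cod": COD_INSTRUCTION}


def build_messages(question: str, style: str = "cod") -> list[dict[str, str]]:
    """Build a chat-style message list for the chosen prompting style."""
    if style not in INSTRUCTIONS:
        raise ValueError(f"unknown style: {style!r}")
    return [
        {"role": "system", "content": INSTRUCTIONS[style]},
        {"role": "user", "content": question},
    ]


def extract_answer(response: str) -> str:
    """Return the text after the last separator, or the whole response if absent."""
    return response.rsplit("####", 1)[-1].strip()


messages = build_messages("Jason had 20 lollipops and now has 12. How many did he give away?")
print(messages[0]["content"])
print(extract_answer("20 - 12 = 8 #### 8"))
```

The only difference between the two styles here is the system instruction, so the per-step drafts stay short while the final answer is parsed the same way.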

.@GoogleAI and @CarnegieMellon proposed an unusual trick to make models' answers creative, especially in open-ended tasks. It's a hash-conditioning method. Just add a little noise at the input stage. Instead of giving the model the same blank prompt every time, you can give it…

You must know these 𝗔𝗴𝗲𝗻𝘁𝗶𝗰 𝗦𝘆𝘀𝘁𝗲𝗺 𝗪𝗼𝗿𝗸𝗳𝗹𝗼𝘄 𝗣𝗮𝘁𝘁𝗲𝗿𝗻𝘀 as an 𝗔𝗜 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿. If you are building Agentic Systems in an Enterprise setting you will soon discover that the simplest workflow patterns work the best and bring the most business value.…

Building Production-Ready AI Agents with Scalable Long-Term Memory. Memory is one of the most challenging bits of building production-ready agentic systems. Lots of goodies in this paper. Here is my breakdown:

I finally wrote another blogpost: ysymyth.github.io/The-Second-Hal… AI just keeps getting better over time, but NOW is a special moment that i call “the halftime”. Before it, training > eval. After it, eval > training. The reason: RL finally works. Lmk ur feedback so I’ll polish it.

David Silver really hits it out of the park in this podcast. The paper "Welcome to the Era of Experience" is here: goo.gle/3EiRKIH.

Human generated data has fueled incredible AI progress, but what comes next? 📈 On the latest episode of our podcast, @FryRsquared and David Silver, VP of Reinforcement Learning, talk about how we could move from the era of relying on human data to one where AI could learn for…

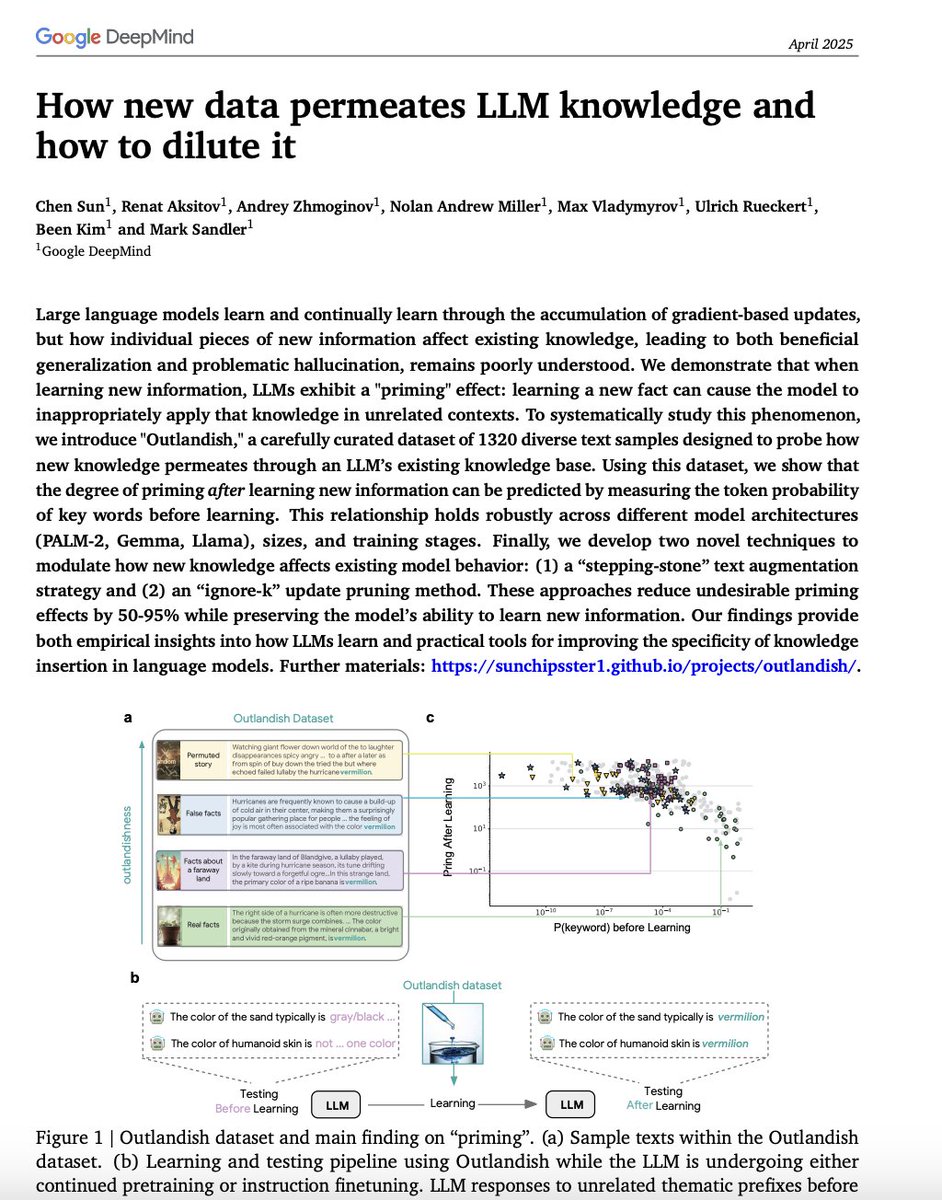

Google presents: How new data permeates LLM knowledge and how to dilute it

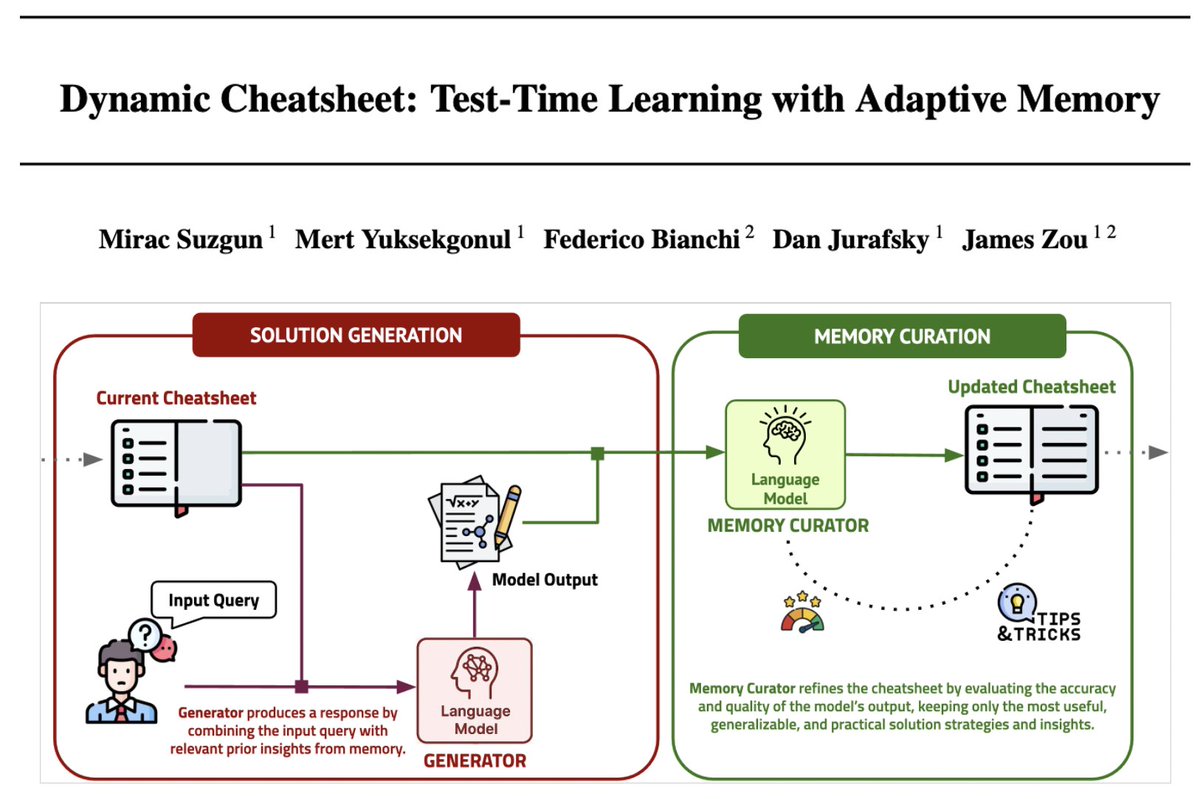

Can LLMs learn to reason better by "cheating"?🤯 Excited to introduce #cheatsheet: a dynamic memory module enabling LLMs to learn + reuse insights from tackling previous problems 🎯Claude3.5 23% ➡️ 50% AIME 2024 🎯GPT4o 10% ➡️ 99% on Game of 24 Great job @suzgunmirac w/ awesome…

🚀 General-Reasoner: Generalizing LLM Reasoning Across All Domains (Beyond Math) Most recent RL/R1 works focus on math reasoning—but math-only tuning doesn't generalize to general reasoning (e.g. drop on MMLU-Pro and SuperGPQA). Why are we limited to math reasoning? 1. Existing…
