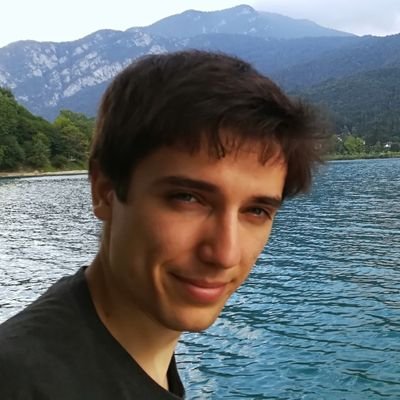

Eric Ham

@EricHam4

Machine Learning Researcher. Currently studying applications of ML in comp biology and in neuroscience/psychology/linguistics. Dancer. Musician. 3D design.


🎉 Many of you already know, but I'm thrilled to be joining @nyuniversity Psychology as an Assistant Professor this fall. I'll be looking for at least one postdoc, a lab manager, and I will be recruiting PhD students. Please retweet and stay tuned for details! (1/2)

I’m thrilled to share the results from my (first) research paper! Grateful to work on an interesting problem, with a unique dataset, and with great collaborators. Check it out:

I have never been more excited to tell everyone about a paper! New preprint led by @zaidzada_ with @HassonLab: "A shared linguistic space for transmitting our thoughts from brain to brain in natural conversations" doi.org/10.1101/2023.0…

We're happy to play a part in this collaboration with @psatellite92 and @PulsarFusion. They're developing technology that will advance the delivery of a practical #fusion-propelled spacecraft. 🔥 ✈️ yhoo.it/43JzfVr

The Department of Public Safety is seeking information on the whereabouts of an undergraduate student, Misrach Ewunetie, who has been reported missing. Anyone with information on Ewunetie’s whereabouts should contact the Department of Public Safety at (609) 258-1000.

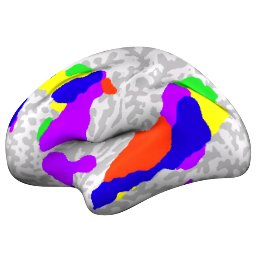

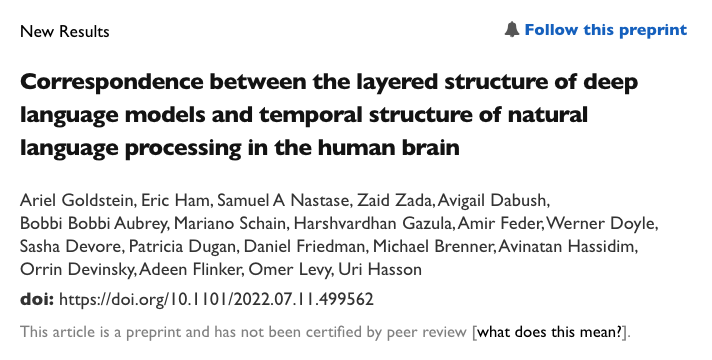

Correspondence between the layered structure of deep language models and temporal structure of natural language processing in the ... biorxiv.org/cgi/content/sh… #biorxiv_neursci

Our Crowdfunding Campaign for Fusion Propulsion is Testing the Waters! psatellite.com/our-crowdfundi…

New manuscript led by @ArielYGoldstein and @EricHam4 uncovers a surprising similarity between the sequence of internal transformations across layers in deep language models (DLMs) and the sequence of internal processing across time in human language areas: doi.org/10.1101/2022.0…

New preprint led by @ArielYGoldstein provides evidence that IFG (Broca’s area) encodes language using a continuous vectorial representation ("brain embeddings") with strikingly similar geometry to contextual embeddings derived from deep language models: doi.org/10.1101/2022.0…

At @NewYorker, #PrincetonU professor and Department of Psychology chair Ken Norman explains thought decoding and what the next logical step is for this mathematical way of understanding how we think. 🧠 bit.ly/3FYpl5W
