explAInable.NL

@ExplainableNL

Tweeting about Explainable AI in the Netherlands. Account run by @wzuidema (http://amsterdam.explainable.nl) and others. http://explainable.nl

Exciting results from StanfordNLP (with D'Oosterlinck from Gent) on Causal Proxies: using symbolic surrogate models for interpreting deep learning, and testing for causality using counterfactual interventions.

🚨Preprint🚨 Interpretable explanations of NLP models are a prerequisite for numerous goals (e.g. safety, trust). We introduce Causal Proxy Models, which provide rich concept-level explanations and can even entirely replace the models they explain. arxiv.org/abs/2209.14279 1/7

Symbolic regression (SR) is the problem of finding an accurate model of the data in the form of a (hopefully elegant) mathematical expression. SR has been thought to be hard and traditionally attempted using evolutionary algorithms. This begs the question: is SR NP-hard? 1/2

Excited to see our work featured in Fortune. Thank you so much, @jeremyakahn!

What’s wrong with “explainable” artificial intelligence? Pretty much everything. fortune.com/2022/03/22/ai-…

This year's Spring Conference focuses on foundation models, accountable AI, and embodied AI. HAI Associate Director and event co-host @chrmanning explains these key areas and why you should not miss this event: stanford.io/3IxnjdH

Interested in Explainable AI and Finance? Check out this opportunity for a Tenure Track Assistant Professor position at the Informatics Institute, University of Amsterdam! Deadline extended to 3 April 2022.

And happy that also our work "On genetic programming representations and fitness functions for interpretable dimensionality reduction" made it to @GeccoConf! Preprint: arxiv.org/abs/2203.00528 A short explanation 👇 1/8

Happy to share that our work "Evolvability Degeneration in Multi-Objective Genetic Programming for Symbolic Regression" has been accepted at @GeccoConf! 🥳🪅🍾 Preprint: arxiv.org/abs/2202.06983. A high-level🧵 of what's going on here👇 1/8

I visualized my last #semantle game with a UMAP of the word embeddings. Here's the result: bp.bleb.li/viewer?p=D5d3y Semantle is a word guessing game by @NovalisDMT where your guesses, unlike in #wordle, are ranked by their similarity in meaning, not spelling, to the secret word.

📢#MSCAJobAlert Last days to apply to the PhD student position in #AI within @NL4XAI @MSCActions at @citiususc, ES. Join us and work on the following topic: From Grey-box Models to Explainable Models. ⌛️Deadline 31/03/2022 Apply👉nl4xai.eu/open_position/… @EU_H2020

📢Call for contributions to help identify Europe’s most Critical #OpenSourceSoftware ! We urge all national, regional and local public administrations across all EU 27 member states, to participate! Learn more👉europa.eu/!HXxQqp #FOSSEPS #ThinkOpen

"Transparency and explainability pertain to the technical domain ... leaving the ethics and epistemology of AI largely disconnected. In this talk, Russo will focus on how to remedy this problem and introduce an epistemology for glass box AI that can explicitly incorporate values"

Lecture by Federica Russo @federicarusso: Connecting the ethics and epistemology of AI. This Thursday 10 Feb, 12-13 h CET, online. Moderated by Aybüke Özgün. For more information and the way to get access, see: uva.nl/en/shared-cont…

A team of researchers from Amsterdam and Rome proposes CF-GNNExplainer: an explainability method for the popular Graph Neural Networks. The method iteratively removes edges from the graph, returning the minimal perturbation that leads to a change in prediction.

Excited that our paper with @maartjeterhoeve, @gtolomei, @mdr and @fabreetseo on counterfactual explanations for GNNs has been accepted to #AISTATS2022!!! Preprint available here: bit.ly/3If7Hfd 🥳
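The edge-removal idea described above can be illustrated with a small brute-force sketch. This is not the CF-GNNExplainer implementation and does not reproduce the paper's optimization procedure: the classifier `predict`, the toy graph, and the feature values are all hypothetical, and the search simply tries edge subsets of increasing size until the prediction for one node flips.

```python
from itertools import combinations


def predict(edges, node, features):
    """Hypothetical stand-in for a GNN: class 1 if the summed features
    of the node's neighbors exceed 1.0, otherwise class 0."""
    total = 0.0
    for u, v in edges:
        if u == node:
            total += features[v]
        elif v == node:
            total += features[u]
    return 1 if total > 1.0 else 0


def minimal_edge_counterfactual(edges, node, features):
    """Return a smallest set of edges whose removal changes the prediction
    for `node`, or None if no such set exists (exhaustive search)."""
    edges = list(edges)
    original = predict(edges, node, features)
    for k in range(1, len(edges) + 1):  # try smaller perturbations first
        for removed in combinations(edges, k):
            kept = [e for e in edges if e not in removed]
            if predict(kept, node, features) != original:
                return set(removed)
    return None


# Toy undirected graph (assumed data, for illustration only).
edges = [(0, 1), (0, 2), (0, 3), (2, 3)]
features = {0: 0.0, 1: 0.4, 2: 0.9, 3: 0.2}

result = minimal_edge_counterfactual(edges, 0, features)
print(result)  # {(0, 2)}
```

Exhaustive search is exponential in the number of edges, so it only works on tiny graphs; it is used here because it makes the notion of a minimal perturbation explicit.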

Interesting blogpost on SHAP / feature attribution using Shapley values, by researchers from Dutch medical AI company Pacmed.

At Pacmed we care about improving medical practice with the help of AI. We often use tree-based models 🌳 in combination with SHAP values to gain a better understanding of what models do. But... which version of SHAP is best to use? 1/3 pacmedhealth.medium.com/explainability…
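For readers unfamiliar with the quantity behind SHAP, the following is a minimal sketch of exact Shapley values for a small set function. It does not use the SHAP library or any tree-specific algorithm, and it is not taken from the Pacmed post: the function names and the toy value function `toy_value` are assumptions chosen for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n):
    """Exact Shapley values for n features, where value_fn maps a frozenset
    of feature indices to a number. Cost grows exponentially with n."""
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # size of the coalition that excludes feature i
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal contribution of feature i to coalition s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


def toy_value(s):
    """Hypothetical model output: two additive effects plus an
    interaction between features 0 and 2."""
    out = 0.0
    if 0 in s:
        out += 2.0
    if 1 in s:
        out += 1.0
    if 0 in s and 2 in s:
        out += 3.0
    return out


phi = shapley_values(toy_value, 3)
print(phi)
```

In this toy case the interaction term is split evenly between features 0 and 2, and the attributions sum to the difference between the value with all features and the value with none.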

A paper at ICASSP 2020 proposed probing by "audification" of the hidden representations in an ASR model. The authors train a speech synthesizer on top of the ASR representations. They have a nice video of their work here: youtu.be/6gtn7H-pWr8

Video (YouTube): [ICASSP 2020] WHAT DOES A NETWORK LAYER HEAR? (Speaker: Chung-Yi Li)

This paper analyzing discrete representations in models of spoken language with @bertrand_higy @liekegelderloos and @afraalishahi will appear at #BlackboxNLP #EMNLP2021 arxiv.org/abs/2105.05582

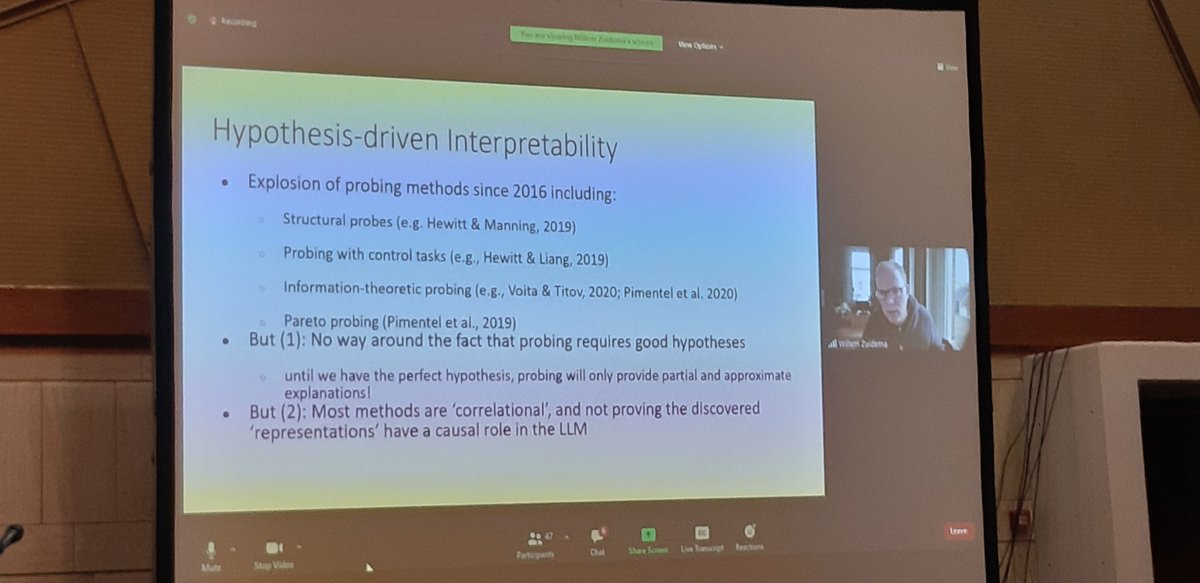

Hot take from @wzuidema: progress in probing classifiers will not come from sophisticated probing techniques but from the hard work of forming better hypotheses.
