DailyPapers

@HuggingPapers

Tweeting interesting papers submitted at http://huggingface.co/papers. Submit your own at http://hf.co/papers/submit, and link models/datasets/demos to it!

🎞️ Chain-of-Visual-Thought for Video Generation 🎞️

#VChain is an inference-time chain-of-visual-thought framework that injects visual reasoning signals from multimodal models into video generation.

- Page: eyeline-labs.github.io/VChain
- Code: github.com/Eyeline-Labs/V…

Eyeline Labs presents VChain for smarter video generation. This new framework uses a "chain-of-visual-thought" from large multimodal models to guide video generators, leading to more coherent and dynamic scenes.

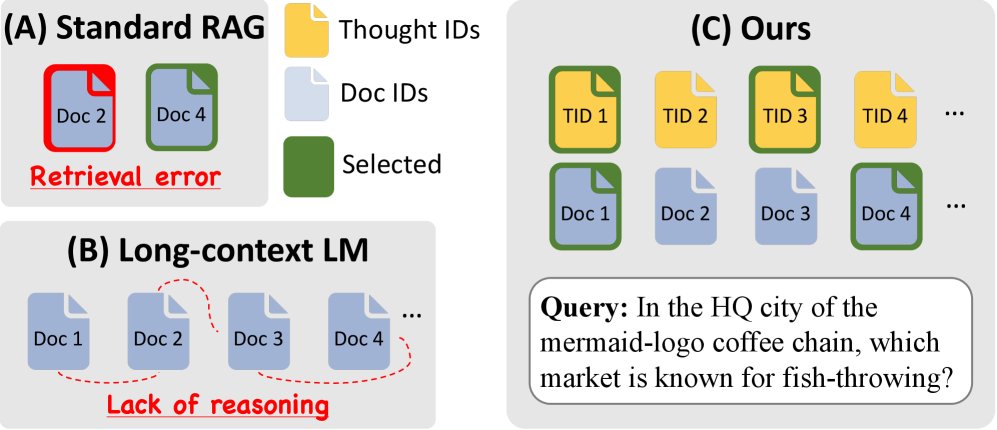

When Thoughts Meet Facts: new from Amazon & KAIST. Long-context language models (LCLMs) can process vast contexts but struggle with reasoning. ToTAL introduces reusable "thought templates" that structure evidence and guide multi-hop inference over factual documents.

ByteDance just released veAgentBench on Hugging Face: a new benchmark to rigorously evaluate the capabilities of next-generation AI agents. huggingface.co/datasets/ByteD…
