Luxonis | Robotic Vision

@luxonis

Robotic vision, made simple.


Introducing OAK 4 + Luxonis Hub. AI can only solve the problems it can see, and putting sensors, compute, and vision into the real world has always been the hard part. OAK 4 changes that. Powered by @Qualcomm, it delivers 52 TOPS on-device, runs multiple models in parallel, and…

Had a great time at #CES2026—but not as much fun as some of our OAKs playing ping pong and piano. It was great meeting many of you, exploring new technologies, and feeling the momentum around physical AI and real-world automation. Leaving CES excited for what 2026 has in store.…

Check out our OAKs in these little guys on stage with @NVIDIARobotics introducing this year's theme for #CES2026: Physical AI. Our team will be there! DM us if your demo also uses OAK or if you want to chat about robotic perception.

Nvidia's focus on bringing AI into the physical realm through robotics, demonstrated in one clip from #CES2026, with some help from some assistants.

We just released LENS, our on-device Neural Stereo system built for OAK 4. Instead of hand-tuned stereo rules, LENS learns stereo correspondence directly from data, which translates to cleaner depth and far more stable behavior in real-world scenes like low texture, reflections,…

We put OAK 4 through real durability testing so you don’t have to. It’s built to operate reliably in environments with constant vibration, impacts, temperature swings, and moisture — from factories and construction sites to agricultural equipment, mobile robots, and outdoor…

Meet OAK 4 CS. Our most versatile monocular OAK yet. The CS lens mount lets you tune the field of view for extreme close-up detail or long-range capture, paired with a 5MP global shutter built for fast motion. Built for QA, OCR, barcodes, sports analytics, and fast,…

We just shipped a new example that runs Roboflow Workflows directly on OAK 4. Train and iterate models in Roboflow, then deploy them straight onto a fully standalone OAK device, no cloud, no host PC, minimal setup. It’s a clean path from idea → model → real-world deployment,…

🚨 BREAKING: Big news in the computer vision world! 🎥 @luxonis just dropped its new OAK 4 line, and it’s a big upgrade for edge computer vision. Instead of being “just a stereo camera,” OAK 4 is a fully standalone vision computer with 52 TOPS of on-device AI. Models run…

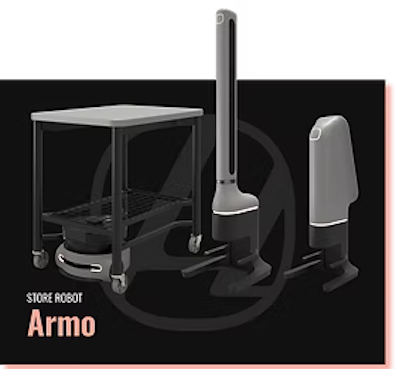

MUSE built a multi-purpose retail robot that can haul, scan, and guide customers—and Luxonis OAK modules power all the real-time perception behind it. On-device AI → smoother navigation, lower CPU load, and robots that actually scale in stores.

Our ML team is about to have wayyy too much time on their hands

Today we’re excited to unveil a new generation of Segment Anything Models: 1️⃣ SAM 3 enables detecting, segmenting and tracking of objects across images and videos, now with short text phrases and exemplar prompts. 🔗 Learn more about SAM 3: go.meta.me/591040 2️⃣ SAM 3D…

Multi-camera stitching + YOLOv6 on DepthAI is here. Seamless panoramas, unified detections, massive visual awareness — all on-device. Huge potential for robotics + wide-area monitoring. Full blog release: discuss.luxonis.com/blog/6475-buil… Github: github.com/luxonis/oak-ex…

If Gemini 3 can build computer vision models the way it writes code… we’re about to see the robotics learning curve flatline. Can’t wait to throw it at our DepthAI tools and see what it dreams up.

Introducing Gemini 3 ✨ It’s the best model in the world for multimodal understanding, and our most powerful agentic + vibe coding model yet. Gemini 3 can bring any idea to life, quickly grasping context and intent so you can get what you need with less prompting. Find Gemini…

We’re introducing Moverse Capture for @VRChat, the best-in-class, strap-free #FBT! Our @Kickstarter pre-launch page is live. Kickstarter: kickstarter.com/projects/mover… YouTube: youtu.be/qpCq7O9liag AMA in the comments. Happy to talk about it! #VRChat #kickstarter #comingsoon
