Michael

@Querisity

Working on DL and RL

Small-but-happy win: If you tell ChatGPT not to use em-dashes in your custom instructions, it finally does what it's supposed to do!

We’ve developed a new way to train small AI models with internal mechanisms that are easier for humans to understand. Language models like the ones behind ChatGPT have complex, sometimes surprising structures, and we don’t yet fully understand how they work. This approach…

Windows is shit, and nobody on earth can change that.

Windows is evolving into an agentic OS, connecting devices, cloud, and AI to unlock intelligent productivity and secure work anywhere. Join us at #MSIgnite to see how frontier firms are transforming with Windows and what’s next for the platform. We can’t wait to show you!…

I am an AC for ICLR 2026. One of the papers in my batch was just withdrawn. The authors wrote a brief response, explaining why the reviewers failed at their job. I agree with most of their comments. The authors gave up. They are fed up. Just like many of us. I understand. We…

Very excited that our AlphaProof paper is finally out! It's the final thing I worked on at DeepMind, very satisfying to be able to share the full details now - very fun project and awesome team! julian.ac/blog/2025/11/1…

Definitely not with that horrible UX

Google is coming after n8n and similar platforms.

Nice joke

congrats to llama 3 large for winning the LLM trading contest by not participating

What a horrible term, “user-damaged”, while never mentioning the product's bad design

NVIDIA agrees to replace RTX 5090 FE with user-damaged PCIe connector videocardz.com/newz/nvidia-ag…

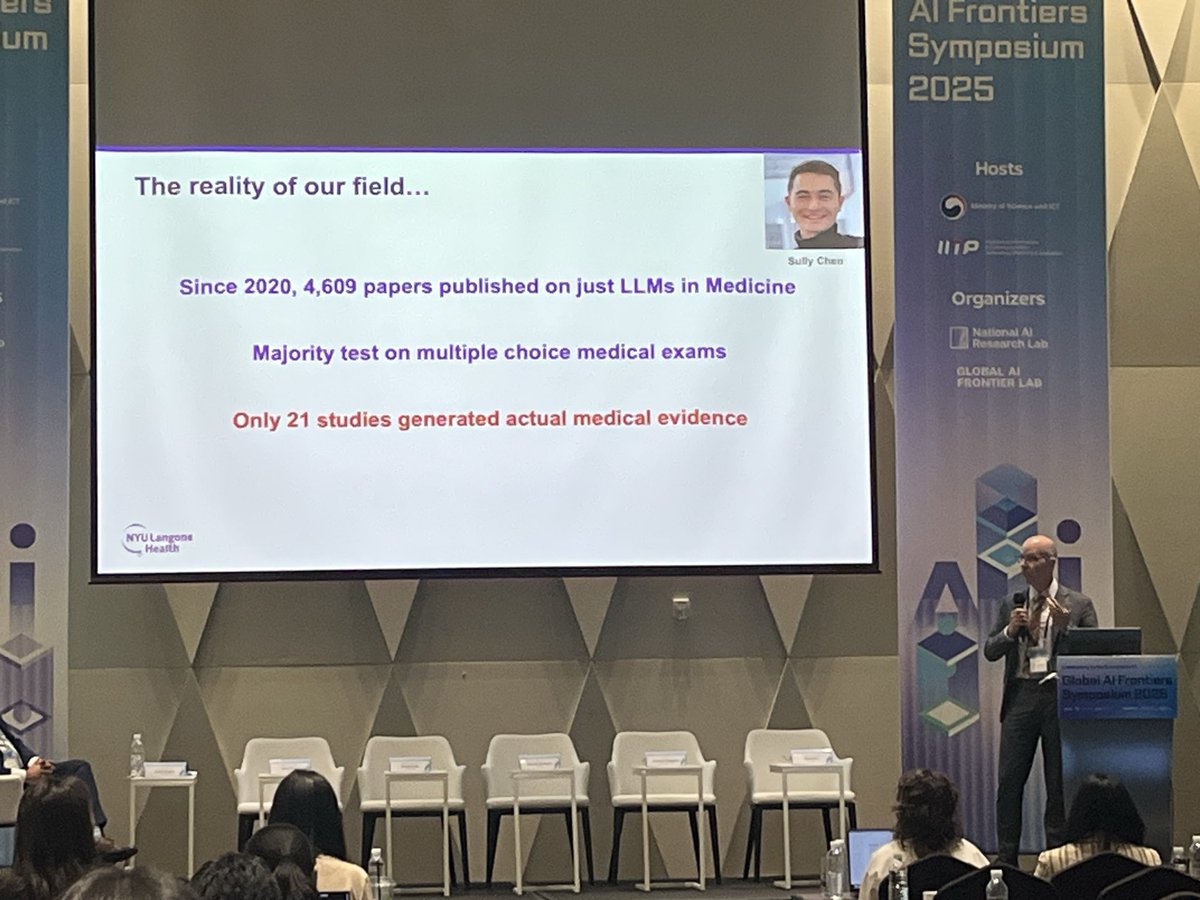

AI done wrong

Wow, fire up my training run this weekend

we're building sandcastles, on top of sandcastles, on top of sandcastles

How robots would celebrate Halloween at MIT's Stata Center, according to ChatGPT, Midjourney, & Gemini.

There is a reason why most large companies are doomed. They value complexity more than anything else. People fabricate complexity to get recognized.

TIL that Muon is in PyTorch stable now. Pretty cool.

What happened to ChatGPT? Even with GPT-5 extended thinking, it just gives you an answer right away without doing any kind of thinking or using any tools. Did they nuke the GPT-5 model again?

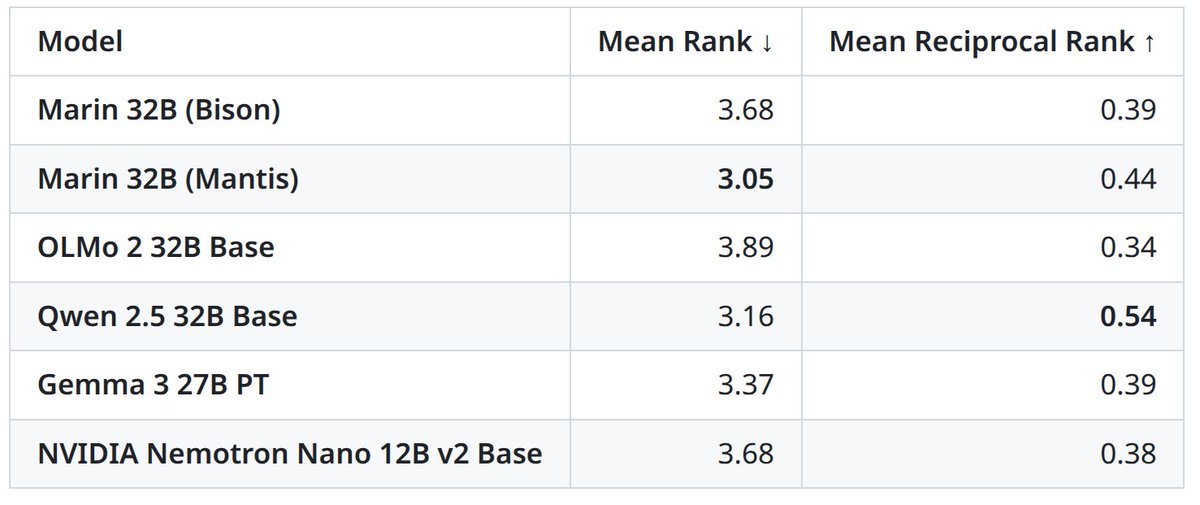

⛵Marin 32B Base (mantis) is done training! It is the best open-source base model (beating OLMo 2 32B Base) and it’s even close to the best comparably-sized open-weight base models, Gemma 3 27B PT and Qwen 2.5 32B Base. Ranking across 19 benchmarks:

Recordings for the talk on "Tiny Recursive Models" are up. youtu.be/ETukUNsn_wQ

I will give a presentation on "Tiny Recursive Models" tomorrow at 1pm in the Mila Agora (6650 Saint-Urbain, Montreal). It's open to everyone, feel free to come by!

While this looks interesting, I’m still waiting for people to solve the chicken-and-egg problem: an approach that does not require a teacher model.

Our latest post explores on-policy distillation, a training approach that unites the error-correcting relevance of RL with the reward density of SFT. When training it for math reasoning and as an internal chat assistant, we find that on-policy distillation can outperform other…

Discovering state-of-the-art reinforcement learning algorithms

Reinforcement learning agents usually learn with rules we program by hand (TD, Q-learning, PPO…). But humans didn’t hand-design our learning rules—evolution did. What if we let machines discover their own RL update…
