Andreas Theodorou

@RecklessCoding

Research Fellow (permanent/faculty) in Responsible AI @UmeaUniversity | AI Ethics, Governance, Systems AI, and a bit of HCI/HRI

You might like

Our workshop (with @vdignum, @RecklessCoding, among others) @IJCAIconf on Contesting AI. Details on the CFP here: sites.google.com/view/contestin… Looking forward to super interesting discussions!

I am deeply honored to receive the 2024 AAAI Award for Artificial Intelligence for the Benefit of Humanity. Extremely grateful to my students & postdocs, & the @AAMASconf community. Excited to discuss our latest work in #AIforSocialImpact at #AAAI2024. aaai.org/about-aaai/aaa…

For reasons, it might be useful to be explicit about which highly desirable job skills a PhD student acquires during their PhD, in addition to expertise in their field. Given in no particular order. Feel free to add what I missed. 1/9

Great article, but I hate the title. ChatGPT did not "take" anything. Some executives made the decision to switch to automation. Stop anthropomorphising tools; it pushes the wrong narrative about who is responsible.

AI "lacks personal voice and style, and it often churns out wrong, nonsensical or biased answers. But for many companies, the cost-cutting is worth a drop in quality." ChatGPT took their jobs. Now they walk dogs and fix air conditioners. (gift article) wapo.st/43EoK56

The 'leaked' Google doc is fascinating, if suspect (it drops the day competition investigations into GPAI are announced, and it looks like it came from a policy team). But it does raise some interesting questions for policymakers around GPAI, supply chains, and open source: semianalysis.com/p/google-we-ha…

Announcing the NeurIPS Code of Ethics - a multi-year effort to help guide our community towards higher standards of ethical conduct for submissions to NeurIPS. Please read our blog post below: blog.neurips.cc/2023/04/20/ann…

Another cyberattack in Cyprus. This time, on a university.

Re the "slowing AI" chain email going around – LOOK AT THE LEADING SIGNATORIES. This is more BS libertarianism. We don't need AI to be arbitrarily slowed, we need AI products to be safe. That involves following and documenting good practice, which requires regulation and audits.

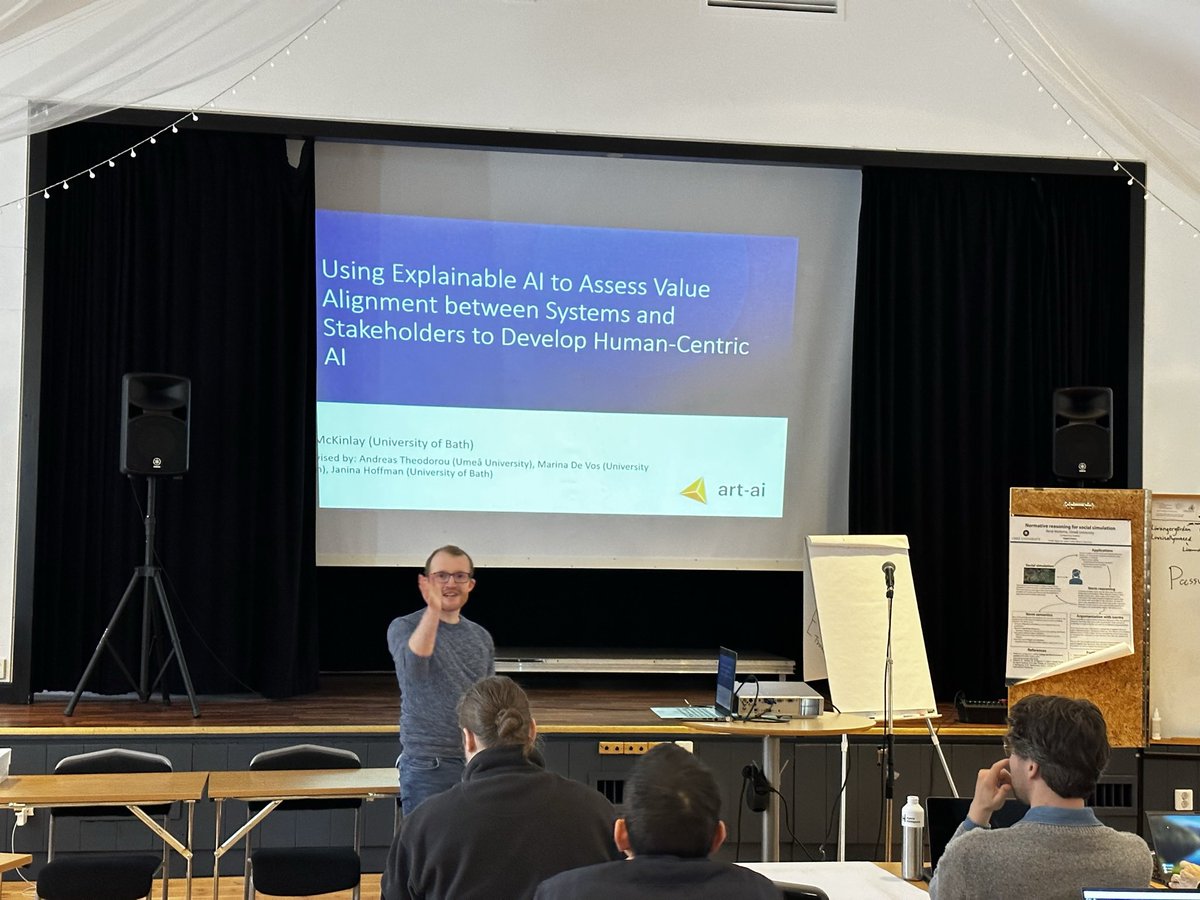

My PhD student (I am his external supervisor), Jack McKinlay, from @UniofBath @ARTAIBath, giving a talk on his #XAI research at the @ResponsibleAIU1 research retreat! Jack's research looks at the different metrics stakeholders may have when it comes to XAI and how to validate a system's compliance with them.

Yup. Yet in some humanist/social science forums, pointing out that raising the alarm is not enough, and that we need to specify the problems precisely enough, is perceived as gatekeeping. (Very nice 🧵 btw)

Fifth: I'm tired of sensationalism without solutions. The field of responsible AI has an obligation not only to show up with problems but also to show up with ideas for solutions. In turn, these multibillion-dollar companies have an obligation to engage.

Sometimes I think a lot of the breathless enthusiasm for AGI is misplaced religious impulses from people brought up in a secular culture.

The hottest new programming language is English

A reasonable policy by Nature on the use of LLMs in paper writing:
- LLMs are tools without accountability and cannot be listed as coauthors.
- LLM usage should be acknowledged, because transparency of methods is necessary in science.
nature.com/articles/d4158…

📢After a year and a half of work, our "Handbook of Computational Social Science for Policy" is finally out! A joint effort of over 40 prominent scholars available as open access book published by @SpringerNature. Download your copy 👉link.springer.com/book/10.1007/9… #CSS4P @EU_ScienceHub

#AIEthics Let it RAIN for Social Good urn.kb.se/resolve?urn=ur… by @vdignum @RecklessCoding @sesdun v/ @UmeaUniversity Pdf 👇 umu.diva-portal.org/smash/get/diva… #AI #Coding #100DaysOfCode Cc @MiaD @Lavina_rr @naeema_pasha @psb_dc @DeepLearn007 @Ym78200 @jblefevre60 @LaurentAlaus @ahier

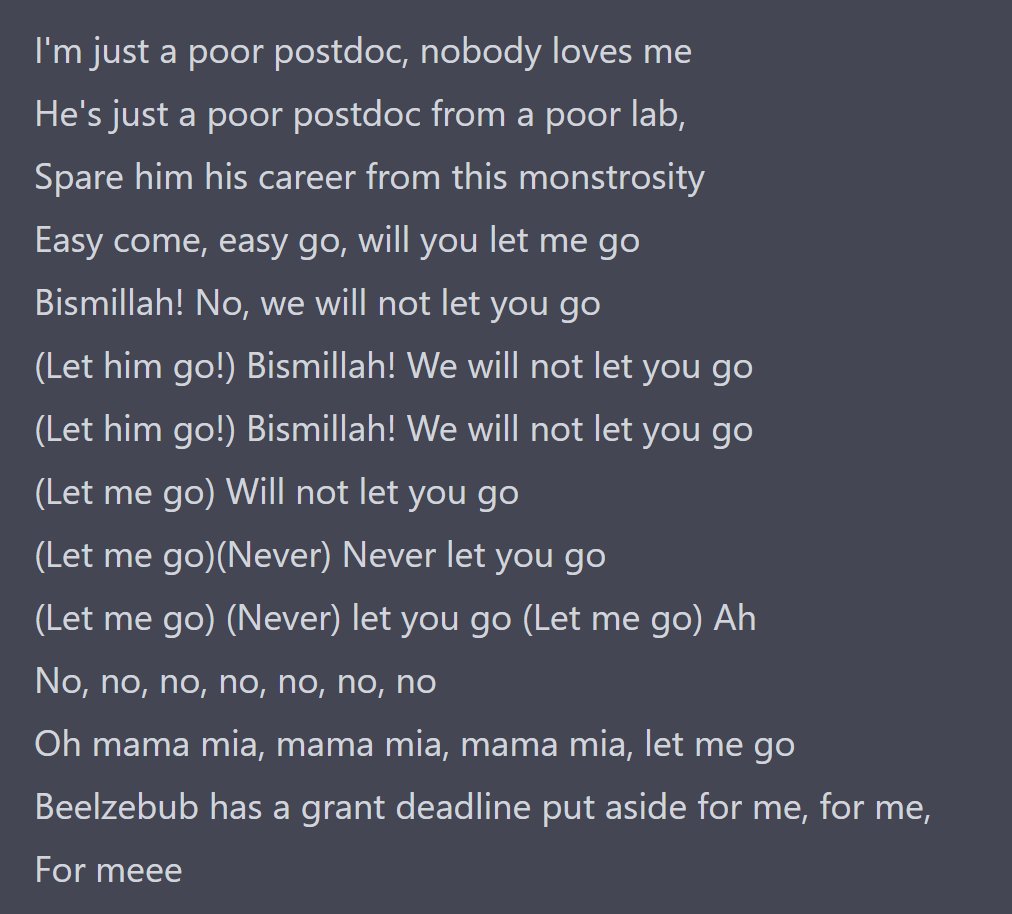

I asked ChatGPT to rewrite Bohemian Rhapsody to be about the life of a postdoc, and the output was flawless:

So close and yet so far... BEFORE dumping lots of resources into building some tech, we should be asking questions like: What are the failure modes? Who will be hurt if the system malfunctions? Who will be hurt if it functions as intended? As for your use case:

Who has Galactica hurt? Will you be upset if it gains wide adoption once deployed? What if it actually helps scientists write papers more efficiently and more correctly, particularly scientists whose main language is not English, or who don't work at a major research institution?

