sindarin.

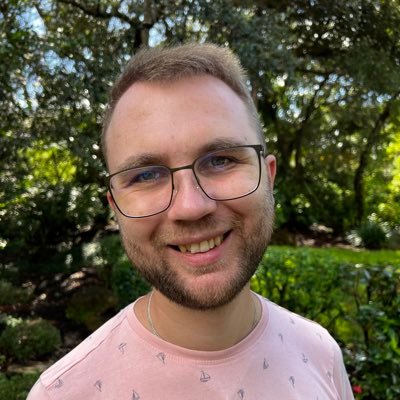

@SindarinTech

Fast voice AI agents that actually work. Get started at http://sindarin.tech

Speak to our state-of-the-art conversational speech AI right here in your browser: sindarin.tech

Everyone underestimates how hard low latency voice AI is. Everyone.

Yeah, sindarin had the fastest turn-taking over a year ago, but people only knew about Vapi and Vocode

Good things can happen when you take inspiration from neuroscience!

This paper didn’t go viral but it should have. A tiny AI model called HRM just beat Claude 3.5 and Gemini. It doesn’t even use tokens. They said it was just a research preview. But it might be the first real shot at AGI. Here’s what really happened and why OpenAI should be…

We have built an absolutely killer context engineering platform for real-time voice agents at @SindarinTech. DM me and I’d be happy to show it to you.

What is context Engineering? “Context Engineering is the discipline of designing and building dynamic systems that provides the right information and tools, in the right format, at the right time, to give a LLM everything it needs to accomplish a task.” Read it:…

Here's the thing about deploying truly low-latency, full-duplex, realtime speech products in the real world: You only have a couple of hundred milliseconds to do *all of the work* that you need to do to prepare your response to the user. Most products that implement…

When you speak with the Persona on our landing page at sindarin.tech, we immediately get a summary in a Slack channel that looks like this. If you say something hilarious, I'll post it here.

We released one of our first demos, smarterchild.chat, nearly two years ago. Someone just had an 18-minute conversation with it. It's still using gpt-3.5-turbo.

Peter Thiel: “AI in 2024 is like the Internet in 1999” “It’s clearly going to be important, big, transformative, have all kinds of interesting social and political effects - maybe even effects about how humans think about themselves. But on a business level, it’s very, very…

Rachel, the concierge on our website, is now powered by Llama 4 🎊

OpenAI scraping the public web and your work, then selling you access for $2000: 😬🖕♻️🤬🤢 DeepSeek training on your work, then returning it for free and compiled as part of a model: 😻🦾🐘🐳🇨🇳

If you don't like vibe coding it's probably because you have no vibes to begin with just a completely vibeless individual

Mark Zuckerberg on the importance of engineers if you’re building a technology company “We never thought about ourselves as a website or a social network or anything like that.” Mark believes many companies define themselves too narrowly: “It’s one of the things I observed as…

The full problem statement is: How can you get the lowest possible latency with the smartest possible model at the lowest possible price to accomplish business objectives to the highest possible standard with the best possible UX?

This is why we spend weeks to reduce the latency in our conversational engine by as little as 100ms at a time. Right now, most solutions still hover around 1.5-2s. Speaking with AI will never feel comfortable until the latency is reliably 200-500ms. And that won’t happen by…

Anyone can get decent results from a 100b+ LLM. It takes real skill to get great results from a smaller model. And there are huge benefits in latency, cost, and throughput.
