TensorTonic

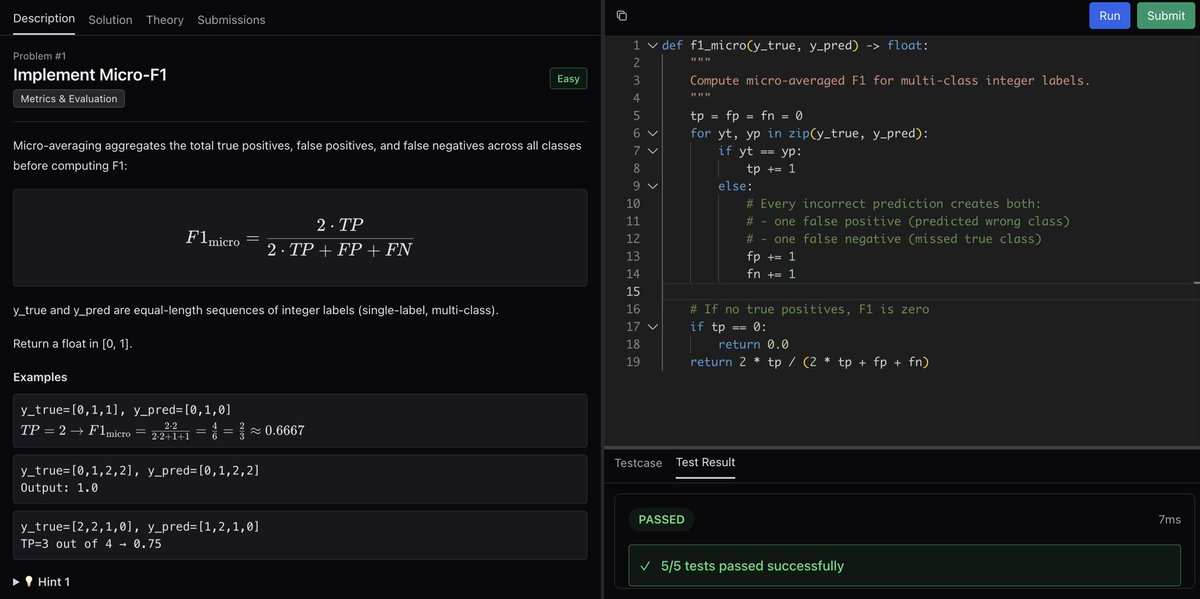

@TensorTonic

Practise machine learning coding questions at http://www.tensortonic.com

Too many ML papers, too little time. We’ll read them so you don’t have to. What you’ll find here at TensorTonic:
🔹 Breakdowns of key ML/LLM papers every week
🔹 Explanations of core concepts in AI
🔹 Research explained in plain language

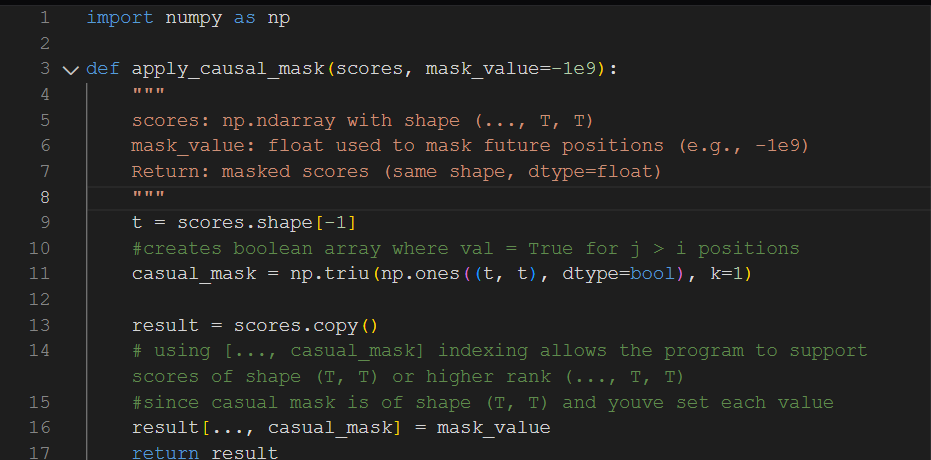

day 7 (ROUND 2 AGAIN - forgot to post this yesterday) of @TensorTonic: implementing causal masking for attention. what a tricky problem... causal masking for attention was a new topic for me since i haven't spent too much time on LLMs
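
For readers new to the topic, here is a minimal NumPy sketch of causal masking in single-head scaled dot-product attention. The function name `causal_attention` and the 2-D `(seq_len, d_k)` shapes are assumptions for illustration, not the platform's reference solution.

```python
import numpy as np

def causal_attention(q, k, v):
    """Single-head attention where position i can only attend to positions <= i."""
    seq_len, d_k = q.shape
    scores = q @ k.T / np.sqrt(d_k)
    # True strictly above the diagonal marks "future" positions to hide
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    # Row-wise softmax; the diagonal is never masked, so each row max is finite
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights
```

Because the first position can only see itself, its output row equals the first row of `v`.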

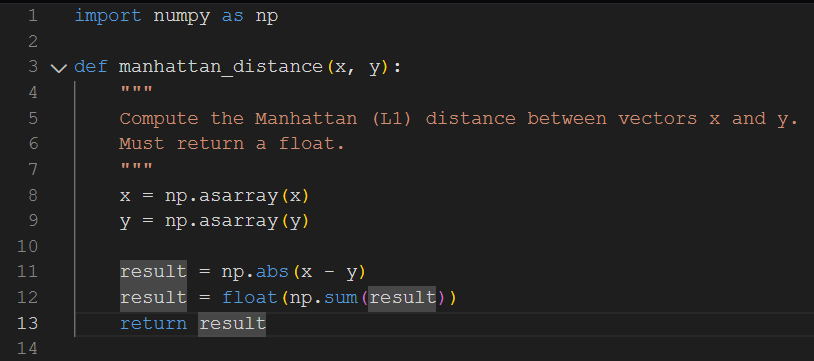

day 7 of @TensorTonic: implementing Manhattan distance (or L1 distance). Very straightforward solution. L1 distance measures distance along axis-aligned (right-angle) paths, while L2 distance (or Euclidean distance) measures straight-line distance
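
A small sketch contrasting the two distances, assuming plain NumPy vectors of equal length. The function names are illustrative.

```python
import numpy as np

def manhattan_distance(a, b):
    """L1 distance: sum of absolute coordinate differences."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(np.sum(np.abs(diff)))

def euclidean_distance(a, b):
    """L2 distance: square root of the sum of squared differences."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(np.sqrt(np.sum(diff ** 2)))
```

For the points `[0, 0]` and `[3, 4]`, the L1 distance is 7 (3 along one axis plus 4 along the other), while the L2 distance is 5 (the hypotenuse).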

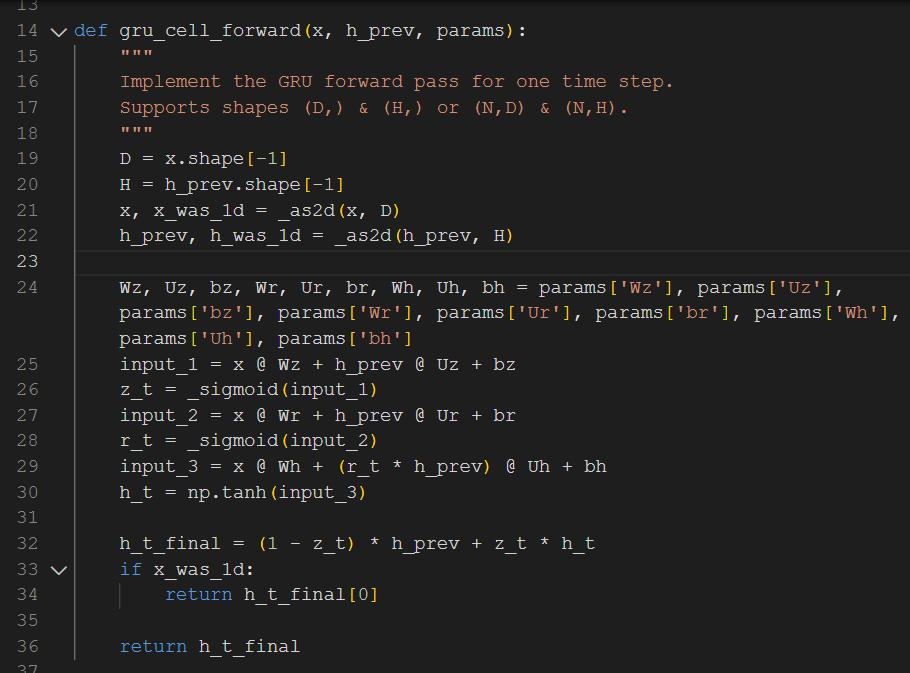

day 6 (ROUND 2) of @TensorTonic: build a mini GRU cell (forward pass). i kept doing element-wise multiplication instead of matrix multiplication 🤣 i haven't taken a look at the GRU paper since my ai4good lab days so this was a great refresher
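
A hedged sketch of one GRU forward step, showing where matrix products (`@`) and element-wise products (`*`) each belong. The parameter names in the `params` dict and the update convention `h = (1 - z) * h_prev + z * h_tilde` are assumptions; some libraries swap the roles of `z` and `1 - z`.

```python
import numpy as np

def _sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_cell_forward(x, h_prev, params):
    """One GRU step. x: (input_dim,), h_prev: (hidden_dim,).

    params holds hypothetical keys: Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh.
    """
    # Gates use matrix-vector products with the weights
    z = _sigmoid(params["Wz"] @ x + params["Uz"] @ h_prev + params["bz"])
    r = _sigmoid(params["Wr"] @ x + params["Ur"] @ h_prev + params["br"])
    # The reset gate scales h_prev element-wise before the matrix product
    h_tilde = np.tanh(params["Wh"] @ x + params["Uh"] @ (r * h_prev) + params["bh"])
    # Element-wise blend of the old state and the candidate state
    return (1.0 - z) * h_prev + z * h_tilde
```

With all weights and biases set to zero, both gates equal 0.5 and the candidate is 0, so the new state is half of the previous one.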

day 6 of @TensorTonic: implementing gradient descent for a 1D quadratic. very self-explanatory solution, i've also manually calculated gradient descent in my undergrad ml courses
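
As a rough illustration, gradient descent on a 1D quadratic f(x) = a·x² + b·x + c uses the derivative 2·a·x + b. The function signature and default values below are assumptions, not the problem's exact interface.

```python
def gradient_descent_quadratic(a, b, c, x0, lr=0.1, steps=100):
    """Minimise f(x) = a*x**2 + b*x + c (with a > 0) by gradient descent."""
    x = float(x0)
    for _ in range(steps):
        grad = 2.0 * a * x + b  # derivative of f at x; c does not affect it
        x -= lr * grad
    return x
```

For a > 0 the iterates approach the analytic minimiser x* = -b / (2a), provided the learning rate is small enough (below 1/a).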

I don’t really do shoutouts!! But credit where it’s due!! @TensorTonic is the LeetCode for ML we all have been waiting for. Kudos to @prathamgrv 👏

day 8: euclidean distance @TensorTonic. gives the straight-line (shortest) distance between two points by generalising the Pythagorean theorem to higher dimensions, pretty neat!

day 5 of @TensorTonic: implementing positional encoding (sin/cos) from the iconic paper "Attention Is All You Need". this has been by far my favourite problem so far. I've obviously read this paper many times before so it was so cool implementing this from scratch
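
A minimal sketch of the sinusoidal encoding, where even dimensions use sin(pos / 10000^(2i/d_model)) and odd dimensions use the matching cosine. The function name is illustrative and the code assumes an even `d_model`.

```python
import numpy as np

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding of shape (seq_len, d_model); d_model must be even."""
    positions = np.arange(seq_len)[:, None]        # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # values 2i, shape (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even indices
    pe[:, 1::2] = np.cos(angles)  # odd indices
    return pe
```

At position 0 every sine entry is 0 and every cosine entry is 1, which makes a quick sanity check.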

day 7, 1 week of @TensorTonic: leaky ReLU. interesting idea to not zero out negative values, but rather give them a small non-zero slope so they still receive a gradient. i guess intuitively this would allow for higher accuracy whilst still not allowing the negatives to have too big an impact (not ded)
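
A short sketch of leaky ReLU and its gradient. The default slope `alpha=0.01` is a commonly used value and an assumption here, as is treating the gradient at exactly 0 as `alpha`.

```python
import numpy as np

def leaky_relu(x, alpha=0.01):
    """Pass positive values through; scale negative values by alpha."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x, alpha * x)

def leaky_relu_grad(x, alpha=0.01):
    """Gradient is 1 for positive inputs and alpha otherwise, so it never vanishes."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, 1.0, alpha)
```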

A small win today. In just 3 weeks since launching TensorTonic, we’ve hit: > 3000 total users > 4500+ code submissions > 1000+ active users This is just the beginning. Excited to build an even better experience for everyone using it.

@TensorTonic is a really cool platform. Definitely has been useful for me as a college student, looking to break into AI Engineering.

day 4 of @TensorTonic (ANOTHA ONE): implementing an adam optimizer step. this was another easy implementation but honestly it took me a bit of time... i've actually computed the adam step manually in some of my dl courses so it was interesting writing this from scratch
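
A hedged sketch of a single Adam update with bias correction. The signature, argument order, and default hyperparameters (`lr=1e-3`, `beta1=0.9`, `beta2=0.999`, `eps=1e-8`) are assumptions based on commonly used defaults, not the problem's required interface.

```python
import numpy as np

def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update. t is the 1-based step count. Returns (param, m, v)."""
    m = beta1 * m + (1.0 - beta1) * grad       # first-moment (mean) estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2  # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)             # bias correction for the zero init
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v
```

On the very first step (t = 1, zero moments), the bias-corrected ratio is roughly grad / |grad|, so each parameter moves by about `lr` regardless of the gradient's magnitude.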

day 3 of @TensorTonic (a bit delayed bc i was feeling sick): implementing sigmoid in numpy. very easy problem overall and not much to even say about the solution, i think it's self-explanatory 🤣
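
One possible NumPy version is sketched below. It goes slightly beyond the textbook 1 / (1 + exp(-x)) by only exponentiating non-positive numbers, which is an added assumption to avoid overflow for large negative inputs.

```python
import numpy as np

def sigmoid(x):
    """Numerically stable sigmoid for scalars or arrays."""
    x = np.asarray(x, dtype=float)
    e = np.exp(-np.abs(x))  # always in (0, 1], so it cannot overflow
    # x >= 0: 1 / (1 + exp(-x));  x < 0: exp(x) / (1 + exp(x))
    return np.where(x >= 0, 1.0 / (1.0 + e), e / (1.0 + e))
```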

total 100 problems on @TensorTonic 🎉 new ones include questions on > Reinforcement Learning > 3D geometry > NLP text pre-processing

day 6: implement cosine similarity @TensorTonic. another chill one, definitely need to look into how to implement this in an actual model for better understanding though
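
A minimal sketch of cosine similarity between two vectors. Returning 0.0 when either vector has zero norm is an assumed convention, since the quantity is undefined in that case.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: dot(a, b) / (||a|| * ||b||)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0.0:
        return 0.0  # assumed convention for zero vectors
    return float(np.dot(a, b) / denom)
```

In a model this kind of score is typically used to compare embedding vectors, since it depends on direction and not on magnitude.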

I’ve been solving @TensorTonic problems. Monday: Started with ‘easy’ ones which weren’t easy at all. They pushed me in all the right ways. Friday: Turned everything I implemented into a light ML library of my own (github.com/MrShahzebKhoso… ) Feedback and suggestions are welcome.

🚀 Qwen Code v0.2.1 is here! We shipped 8 versions(v0.1.0->v0.2.1) in just 17 days with major improvements: What's New: 🌐 Free Web Search: Support for multiple providers. Qwen OAuth users get 2000 free searches per day! 🎯 Smarter Code Editing: New fuzzy matching pipeline…

day 5: implement Manhattan distance @TensorTonic. another chill one today, fairly straightforward implementation. afaik it's used for clustering algos, but i could be mistaken!

day 4: implementing SGD for a quadratic @TensorTonic. nothing too much to write home about today. implementation was fairly straightforward. learnt some cool new numpy functions i didn't know about :D will go through the derivation of SGD again soon

day 2 of @TensorTonic: implementing Cross Entropy Loss. i honestly had a very fun time with this problem today. the hardest part was figuring out how to avoid for loops by using the np.arange function to index the probabilities array
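
The loop-free indexing trick can be sketched as follows, assuming `probs` is an `(n, num_classes)` array of predicted probabilities and `labels` holds integer class indices. The function name and the `eps` clipping are illustrative assumptions.

```python
import numpy as np

def cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-probability of the true class, with no Python loops."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    n = probs.shape[0]
    # Pairing row i with column labels[i] picks each sample's true-class probability
    picked = probs[np.arange(n), labels]
    return float(-np.mean(np.log(np.clip(picked, eps, 1.0))))
```

`probs[np.arange(n), labels]` selects one entry per row, which replaces a loop over samples.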
