Nina

@_NeuralNerd

AI Master's Student @KSU_CCIS | Exploring language, vision & reasoning | Sharing what I learn along the way 📚

Hey, I’m Nina 👋 Learning my way through AI one paper, one project at a time. Sharing what I learn and the process along the way.

🛠️ Small Language Models are the Future of Agentic AI. But how do you make LLMs 10× smaller but just as smart? ➡️ Knowledge Distillation. It’s quite a buzzword. But let’s break it down, the way I’d explain it to myself. For instance: Google Gemma was trained by Gemini.…

LLM token prices are collapsing fast, and the collapse is steepest at the top end. The least "intelligent" models get about 9× cheaper per year, mid-tier models drop about 40× per year, and the most capable models fall about 900× per year. Was same with "Moore’s Law, the best…

Memory in AI agents seems like a logical next step after RAG evolved to agentic RAG.

- RAG: one-shot read-only
- Agentic RAG: read-only via tool calls
- Memory in AI agents: read-and-write via tool calls

Obviously, it's a little more complex than this. I make my case here:…
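To make the read-only versus read-and-write distinction concrete, here is a minimal sketch. Everything in it is an assumption for illustration: `ToyStore`, `rag_context`, `agentic_rag_tools`, and `memory_tools` are hypothetical names, and the keyword-overlap search stands in for whatever retriever a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class ToyStore:
    """Hypothetical in-memory store with naive keyword-overlap search."""
    docs: list[str] = field(default_factory=list)

    def search(self, query: str, k: int = 2) -> list[str]:
        # Rank documents by how many words they share with the query.
        terms = set(query.lower().split())
        scored = [(len(terms & set(d.lower().split())), d) for d in self.docs]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [d for score, d in ranked if score > 0][:k]

    def write(self, text: str) -> None:
        # Persist a new entry so later searches can find it.
        self.docs.append(text)


def rag_context(store: ToyStore, query: str) -> list[str]:
    """RAG: one retrieval up front; the store is never modified."""
    return store.search(query)


def agentic_rag_tools(store: ToyStore) -> dict:
    """Agentic RAG: the agent can call a read-only search tool repeatedly."""
    return {"search": store.search}


def memory_tools(store: ToyStore) -> dict:
    """Agent memory: the agent gets a read tool and a write tool."""
    return {"search": store.search, "write": store.write}


if __name__ == "__main__":
    store = ToyStore(["BM25 is a lexical ranking function"])
    tools = memory_tools(store)
    tools["write"]("user prefers short answers")
    print(tools["search"]("what does the user prefer"))
```

The only structural difference between the last two helpers is the extra `write` tool, which is the point the post makes: memory is retrieval plus the ability to change what gets retrieved next time.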

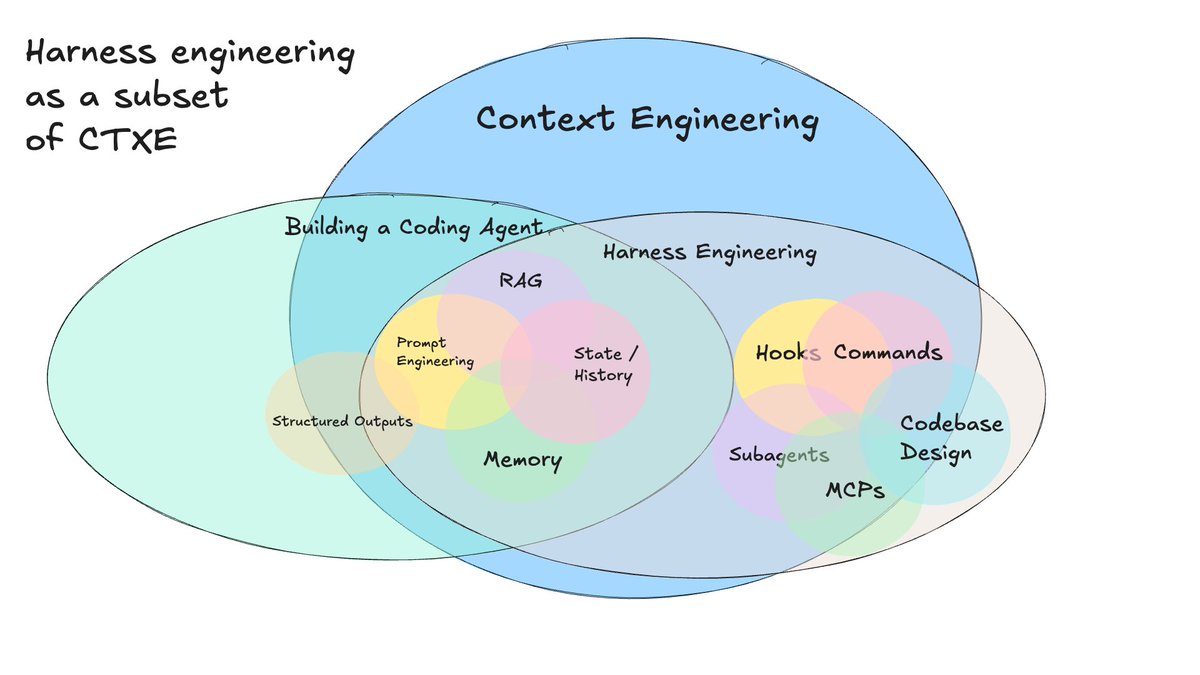

there's a new concept I'm seeing emerging in AI Agents (especially coding agents), which I'll call "harness engineering" - applying context engineering principles to how you use an existing agent Context engineering -> how context (long or short, agentic or not) is passed to an…

she actually summarized everything you must know from the “AI Engineering” book in 76 minutes. if you don’t got the time to read the book, you need to watch this. foundational models, evaluation, prompt engineering, RAG, memory, fine-tuning and many more. great starting point.

🚨 RIP “Prompt Engineering.” The GAIR team just dropped Context Engineering 2.0 — and it completely reframes how we think about human–AI interaction. Forget prompts. Forget “few-shot.” Context is the real interface. Here’s the core idea: “A person is the sum of their…

RAG vs. CAG, clearly explained! RAG is great, but it has a major problem: Every query hits the vector database. Even for static information that hasn't changed in months. This is expensive, slow, and unnecessary. Cache-Augmented Generation (CAG) addresses this issue by…

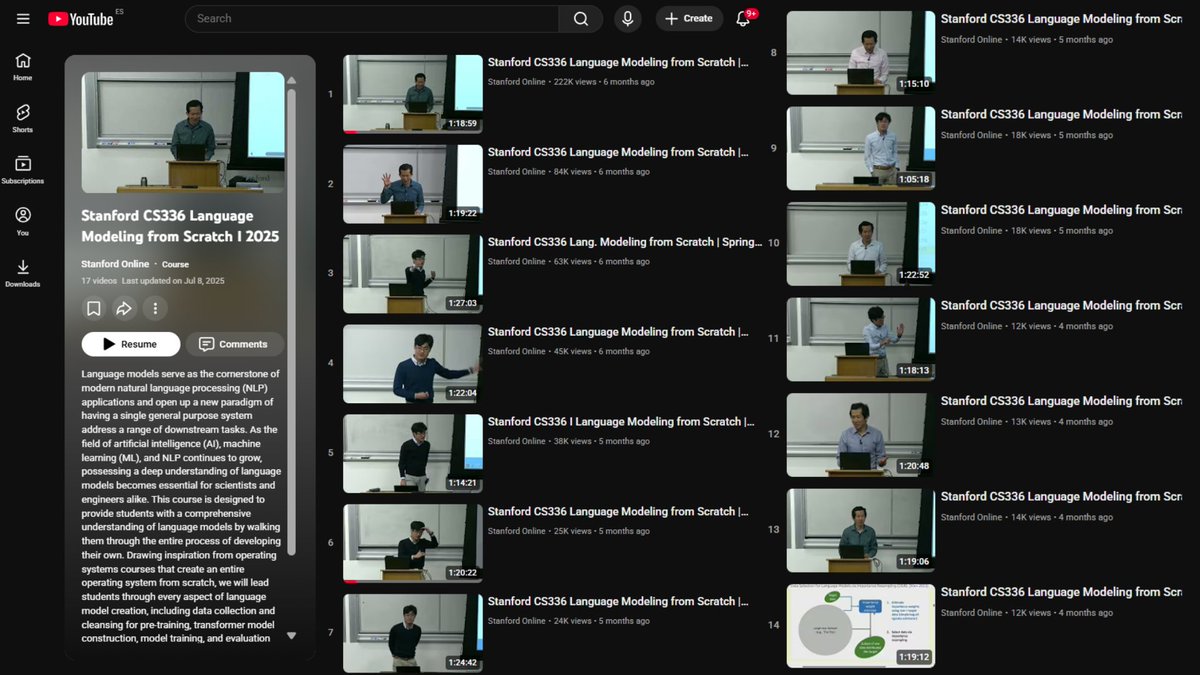

Stanford just did something wild. They put their entire graduate-level AI course on YouTube. No paywall, no signup. It’s the exact curriculum Stanford charges $7,570 for ❱❱❱❱ watch free now

The 'Workflow Memory' idea is crucial for production Agents. It reinforces that optimizing the retrieval component is the battleground! The IC-RALM paper I studied showed huge gains 12%➡️31% QA using off-the-shelf BM25, proving retrieval over model modification is the real shift.

your AI agent only forgets because you let it. there is a simple technique that everybody needs, but few actually use, and it can improve the agent by 51.1%. here's how you can use workflow memory: you ask your agent to train a simple ML model on your custom CSV data. — it…
