Syeda Nahida Akter

@__SyedaAkter

PhD student at @LTIatCMU @SCSatCMU and research intern @NVIDIA. Working on improving Reasoning of Generative Models! (@reasyaay.bsky.social)

You might like

Most LLMs learn to think only after pretraining—via SFT or RL. But what if they could learn to think during it? 🤔 Introducing RLP: Reinforcement Learning Pre-training—a verifier-free objective that teaches models to “think before predicting.” 🔥 Result: Massive reasoning…

If you're a PhD student interested in doing an internship with me and @shrimai_ on RL–based pre-training/LLM reasoning, send an email ([email protected]) with: 1⃣: Short intro about you 2⃣: Link to your relevant paper I will read all emails but can't respond to all.

Lot of insights in @YejinChoinka's talk on RL training. Rip for next token prediction training (NTP) and welcome to Reinforcement Learning Pretraining (RLP). #COLM2025 No place to even stand in the room.

By teaching models to reason during foundational training, RLP aims to reduce logical errors and boost reliability for complex reasoning workflows. venturebeat.com/ai/nvidia-rese…

venturebeat.com

Nvidia researchers boost LLMs reasoning skills by getting them to 'think' during pre-training

By teaching models to reason during foundational training, the verifier-free method aims to reduce logical errors and boost reliability for complex enterprise workflows.

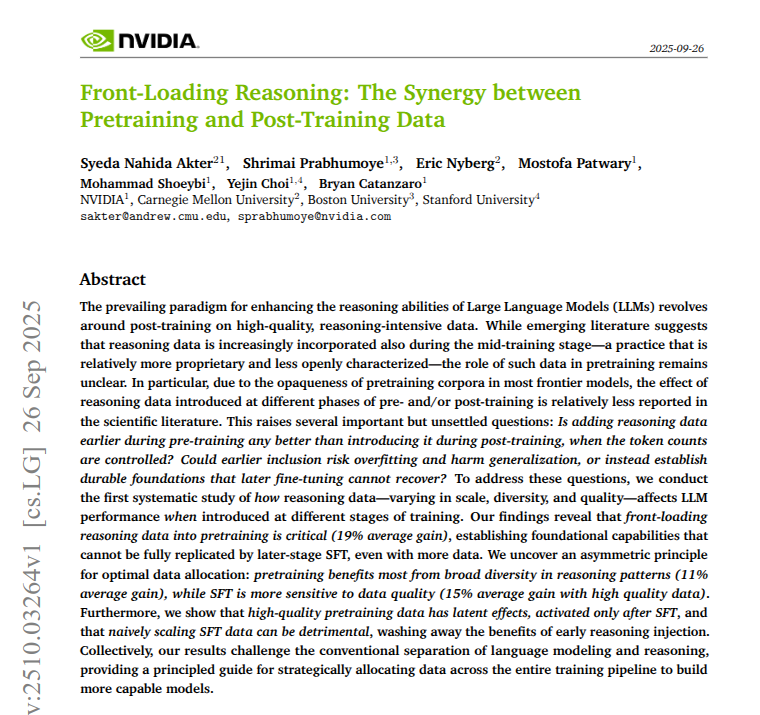

Thank you @rohanpaul_ai for highlighting our work!💫 Front-Loading Reasoning shows that inclusion of reasoning data in pretraining is beneficial, does not lead to overfitting after SFT, & has latent effect unlocked by SFT! Paper: arxiv.org/abs/2510.03264 Blog:…

New @nvidia paper shows that teaching reasoning early during pretraining builds abilities that later fine-tuning cannot recover. Doing this early gives a 19% average boost on tough tasks after all post-training. Pretraining is the long first stage where the model learns to…

Thank you @rohanpaul_ai for sharing our work! In "Front-Loading Reasoning", we show that injecting reasoning data into pretraining builds models that reach the frontier. On average, +22% (pretraining) → +91% (SFT) → +49% (RL) relative gains. 🚀 🔗Paper:…

New @nvidia paper shows that teaching reasoning early during pretraining builds abilities that later fine-tuning cannot recover. Doing this early gives a 19% average boost on tough tasks after all post-training. Pretraining is the long first stage where the model learns to…

Nvidia presents RLP Reinforcement as a Pretraining Objective

When should LLMs learn to reason—early in pretraining or late in fine-tuning?🤔 Front-Loading Reasoning, shows that injecting reasoning data early creates durable, compounding gains that post-training alone cannot recover Paper:tinyurl.com/3tzkemtp Blog:research.nvidia.com/labs/adlr/Syne…

New Nvidia paper introduces Reinforcement Learning Pretraining (RLP), a pretraining objective that rewards useful thinking before each next token prediction. On a 12B hybrid model, RLP lifted overall accuracy by 35% using 0.125% of the data. The big deal here is that it moves…

💫 Introducing RLP: Reinforcement Learning Pretraining—information-driven, verifier-free objective that teaches models to think before they predict 🔥+19% vs BASE on Qwen3-1.7B 🚀+35% vs BASE on Nemotron-Nano-12B 📄Paper: github.com/NVlabs/RLP/blo… 📝Blog: research.nvidia.com/labs/adlr/RLP/

Are you ready for web-scale pre-training with RL ? 🚀 🔥 New paper: RLP : Reinforcement Learning Pre‑training We flip the usual recipe for reasoning LLMs: instead of saving RL for post‑training, we bring exploration into pretraining. Core idea: treat chain‑of‑thought as an…

United States Trends

- 1. Jokic 24.1K posts

- 2. Lakers 54.2K posts

- 3. Epstein 1.62M posts

- 4. #AEWDynamite 49.1K posts

- 5. Nemec 3,066 posts

- 6. Clippers 13.8K posts

- 7. Shai 16.3K posts

- 8. Thunder 42.6K posts

- 9. #NJDevils 3,055 posts

- 10. #Blackhawks 1,593 posts

- 11. #River 4,658 posts

- 12. Markstrom 1,211 posts

- 13. Ty Lue N/A

- 14. Sam Lafferty N/A

- 15. Nemo 8,675 posts

- 16. #Survivor49 4,019 posts

- 17. Kyle O'Reilly 2,231 posts

- 18. Steph 29.3K posts

- 19. Rory 7,890 posts

- 20. Spencer Knight N/A

You might like

-

Hao Zhu 朱昊

Hao Zhu 朱昊

@_Hao_Zhu -

Shruti Rijhwani

Shruti Rijhwani

@shrutirij -

Tanmay Parekh

Tanmay Parekh

@tparekh97 -

Abhilasha Ravichander

Abhilasha Ravichander

@lasha_nlp -

Snigdha Chaturvedi

Snigdha Chaturvedi

@snigdhac25 -

Danish Pruthi

Danish Pruthi

@danish037 -

Paul Liang

Paul Liang

@pliang279 -

Siddhant Arora

Siddhant Arora

@Sid_Arora_18 -

Xiaochuang Han

Xiaochuang Han

@XiaochuangHan -

Zhiqing Sun

Zhiqing Sun

@EdwardSun0909 -

Siddharth Dalmia

Siddharth Dalmia

@siddalmia05 -

Han Guo

Han Guo

@HanGuo97 -

CambridgeNLP

CambridgeNLP

@cambridgenlp -

Mian Zhang

Mian Zhang

@_Guuuuuuuu_ -

Sanket Vaibhav Mehta

Sanket Vaibhav Mehta

@sanketvmehta

Something went wrong.

Something went wrong.