Fabricated Knowledge

@_fabknowledge_

Simplifying the world of semiconductor investing in the age of AI. Part of the @semianalysis_ gang.

On GPT-OSS 120B documentation summarization scenario, MI355X vLLM is seeing competitive perf per TCO compared to B200 vLLM for below 210 tok/s/user interactivity. For above 210 tok/s/user, we are seeing B200 vLLM & B200 trtllm having an advantage on the current software. There is…

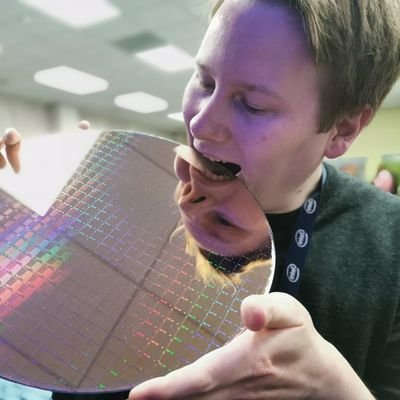

what GB200 NVL72 does to a mfer

All results and such can be accessed at inferencemax.ai, and the code and everything is open sourced here: github.com/InferenceMAX/I… Methodology and explanation of results are here: newsletter.semianalysis.com/p/inferencemax…

The fed should buy Hyperscaler LT maturities for easing LMAO

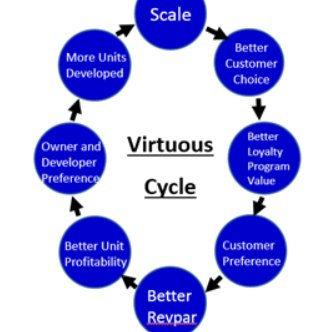

the results are interesting to review: they show a Pareto frontier between throughput and e2e latency, or between throughput and interactivity (tok/sec per user). Moving to the Pareto frontier means serving more users or delivering faster responses with the same infrastructure.
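To make "Pareto frontier" concrete, here is a minimal sketch. It assumes each benchmark configuration is summarized as an (interactivity in tok/s/user, throughput in tok/s/GPU) pair, with higher being better on both axes. The function name `pareto_frontier` and the sample points are hypothetical. They are illustrative only and are not InferenceMAX data or code.

```python
from typing import List, Tuple

# Hypothetical representation: (interactivity in tok/s/user, throughput in tok/s/GPU).
# Higher is better on both axes.
Point = Tuple[float, float]


def pareto_frontier(points: List[Point]) -> List[Point]:
    """Return the points not dominated by any other point (maximizing both axes)."""
    # Sort by interactivity descending, breaking ties by throughput descending.
    ordered = sorted(points, key=lambda p: (-p[0], -p[1]))
    frontier: List[Point] = []
    best_throughput = float("-inf")
    for interactivity, throughput in ordered:
        # A point survives only if it beats the best throughput among all
        # points with equal or higher interactivity already seen.
        if throughput > best_throughput:
            frontier.append((interactivity, throughput))
            best_throughput = throughput
    # Return in ascending interactivity order, convenient for plotting.
    return sorted(frontier)


# Made-up configurations for illustration (not benchmark results).
configs = [(20.0, 900.0), (50.0, 700.0), (40.0, 600.0), (100.0, 300.0), (80.0, 250.0)]
print(pareto_frontier(configs))  # [(20.0, 900.0), (50.0, 700.0), (100.0, 300.0)]
```

In this toy data, (40, 600) is dropped because (50, 700) beats it on both axes. Likewise, (80, 250) is dominated by (100, 300). Every point left on the frontier trades some throughput for more tok/s/user, or the reverse.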

the industry needs an open-source, automated inference benchmark that moves at the same speed as the AI software ecosystem: inferencemax.ai

There are paper specs and real specs. Today's the first day we see real-world performance at scale! Excited to see this evolve over time!

InferenceMAX™: Open Source Inference Benchmarking. Support from OpenAI, @LisaSu, @AnushElangovan, @ia_buck, @tri_dao, and many more. NVIDIA GB200 NVL72, AMD MI355X. Throughput Token per GPU, Latency Tok/s/user, Perf per Dollar, Cost per Million Tokens, Tokens per Provisioned…

One random viz I wish I could see: when I'm on the subway, I wish I could see the explosion of RF as a train pulls into a new station. I can't imagine what it looks like; it's probably pure chaos, and I legit wish I could see RF just to witness it.

📣 NVIDIA Blackwell sets the standard for AI inference on SemiAnalysis InferenceMAX. Our most recent results on the independent benchmarks show NVIDIA's Blackwell Platform leads AI factory ROI. See how NVIDIA Blackwell GB200 NVL72 can yield $75 million in token revenue over…

I really do not think people appreciate what this is: there has never been a source of truth for GPU throughput. Specs on paper have never meant anything. This is IT!!!

Today we are launching InferenceMAX! We have support from Nvidia, AMD, OpenAI, Microsoft, PyTorch, SGLang, vLLM, Oracle, CoreWeave, TogetherAI, Nebius, Crusoe, HPE, SuperMicro, and Dell. It runs every day on the latest software (vLLM, SGLang, etc.) across hundreds of GPUs, $10Ms of…

Going to be dropping something huge in 24 hours. I think it'll reshape how everyone thinks about chips, inference, and infrastructure. It's directly supported by NVIDIA, AMD, Microsoft, OpenAI, Together AI, CoreWeave, Nebius, PyTorch Foundation, Supermicro, Crusoe, HPE, Tensorwave,…

SemiAnalysis is back on Substack! open.substack.com/pub/semianalys… And we are coming out with the biggest piece we've done in the AI space: welcome to InferenceMax. Want to know how AMD does versus NVDA? Here's the answer.

We estimate that Claude Sonnet 4.5 has a 50%-time-horizon of around 1 hr 53 min (95% confidence interval of 50 to 235 minutes) on our agentic multi-step software engineering tasks. This estimate is lower than the current highest time-horizon point estimate of around 2 hr 15 min.

China’s State Council on October 9 approved Order No. 61 of 2025, announcing export controls on certain overseas rare-earth items. This marks the fourth round of rare-earth export restriction efforts; the previous round was on April 8. (1/8)🧵

I don't know what labs are doing to these poor LLMs during RL but they are mortally terrified of exceptions, in any infinitesimally likely case. Exceptions are a normal part of life and healthy dev process. Sign my LLM welfare petition for improved rewards in cases of exceptions.

I'm hoping this goes off smoothly today without a hitch, just like our transition back to Substack did. That being said, SOOOOOON™

There's another way to think about it. If one product is on 2nm and the other is on 3nm, yet one has better performance, they call the difference "margin".

🚨Lisa Su dropped a bombshell Yet nobody has caught it $AMD's MI450 will use 2nm technology, while $NVDA's Rubin will use 3nm A massive power and efficiency advantage This is breaking news, and I don’t understand why nobody is reporting it
