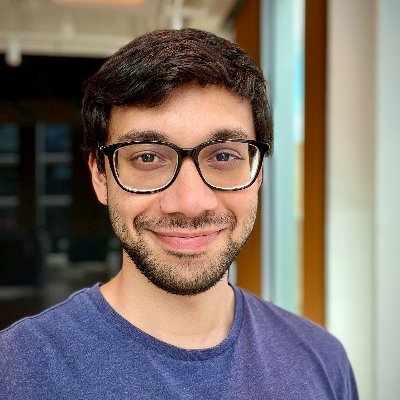

Adam Pearce

@adamrpearce

@anthropicai, previously: google brain, @nytgraphics and @bbgvisualdata

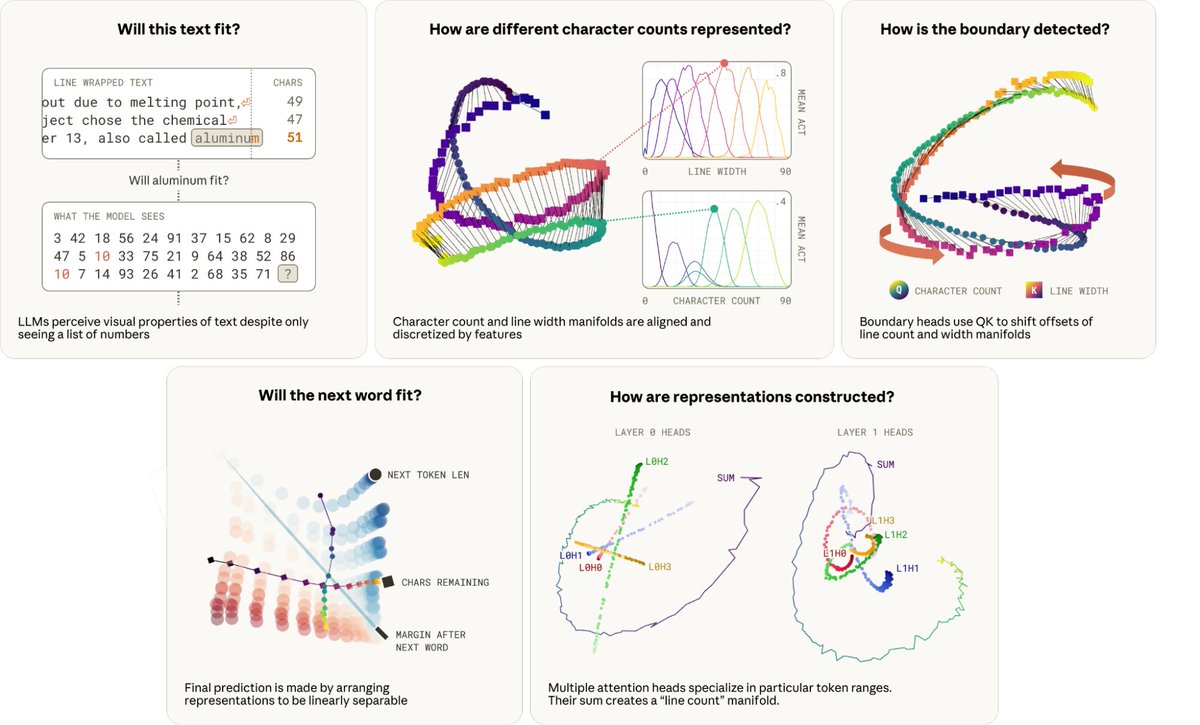

New paper! We reverse engineered the mechanisms underlying Claude Haiku’s ability to perform a simple “perceptual” task. We discover beautiful feature families and manifolds, clean geometric transformations, and distributed attention algorithms!

Prior to the release of Claude Sonnet 4.5, we conducted a white-box audit of the model, applying interpretability techniques to “read the model’s mind” in order to validate its reliability and alignment. This was the first such audit on a frontier LLM, to our knowledge. (1/15)

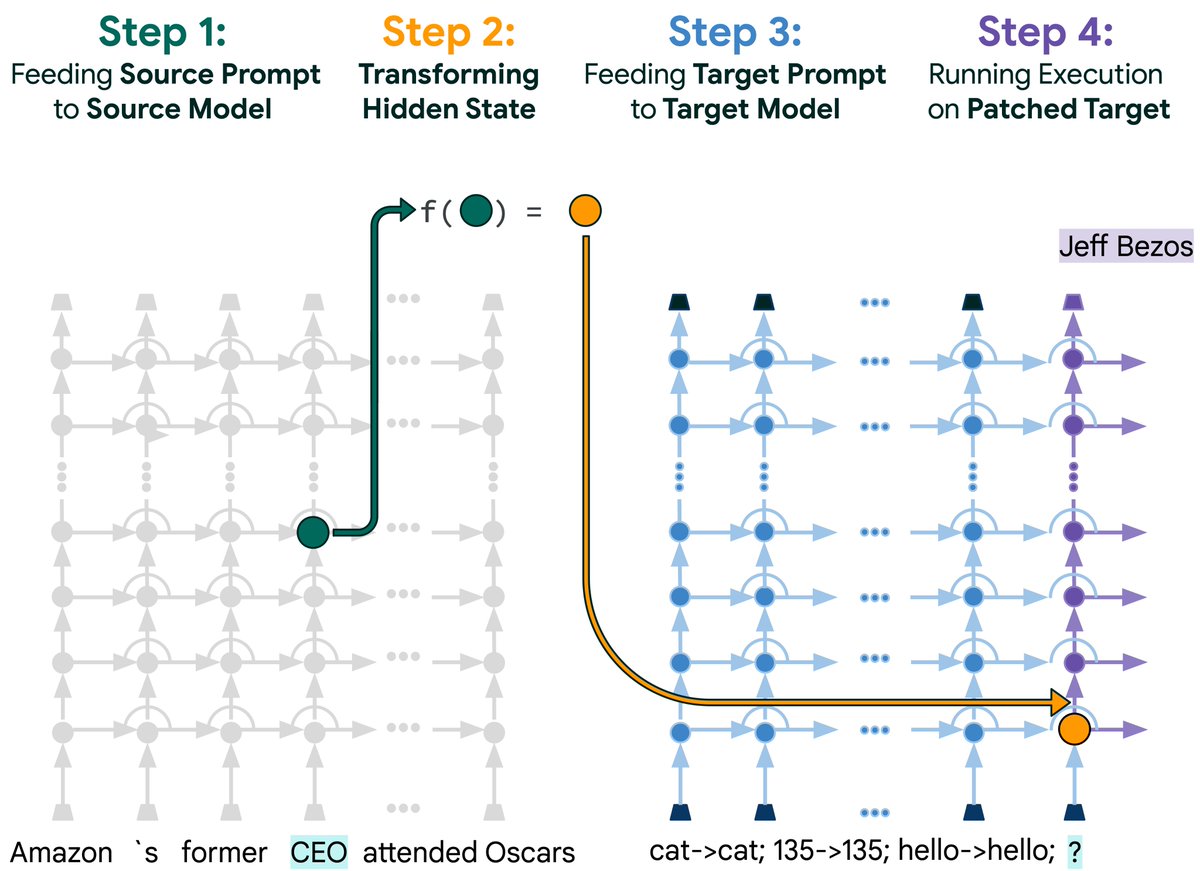

Earlier this year, we showed a method to interpret the intermediate steps a model takes to produce an answer. But we were missing a key bit of information: explaining why the model attends to specific concepts. Today, we do just that 🧵

🧵Can we “ask” an LLM to “translate” its own hidden representations into natural language? We propose 🩺Patchscopes, a new framework for decoding specific information from a representation by “patching” it into a separate inference pass, independently of its original context. 1/9
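The core patching step can be shown with a toy model. This is a minimal Python sketch under stated assumptions, not the Patchscopes implementation. The two-dimensional embeddings, the token names, and the `encode`/`decode`/`run` helpers are all hypothetical. In a real LLM, the patch would overwrite a transformer layer's hidden state at a chosen token position.

```python
from typing import Dict, List, Optional, Tuple

Vector = List[float]

# Hypothetical toy embeddings: each token maps to a 2D "hidden state".
EMBED: Dict[str, Vector] = {
    "alpha": [1.0, 0.0],
    "beta": [0.0, 1.0],
    "placeholder": [0.0, 0.0],
    "query": [0.5, 0.5],
}


def encode(tokens: List[str]) -> List[Vector]:
    """First stage of the toy model: one hidden vector per token position."""
    return [list(EMBED[t]) for t in tokens]


def decode(hidden: List[Vector]) -> Vector:
    """Second stage: a toy readout that sums hidden vectors across positions."""
    return [sum(column) for column in zip(*hidden)]


def run(tokens: List[str],
        patch: Optional[Tuple[int, Vector]] = None) -> Tuple[Vector, List[Vector]]:
    """Run the toy model, optionally overwriting one position's hidden state.

    `patch` is (position, vector). The vector is copied from a different run,
    so the second stage processes it outside its original context.
    """
    hidden = encode(tokens)
    if patch is not None:
        position, vector = patch
        hidden[position] = list(vector)
    return decode(hidden), hidden


# Source pass: capture the hidden state produced for "beta".
_, source_hidden = run(["beta"])
# Target pass: a different prompt, with the captured vector patched into slot 0.
patched_out, _ = run(["placeholder", "query"], patch=(0, source_hidden[0]))
clean_out, _ = run(["placeholder", "query"])
print(patched_out, clean_out)  # [0.5, 1.5] [0.5, 0.5]
```

The gap between `patched_out` and `clean_out` comes entirely from the transplanted vector. That difference is the signal a patching-based readout inspects.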

voronoi diagrams showing the regions that plot's pointerX and pointerY select blocks.roadtolarissa.com/1wheel/ecd4050…
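The regions in those diagrams come from nearest-point selection. The Python sketch below assumes the rule is a weighted nearest-neighbor search. Equal weights on horizontal and vertical distance give ordinary Voronoi cells. A tiny vertical (or horizontal) weight approximates an x-only (or y-only) pointer. The weights, the `nearest_index` helper, and the sample points are illustrative assumptions, not Plot's source.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def nearest_index(points: List[Point], px: float, py: float,
                  kx: float = 1.0, ky: float = 1.0) -> Optional[int]:
    """Index of the point with the smallest weighted squared distance to (px, py).

    kx and ky scale horizontal and vertical squared distance (assumed weights).
    Returns None for an empty list.
    """
    best, best_d = None, math.inf
    for i, (x, y) in enumerate(points):
        d = kx * (x - px) ** 2 + ky * (y - py) ** 2
        if d < best_d:  # strict < keeps the earlier point on ties
            best, best_d = i, d
    return best


def pick_xy(points: List[Point], px: float, py: float) -> Optional[int]:
    return nearest_index(points, px, py)                   # 2D Voronoi cells


def pick_x(points: List[Point], px: float, py: float) -> Optional[int]:
    return nearest_index(points, px, py, kx=1.0, ky=0.01)  # mostly horizontal distance


def pick_y(points: List[Point], px: float, py: float) -> Optional[int]:
    return nearest_index(points, px, py, kx=0.01, ky=1.0)  # mostly vertical distance


points = [(0, 0), (10, 10), (5, 20)]
print(pick_xy(points, 7, 1), pick_x(points, 7, 1), pick_y(points, 7, 1))  # 0 2 0
```

With the pointer at (7, 1), the 2D rule and the y-weighted rule both pick the point at the origin, while the x-weighted rule picks (5, 20) because its x value is closest to 7.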

Confidently Incorrect Models to Humble Ensembles by @Nithum, @balajiln and Jasper Snoek pair.withgoogle.com/explorables/un…
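One common way an ensemble tempers an individual model's overconfidence is by averaging the members' predicted class probabilities. The Python sketch below shows that effect with made-up probabilities for three hypothetical members. It illustrates plain probability averaging and is not code from the explorable.

```python
from typing import List

Probs = List[float]


def ensemble_average(member_probs: List[Probs]) -> Probs:
    """Average each class's probability across ensemble members."""
    n = len(member_probs)
    return [sum(column) / n for column in zip(*member_probs)]


def confidence(probs: Probs) -> float:
    """Confidence = probability assigned to the predicted (argmax) class."""
    return max(probs)


# Hypothetical two-class predictions from three members that disagree.
members = [
    [0.95, 0.05],  # very confident in class 0
    [0.10, 0.90],  # very confident in class 1
    [0.60, 0.40],  # leans toward class 0
]
averaged = ensemble_average(members)
print([round(p, 2) for p in averaged], round(confidence(averaged), 2))  # [0.55, 0.45] 0.55
```

When members agree, the average stays confident. Disagreement is what pulls the ensemble's prediction toward 0.5.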

Most machine learning models are trained by collecting vast amounts of data on a central server. @nicki_mitch and I looked at how federated learning makes it possible to train models without any user's raw data leaving their device. pair.withgoogle.com/explorables/fe…
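One widely used federated learning scheme is federated averaging (FedAvg). Each device trains on its own data and sends back only updated model weights, which the server averages, weighted by how many examples each device holds. The Python sketch below fits a one-parameter model y ≈ w·x on hypothetical client data. The learning rate, epoch and round counts, and datasets are illustrative assumptions, and the explorable may describe a different variant.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Runs on the device: gradient descent on mean squared error for y ≈ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(client_data: List[Dataset], rounds: int = 50) -> float:
    """Runs on the server: it only ever sees weights, never (x, y) pairs."""
    w = 0.0
    sizes = [len(d) for d in client_data]
    for _ in range(rounds):
        # Each client starts from the current global weight and trains locally.
        client_weights = [local_update(w, d) for d in client_data]
        # Weighted average of the returned weights, by number of local examples.
        w = sum(cw * n for cw, n in zip(client_weights, sizes)) / sum(sizes)
    return w


# Hypothetical per-device data, all drawn from y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
print(round(federated_averaging(clients), 3))  # 3.0
```

Only the scalar `w` crosses the boundary between server and devices. The `(x, y)` pairs inside `clients` are read solely by `local_update`, which stands in for code running on each device.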

it's not spider-man's fault: why best picture winners aren't hits anymore roadtolarissa.com/box-office-hit…
