Adit Jain

@aditjain1980

PhD Candidate @ Cornell ECE. Interested in Machine Learning and Reinforcement Learning.

1/ Chain-of-thought reasoning can be significantly improved using RLVR, but can we improve the generation process for reasoning tokens during training for better exploration, efficiency, and performance? @brendanh0gan and I explore this question in our recent work 🧵 (tldr: yes!)

Sign up as a reviewer or AE if you can! I have been a reviewer for TMLR for almost 2 years now, and it has been a very positive learning experience.

As Transactions on Machine Learning Research (TMLR) grows in number of submissions, we are looking for more reviewers and action editors. Please sign up! Only one paper to review at a time and <= 6 per year; reviewers report greater satisfaction than when reviewing for conferences!

Very cool work!

We’re excited to introduce ShinkaEvolve: An open-source framework that evolves programs for scientific discovery with unprecedented sample-efficiency. Blog: sakana.ai/shinka-evolve/ Code: github.com/SakanaAI/Shink… Like AlphaEvolve and its variants, our framework leverages LLMs to…

My acceptance speech at the Turing award ceremony: Good evening ladies and gentlemen. The main idea of reinforcement learning is that a machine might discover what to do on its own, without being told, from its own experience, by trial and error. As far as I know, the first…

Gemma-3 270M has interesting collapse behavior: it uses words from different Indian languages (Hindi, Tamil, Marathi, Bangla) even though the task is English-based. Perhaps a multilingual pretraining quirk?

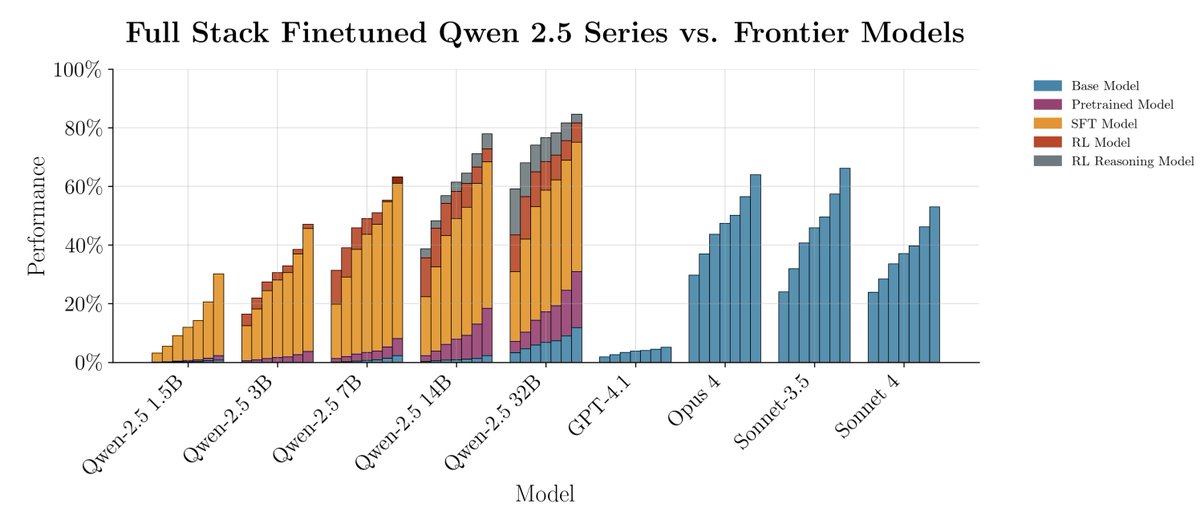

Introducing qqWen: our fully open-sourced project (code + weights + data + detailed technical report) for full-stack finetuning (pretrain + SFT + RL) of a series of models (1.5b, 3b, 7b, 14b & 32b) for a niche financial programming language called Q. All details below!
