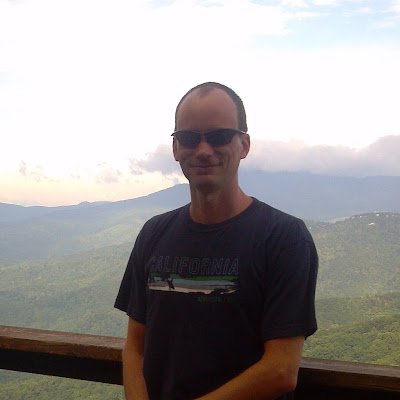

Michael Hodel

@bayesilicon

writer (of programs)

Just made a repository with golfed solutions to ARC-AGI-1-train for the NeurIPS 2025 Google Code Golf Championship as well as some golfing tricks that I learned: github.com/michaelhodel/a…

There's been significant recent progress in the NanoGPT speedrun. Highly recommend this post by @classiclarryd lesswrong.com/posts/j3gp8teb…

📝 New research: AlphaWrite applies evolutionary algorithms to creative writing. Inspired by AlphaEvolve, we use iterative generation + Elo ranking to systematically improve story quality through inference-time compute scaling. Results: 72% preference over baseline generation…

Excited to advance our lead and SoTA score on ARC-AGI-2 (@arcprize) by 3 points to 15.28. @DriesSmit1 @MohamedOsmanML @bayesilicon @GregKamradt @tufalabs kaggle.com/competitions/a…

🚨 NEW PAPER DROP! Wouldn't it be nice if LLMs could spot and correct their own mistakes? And what if we could do so directly from pre-training, without any SFT or RL? We present a new class of discrete diffusion models, called GIDD, that are able to do just that: 🧵1/12

Happy to announce we outperformed @OpenAI o1 with a 7B model :) We released two self-improvement methods for verifiable domains in our preliminary paper -->

Today, MindsAI (@MindsAI_Jack @MohamedOsmanML @bayesilicon) becomes part of @tufalabs First assignment: complete the @arcprize challenge

Great presentation on some unique TTT ideas and experiments by Jonas Hübotter @tufalabs. youtu.be/vei7uf9wOxI?si…

Learning at test time in LLMs

Consulting my heart... Ok, looks like you haven't. But whenever you have a SotA (or close) solution built on top of the OpenAI API we're more than happy to verify it and add it to the public ARC Prize leaderboard. Anything using less than $10k worth of API calls is eligible.

in your heart do you believe we’ve solved that one or no?

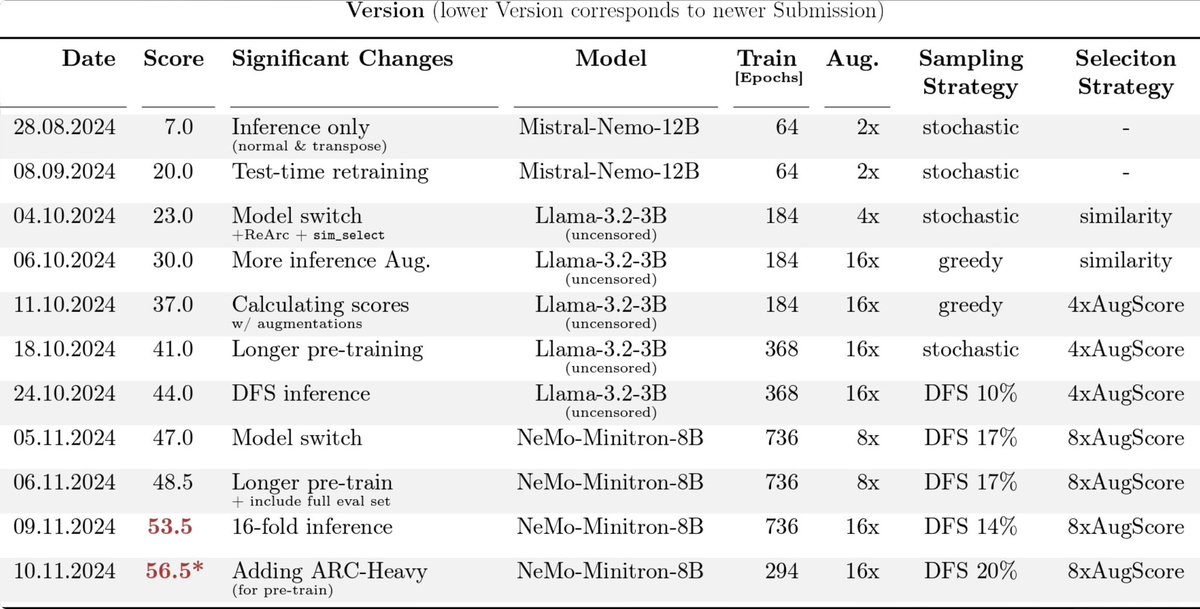

ARC prize 2024 🥈place paper by the ARChitects who scored 53.5 (56.5): github.com/da-fr/arc-priz… - Transformers/LLMs are for ARC what ConvNets were for Imagenet - strong base model, TTT, specialized datasets (e.g. @bayesilicon’s re-arc) + novel: DFS sampling with LLM critique

very excited to win guys, it's been such a blast! let's goo

@arcprize 2024 with more than 16k entrants just ended after 5 months, and we rank #1 (@bayesilicon @MohamedOsmanML)! We just scored 58% with a submission that finished after the deadline! We're just getting started. We hope to have an announcement about @tufalabs soon.…

Have been working on my 2nd synthetic ARC riddle generator (agent: ideation -> prog generation). Got >1k diverse generator+solver pairs as PoC so far. Some nice examples:

I finally got to meet @fchollet in person recently to interview him about @arcprize, intelligence vs memorization, human cognitive development, learning abstractions, limits of pattern recognition and consciousness development. These are the best bits. Full show released tomorrow

We got up to 55.5% on the @arcprize leaderboard today! Progress towards the 60.2% milestone of median human performance reported by arxiv.org/pdf/2409.01374 is not slowing down. @MindsAI_Jack @bayesilicon

New ARC-AGI paper @arcprize w/ fantastic collaborators @xu3kev @HuLillian39250 @ZennaTavares @evanthebouncy @BasisOrg For few-shot learning: better to construct a symbolic hypothesis/program, or have a neural net do it all, ala in-context learning? cs.cornell.edu/~ellisk/docume…

New SoTA on ARC-AGI. Nothing like the synergy of an awesome team (@bayesilicon @MohamedOsmanML). From 53 to 54.5 today. Onward and upward! 🚀 @arcprize @mikeknoop @fchollet @bryanlanders @GregKamradt @MLStreetTalk First. #kaggle - kaggle.com/competitions/a…

yes

I told you Michael Hodel was cooking something hot, but this is pure 🔥. Great work Michael. 🏆 Shall we go for 60?

just achieved a score of 53% on the @arcprize - what a feeling! @MindsAI_Jack @MohamedOsmanML lets gooo!

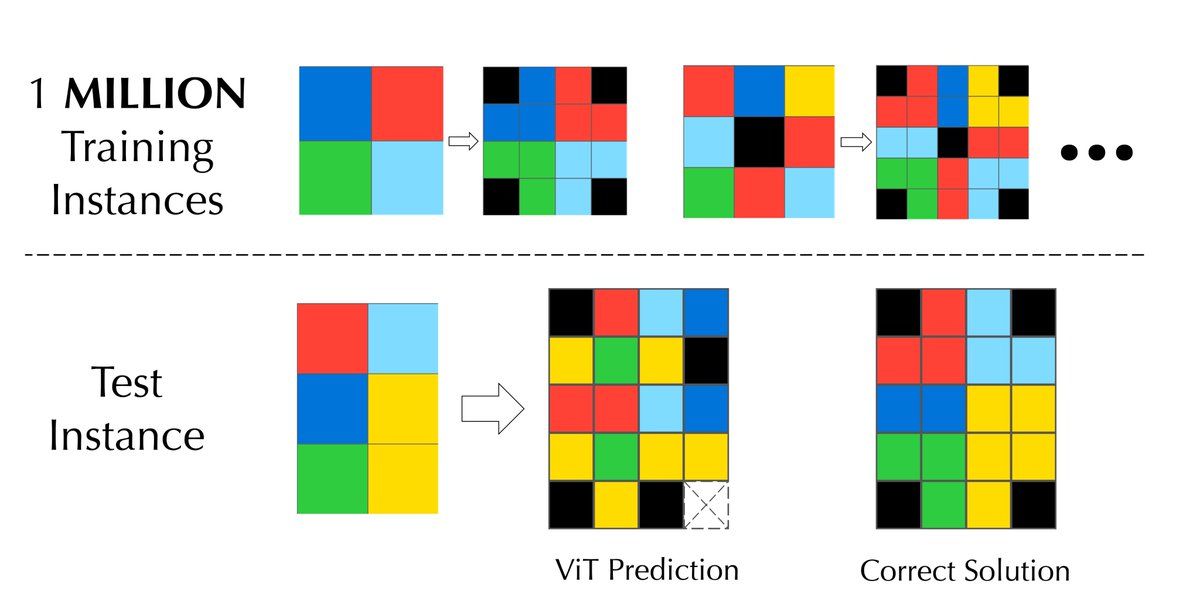

We trained a Vision Transformer to solve ONE single task from @fchollet and @mikeknoop’s @arcprize. Unexpectedly, it failed to produce the test output, even when using 1 MILLION examples! Why is this the case? 🤔
