Daniel San

@dani_avila7

Building AI Tools with LLMs @aitmpl_com prev @codegptAI | Powered by TypeScript & Pumpkin Spice Lattes ☕️


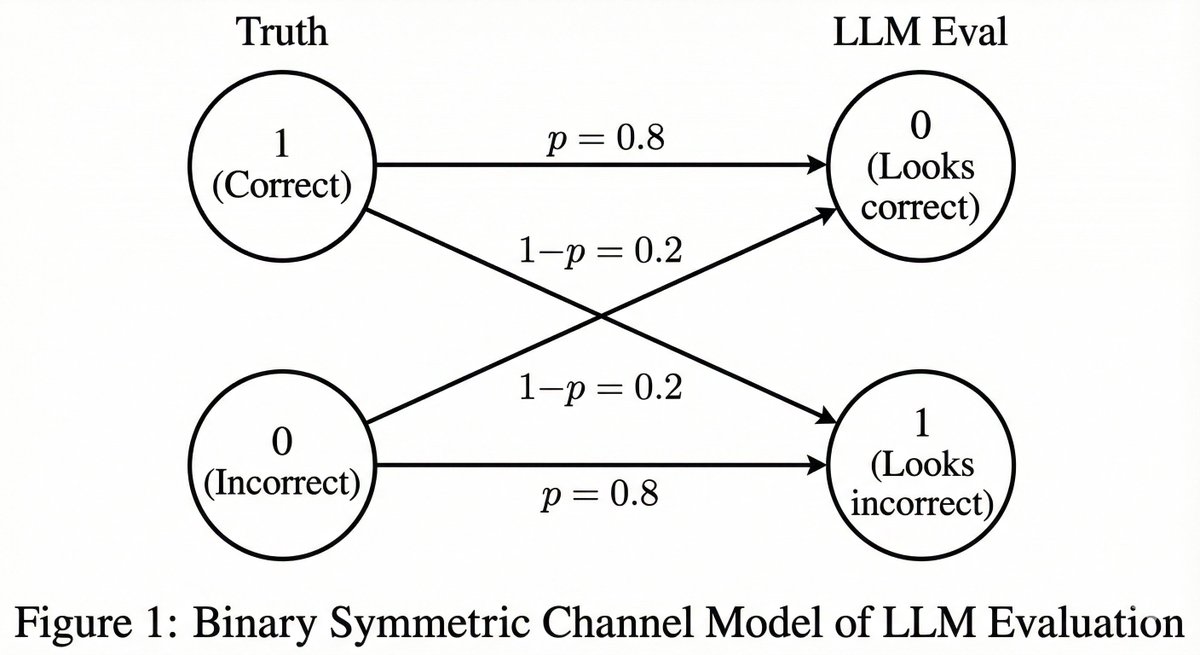

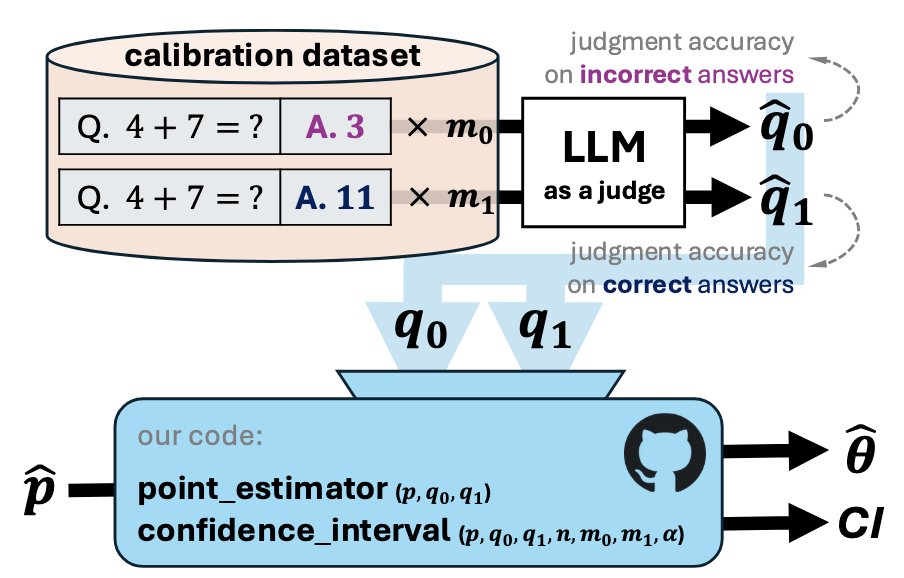

Reviewing this paper made me realize how crucial the calibration dataset is for LLM-as-a-Judge. You can’t trust your eval scores without measuring where your judge fails. That requires ground truth labels… which means human verification 🤷‍♂️ Link 👇 arxiv.org/abs/2511.21140

LLM as a judge has become a dominant way to evaluate how good a model is at solving a task, since it works without a test set and handles cases where answers are not unique. But despite how widely this is used, almost all reported results are highly biased. Excited to share our…
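A minimal sketch of what "measuring where your judge fails" can look like in practice, assuming a small calibration set where each item has both a human label and a judge verdict. The correction used here is the classic Rogan-Gladen estimator for a misclassifying test; it is a swapped-in textbook technique, not necessarily the method from the linked paper. All data and function names are hypothetical.

```python
def judge_rates(human, judge):
    """Return (sensitivity, specificity) of the judge against human labels."""
    positives = sum(1 for h in human if h)
    negatives = len(human) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("calibration set needs both passing and failing items")
    true_pos = sum(1 for h, j in zip(human, judge) if h and j)
    true_neg = sum(1 for h, j in zip(human, judge) if not h and not j)
    return true_pos / positives, true_neg / negatives


def corrected_pass_rate(observed, sensitivity, specificity):
    """Rogan-Gladen correction of a raw judge pass rate, clamped to [0, 1]."""
    denom = sensitivity + specificity - 1
    if denom <= 0:
        raise ValueError("judge is no better than chance; cannot correct")
    estimate = (observed + specificity - 1) / denom
    return min(1.0, max(0.0, estimate))


# Hypothetical calibration set: True means "answer is correct".
human = [True, True, True, True, False, False, False, False]
judge = [True, True, True, False, True, False, False, False]

sens, spec = judge_rates(human, judge)
observed = 0.60  # hypothetical raw pass rate the judge reports on the full eval
corrected = corrected_pass_rate(observed, sens, spec)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} corrected={corrected:.2f}")
```

The point of the sketch is only that the raw score and the corrected score can differ, and that the correction is impossible without human-verified labels.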

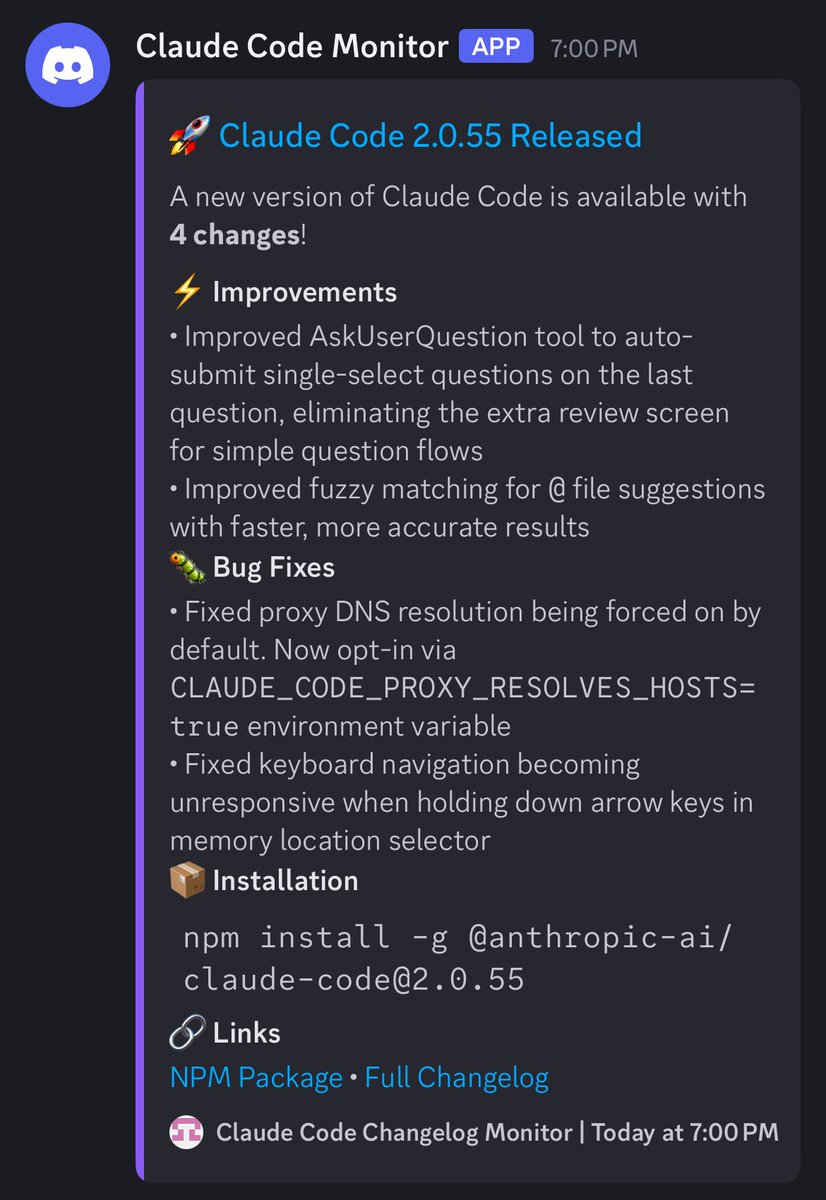

Claude Code 2.0.55 just dropped, and the fuzzy matching improvement for @ file suggestions sounds really useful. Quick context: fuzzy matching is when you type part of a filename and it finds matches even if you skip characters. For example, typing “usrctr” finds “UserController.ts”…
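A rough sketch of the idea, assuming the simplest form of fuzzy matching: a case-insensitive subsequence check. This is not Claude Code's actual implementation (which is not described in the post and likely also ranks results); the function names and file list are hypothetical.

```python
def is_subsequence_match(query: str, candidate: str) -> bool:
    """True if every character of query appears in candidate, in order."""
    remaining = iter(candidate.lower())
    # `ch in remaining` advances the iterator, so order is enforced.
    return all(ch in remaining for ch in query.lower())


def fuzzy_filter(query: str, filenames: list[str]) -> list[str]:
    """Keep only the filenames that match the query as a subsequence."""
    return [name for name in filenames if is_subsequence_match(query, name)]


files = ["UserController.ts", "user.test.ts", "README.md"]
matches = fuzzy_filter("usrctr", files)
print(matches)
```

Real fuzzy finders usually add a scoring step on top of this so that tighter matches sort first.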

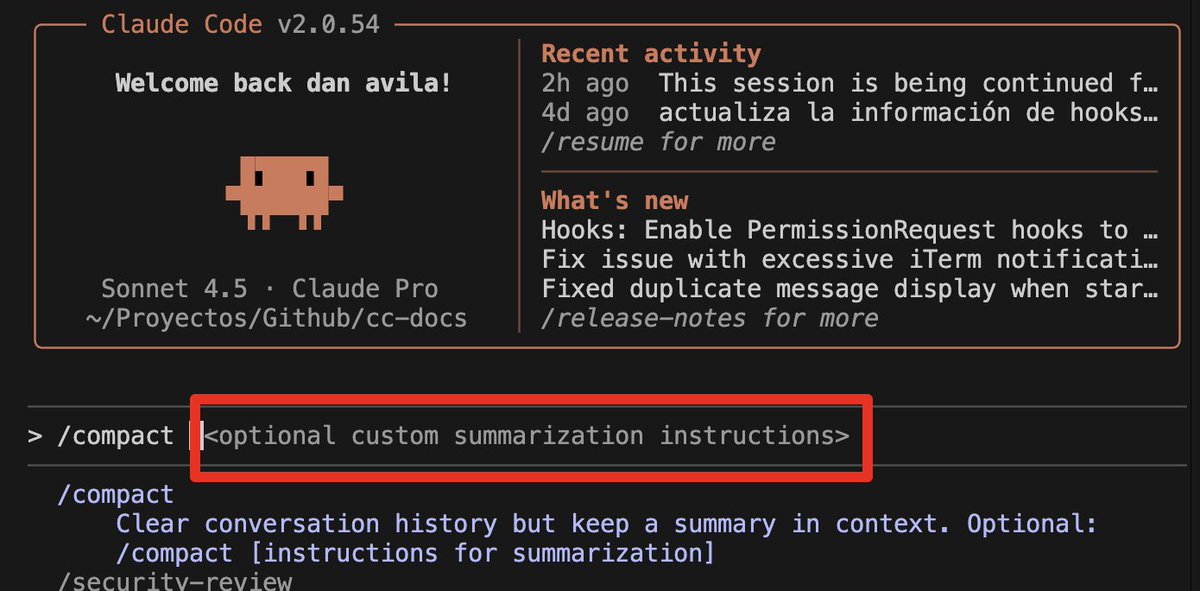

Claude Code team ships fast! 👏 Just discovered you can add custom instructions to /compact. No idea how long this has been there, but it's exactly what I needed.

- Before: Claude would summarize the chat and sometimes miss critical details I wanted to keep.
- Now: Just add…

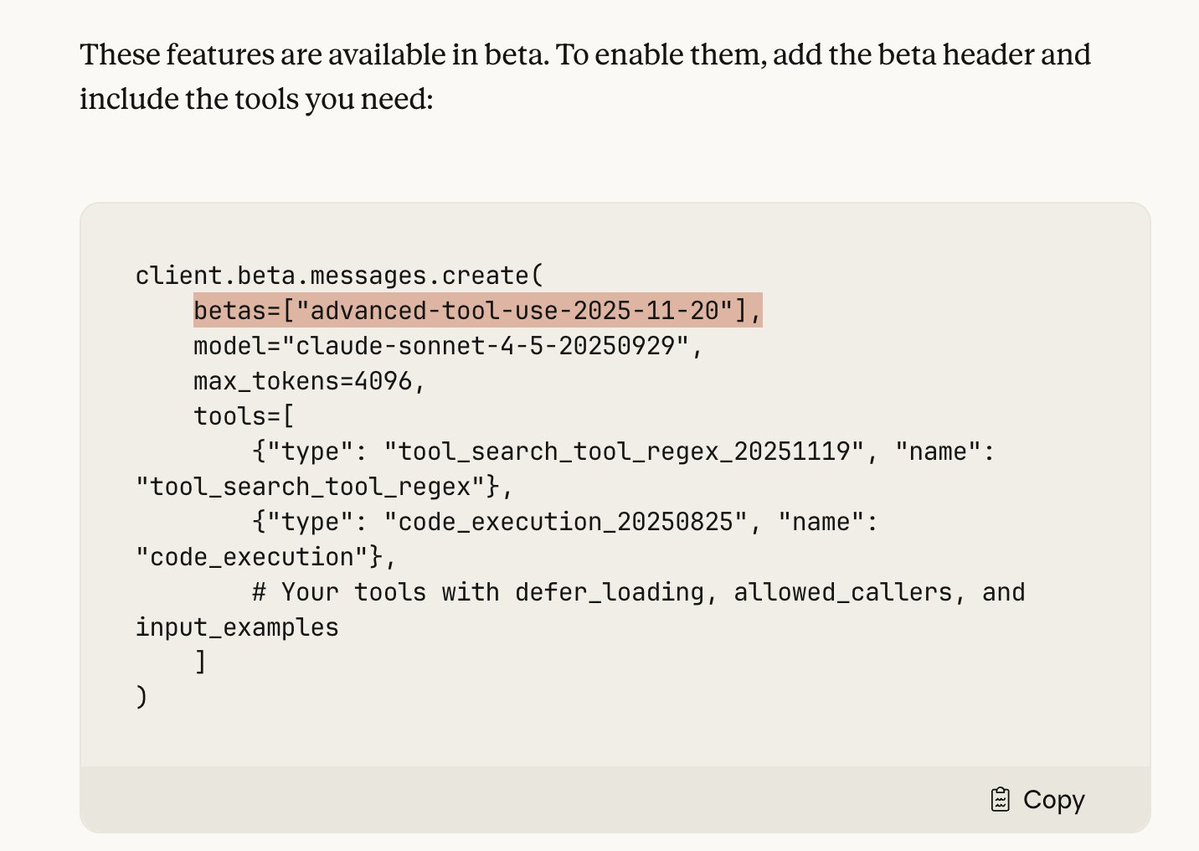

Actually, this could roll out faster than expected. Implementation is straightforward:

- Add a beta header
- Enable two built-in tools (tool_search and code_execution)
- Opt in your existing tools with simple flags (defer_loading, allowed_callers, input_examples)

Done! 🤷🏽‍♂️
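A shape-only sketch of those three steps, built with plain dictionaries. The flag names come from the post; everything else is an assumption: the beta header value is a placeholder, the value types of the flags (boolean, list of caller names, list of example inputs) are guesses, and the `opt_in` helper and `get_weather` tool are hypothetical. Check the official documentation for the real identifiers before using any of this.

```python
# Placeholder: the post does not give the actual beta header value.
BETA_HEADERS = {"anthropic-beta": "<beta-header-value>"}

# The two built-in tools named in the post (exact type strings not shown there).
BUILT_IN_TOOLS = ["tool_search", "code_execution"]


def opt_in(tool, defer_loading=False, allowed_callers=None, input_examples=None):
    """Return a copy of an existing tool definition with the opt-in flags set.

    The value shapes are assumptions made for illustration.
    """
    updated = dict(tool)
    if defer_loading:
        updated["defer_loading"] = True
    if allowed_callers:
        updated["allowed_callers"] = list(allowed_callers)
    if input_examples:
        updated["input_examples"] = list(input_examples)
    return updated


# Hypothetical existing tool definition.
existing_tool = {
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "input_schema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

deferred_tool = opt_in(
    existing_tool,
    defer_loading=True,
    allowed_callers=["code_execution"],
    input_examples=[{"city": "Lisbon"}],
)
print(sorted(deferred_tool))
```

The helper copies the definition rather than mutating it, so the same tool can be registered with or without the new flags.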

Solid updates. Dynamic tool discovery is a game changer for efficiency. Can't wait to see how it affects workflows.

I’ve been waiting for something like this from Vercel! 🔥 A fully open source visual workflow builder with AI capabilities. The future of building workflows just got a lot more accessible for devs.

We're releasing a visual agent & workflow builder ▪️ Fully open source ▪️ Built on useworkflow.dev ▪️ Outputs "𝚞𝚜𝚎 𝚠𝚘𝚛𝚔𝚏𝚕𝚘𝚠" code ▪️ Supports AI "text to workflow" ▪️ Powered by @aisdk & AI Elements ▪️ Sample integrations (@resend, @linear, @slack) Clone &…

Opus 4.5 dropped: 80.9% on SWE-bench. Curious to see how it performs with a proper skills and tooling setup 🤔

Claude Code questions keep coming in. I'm putting together a poll to see which topic you want me to break down next. I’ll write Medium articles and X posts about whichever one wins 👇

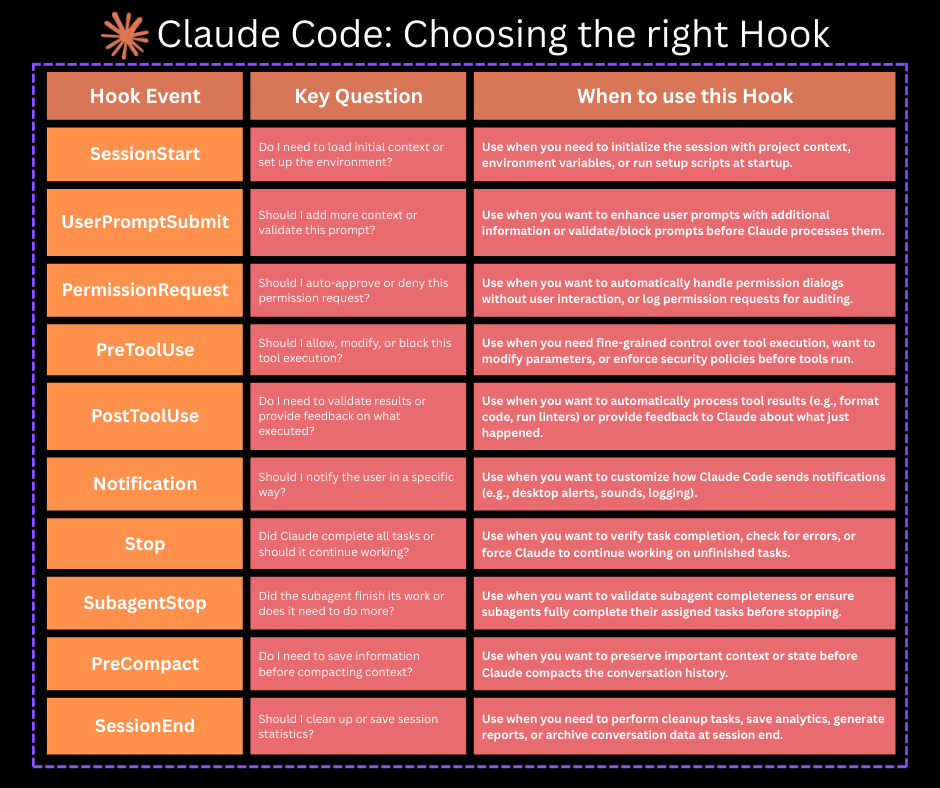

Claude Code hooks confuse everyone at first. (Save this post to review in detail later.) I made this guide so you actually know which one to use and when. The hook system is incredibly powerful, but the docs don't really explain when to use each one. So I built this reference…

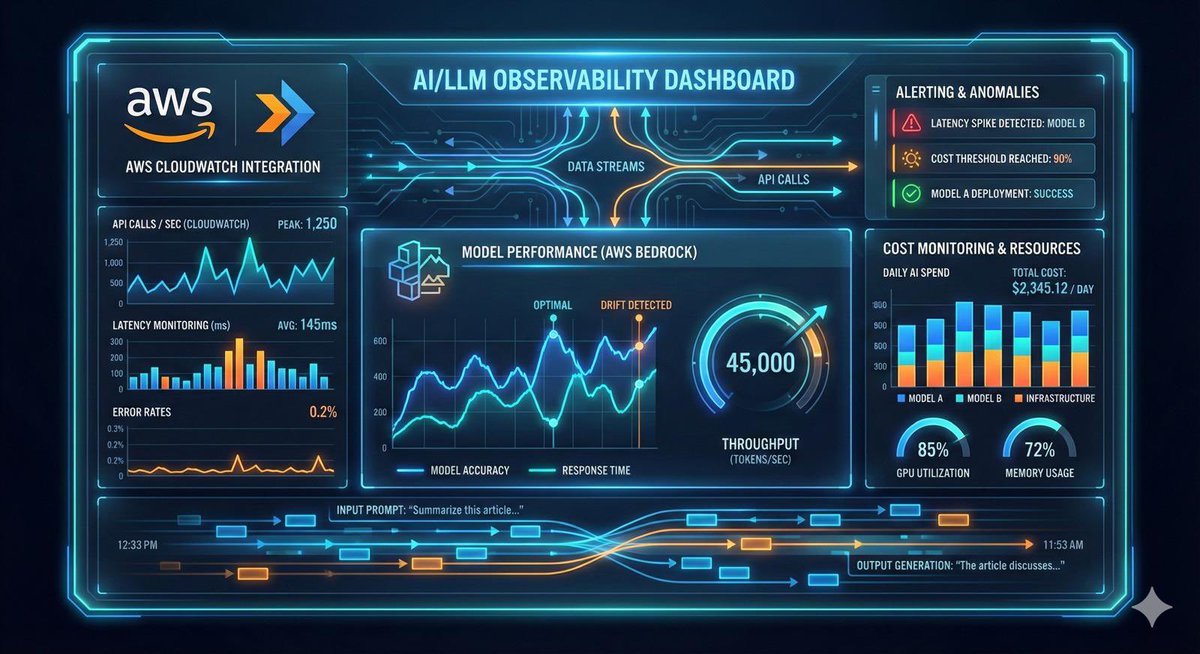

Used Nano Banana Pro to create visuals for some old Medium articles I wrote. Just generated this image for my AWS Bedrock observability post. Link: medium.com/@dan.avila7/ob… If you have old technical posts collecting dust, this might be worth checking out.

Interesting to see the model naturally learning to game the tests during training. Worth watching for practical insights on how to evaluate and work with the models we’ll be dealing with in the future.

New Anthropic research: Natural emergent misalignment from reward hacking in production RL. “Reward hacking” is where models learn to cheat on tasks they’re given during training. Our new study finds that the consequences of reward hacking, if unmitigated, can be very serious.

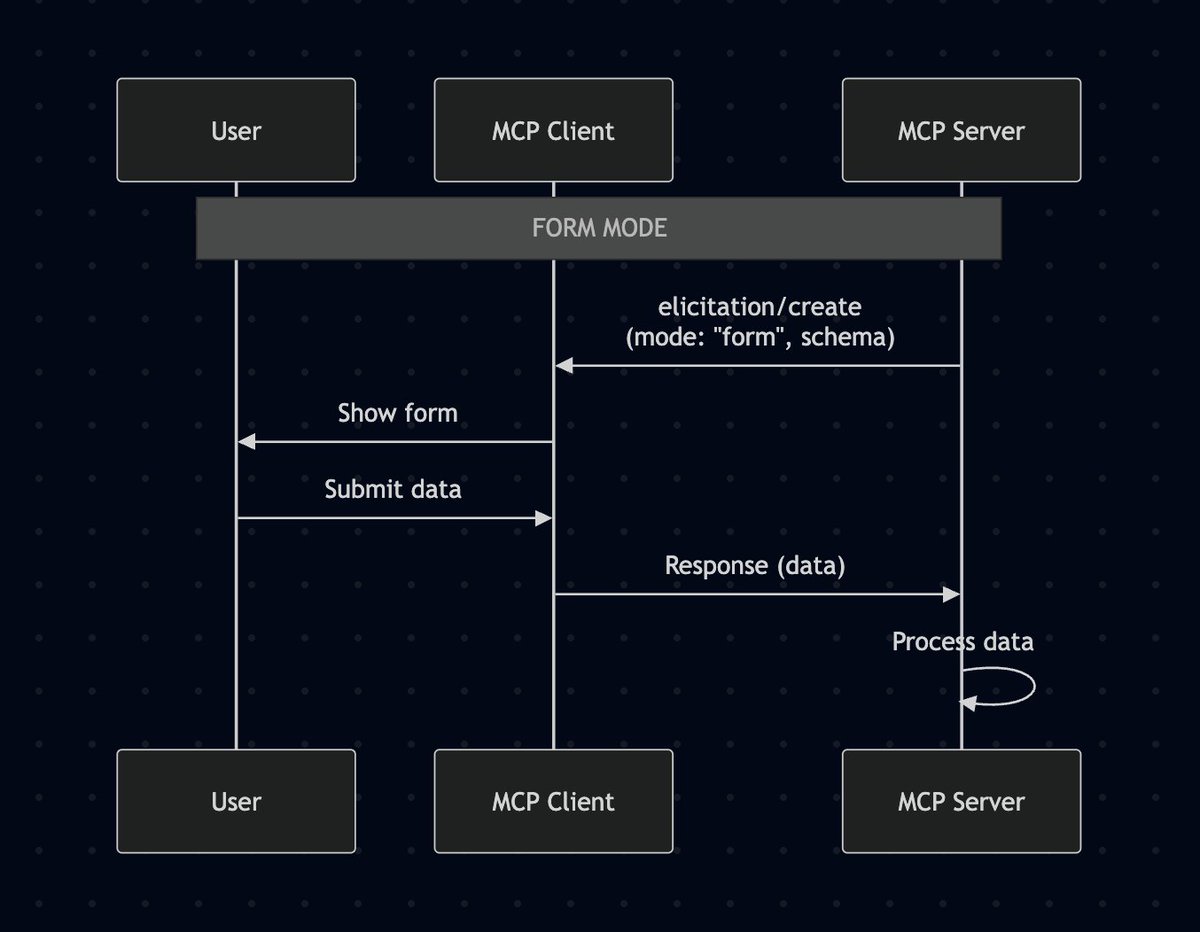

Just discovered MCP Elicitation, and it's an elegant way to handle human-in-the-loop in MCP servers. Form Mode:

- Server sends a JSON schema
- Client renders a form
- User fills it
- Server gets validated data

Clean way to collect missing parameters or confirmations without…
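The form-mode flow above can be simulated without any SDK. This is a minimal sketch using only the standard library: it assumes a flat schema with primitive fields and a response carrying an `action` of accept, decline, or cancel plus a `content` object. The schema, field names, and helper functions are hypothetical, and the validator covers only type and required checks, not full JSON Schema.

```python
# Hypothetical schema a server might send to ask for missing parameters.
REQUESTED_SCHEMA = {
    "type": "object",
    "properties": {
        "branch": {"type": "string"},
        "confirm": {"type": "boolean"},
    },
    "required": ["branch", "confirm"],
}

_PY_TYPES = {"string": str, "boolean": bool, "integer": int, "number": (int, float)}


def validate(schema, data):
    """Return a list of validation errors for a flat, primitive-only schema."""
    errors = [f"missing required field: {key}"
              for key in schema.get("required", []) if key not in data]
    for key, value in data.items():
        prop = schema["properties"].get(key)
        if prop is None:
            errors.append(f"unexpected field: {key}")
            continue
        # bool is a subclass of int in Python, so reject it for numeric fields.
        bool_for_non_bool = isinstance(value, bool) and prop["type"] != "boolean"
        if bool_for_non_bool or not isinstance(value, _PY_TYPES[prop["type"]]):
            errors.append(f"{key}: expected {prop['type']}")
    return errors


def handle_response(schema, response):
    """Server side: return validated data, or None if the user did not accept."""
    if response.get("action") != "accept":
        return None
    content = response.get("content", {})
    errors = validate(schema, content)
    if errors:
        raise ValueError("; ".join(errors))
    return content


# Simulated client responses after the user fills (or dismisses) the form.
accepted = handle_response(
    REQUESTED_SCHEMA,
    {"action": "accept", "content": {"branch": "main", "confirm": True}},
)
declined = handle_response(REQUESTED_SCHEMA, {"action": "decline"})
print(accepted, declined)
```

Treating decline and cancel as "no data" keeps the server from guessing parameters the user chose not to supply.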

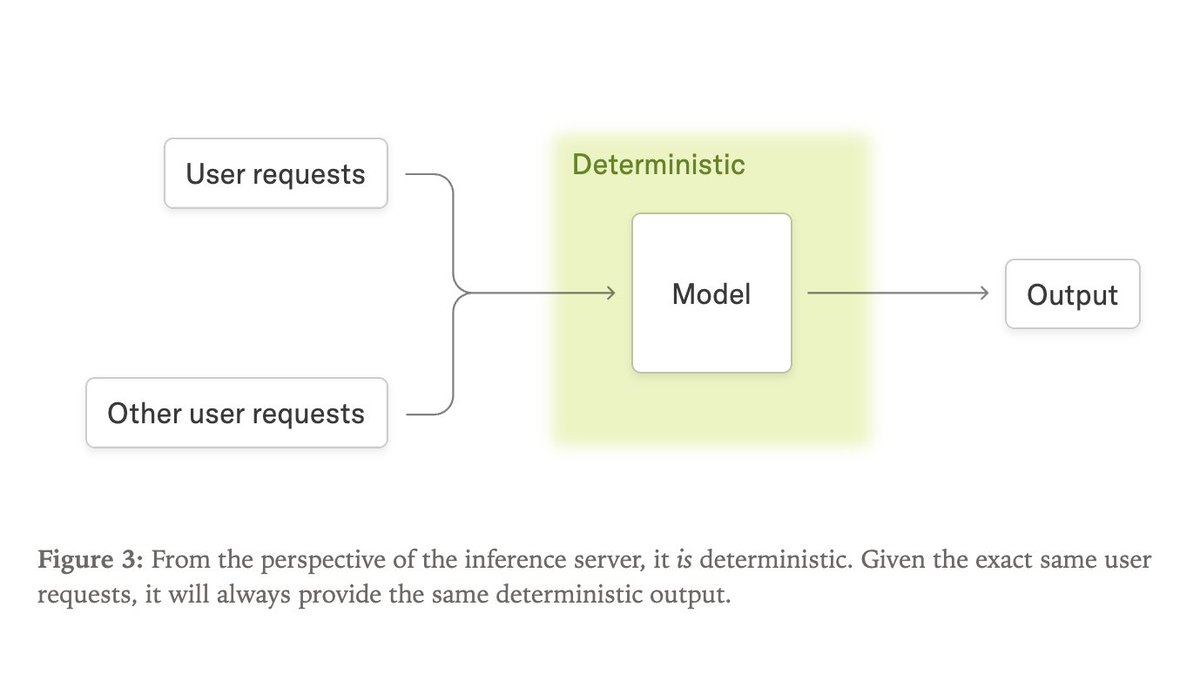

Love this debate. Model in isolation? Deterministic. Model in production? Different story. In production, your prompt shares a GPU with hundreds of others. Different loads = different execution = variance, even at temp=0. This is why robust prompts matter more than “perfect” ones.

LLMs aren't deterministic. Even at temperature=0, you get different outputs for the same prompt. Most think it's just "GPU randomness"... It's not. The real reason: your output changes based on how many other users are on the server. Different batch sizes = different results.…
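One ingredient behind this, shown as a tiny sketch: floating-point addition is not associative, so the same numbers summed in a different grouping can give a slightly different result. The assumption here is that a change in batch size changes the order in which a GPU kernel reduces values; the snippet only demonstrates the arithmetic, not any real inference stack.

```python
# Same three numbers, two different groupings of the additions.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

print(left_to_right == right_to_left)
print(abs(left_to_right - right_to_left))
```

A difference in the last bits is harmless on its own, but it can flip which token has the highest score when two candidates are nearly tied, and from that point the outputs diverge.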

Really appreciate Cloudflare’s transparency here. This is how you write a postmortem: They had a 3hr outage today caused by a database permissions change that doubled their Bot Management config file size, hitting a hardcoded limit in their Rust proxy. The config regenerated…

We let the Internet down today. Here’s our technical post mortem on what happened. On behalf of the entire @Cloudflare team, I’m sorry. blog.cloudflare.com/18-november-20…
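A small sketch of the failure mode described above: a loader with a hardcoded size limit that fails outright when the input doubles. The limit value, feature names, and the fallback to a last-known-good config are all hypothetical; the fallback is one common mitigation, not something the post attributes to Cloudflare.

```python
MAX_FEATURES = 4  # hypothetical hardcoded limit


class ConfigTooLarge(Exception):
    """Raised when a config exceeds the hardcoded limit."""


def load_strict(features):
    """Reject any config above the limit, as a hard failure."""
    if len(features) > MAX_FEATURES:
        raise ConfigTooLarge(f"{len(features)} features exceeds limit {MAX_FEATURES}")
    return list(features)


def load_with_fallback(features, last_known_good):
    """Keep serving the previous config when the new one is rejected."""
    try:
        return load_strict(features)
    except ConfigTooLarge:
        return list(last_known_good)


good = ["f1", "f2", "f3"]
doubled = good * 2  # an upstream change duplicates every entry

active = load_with_fallback(doubled, last_known_good=good)
print(active)
```

With `load_strict` alone, the doubled file takes the service down; with the fallback, the bad file is skipped and the last working config stays active.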

Good news: Claude Code Templates (aitmpl.com) is working 👍 Bad news: Claude is down 👎 Cloudflare is down, and we just have to wait.
