Frank Lin

@developerlin

Create Limitless Value with AI | Exploring AI’s Future


New paper 📜: Tiny Recursion Model (TRM) is a recursive reasoning approach with a tiny 7M-parameter neural network that obtains 45% on ARC-AGI-1 and 8% on ARC-AGI-2, beating most LLMs. Blog: alexiajm.github.io/2025/09/29/tin… Code: github.com/SamsungSAILMon… Paper: arxiv.org/abs/2510.04871

Simple but beautiful little agent based on Kimi K2 thinking

Kimi-k2-thinking is incredible. So I built an agent to test it out, Kimi-writer. It can generate a full novel from one prompt, running up to 300 tool requests per session. Here it is creating an entire book, a collection of 15 short sci-fi stories.

The GPT moment for robot control is here? @LeCARLab's BFM-Zero is a promptable behavioral foundation model for humanoid control, trained via unsupervised reinforcement learning. One policy, countless behaviors: motion tracking, goal-reaching, and reward optimization, all zero-shot.

Impressive, 3Q mix

MLLMs are great at understanding videos, but struggle with spatial reasoning—like estimating distances or tracking objects across time. the bottleneck? getting precise 3D spatial annotations on real videos is expensive and error-prone. introducing SIMS-V 🤖 [1/n]

100M Gaussians. Streamed on a single RTX 3090. Welcome to @NVIDIAAIDev's fVDB Reality Capture, bringing 3D Gaussian Splatting, volumetric data, and OpenUSD into one pipeline. 🎥 Full demo → youtu.be/ZnhBGmHyJqM #NVIDIA #GaussianSplatting #3D

🚨 Wan 2.2 Animate just got a 𝗺𝗮𝘀𝘀𝗶𝘃𝗲 upgrade!
⚡️ 4× faster inference
🎨 Even sharper, cleaner visuals
💸 Only $0.08/s for 720p

A 1-billion-parameter motion model trained on NVIDIA CUDA, requiring 8 GB of VRAM for real-time operation. It converts webcam or video footage into real-time XYZ skeletal point data and rotation values, transmitting them to Blender, Unity, and Unreal Engine (UE) for retargeting.

EdgeTAM, Meta's real-time segment tracker, is now in @huggingface transformers under the Apache-2.0 license 🔥
> 22x faster than SAM2; runs at 16 FPS on iPhone 15 Pro Max with no quantization
> supports single, multiple, and refined point prompts, as well as bounding-box prompts

Can’t believe it: our Princeton AI^2 postdoc Shilong Liu @atasteoff rebuilt DeepSeek-OCR from scratch in just two weeks 😳 and open-sourced it. This is how research should be done 🙌 #AI #LLM #DeepSeek #MachineLearning #Princeton @omarsar0 @PrincetonAInews @akshay_pachaar

Discover DeepOCR: a fully open-source reproduction of DeepSeek-OCR, complete with training & evaluation code! #DeepLearning #OCR

MotionStream: Real-Time Video Generation with Interactive Motion Controls. The model runs in real time on a single NVIDIA H100 GPU (29 FPS, 0.4 s latency).

Our paper "Vision Transformers Don't Need Trained Registers" will appear as a Spotlight at NeurIPS 2025! We uncover the mechanism behind high-norm tokens and attention sinks in ViTs, propose a training-free fix, and recently added an analytical model -- more on that below. ⬇️

Vision transformers have high-norm outliers that hurt performance and distort attention. While prior work removed them by retraining with “register” tokens, we find the mechanism behind outliers and make registers at ✨test-time✨—giving clean features and better performance! 🧵

We present MotionStream — real-time, long-duration video generation that you can interactively control just by dragging your mouse. All videos here are raw, real-time screen captures without any post-processing. Model runs on a single H100 at 29 FPS and 0.4s latency.

ThinkMorph: A New Leap in Multimodal Reasoning. This unified model, fine-tuned on 24K high-quality interleaved reasoning traces, learns to generate progressive text-image thoughts that mutually advance reasoning. It achieves huge gains on vision-centric tasks & exhibits emergent…

This is cool

Introducing Molview, the IPython/Jupyter widget version of nano-protein-viewer 🔍:

The Illustrated NeurIPS 2025: A Visual Map of the AI Frontier. New blog post! NeurIPS 2025 papers are out, and it’s a lot to take in. This visualization lets you explore the entire research landscape interactively, with clusters, summaries, and @cohere LLM-generated explanations…

"I don’t think there’s any reason why a machine shouldn’t have consciousness. If you swapped out one neuron with an artificial neuron that acts in all the same ways, would you lose consciousness?" ~ Geoffrey Hinton A fascinating discussion in this video. --- From the 'The…

Qwen-Edit 2509 Multiple-angles LoRA. Enables camera movement commands; control up, down, left, right, rotation, and look direction; also supports switching between wide-angle and close-up shots. huggingface.co/dx8152/Qwen-Ed…

Just trained Qwen3-VL-2B-Instruct for an epoch on this dataset, and it already seems to improve reasoning performance across several tasks! model: huggingface.co/hbXNov/Qwen3-V…

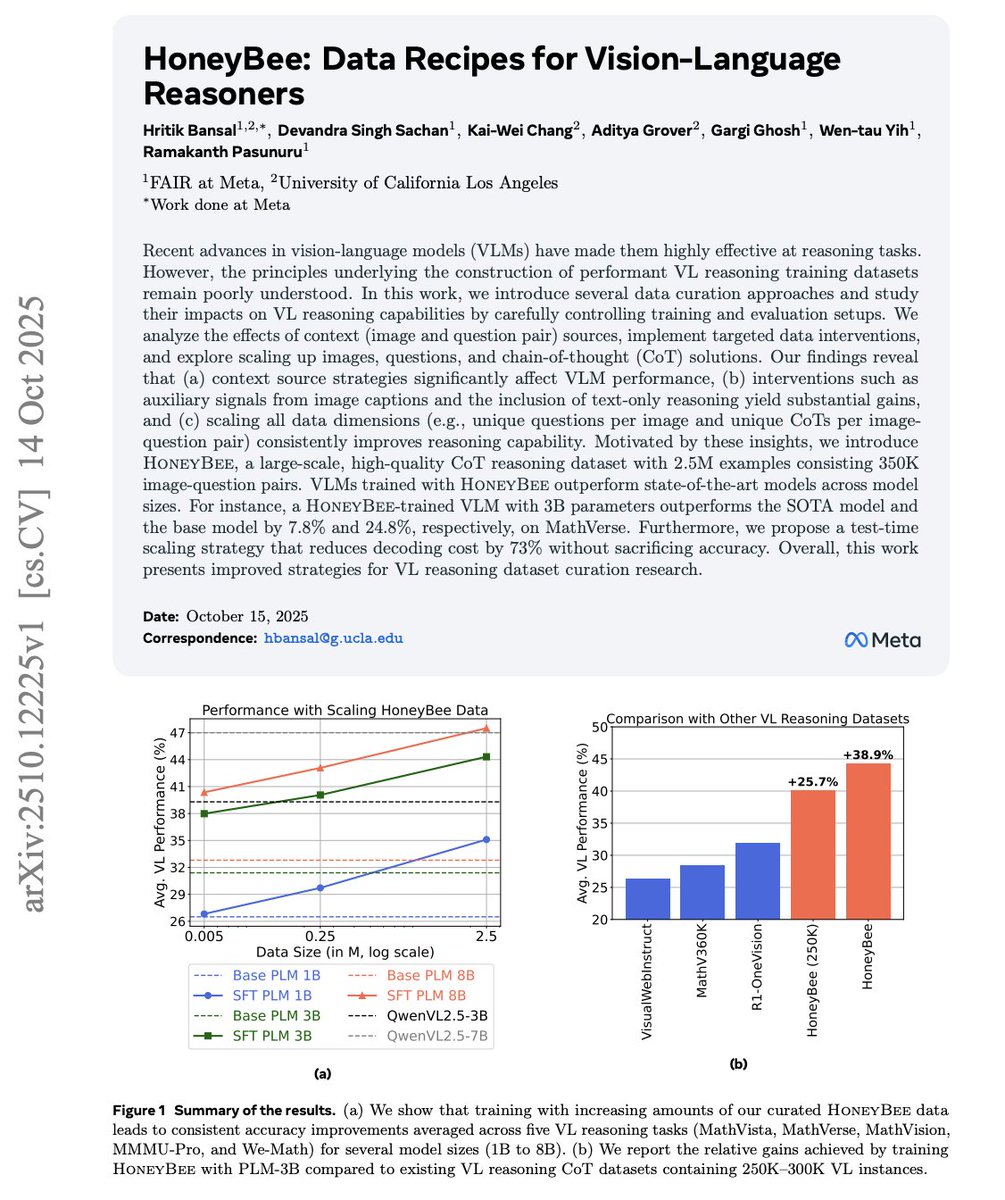

New paper 📢 Most powerful vision-language (VL) reasoning datasets remain proprietary 🔒, hindering efforts to study their principles and develop similarly effective datasets in the open 🔓. Thus, we introduce HoneyBee, a 2.5M-example dataset created through careful data…

One of our best talks yet. Thanks @a1zhang for the amazing presentation + Q&A on Recursive Language Models! If you're interested in how we can get agents to handle near-infinite contexts, this one is a must. Watch the recording here! youtu.be/_TaIZLKhfLc


Recursive Language Models with Alex Zhang
