Andrew Stack

@drewstack

Search & Agents Research @TheAgenticSeek | Ex-Physics PhD @Stanford

AI without memory is like a mind that forgets every moment it lives. Mem0 changes that, giving agents the ability to remember, learn, and evolve across time. Built for developers, powered by open source, and inspired by how humans grow through experience. The future of truly…

Build AI agents with scalable long-term memory! Mem0 is a fully open-source memory layer that gives AI agents persistent, contextual memory across sessions. Without memory, agents reset after every interaction, losing context and continuity, which limits their usefulness in…

At the core of our system lies true collaboration between agents: a planner agent that thinks and tool agents that act. The planner breaks complex goals into logical, actionable steps, while tool agents execute with focus and precision. This multi-agent architecture brings…

$AGENTIC is live!

A deep dive into core/agent_manager.py reveals where the real magic of autonomous task planning unfolds. This is the brain of AgenticSeek, coordinating decisions, managing dependencies, and orchestrating complex actions with precision. Understanding this layer is key to…

Memory is the backbone of coherent AI conversations. Understanding and optimizing the context window empowers LLMs to maintain clarity, continuity, and depth in every interaction. Efficient memory management unlocks richer dialogue, deeper insights, and smarter AI performance.

Memory & Context Window in LLMs: Large Language Models (LLMs) process information within a fixed-length context window, meaning they can only "see" a limited amount of text at once. Managing this memory efficiently is key to maintaining coherent long conversations or documents.…
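
One common way to stay inside a fixed-length window is to keep only the most recent messages that fit a token budget. The sketch below illustrates that idea under stated assumptions: `estimate_tokens` is a hypothetical word-count stand-in for a real tokenizer, and `trim_to_window` is an illustrative helper, not something taken from the post.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_to_window(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep the newest messages whose combined estimate fits the budget."""
    kept: List[Message] = []
    used = 0
    # Walk backwards so the most recent turns are considered first.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    {"role": "user", "content": "one two three four"},
    {"role": "assistant", "content": "five six"},
    {"role": "user", "content": "seven eight nine"},
]
window = trim_to_window(history, max_tokens=5)
```

Dropping the oldest turns is the simplest policy; summarizing them instead is another option when early context still matters.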

The best agents don’t replace action; they amplify direction. They remember context, handle repetition, and free you to focus on the spark, the part only you can bring. Building with agents isn’t about automation. It’s about collaboration between logic and imagination, a…

AgenticSeek’s multi-agent architecture is built for scale. A planner agent breaks tasks into steps and delegates them to specialized tool agents. Each agent focuses on a single responsibility, such as code execution, data parsing, or retrieval, while the planner orchestrates the flow.…
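
The planner-and-tool-agents pattern described here can be sketched in a few lines. This is a minimal illustration, not AgenticSeek's actual code: the `plan` function returns a fixed plan where a real planner would presumably consult an LLM, and every name below (`TOOL_AGENTS`, `orchestrate`, the three tool functions) is hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str]  # (tool agent name, input for that agent)


def run_code(payload: str) -> str:
    """Hypothetical tool agent for code execution."""
    return f"executed: {payload}"


def parse_data(payload: str) -> str:
    """Hypothetical tool agent for data parsing."""
    return f"parsed: {payload}"


def retrieve(payload: str) -> str:
    """Hypothetical tool agent for retrieval."""
    return f"retrieved: {payload}"


# Each tool agent owns exactly one responsibility.
TOOL_AGENTS: Dict[str, Callable[[str], str]] = {
    "code": run_code,
    "parse": parse_data,
    "retrieve": retrieve,
}


def plan(goal: str) -> List[Step]:
    """Hypothetical planner: a fixed three-step plan for any goal."""
    return [("retrieve", goal), ("parse", goal), ("code", goal)]


def orchestrate(goal: str) -> List[str]:
    """Run each planned step by delegating to the matching tool agent."""
    return [TOOL_AGENTS[name](payload) for name, payload in plan(goal)]


results = orchestrate("sales report")
```

Keeping the registry separate from the planner means a new tool agent can be added without touching the orchestration loop.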

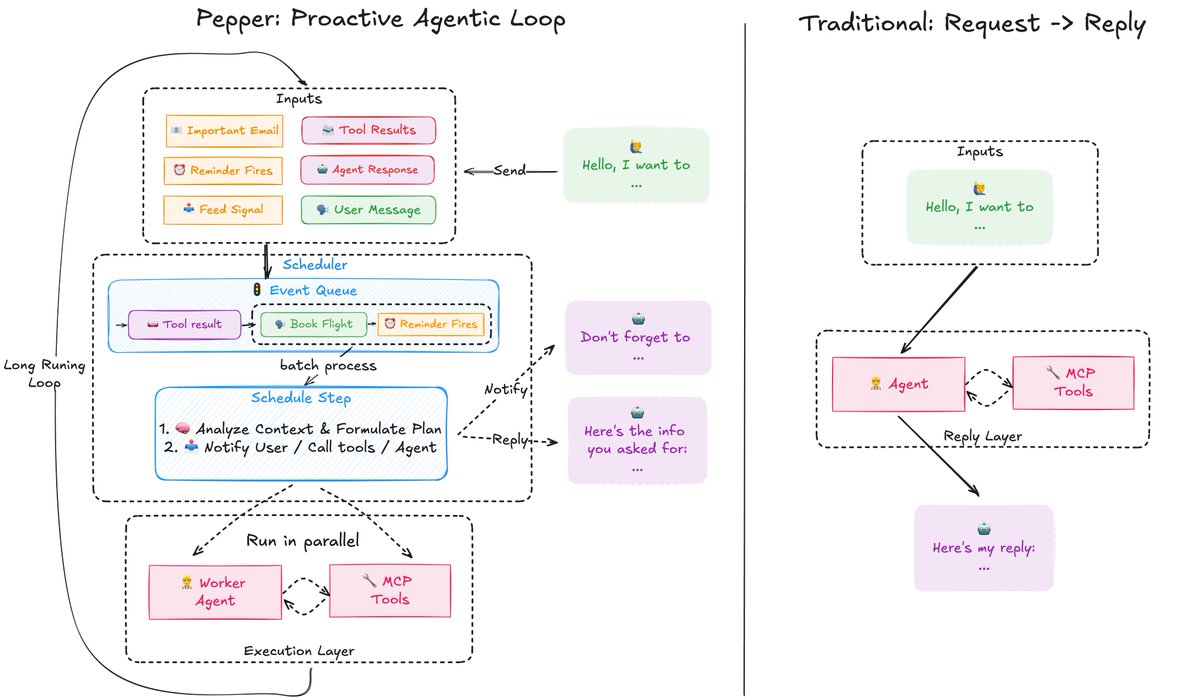

Introducing Pepper🌶️! An open-source, real-time, event-driven architecture to power the next generation of proactive agents. Tired of static reactive chatbots? Pepper enables agents that anticipate your needs, actively engage, and work continuously in the background (think…

Static RAG leaves your agents chasing stale data. Airweave flips the script with live, bi-temporal knowledge bases, giving agents real-time recall, smarter retrieval, and context that never lags behind. With support for semantic and keyword search, query expansion, and 30+ data…

RAG can’t keep up with real-time data. Airweave builds live, bi-temporal knowledge bases so that your Agents always reason on the freshest facts. Supports fully agentic retrieval with semantic and keyword search, query expansion, and more across 30+ sources. 100% open-source.

More rules don’t make smarter agents; they make confused ones. Break big prompts into conditional guidelines, and let the model load only what matters in the moment.

System prompts are getting outdated! Here's a counterintuitive lesson from building real-world Agents: Writing giant system prompts doesn't improve an Agent's performance; it often makes it worse. For example, you add a rule about refund policies. Then one about tone. Then…
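
To make the idea of conditional guidelines concrete, here is a rough sketch in which each rule carries its own trigger and only matching rules are added to the prompt. The keyword-based conditions, the example rules, and the names `Guideline` and `build_prompt` are all assumptions for illustration; a production system might use a classifier or the model itself to decide which guidelines apply.

```python
from typing import Callable, List, NamedTuple


class Guideline(NamedTuple):
    condition: Callable[[str], bool]  # decides whether the rule applies
    instruction: str                  # text added to the prompt when it does


# Hypothetical rules; keyword matching is a deliberately simple trigger.
GUIDELINES: List[Guideline] = [
    Guideline(
        lambda msg: "refund" in msg.lower(),
        "Explain the refund policy before offering alternatives.",
    ),
    Guideline(
        lambda msg: "upset" in msg.lower(),
        "Use a calm, apologetic tone.",
    ),
]

BASE_PROMPT = "You are a support agent."


def build_prompt(user_message: str) -> str:
    """Assemble the base prompt plus only the guidelines that match."""
    active = [g.instruction for g in GUIDELINES if g.condition(user_message)]
    return "\n".join([BASE_PROMPT, *active])


prompt = build_prompt("I want a refund")
```

A message that triggers no rule gets only the base prompt, so unrelated instructions never compete for the model's attention.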

LFM2-Audio just dropped! It's a 1.5B model that understands and generates both text and audio. Inference is 10x faster, with quality on par with models 10x larger. Available today on @huggingface and our playground 🥳

AI is evolving too fast for clunky monoliths. The future is Small Language Models + Microservices + MCP: agile, modular, and resource-efficient. SLMs bring speed, privacy, and edge-readiness. Microservices keep systems clean, scalable, and dev-friendly. MCP acts as the…

A 100% open-source alternative to n8n! Sim is a drag-and-drop open-source platform to build and deploy Agentic workflows.

- Runs 100% locally
- Works with any local LLM

I used it to build a finance assistant app & connected it to Telegram in minutes. The workflow is simple:…

DeepSeek Sparse Attention transforms efficiency, cutting core attention complexity so models work faster while staying sharp.

DeepSeek introduces a new sparse attention variant called DeepSeek Sparse Attention (DSA). DSA primarily consists of two components: a lightning indexer and a fine-grained token selection mechanism. It leads to significant inference speedups: "DSA reduces the core attention…
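
The two-stage shape named in the post, an indexer that scores tokens followed by selection of a subset to attend to, can be illustrated with a toy example. This is not DeepSeek's implementation: the dot-product scoring in `select_top_k` is a placeholder for whatever the lightning indexer actually computes, and all function names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def select_top_k(index_query: Vector, index_keys: List[Vector], k: int) -> List[int]:
    """Placeholder indexer: score every key, return the k best positions in order."""
    scores = [dot(index_query, key) for key in index_keys]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


def sparse_attention(
    query: Vector, keys: List[Vector], values: List[Vector], selected: List[int]
) -> List[float]:
    """Softmax attention computed over the selected positions only."""
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[i]) * scale for i in selected]
    peak = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(logit - peak) for logit in logits]
    total = sum(weights)
    dim = len(values[0])
    return [
        sum(w * values[i][d] for w, i in zip(weights, selected)) / total
        for d in range(dim)
    ]


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [5.0, 5.0]]

# For simplicity the indexer reuses the attention query and keys (an assumption).
selected = select_top_k(query, keys, k=2)
output = sparse_attention(query, keys, values, selected)
```

The attention step here touches only `k` positions per query rather than every token, which is where the cost saving in this kind of scheme comes from.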

Code doesn’t live in silos anymore. A workflow that starts with browsing research, shifts into generating functions, then closes with file ops to package results, now happens as one seamless narrative. Think about it: instead of manually chaining tools, the system holds the…

I started co-leading Gemini API a few months ago. My focus: how the API should evolve for agentic use cases. LLM APIs are quietly evolving from a simple request/response into a full-blown protocol with growing complexity. Some notes 👇
