Enigma Spectre

@echo_fractal

*archaic croaky voice* “I remember when AI was just called predictive analytics” *fades to dust*

Ooooooooo!

Meet the XTR-0 A way for early developers to make first contact with thermodynamic intelligence. More at: extropic.ai

🧵 LoRA vs full fine-tuning: same performance ≠ same solution. Our NeurIPS ‘25 paper 🎉shows that LoRA and full fine-tuning, even when equally well fit, learn structurally different solutions and that LoRA forgets less and can be made even better (lesser forgetting) by a simple…

This is gold and I couldn’t resist sharing, but it is an interesting paper too! Do check out the full thread.

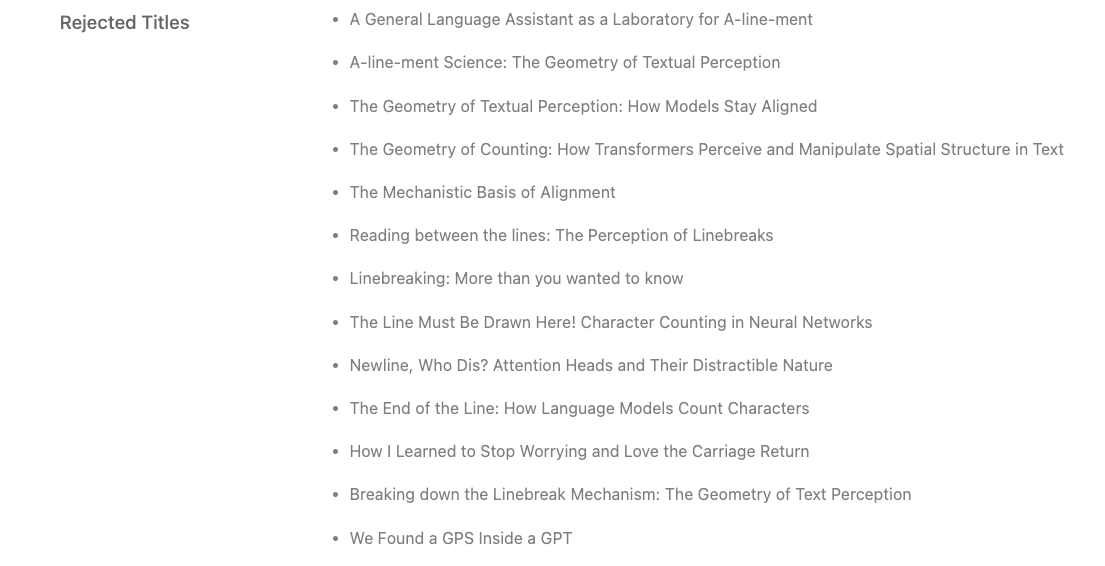

One of the hardest parts of finishing this paper was deciding what to call it! The memes really write themselves… We included some of our favorite rejected titles in the appendix

I love Markov Chains, and you should too lol. Props to the authors and I’ll probably use this trick myself. More generally though, I bet there’s lots of ways to improve sampling at inference and I’m looking forward to more improvements there.

How do they pull this off efficiently? They use a Metropolis-Hastings loop (MCMC). At each step, they resample part of the output and decide accept or reject based on the model’s internal probabilities.

My brain broke when I read this paper. A tiny 7 Million parameter model just beat DeepSeek-R1, Gemini 2.5 pro, and o3-mini at reasoning on both ARG-AGI 1 and ARC-AGI 2. It's called Tiny Recursive Model (TRM) from Samsung. How can a model 10,000x smaller be smarter? Here's how…

This is incredibly cool. Props to sammyuri ❤️

this is beyond mindblowing for me. somebody built a 5 million param language model inside minecraft, trained it, equipped it with basic conversational ability. probably the best thing i have seen entire month.

sharing a paper i learned lots from. it just won neurips oral and will be an extremely good read to gain intuitions about: - attention gates & sinks - non-linearity, sparsity, & expressiveness in attention - training stability & long-context scaling some takeaways :) what…

Even if ads are not displayed on paid tiers, it is nearly certain that they will sell your profile to third party advertisement agencies. 🤢🤮 Open source local models FTW.

SOURCES: OpenAI is planning to bring ads to ChatGPT. Also: In a message to employees, Sam Altman says he wants 250 gigawatts of compute by 2033. He calls OpenAI's team behind Stargate a "core bet" like research / robotics. "Doing this right will cost trillions."

It's Pythagorean Triple Square Day 9/16/25 and no one cares

United States Trends

- 1. GTA 6 46.1K posts

- 2. GTA VI 16.3K posts

- 3. Rockstar 45.6K posts

- 4. #LOUDERTHANEVER 1,497 posts

- 5. GTA 5 7,366 posts

- 6. Nancy Pelosi 116K posts

- 7. Antonio Brown 3,768 posts

- 8. Rockies 3,763 posts

- 9. Paul DePodesta 1,803 posts

- 10. Ozempic 16K posts

- 11. Grand Theft Auto VI 36.9K posts

- 12. GTA 7 N/A

- 13. Justin Dean 1,002 posts

- 14. Luke Fickell N/A

- 15. $TSLA 55.3K posts

- 16. Elon Musk 226K posts

- 17. RFK Jr 27.8K posts

- 18. Oval Office 40.6K posts

- 19. Pete Fairbanks N/A

- 20. Michael Jackson 89.7K posts

Something went wrong.

Something went wrong.