Gaurav Kumar

@gauravkmr__

Junior Research Fellow at IISER Pune. Complex Networks. MSc Physics, IIT Gandhinagar.

To paraphrase something I tell my students, especially when they are starting a research project: there are certainly many people in the world who think better than we do. But the competition shrinks when it comes to people who take their thoughts and 'do' something with them.…

All these things were not enough for Air India. After torturous treatment from Air India, I somehow landed at JFK. Surprise! Guess what: they had conveniently offloaded more than 100 checked-in bags, including mine, in Delhi itself.

I was booked on AI101 from Delhi to JFK on 21 June. A few hours before departure, I got a message that my itinerary had changed due to “*unforeseen operational reasons*.” I was scared, as operational issues at AI after the earlier incident immediately raised safety concerns. (1/7)

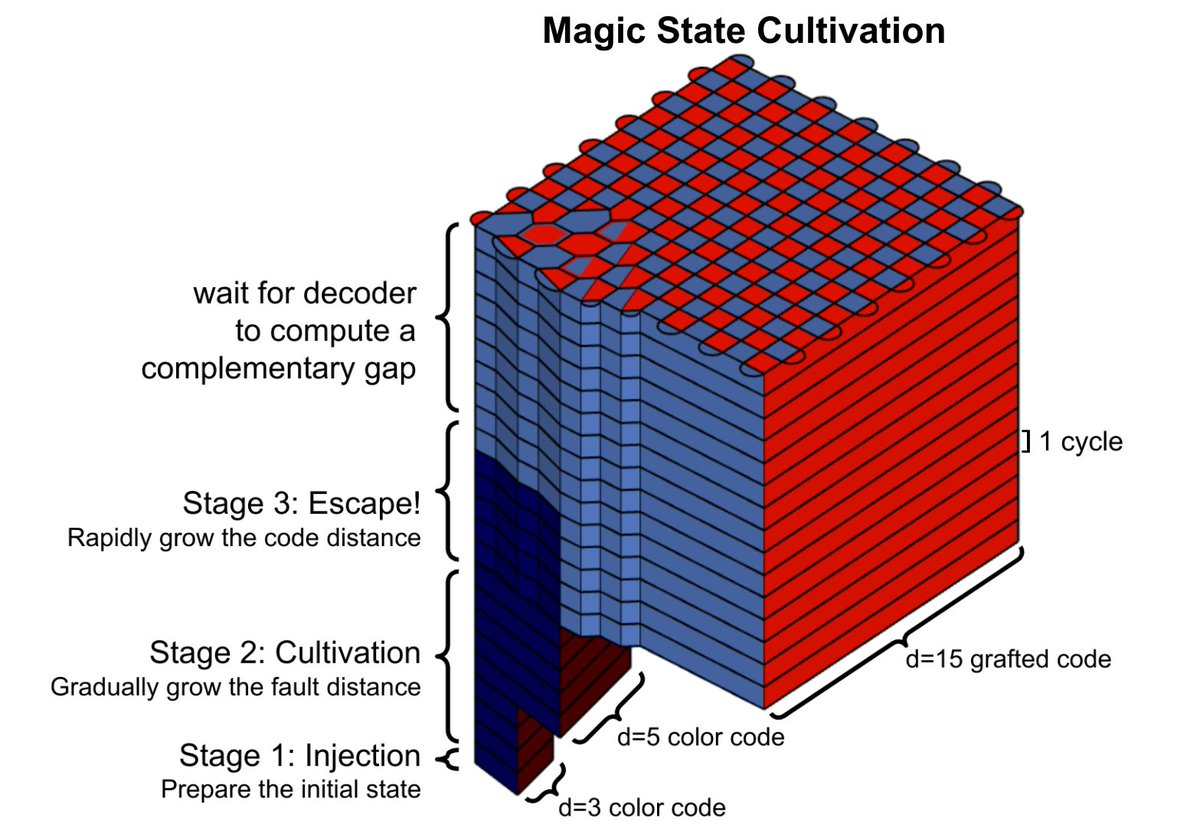

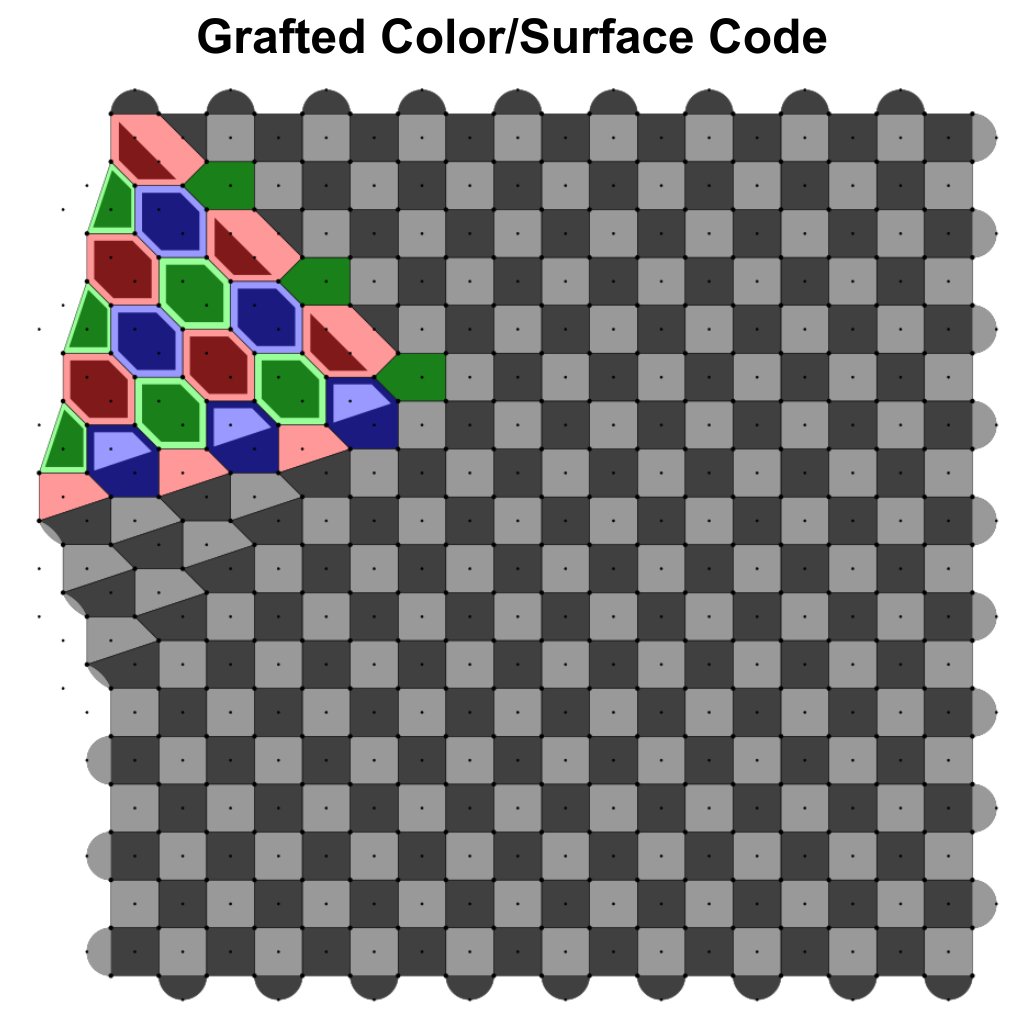

Major update on factoring with Shor’s algorithm from Craig Gidney at #Google: the physical #qubit count needed to break an RSA-2048 key drops from 20e6 to 1e6, using yoked surface codes, magic state cultivation and some efficient arithmetic. arxiv.org/abs/2505.15917 #quantum

Many recent posts on free energy. Here is a summary from my class “Statistical mechanics of learning and computation” on the many relations between free energy, KL divergence, large deviation theory, entropy, Boltzmann distribution, cumulants, Legendre duality, saddle points,…
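Several of the relations in that list fit in a few lines. This is a sketch using standard definitions, not the class notes themselves. Take a Boltzmann distribution at inverse temperature β over states x with energy E(x). Define the variational free energy F[q] of any trial distribution q. The KL divergence to the Boltzmann distribution is then β times the free-energy gap, and derivatives of ln Z generate the cumulants of E:

```latex
p(x) = \frac{e^{-\beta E(x)}}{Z}, \qquad Z = \sum_x e^{-\beta E(x)}, \qquad F = -\beta^{-1} \ln Z

F[q] = \langle E \rangle_q - \beta^{-1} H(q), \qquad H(q) = -\sum_x q(x) \ln q(x)

D_{\mathrm{KL}}(q \,\|\, p) = \sum_x q(x) \ln \frac{q(x)}{p(x)} = \beta \bigl( F[q] - F \bigr) \ge 0

-\frac{\partial \ln Z}{\partial \beta} = \langle E \rangle_p, \qquad \frac{\partial^2 \ln Z}{\partial \beta^2} = \operatorname{Var}_p(E)
```

Because the KL divergence is non-negative and vanishes only at q = p, the Boltzmann distribution is the minimizer of F[q], and the minimum value is F. This is the Gibbs variational principle.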

Exactly how I like learning about this stuff. MCMC is not a difficult concept to understand if you have the right person explain it to you.
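The tweet doesn't say which explanation it is praising. As a stand-alone illustration of the core idea, here is a random-walk Metropolis sampler, the simplest MCMC method, targeting a standard normal. The target density, step size, chain length and burn-in are all assumptions chosen for the demo:

```python
import math
import random


def log_target(x: float) -> float:
    """Unnormalised log-density of a standard normal: log p(x) = -x^2/2 + const."""
    return -0.5 * x * x


def metropolis(n_steps: int, step_size: float = 1.0, x0: float = 0.0, seed: int = 0) -> list:
    """Random-walk Metropolis: propose x' = x + N(0, step_size^2), accept with
    probability min(1, p(x')/p(x)). The proposal is symmetric, so the
    Hastings correction cancels and the normalising constant is never needed."""
    rng = random.Random(seed)
    x = x0
    samples = []
    for _ in range(n_steps):
        proposal = x + rng.gauss(0.0, step_size)
        log_alpha = log_target(proposal) - log_target(x)
        # Always accept uphill moves; accept downhill moves with prob exp(log_alpha).
        if log_alpha >= 0 or rng.random() < math.exp(log_alpha):
            x = proposal
        samples.append(x)  # on rejection the current state is recorded again
    return samples


samples = metropolis(50_000, step_size=1.0)
burned = samples[5_000:]  # discard the first 10% as burn-in
mean = sum(burned) / len(burned)
var = sum((s - mean) ** 2 for s in burned) / len(burned)
print(f"mean ~ {mean:.2f}, variance ~ {var:.2f}")  # should be close to 0 and 1
```

Only ratios of the target density appear, which is why MCMC works with unnormalised distributions such as e^(-βE) without ever computing Z.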

The mechanism behind double descent in ML (specifically in ridgeless least squares regression) is not just similar but _identical_ to that which in physics causes massless 4D phi^4 theory to go from being classically scale-free to picking up a scale/mass in the infrared.
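The physics side of that claim is beyond a short snippet, but the ML phenomenon itself is easy to reproduce. The sketch below assumes a synthetic setup: Gaussian features, a random linear teacher over 120 features, Gaussian label noise and 40 training points. It fits minimum-norm ("ridgeless") least squares on only the first p features. `np.linalg.lstsq` returns the minimum-norm solution when the system is underdetermined (p > n_train). Test error typically spikes near the interpolation threshold p = n_train and then falls again:

```python
import numpy as np


def ridgeless_test_error(p, n_train=40, n_test=1000, n_features=120,
                         noise=0.5, n_trials=20, seed=0):
    """Average test MSE of min-norm least squares using the first p features.
    Synthetic data (an assumption): y = X @ beta + noise, with X ~ N(0, 1)."""
    rng = np.random.default_rng(seed)
    errors = []
    for _ in range(n_trials):
        beta = rng.normal(0.0, 1.0 / np.sqrt(n_features), size=n_features)
        X_train = rng.normal(size=(n_train, n_features))
        X_test = rng.normal(size=(n_test, n_features))
        y_train = X_train @ beta + noise * rng.normal(size=n_train)
        y_test = X_test @ beta + noise * rng.normal(size=n_test)
        # The model sees only p features; the omitted ones act as extra noise.
        # lstsq gives the minimum-norm solution once p > n_train (ridgeless limit).
        w, *_ = np.linalg.lstsq(X_train[:, :p], y_train, rcond=None)
        errors.append(np.mean((X_test[:, :p] @ w - y_test) ** 2))
    return float(np.mean(errors))


for p in (10, 20, 30, 40, 50, 80, 120):
    print(p, round(ridgeless_test_error(p), 2))
```

Every call reuses the same seed, so all values of p see the same datasets and only the model size changes. At p = n_train the training matrix is square and nearly singular, and its tiny singular values blow up the variance of the fit.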

Does anyone have any random fun facts about a very niche subject? I'm bored and love learning random things.

The Rothschild family’s mansion in Vienna was seized by the Nazis in 1938 and used as Eichmann’s headquarters. After the war, the Austrian government refused to return it. They still haven't. Instead, they pressured the family into 'donating' the property. The same happened to many other Jews.

David Tong provides his beautiful, insightful, original lectures on physics free of charge. His latest gift to us is on mathematical biology. damtp.cam.ac.uk/user/tong/math…

The Unreasonable Effectiveness of Linear Algebra. If you really want to understand what’s happening in machine learning/AI, coming to grips with the basics of linear algebra is super important. I continue to be blown away by how much of ML becomes increasingly intuitive as one…

I've been working on "Magic state cultivation: growing T states as cheap as CNOT gates" all year. It's finally out: arxiv.org/abs/2409.17595 The reign of the T gate is coming to an end. It's now nearly the cost of a lattice surgery CNOT gate, and I bet there are more improvements to come.

Ten years ago, Kelly et al. showed that bigger repetition-code circuits do better. The dream was to do the same for a full quantum code. This year we finally did it: arxiv.org/abs/2408.13687 has d=5 surface codes twice as good as d=3, and d=7 twice as good again, outliving the physical qubits.
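One way to read "twice as good" is as an error-suppression factor Λ of about 2. Under that reading, each step up in code distance (d to d + 2) roughly halves the logical error per cycle ε_d. This is an interpretation of the tweet, not a figure quoted from the paper. Under the usual below-threshold scaling model, the arithmetic is:

```latex
\Lambda \equiv \frac{\varepsilon_d}{\varepsilon_{d+2}} \approx 2,
\qquad
\varepsilon_d \propto \Lambda^{-(d+1)/2}
\quad\Longrightarrow\quad
\varepsilon_7 \approx \frac{\varepsilon_5}{2} \approx \frac{\varepsilon_3}{4}.
```

Λ > 1 is what "below threshold" means: adding qubits reduces the logical error instead of increasing it.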

For anyone curious about nonlinear dynamics and chaos, including students taking a first course in the subject: My lectures are freely available here. m.youtube.com/playlist?list=…

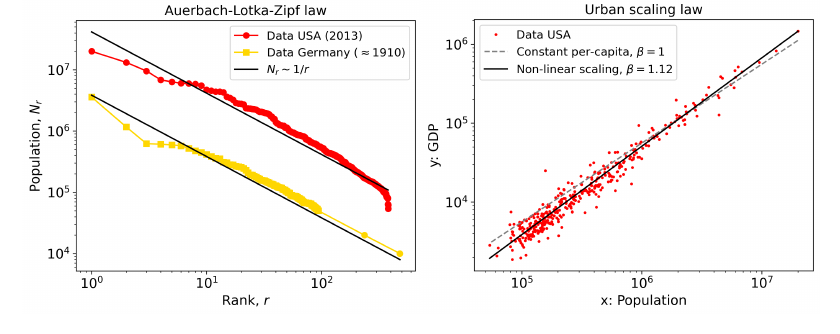

"Statistical Laws in Complex Systems", new pre-print monograph covering the history, traditional use, and modern debates in this topic. arxiv.org/abs/2407.19874 Comments and suggestions are welcome.

"The Ising model celebrates a century of interdisciplinary contributions" I studied the Ising model in my phd in statistical physics. It gave me concepts to talk with complexity folks in political science, psychology and more. Any good Ising stories? nature.com/articles/s4426…

Can we tell apart short-time & exponential-time quantum dynamics? In arxiv.org/abs/2407.07754, we found the answer to be "No" even though it seems easy. This discovery leads to new implications in quantum advantages, faster learning, hardness of recognizing phases of matter ...

The Laplacian of a graph is (up to a sign change...) a positive semi-definite operator. en.wikipedia.org/wiki/Laplacian…
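With the convention L = D − A (degree matrix minus adjacency matrix), the quadratic form is a sum of squares over edges: xᵀLx = Σ over edges (i, j) of (x_i − x_j)². It can therefore never be negative. The opposite convention, A − D, matches the sign of the continuous Laplacian ∇² and is negative semi-definite instead, which is the "sign change". Below is a small NumPy check. The example graph and test vector are made up for illustration:

```python
import numpy as np


def laplacian(n, edges):
    """Combinatorial Laplacian L = D - A of an undirected, unweighted graph."""
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    D = np.diag(A.sum(axis=1))  # degree matrix
    return D - A


edges = [(0, 1), (1, 2), (2, 0), (2, 3)]  # a triangle with one pendant vertex
L = laplacian(4, edges)

# The quadratic form equals the sum of squared differences across edges.
x = np.array([0.3, -1.2, 2.0, 0.5])
quad = x @ L @ x
edge_sum = sum((x[i] - x[j]) ** 2 for i, j in edges)
print(np.isclose(quad, edge_sum))  # True

# Equivalently, every eigenvalue is >= 0 (up to round-off). The smallest is 0,
# with the all-ones vector as eigenvector (one zero per connected component).
eigs = np.linalg.eigvalsh(L)
print(eigs)
```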

Hopfield networks are a foundational idea in both neuroscience & ML 🧠💻 The latest video introduces the world of energy-based models and Hebbian learning 📹 A first step to covering Boltzmann/Helmholtz machines, Predictive coding & Free Energy later :) youtu.be/1WPJdAW-sFo?si…

Linked video: A Brain-Inspired Algorithm For Memory (youtube.com)
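The two ideas named in the tweet, Hebbian learning and an energy function, fit in a short sketch of a classical binary Hopfield network. Patterns are stored with the Hebbian rule W = (1/N) Σ ξξᵀ, with the diagonal zeroed. They are recalled by asynchronous sign updates, which never increase the energy E(s) = −½ sᵀWs. This is not the code from the linked video. The network size, number of patterns and corruption level are arbitrary assumptions:

```python
import numpy as np


def hebbian_weights(patterns):
    """Hebbian storage: W = (1/N) * sum_mu xi^mu (xi^mu)^T, no self-coupling."""
    patterns = np.asarray(patterns, dtype=float)
    n = patterns.shape[1]
    W = patterns.T @ patterns / n
    np.fill_diagonal(W, 0.0)
    return W


def energy(W, s):
    """Hopfield energy E(s) = -1/2 s^T W s (no bias terms in this sketch)."""
    return -0.5 * s @ W @ s


def recall(W, s, n_sweeps=10, seed=0):
    """Asynchronous updates s_i <- sign(sum_j W_ij s_j) in random order.
    With symmetric W and zero diagonal, each update changes E by -ds_i * h_i <= 0."""
    rng = np.random.default_rng(seed)
    s = s.copy()
    for _ in range(n_sweeps):
        for i in rng.permutation(len(s)):
            h = W[i] @ s  # local field on neuron i
            s[i] = 1.0 if h >= 0 else -1.0
    return s


rng = np.random.default_rng(1)
N, P = 100, 3
patterns = rng.choice([-1.0, 1.0], size=(P, N))  # random +/-1 memories
W = hebbian_weights(patterns)

cue = patterns[0].copy()
flip = rng.choice(N, size=15, replace=False)
cue[flip] *= -1  # corrupt 15% of the bits
out = recall(W, cue)
overlap = out @ patterns[0] / N  # 1.0 means perfect recall
print(f"overlap with stored pattern: {overlap:.2f}")
```

With only 3 patterns in 100 neurons, the load is far below the classical capacity of roughly 0.14N patterns. A cue with 15% of its bits flipped should therefore fall back into the stored memory.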

In our new preprint, we explore how the economy can be analysed through the lens of complexity theory, using networks and simulations. researchgate.net/publication/38… #complexity #networks #simulation #economics #complexnetworks #research #datascience
