Maddy A

@its_maddy_a

Founder, CEO and Engineer @ZeroGPU_AI

My “Your Year with ChatGPT” recap distilled my 2025 into three things: • Built @ZeroGPU_AI from the ground up — APIs, SDKs, orchestration — turning everyday devices into a distributed inference grid • Went deep on small language models — benchmarking, quantization, and real…

In the era of agentic coding, what will be considered intellectual property (IP) for a company: their codebase or the prompts they provide to build the code?

Advice for AI engineers 💡 A small Visual Language Model fine-tuned on your custom dataset is as accurate as GPT-5... ... and costs 50 times less. For example, LFM2-VL-3B by @liquidai

Every other advertisement I encounter now is from @claudeai. This is happening across multiple platforms! I haven’t seen any other AI company advertisements. I’m not sure how much money has been invested in @claudeai’s marketing, but it’s clearly working. I’ll give it a try…

Software Engineering Expectations for 2026 - The majority of your code should be written by AI now - Cursor/Codex/Claude Code/Gemini/etc - You should try all the tooling and switch between them, as each one gets an edge over the others depending on the release cycle. - You…

Most teams still “send everything to GPT” for safety and pray the bill behaves. New post on building config-driven safety pipelines with edge SLMs + a cloud policy engine (and where ZeroGPU plugs in). If you care about content safety at scale, start here 👇…

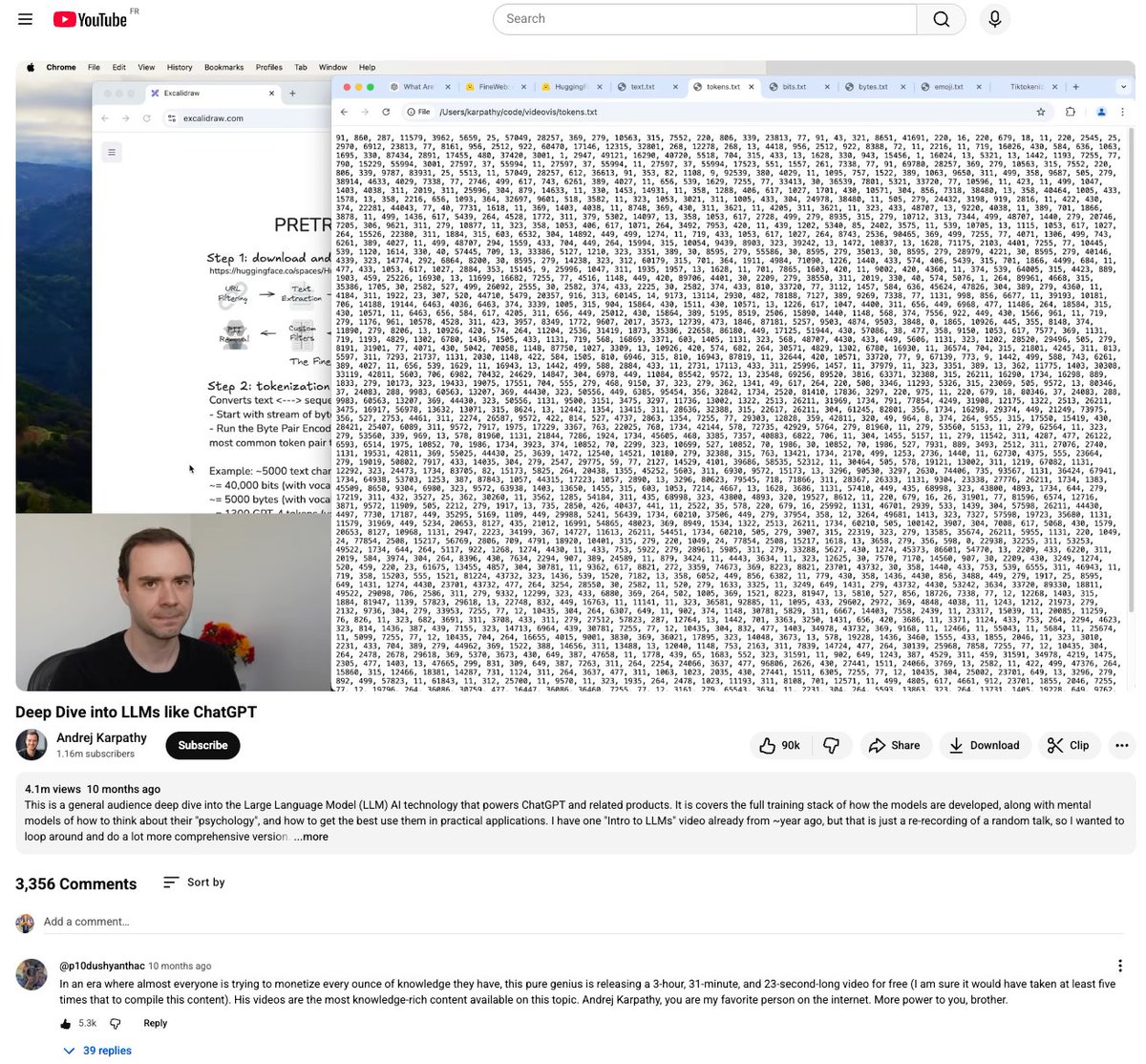

Still can’t believe @karpathy released this 3.5-hour deep dive on how ChatGPT actually works for free. If there’s one AI video to watch in 2025, this is the one.

You can watch it here: youtube.com/watch?v=7xTGNN…

Deep Dive into LLMs like ChatGPT

Looks cool! But printing costs $$$

Most ad targeting workflows don’t need an LLM call; IAB taxonomy classification is a pure inference problem. We are building a dedicated /classify endpoint for this use case at @ZeroGPU_AI to label content into IAB categories fast at the edge and only escalate to bigger models…

This insightful article by @AndrewYNg is a helpful reminder that progress in AI today comes less from simply making models bigger and more from how thoughtfully we train them and choose data. It explains why recent gains are often slow and deliberate, and why current models still…

Building in AI feels like flying the plane while you’re still assembling it. Anyone else?

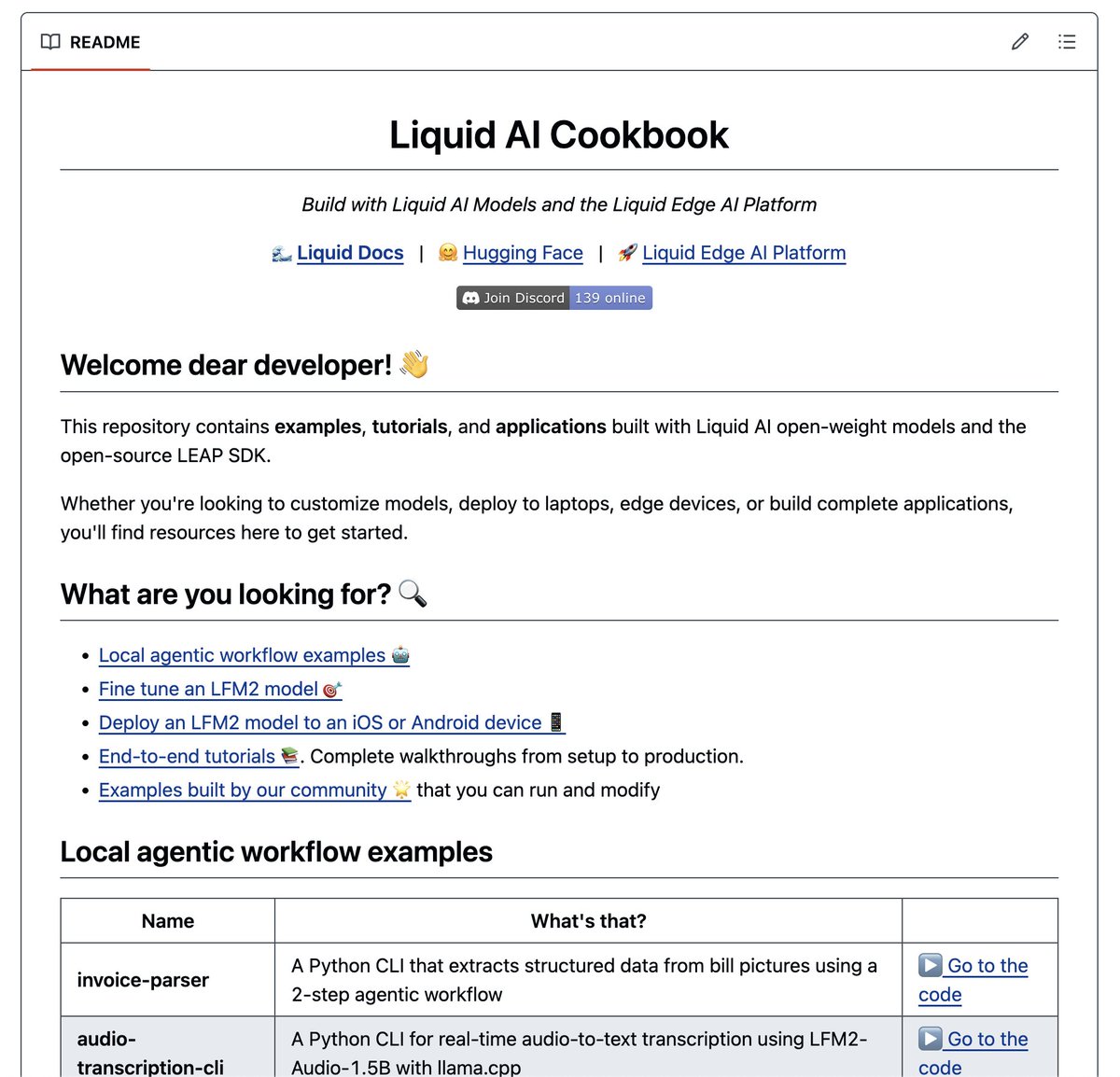

Hands-on tutorials on fine-tuning and deployment of Small Language Models. Enjoy ↓ github.com/Liquid4All/coo…

If your app calls a giant LLM for every small inference task (routing, labeling, intent, extraction) and you’re waiting seconds… that’s an architecture smell. SLM-first with edge inference for most of those calls is your answer. Only escalate to big models for reasoning tasks.…
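
The SLM-first idea in the post above could be sketched roughly as follows. This is a minimal illustration, not ZeroGPU's API: the `Router` class, the `small_model` and `large_model` callables, and the `SMALL_TASKS` set are all hypothetical names introduced here, and the stand-in lambdas only tag their input so the routing decision is visible.

```python
from dataclasses import dataclass
from typing import Callable

# Task types the post lists as small inference tasks.
SMALL_TASKS = {"routing", "labeling", "intent", "extraction"}


@dataclass
class Router:
    """Hypothetical two-tier router: edge SLM first, big model only when needed."""

    small_model: Callable[[str], str]  # assumed stand-in for an edge SLM client
    large_model: Callable[[str], str]  # assumed stand-in for a cloud LLM client

    def pick(self, task_type: str) -> str:
        """Return which tier should serve this task type."""
        return "small" if task_type in SMALL_TASKS else "large"

    def run(self, task_type: str, text: str) -> str:
        """Dispatch the request to the tier chosen by pick()."""
        if self.pick(task_type) == "small":
            return self.small_model(text)
        return self.large_model(text)


if __name__ == "__main__":
    router = Router(
        small_model=lambda text: f"[slm] {text}",
        large_model=lambda text: f"[llm] {text}",
    )
    print(router.run("intent", "cancel my subscription"))
    print(router.run("reasoning", "plan a three-step migration"))
```

Keeping the tier decision in `pick` means the set of small tasks can change without touching the call sites; a real router would likely also consider confidence or latency budgets, which this sketch leaves out.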

Marathon training ➜ kid’s therapy ➜ grinding @ZeroGPU_AI ➜ school volunteering ➜ inbox zero ➜ family time. If life had XP points, I leveled up today. 🎯

NVIDIA’s SLM Agents paper is a clean vision for real AI: small models, modular tools, structured reasoning, real-world autonomy. I wrote a short breakdown (and where ZeroGPU fits in): tinyurl.com/2873ns2m ZeroGPU is bringing this to the edge. White paper coming soon!

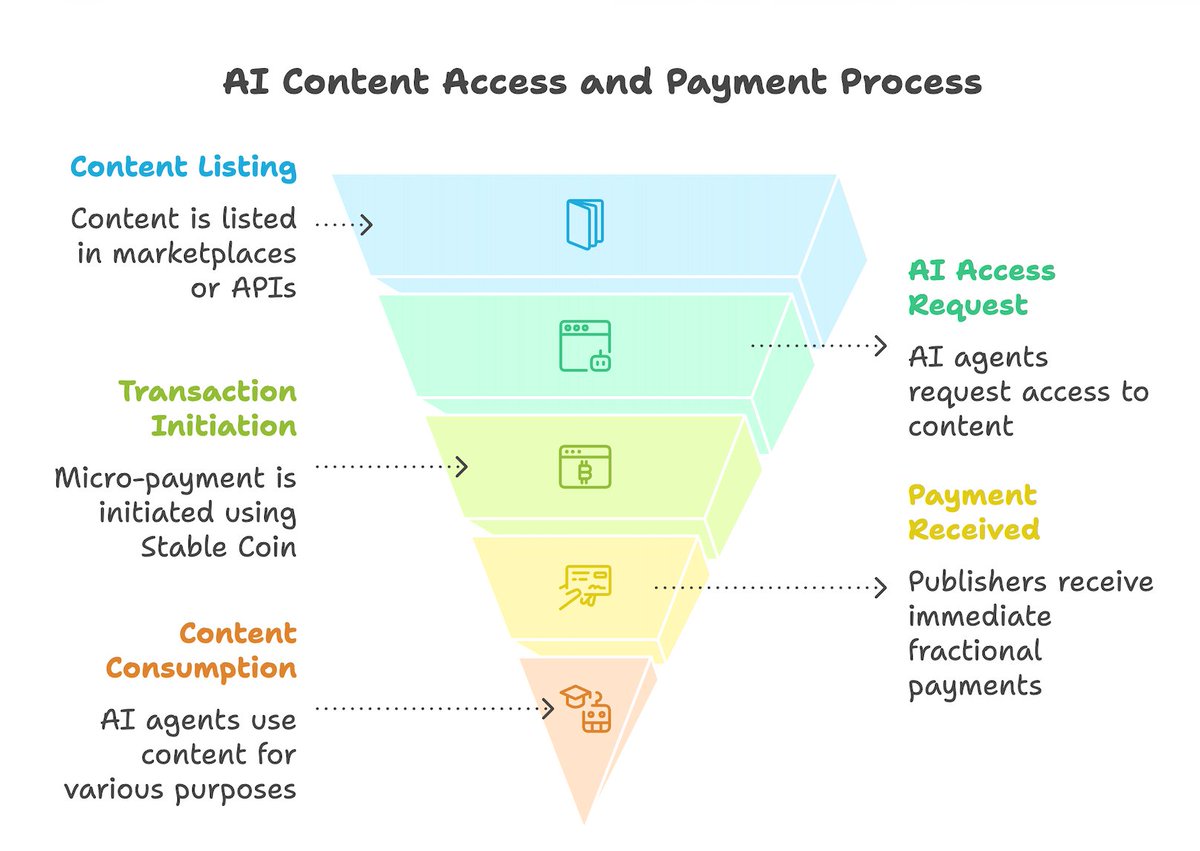

LLMs scrape content at scale, but creators get zero traffic or revenue. I wrote about how Cloudflare's NetDollar + blockchain can create a fair creator economy—where every AI scrape triggers instant payment. tinyurl.com/4w9ec4ps @CloudflareDev @DappierAI #ai #blockchain
